Module 13 Data Science Basics

In October 2012, the Harvard Business Review published an article calling data science the “sexiest job of the 21st century”, and comparing data scientists with the ubiquitous “quants” of the ’90s: a data scientist is a “hybrid of data hacker, analyst, communicator, and trusted adviser” [12].

Would-be data scientists are usually introduced to the field via machine learning algorithms and applications. While we will discuss these topics in later modules, we would like to start by with some of important non-technical (and semi-technical) notions that are often unfortunately swept aside in favour of diving head first into murky analysis waters.

In this section, we focus on some of the fundamental ideas and concepts that underlie and drive forward the discipline of data science, as well as the contexts in which these concepts are typically applied. We also highlight issues related to the ethics of practical data science. We conclude the section by getting a bit more concrete and considering the analytical workflow of a typical data science project, the types of roles and responsibilities that generally arise during data science projects and some basics of how to think about data, as a prelude to more technical topics.

13.1 Introduction

Let’s start by talking about data.

13.1.1 What Is Data?

It is surprisingly difficult to give a clear-cut definition of data – we cannot even seem to agree on whether it should be used in the singluar or the plural:

“the data is ...” vs. “the data are ...”

From a strictly linguistic point of view, a datum (borrowed from Latin) is “a piece of information;” data, then, should mean “pieces of information.” We can also think of it as a collection of “pieces of information”, and we would then use data to represent the whole (being potentially greater than the sum of its parts) or simply the idealized concept.

When it comes to actual data analysis, however, is the distinction really that important? Is it even clear what data is, from the definition above, and where it comes from? Is the following data?

To paraphrase U.S. Justice Potter Stewart, while it may be hard to define what data is, “we know it when we see it.” This position may strike some of you as unsatisfying; to overcome this (sensible) objection, we will think of data simply as a collection of facts about objects and their attributes.

For instance, consider the apple and the sandwich below:

Figure 13.1: An apple and a sandwich

Let us say that they have the following attributes:

Object: apple

Shape: spherical

Colour: red

Function: food

Location: fridge

Owner: Jen

Object: sandwich

Shape: rectangle

Colour: brown

Function: food

Location: office

Owner: Pat

As long as we remember that a person or an object is not simply the sum of its attributes, this rough definition should not be too problematic. Note, however, that there remains some ambiguity when it comes to measuring (and recording) the attributes.

We dare say that no one has ever beheld an apple quite like the one shown above: for starters, it is a 2-dimensional representation of a 3-dimensional object. Additionally, while the overall shape of the sandwich is vaguely rectangular (as seen from above, say), it is not an exact rectangle. While no one would seriously dispute the shape attribute of the sandwich being recorded as “rectangle”, a measurement error has occurred.

For most analytical purposes, this error may not be significant, but it is impossible to dismiss it as such for all tasks.

More problematic might be the fact that the apple’s shape attribute is given in terms of a volume, whereas the sandwich’s is recorded as an a area; the measurement types are incompatible. Similar remarks can be made about all the attributes – the function of an apple may be “food” from Jen’s perspective, but from the point of view of an apple tree, that is emphatically not the case; the sandwich is definitely not uniformly “brown,” and so on.

Furthermore, there are a number of potential attributes that are not even mentioned: size, weight, time, etc. Measurement errors and incomplete lists are always part of the picture, but most people would recognize that the collection of attributes does provide a reasonable description of the objects. This is the pragmatic definition of data that we will use throughout.

13.1.2 From Objects and Attributes to Datasets

Raw data may exist in any format; we will reserve the term dataset to represent a collection fo data that could conceivably be fed into algorithms for analytical purposes.

Often, these appear in a table format, with rows and columns;19 attributes are the fields (or columns) in such a dataset; objects are instances (or rows).

Objects are then described by their feature vector – the collection of attributes associated with value(s) of interest. The feature vector for a given observation is also know as the observation’s signature. For instance, the dataset of physical objects could contain the following items:

| ID | shape | colour | function | location | owner |

|---|---|---|---|---|---|

| 1 | spherical | red | food | fridge | Jen |

| 2 | rectangle | brown | food | office | Pat |

| 3 | round | white | tell time | lounge | school |

| … | … | … | … | … | … |

We will revisit thesen notions in Structuring and Organizing Data.

13.1.3 Data in the News

We collected a sample of headlines and article titles showcasing the growing role of data science (DS), machine learning (ML), and artificial/augmented intelligence (AI) in different domains of society.

While these demonstrate some of the functionality/capabilities of DS/ML/AI technologies, it is important to remain aware that new technologies are always accompanied by emerging (and not always positive) social consequences.

“Robots are better than doctors at diagnosing some cancers, major study finds” [13]

“Deep-learning-assisted diagnosis for knee magnetic resonance imaging: Development and retrospective validation of MRNet” [14]

“Google AI claims 99% accuracy in metastatic breast cancer detection” [15]

“Data scientists find connections between birth month and health” [16]

“Scientists using GPS tracking on endangered Dhole wild dogs” [17]

“These AI-invented paint color names are so bad they’re good” [18]

“We tried teaching an AI to write Christmas movie plots. Hilarity ensued. Eventually.” [19]

“Math model determines who wrote Beatles’ "In My Life": Lennon or McCartney?” [20]

“Scientists use Instagram data to forecast top models at New York Fashion Week” [21]

“How big data will solve your email problem” [22]

“Artificial intelligence better than physicists at designing quantum science experiments” [23]

“This researcher studied 400,000 knitters and discovered what turns a hobby into a business” [24]

“Wait, have we really wiped out 60% of animals?” [25]

“Amazon scraps secret AI recruiting tool that showed bias against women” [26]

“Facebook documents seized by MPs investigating privacy breach” [27]

“Firm led by Google veterans uses A.I. to ‘nudge’ workers toward happiness” [28]

“At Netflix, who wins when it’s Hollywood vs.the algorithm?” [29]

“AlphaGo vanquishes world’s top Go player, marking A.I.’s superiority over human mind” [30]

“An AI-written novella almost won a literary prize” [31]

“Elon Musk: Artificial intelligence may spark World War III” [32]

“A.I. hype has peaked so what’s next?” [33]

Opinions on the topic are varied – to some, DS/ML/AI provide examples of brilliant successes, while to others it is the dangerous failures that are at the forefront.

What do you think?

13.1.4 The Analog/Digital Data Dichotomy

Humans have been collecting data for a long time. In the award-winning Against the Grain: A Deep History of the Earliest States, J.C.Scott argues that data collection was a major enabler of the modern nation-state (he also argues that this was not necessarily beneficial to humanity at large, but this is another matter altogether) [34].

For most of the history of data collection, humans were living in what might best be called the analogue world – a world where our understanding was grounded in a continuous experience of physical reality.

Nonetheless, even in the absence of computers, our data collection activities were, arguably, the first steps taken towards a different strategy for understanding and interacting with the world. Data, by its very nature, leads us to conceptualize the world in a way that is, in some sense, more discrete than continuous.

By translating our experiences and observations into numbers and categories, we re-conceptualize the world into one with sharper and more definable boundaries than our raw experience might otherwise suggest. Fast-forward to the modern world and the culmination of this conceptual discretization strategy is clear to see in our adoption of the digitalcomputer, which represents everything as a series of 1s and 0s.20

Somewhat surprisingly, this very minimalist representational strategy has been wildly successful at representing our physical world, arguably beyond our most ambitious dreams, and we find ourselves now at a point where what we might call the digital world is taking on a reality as pervasive and important as the physical one.

Clearly, this digital world is built on top of the physical world, but very importantly, the two do not operate under the same set of rules:

in the physical world, the default is to forget; in the digital world, the default is to remember;

in the physical world, the default is private; in the digital world, the default is public;

in the physical world, copying is hard; in the digital world, copying is easy.

As a result of these different rules of operation, the digital is making things that were once hidden, visible; once veiled, transparent. Considering data science in light of this new digital world, we might suggest that data scientists are, in essence, scientists of the digital, in much the same way that regular scientists are scientists of the physical: data scientists seek to discover the fundamental principles of data and understand the ways in which these fundamental principles manifest themselves in different digital phenomena.

Ultimately, however, data and the digital world are tied to the physical world. Consequently, what is done with data has repercussions in the physical world; and it is crucial for analysts and consultants to have a solid grasp of the fundamentals and context of data work before leaping into the tools and techniques that drive it forward.

13.2 Conceptual Frameworks for Data Work

In simple terms, we use data to represent the world. But this is not the only strategy at our disposal: we might also (and in combination) describe the world using language, or represent it by building physical models.

The common thread is the more basic concept of representation – the idea that one object can stand in for another, and be used in its stead in order to indirectly engage with the object being represented. Humans are representational animals par excellence; our use of representations becomes almost transparent to us, at times.

On some level, we do understand that “the map is not the territory”, but we do not have to make much of an effort to use the map to navigate the territory. The transition from the representation to the represented is typically quite seamless. This is arguably one of humanity’s major strengths, but in the world of data science it can also act as an Achilles’ heel, preventing analysts from working successfully with clients and project partners, and from appropriately transferring analytical results to the real world contexts that could benefit from them.

The best protection against these potential threats is the existence of a well thought out and explicitly described conceptual framework, by which we mean, in its broadest sense:

a specification of which parts of the world are being represented;

how they are represented;

the nature of the relationship between the represented and the representing, and

appropriate and rigorous strategies for applying the results of the analysis that is carried out in this representational framework.

It would be possible to construct such a specification from scratch, in a piecemeal fashion, for each new project, but it is worth noting that there are some overarching modeling frameworks that are broadly applicable to many different phenomena, which can then be moulded to fit these more specific instances.

13.2.1 Three Modeling Strategies

We suggest that there are three main not mutually exclusive modeling strategies that can be used to guide the specification of a phenomenon or domain:

mathematical modeling;

computer modeling, and

systems modeling.

We start with a description of the latter as it requires, in its simplest form, no special knowledge of techniques/concepts from mathematics or computer science.

13.2.1.1 Systems Modeling

General Systems Theory was initially put forward by L.von Bertalanffy, a biologist, who felt that it should be possible to describe many disparate natural phenomena using a common conceptual framework – one which would be capable of describing man disparate phenomena, all as systems of interacting objects.

Although Bertalanffy himself presented abstracted, mathematical, descriptions of his general systems concepts, his broad strategy is relatively easily translated into a purely conceptual framework.

Within this framework, when presented with a novel domain or situation, we ask ourselves the following questions:

which objects seem most relevant or involved in the system behaviours in which we are most interested?

what are the properties of these objects?

what are the behaviours (or actions) of these objects?

what are the relationships between these objects?

how do the relationships between objects influence their properties and behaviours?

As we find the answers to these questions about the system of interest, we start to develop a sense that we understand the system and its relevant behaviours.

By making this knowledge explicit, e.g.via diagrams and descriptions, and by sharing it amongst those with whom we are working, we can further develop a consistent, shared understanding of the system with which we are engaged. If this activity is carried out prior to data collection, it can ensure that the right data is collected.

If this activity is carried out after data collection, it can ensure that the process of interpreting what the data represents and how the latter should be used going forward is on solid footing.

13.2.1.2 Mathematical and Computer Modeling

The other modeling approaches arguably come with their own general frameworks for interpreting and representing real-world phenomena and situations, separate from, but still compatible with, this systems perspective.

These disciplines have developed their own mathematical/digital (logical) worlds that are distinct from the tangible, physical world studied by chemists, biologists, and so on; these frameworks can then be used to describe real-world phenomena by drawing parallels between the properties of objects in these different worlds and reasoning via these parallels.

Why these constructed worlds and the conceptual frameworks they provide are so effective at representing and describing the actual world, and thus allowing us to understand and manipulate it, is more of a philosophical question than a pragmatic one.

We will only note that they are highly effective at doing so, which provides the impetus and motivation to learn more about how these worlds operate, and how, in turn, they can provide data scientists with a means to engage with domains and systems through a powerful, rigorous and shared conceptual framework.

13.2.2 Information Gathering

The importance of achieving contextual understanding of a dataset cannot be over-emphasized. In the abstract we have suggested that this context can be gained by using conceptual frameworks. But more concretely, how does this understanding come about?

It can be reached through:

field trips;

interviews with subject matter experts (SMEs);

readings/viewings;

data exploration (even just trying to obtain or gain access to the data can prove a major pain),

etc.

In general, clients or stakeholders are not a uniform entity – it is even conceivable that client data specialists and SMEs will resent the involvement of analysts (external and/or internal).

Thankfully, this stage of the process provides analysts and consultants the opportunity to show that every one is pulling in the same direction, by

asking meaningful questions;

taking an interest in the SMEs’/clients’ experiences, and

acknowledging everyone’s ability to contribute.

A little tact goes a long way when it comes to information gathering.

13.2.2.1 Thinking in Systems Terms

We have already noted that a system is made up of objects with properties that potentially change over time. Within the system we perceive actions and evolving properties, leading us to think in terms of processes.

To put it another way, in order to understand how various aspects of the world interact with one another, we need to carve out chunks corresponding to the aspects and define their boundaries. Working with other intelligences requires this type of shared understanding of what is being studied. Objects themselves have various properties.

Natural processes generate (or destroy) objects, and may change the properties of these objects over time. We observe, quantify, and record particular values of these properties at particular points in time.

This process generates data points in our attempt to capture the underlying reality to some acceptable degree of accuracy and error, but it remains crucial for data analysts and data scientists to remember that even the best system model only ever provides an approximation of the situation under analysis; with some luck, experience, and foresight, these approximations might turn out to be valid.

13.2.2.2 Identifying Gaps in Knowledge

A gap in knowledge is identified when we realize that what we thought we knew about a system proves incomplete (or blatantly false).

This can arise as the result of a certain naı̈veté vis-à-vis the situation being modeled, but it can also be emblematic of the nature of the project under consideration: with too many moving parts and grandiose objectives, there cannot help but be knowledge gaps.21

Knowledge gaps might occur repeatedly, at any moment in the process:

data cleaning;

data consolidation;

data analysis;

even during communication of the results (!).

When faced with such a gap, the best approach is to be flexible: go back, ask questions, and modify the system representation as often as is necessary. For obvious reasons, it is preferable to catch these gaps early on in the process.

13.2.2.3 Conceptual Models

Consider the following situation: you are away on business and you forgot to hand in a very important (and urgently required) architectural drawing to your supervisor before leaving. Your office will send a gopher to pick it up in your living space. How would you explain to them, by phone, how to find the document?

If the gopher has previously been in your living space, if their living space is comparable to yours, or if your spouse is at home, the process may be able to be sped up considerably, but with somebody for whom the space is new (or someone with a visual impairment, say), it is easy to see how things could get complicated.

But time is of the essence – you and the gopher need to get the job done correctly as quickly as possible. What is your strategy?

Conceptual models are built using methodical investigation tools:

diagrams;

structured interviews;

structured descriptions,

etc.

Data analysts and data scientists should beware implicit conceptual models – they go hand-in-hand with knowledge gaps.

In our opinion, it is preferable to err on the side of “too much conceptual modeling” than the alternative (although, at some point we have to remember that every modeling excercise is wrong22 and that there is nothing wrong with building better models in an iterative manner, over the bones of previously-discarded simpler models).

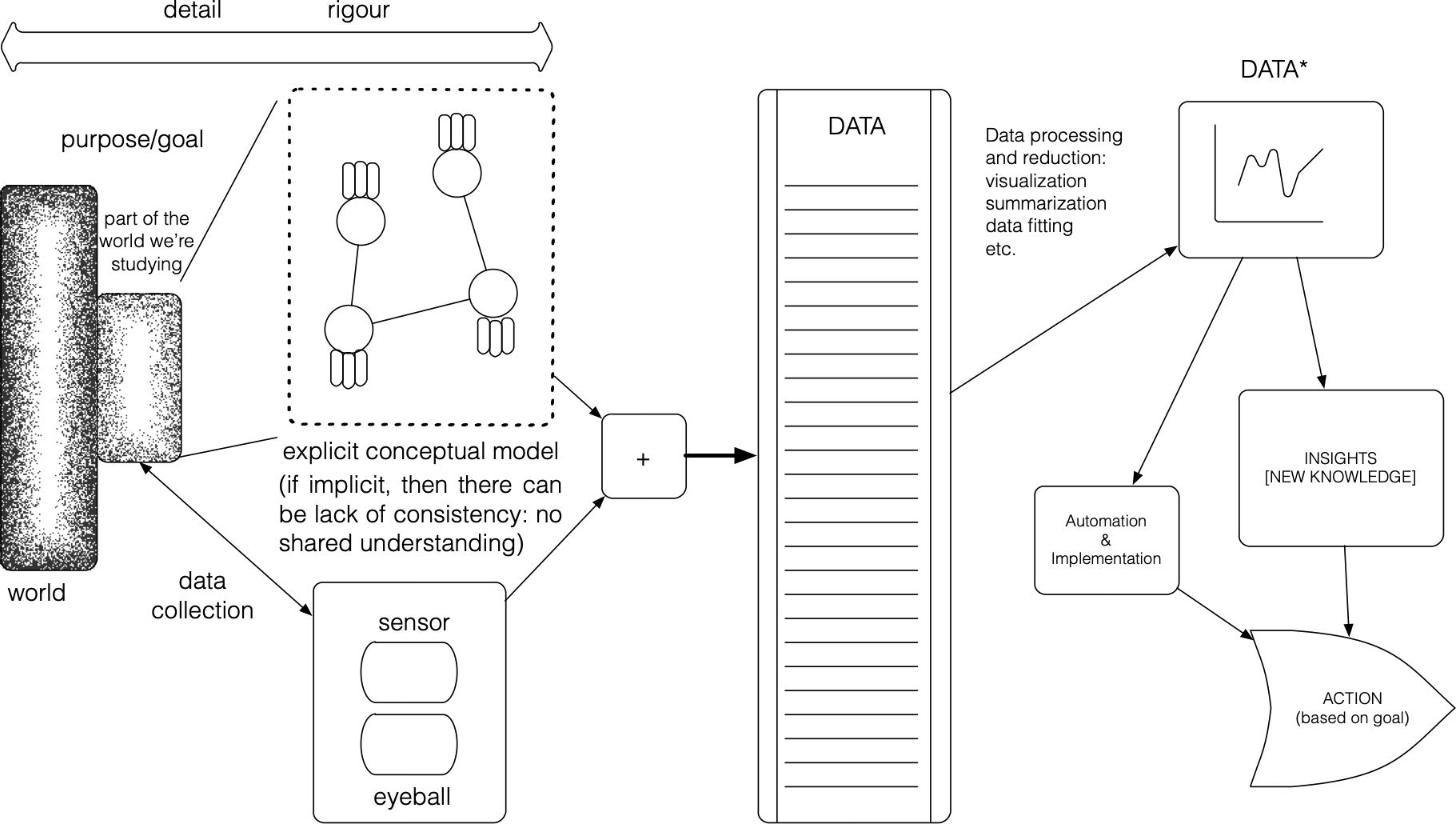

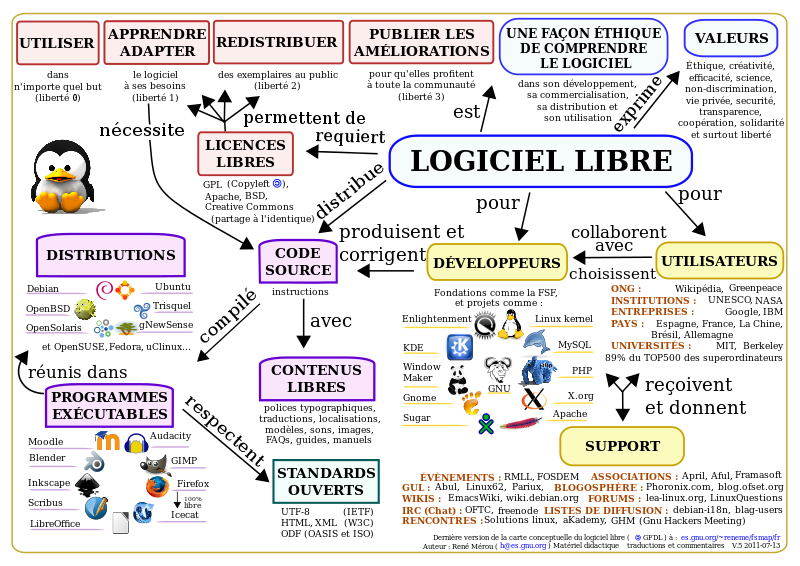

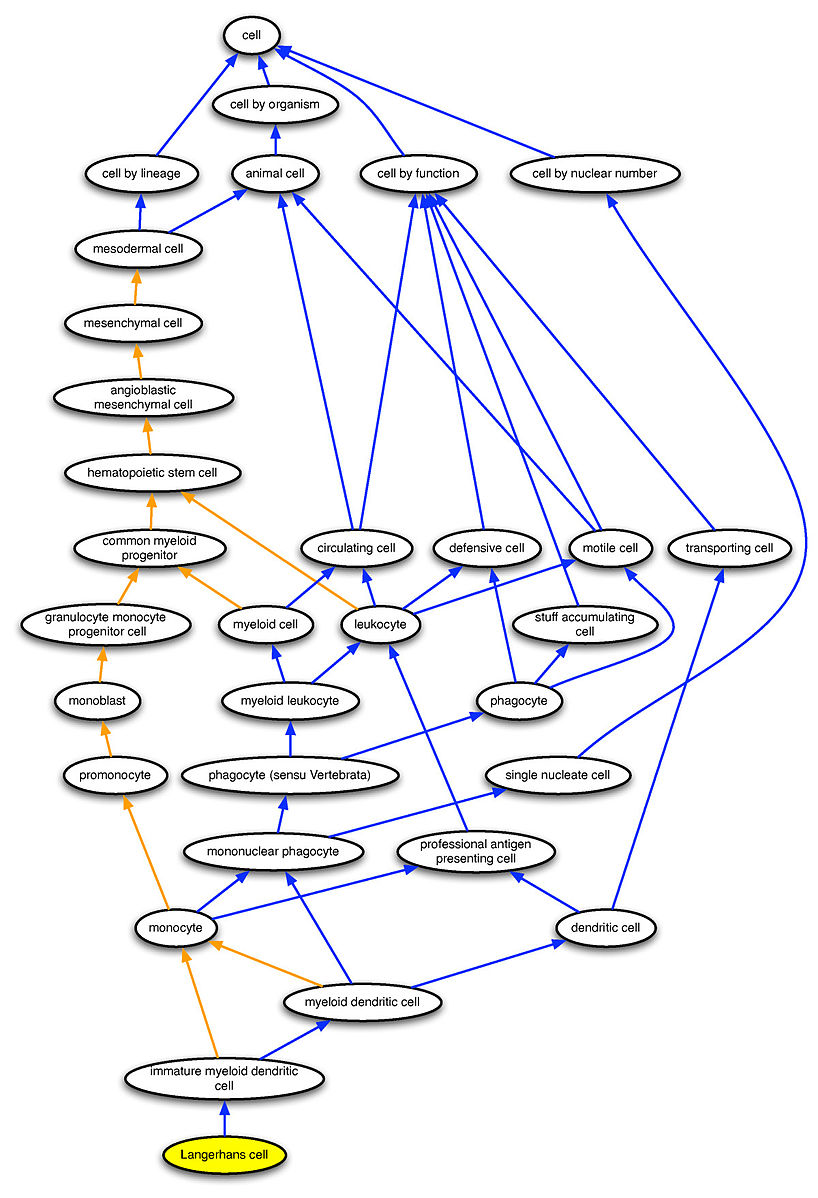

Roughly speaking, a conceptual model is a model that is not implemented as a scale-model or computer code, but one which exists only conceptually, often in the form of a diagram or verbal description of a system – boxes and arrows, mind maps, lists, definitions (see Figures 13.2 and 13.3.

Figure 13.2: A schematic diagram of systems thinking as it applies to a general problem (J. Schellinck).

Conceptual models do not necessarily attempt to capture specific behaviours, but they emphasize the possible states of the system: the focus is on object types, not on specific instances, with abstraction as the ultimate objective.

Figure 13.3: A conceptual model of the ‘free software’ system (in French) [35].

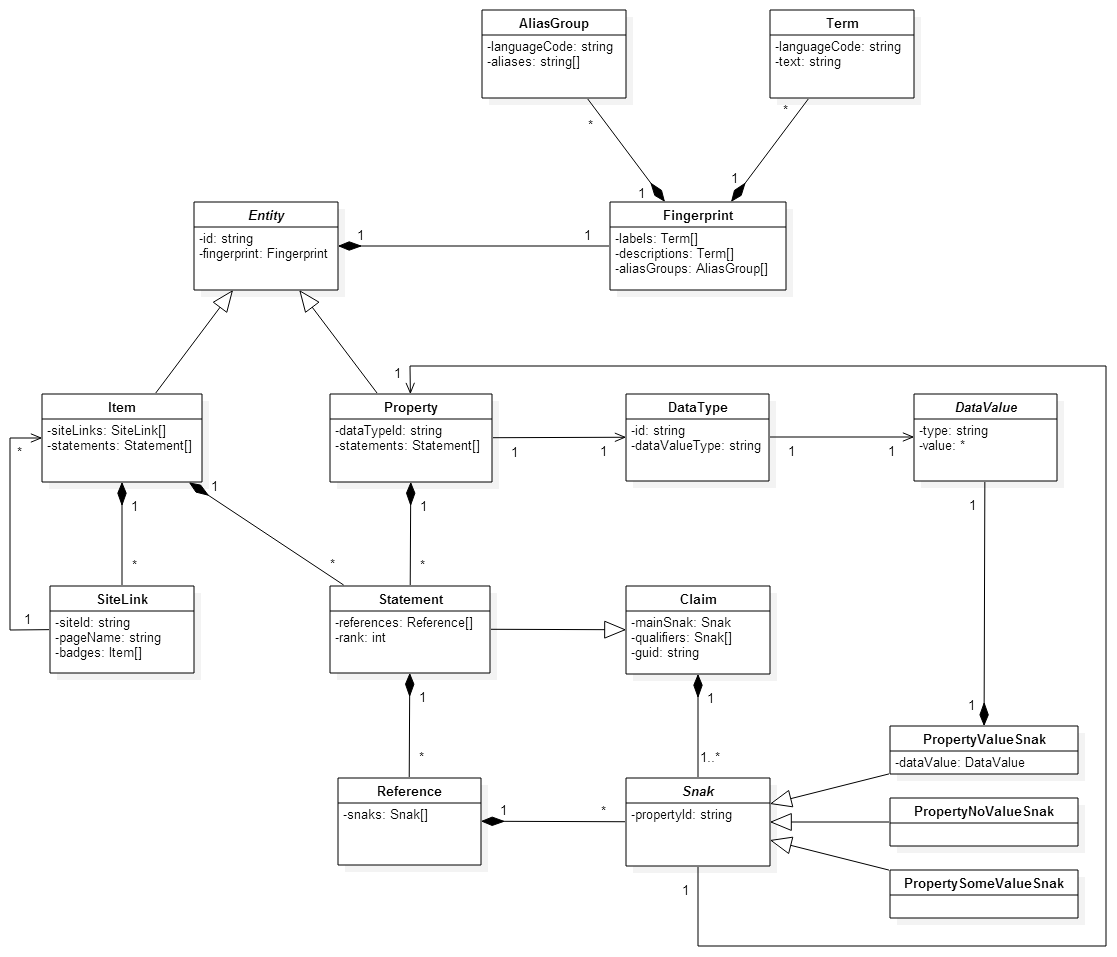

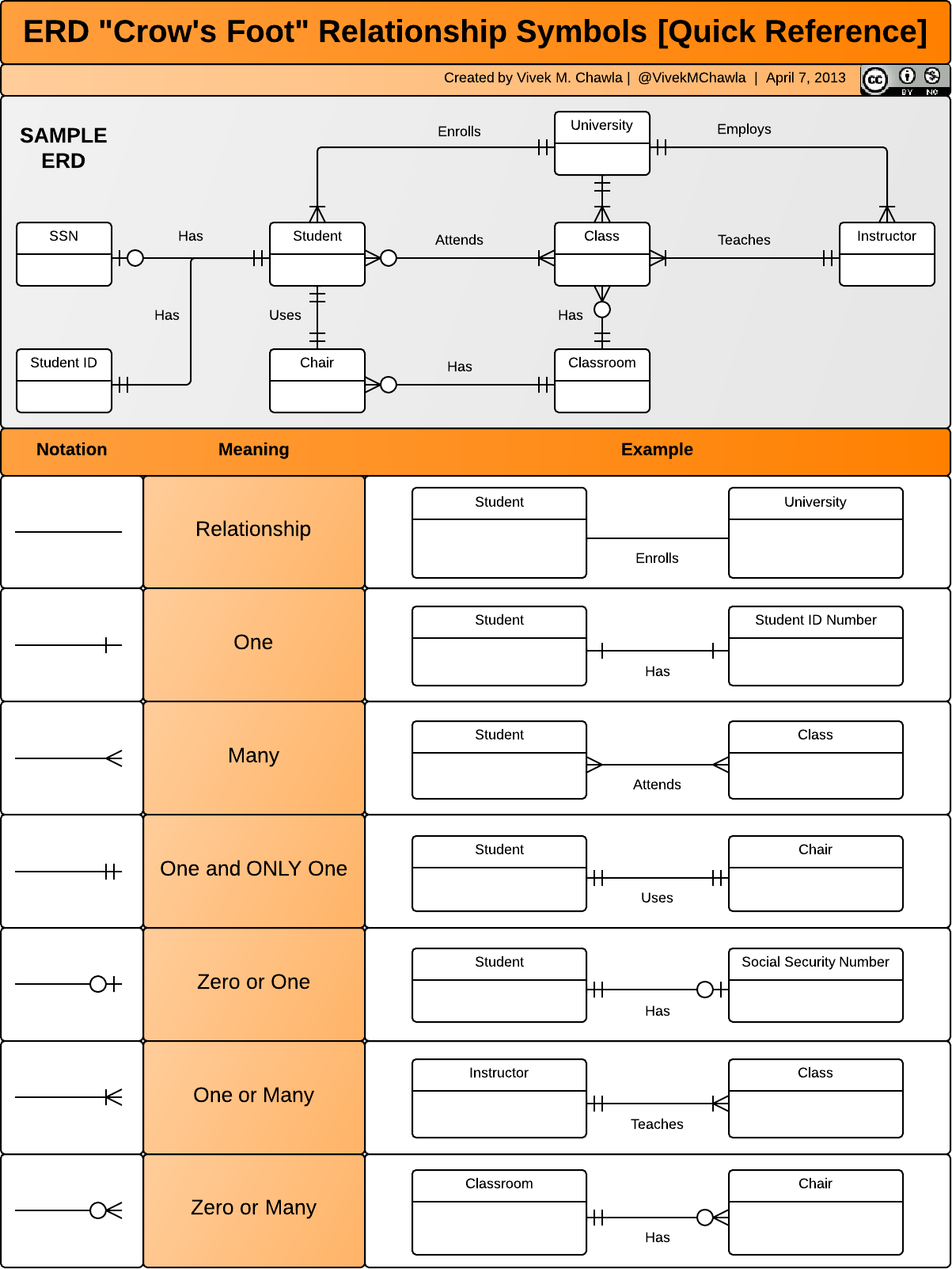

Conceptual modeling is not an exact science – it is more about making internal conceptual models explicit and tangible, and providing data analysis teams with the opportunity to examine and explore their ideas and assumptions. Attempts to formalize the concept include (see Figure 13.4):

Universal Modeling Language (UML);

Entity Relationship Models (ER), generally connected to relational databases.

.png)

Figure 13.4: Examples of UML diagram (Wikibase Data Model, on the left [36]) and ER conceptual map (on the right [37]).

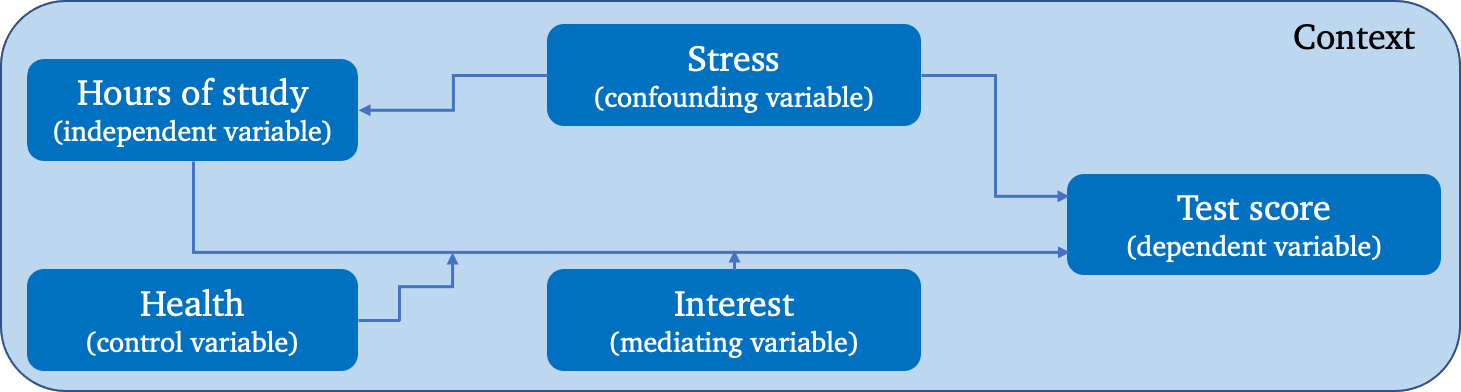

In practice, we must first select a system for the task at hand, then generate a conceptual model that encompasses:

relevant and key objects (abstract or concrete);

properties of these objects, and their values;

relationships between objects (part-whole, is-a, object-specific, one-to-many), and

relationships between properties across instances of an object type.

A simplistic example describing a supposed relationship between a presumed cause (hours of study) and a presumed effect (test score) is shown below:

Figure 13.5: A simple conceptual model.

13.2.2.4 Relating the Data to the System

From a pragmatic perspective, stakeholders and analysts alike need to know if the data which has been collected and analyzed will be useful to understand the system.

This question can best be answered if we understand:

how the data is collected;

the approximate nature of both data and system, and

what the data represents (observations and features).

Is the combination of system and data sufficient to understand the aspects of the world under consideration? Once again, this is difficult to answer in practice.

Contextual knowledge can help, but if the data, the system, and the world are out of alignment, any data insight drawn from mathematical, ontological, programmatical, or data models of the situation might ultimately prove useless.

13.2.3 Cognitive Biases

Adding to the challenge of building good conceptual models and using these to interpret the data is the fact that we are all vulnerable to a vast array of cognitive biases, which influence both how we construct our models and how we look for patterns in the data.

These biases are difficult to detect in the spur of the moment, but being aware of them, making a conscious effort to identify them, and setting up a clear and pre-defined set of thresholds and strategies for analysis will help reduce their negative impact. Here is a sample of such biases (taken from [38], [39]).

- Anchoring bias

causes us to rely too heavily on the first piece of information we are given about a topic; in a salary negotiation, for instance, whoever makes the first offer establishes a range of reasonable possibilities in both parties’ minds.

- Availability heuristic

describes our tendency to use information that comes to mind quickly and easily when making decisions about the future; someone might argue that climate change is a hoax because the weather in their neck of the woods has not (yet!) changed.

- Bandwagon Effect

refers to our habit of adopting certain behaviours or beliefs because many others do the same; if all analyses conducted until now have shown no association between factors and , we might forego testing for the association in a new dataset.

- Choice-supporting bias

causes us to view our actions in a positive light, even if they are flawed; we are more likely to sweep anomalous or odd results under the carpet when they arise from our own analyses.

- Clustering illusion

refers to our tendency to see patterns in random events; if a die has rolled five 3’s in a row, we might conclude that the next throw is more (or less) likely to come up a 3 (gambling fallacy).

- Confirmation bias

describes our tendency to notice, focus on, and give greater credence to evidence that fits with our existing beliefs; gaffes made by politicians you oppose reinforces your dislike.

- Conservation bias

occurs when we favour prior evidence over new information; it might be difficult to accept that there is an association between factors and if none had been found in the past.

- Ostrich effect

describes how people often avoid negative information, including feedback that could help them monitor their goal progress; a professor might chose to not consult their teaching evaluations, for whatever reason.

- Outcome bias

refers to our tendency to judge a decision on the outcome, rather than on why it was made; the fact that analysts gave Clinton an 80% chance of winning the 2016 U.S.Presidential Election does not mean that the forecasts were wrong.

- Overconfidence

causes us to take greater risks in our daily lives; experts are particularly prone to this, as they are more convinced that they are right.

- Pro-innovation bias

occurs when proponents of a technology overvalue its usefulness and undervalue its limitations; in the end, Big Data is not going to solve all of our problems.

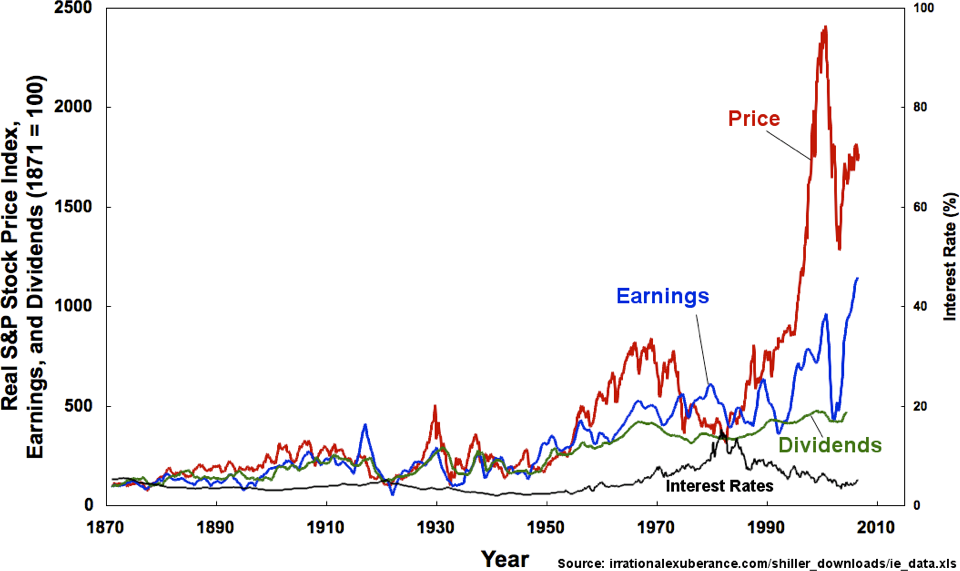

- Recency bias

occurs when we favour new information over prior evidence; investors tend to view today’s market as the "forever’ market and make poor decisions as a result.

- Salience Bias

describes our tendency to focus on items or information that are more noteworthy while ignoring those that do not grab our attention; you might be more worried about dying in a plane crash than in a car crash, even though the latter occurs more frequently than the former.

- Survivorship Bias

is a cognitive shortcut that occurs when a visible successful subgroup is mistaken as an entire group, due to the failure subgroup not being visible; when trying to get the full data picture, it helps to know what observations did not make it into the dataset.

- Zero-Risk Bias

relates to our preference for absolute certainty; we tend to opt for situations where we can completely eliminate risk, seeking solace in the figure of 0%, over alternatives that may actually offer greater risk reduction.

Other biases impact our ability to make informed decisions:

base rate fallacy, bounded rationality, category size bias, commitment bias, Dunning-Kruger effect, framing effect, hot-hand fallacy, IKEA effect, illusion of explanatory depth, illusion of validity, illusory correlations, look elsewhere effect, optimism effect, planning fallacy, representative heuristic, response bias, selective perception, stereotyping, etc. [38], [39].

13.3 Ethics in the Data Science Context

A lapse in ethics can be a conscious choice… but it can also be negligence. R. Schutt, C. O’Neill [40]

In most empirical disciplines, ethics are brought up fairly early in the educational process and may end up playing a crucial role in researchers’ activities. At Memorial University of Newfoundland, for instance, “proposals for research in the social sciences, humanities, sciences, and engineering, including some health-related research in these areas,” must receive approval from specific .

This could, among other cases, apply to research and analysis involving [41]:

living human subjects;

human remains, cadavers, tissues, biological fluids, embryos or foetuses;

a living individual in the public arena if s/he is to be interviewed and/or private papers accessed;

secondary use of data – health records, employee records, student records, computer listings, banked tissue – if any form of identifier is involved and/or if private information pertaining to individuals is involved, and

quality assurance studies and program evaluations which address a research question.

In our experience, data scientists and data analysts who come to the field by way of mathematics, statistics, computer science, economics, or engineering, however, are not as likely to have encountered ethical research boards or to have had formal ethics training.23 Furthermore, discussions on ethical matters are often tabled, perhaps understandably, in favour of pressing technical or administrative considerations (such as algorithm selection, data cleaning strategies, contractual issues, etc.) when faced with hard deadlines.

The problem, of course, is that the current deadline is eventually replaced by another deadline, and then by a new deadline, with the end result that the conversation may never take place. It is to address this all-too-common scenario that we take the time to discuss ethics in the data science context; more information is available in [42].

13.3.1 The Need for Ethics

When large scale data collection first became possible, there was to some extent a ‘Wild West’ mentality to data collection and use. To borrow from the old English law principle, whatever was not prohibited (from a technological perspective) was allowed.

Now, however, professional codes of conduct are being devised for data scientists [43]–[45], outlining responsible ways to practice data science – ways that are legitimate rather than fraudulent, and ethical rather than unethical.24 Although this shifts some added responsibility onto data scientists, it also provides them with protection from clients or employers who would hire them to carry out data science in questionable ways – they can refuse on the grounds that it is against their professional code of conduct.

13.3.2 What Is/Are Ethics?

Broadly speaking, ethics refers to the study and definition of right and wrong conducts. Ethics may consider what is a right or a wrong action in general, or consider how broad ethical principles are appropriately applied in more specific circumstances.

And, as noted by R.W. Paul and L. Elder, ethics is not (necessarily) the same as social convention, religious beliefs, or laws [49]; that distinction is not always fully understood. The following influential ethical theories are often used to frame the debate around ethical issues in the data science context:

Kant’s golden rule: do unto others as you would have them do unto you;

Consequentialism: the end justifies the means;

Utilitarianism: act in order to maximize positive effect;

Moral Rights: act to maintain and protect the fundamental rights and privileges of the people affected by actions;

Justice: distribute benefits and harm among stakeholders in a fair, equitable, or impartial way.

In general, it is important to remember that our planet’s inhabitants subscribe to a wide variety of ethical codes, including also:

Confucianism, Taoism, Buddhism, Shinto, Ubuntu, Te Ara Tika (Maori), First Nations Principles of OCAP, various aspects of Islamic ethics, etc.

It is not too difficult to imagine contexts in which either of these (or other ethical codes, or combinations thereof) would be better-suited to the task at hand – the challenge is to remember to inquire and to heed the answers.

13.3.3 Ethics and Data Science

How might these ethical theories apply to data analysis? The (former) University of Virginia’s Centre for Big Data Ethics, Law and Policy suggested some specific examples of data science ethics questions [50]:

who, if anyone, owns data?

are there limits to how data can be used?

are there value-biases built into certain analytics?

are there categories that should never be used in analyzing personal data?

should data be publicly available to all researchers?

The answers may depend on a number of factors, not least of which being who is actually providing them. To give you an idea of some of the complexities, let us consider the first of those questions: who, if anyone, owns data?

In some sense, the data analysts who transform the data’s potential into usable insights are only one of the links in the entire chain. Processing and analyzing the data would be impossible without raw data on which to work, so the data collectors also have a strong ownership claim to the data.

But collecting the data can be a costly endeavour, and it is easy to imagine how the sponsors or employers (who made the process economically viable in the first place) might feel that the data and its insights are rightfully theirs to dispose of as they wish.

In some instances, the law may chime in as well. One can easily include other players: in the final analysis, this simple question turns out to be far from easily answered. This also highlights some of the features of the data analysis process: there is more to data analysis than just data analysis. The answer is not easily forthcoming, and may change from one case to another.

A similar challenge arises in regards to open data, where the “pro” and “anti” factions both have strong arguments (see [51]–[53], and [54] for a science-fictional treatment of the transparency-vs.-secrecy/security debate).

A general principle of data analysis is to eschew the anecdotal in favour of the general – from a purely analytical perspective, too narrow a focus on specific observations can end up obscuring the full picture (a vivid illustration can be found in [55]).

But data points are not solely marks on paper or electro-magnetic bytes on the cloud. Decisions made on the basis of data science (in all manners of contexts, from security, to financial and marketing context, as well as policy) may affect living beings in negative ways. And it can not be ignored that outlying/marginal individuals and minority groups often suffer disproportionately at the hands of so-called evidence-based decisions [56]–[58].

13.3.4 Guiding Principles

Under the assumption that one is convinced of the importance of proceeding ethically, it could prove helpful to have a set of guiding principles to aid in these efforts.

In his seminal science fiction series about positronic robots, Isaac Asimov introduced the now-famous Laws of Robotics, which he believed would have to be built-in so that robots (and by extension, any tool used by human beings) could overcome humanity’s Frankeinstein’s complex (the fear of mechanical beings) and help rather than hinder human social, scientific, cultural, and ecomomic activities [59]:

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.

2. A robot must obey the orders given to it by human beings, except where such orders would conflict with the 1st Law.

3. A robot must protect its own existence as long as such protection does not conflict with the 1st and 2nd Law.

Had they been uniformly well-implemented and respected, the potential for story-telling would have been somewhat reduced; thankfully, Asimov found entertaining ways to break the Laws (and to resolve the resulting conflicts) which made the stories both enjoyable and insightful.

Interestingly enough, he realized over time that a Zeroth Law had to supersede the First in order for the increasingly complex and intelligent robots to succeed in their goals. Later on, other thinkers contributed a few others, filling in some of the holes.

Asimov’s (expanded) Laws of Robotics:

00. A robot may not harm sentience or, through inaction, allow sentience to come to harm.

0. A robot may not harm humanity, or, through inaction, allow humanity to come to harm, as long as this action/inaction does not conflict with the 00th Law.

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm, as long as this does not conflict with the 00th or the 0th Law.

2. A robot must obey the orders given to it by human beings, except where such orders would conflict with the 00th, the 0th or the 1st Law.

3. A robot must protect its own existence as long as such protection does not conflict with the 00th, the 0th, the 1st or the 2nd Law.

4. A robot must reproduce, as long as such reproduction does not interfere with the 00th, the 0th, the 1st, the 2nd or the 3rd Law.

5. A robot must know it is a robot, unless such knowledge would contradict the 00th, the 0th, the 1st, the 2nd, the 3rd or the 4th Law.]

We cannot speak for the validity of these laws for robotics (a term coined by Asimov, by the way), but we do find the entire set satisfyingly complete.

What does this have to do with data science? Various thinkers have discussed the existence and potential merits of different sets of Laws ([60]) – wouldn’t it be useful if there were Laws of Analytics, moral principles that could help us conduct data science ethically?

13.3.4.1 Best Practices

Such universal principles are unlikely to exist, but best practices have nonetheless be suggested over the years.

- “Do No Harm”

Data collected from an individual should not be used to harm the individual. This may be difficult to track in practice, as data scientists and analysts do not always participate in the ultimate decision process.

- Informed Consent

Covers a wide variety of ethical issues, chief among them being that individuals must agree to the collection and use of their data, and that they must have a real understanding of what they are consenting to, and of possible consequences for them and others.

- The Respect of “Privacy”

This principle is dearly-held in theory, but it is hard to adhere to it religiously with robots and spiders constantly trolling the net for personal data. In the Transparent Society, D. Brin (somewhat) controversially suggests that privacy and total transparency are closely linked [52]:

"And yes, transparency is also the trick to protecting privacy, if we empower citizens to notice when neighbors [sic] infringe upon it. Isn’t that how you enforce your own privacy in restaurants, where people leave each other alone, because those who stare or listen risk getting caught?’

- Keeping Data Public

Another aspect of data privacy, and a thornier issue – should some data be kept private? Most? All? It is fairly straightforward to imagine scenarios where adherence to the principle of public data could cause harm to individuals (revealing the source of a leak in a country without where the government routinely jails members of the opposition, say), contradicting the first principle against causing harm. But it is just as easy to imagine scenarios where keeping data private would have a similar effect.

- Opt-in/Opt-out

Informed consent requires the ability to not consent, i.e. to opt out. Non-active consent is not really consent.

- Anonymize Data

Identifying fields should be removed from the dataset prior to processing and analysis. Let any temptation to use personal information in an inappropriate manner be removed from the get-go, but be aware that this is easier said than done, from a technical perspective.

- Let the Data Speak

It is crucial to absolutely restrain one’s self from cherry-picking the data. Use all of it in some way or another; validate your analysis and make sure your results are repeatable.

13.3.5 The Good, the Bad, and the Ugly

Data projects could whimsically be classified as good, bad or ugly, either from a technical or from an ethical standpoint (or both). We have identified instances in each of these classes (of course, our own biases are showing):

good projects increases knowledge, can help uncover hidden links, and so on: [14]–[16], [20], [23], [24], [30], [61]–[68]

bad projects, if not done properly, can lead to bad decisions, which can in turn decrease the public’s confidence and potentially harm some individuals: [17], [21], [28], [29], [55]

ugly projects are, flat out, unsavoury applications; they are poorly executed from a technical perspective, or put a lot of people at risk; these (and similar approaches/studies) should be avoided: [26], [27], [56]–[58], [69]

13.4 Analytics Workflow

An overriding component of the discussion so far has been the importance of context. And although the reader may be eager at this point to move into data analysis proper, there is one more context that should be considered first – the project context.

We have alluded to the idea that data science is much more than simply data analysis and this is apparent when we look at the typical steps involved in a data science project. Inevitably, data analysis pieces take place within this larger project context, as well as in the context of a larger technical infrastructure or pre-existing system.

13.4.1 The “Analytical” Method

As with the scientific method, there is a “step-by-step” guide to data analysis:

statement of objective

data collection

data clean-up

data analysis/analytics

dissemination

documentation

Notice that data analysis only makes up a small segment of the entire flow.

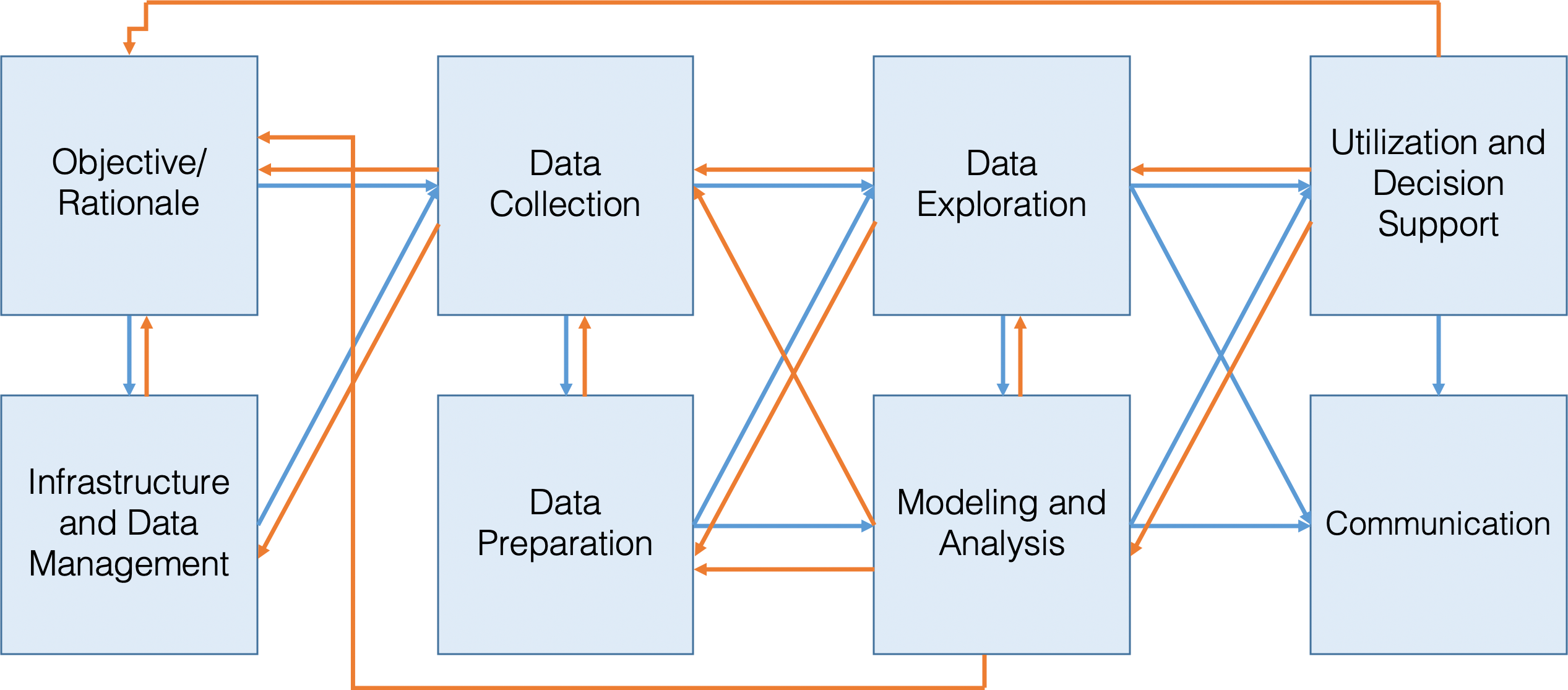

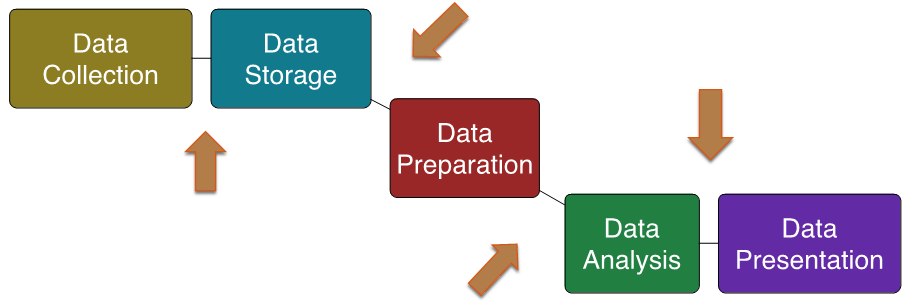

In practice, the process often end up being a bit of a mess, with steps taken out of sequence, steps added-in, repetitions and re-takes (see Figure 13.6).

Figure 13.6: The reality of the analytic workflow – it is definitely not a linear process! [peronsal file].

And yet… it tends to work on the whole, if conducted correctly.

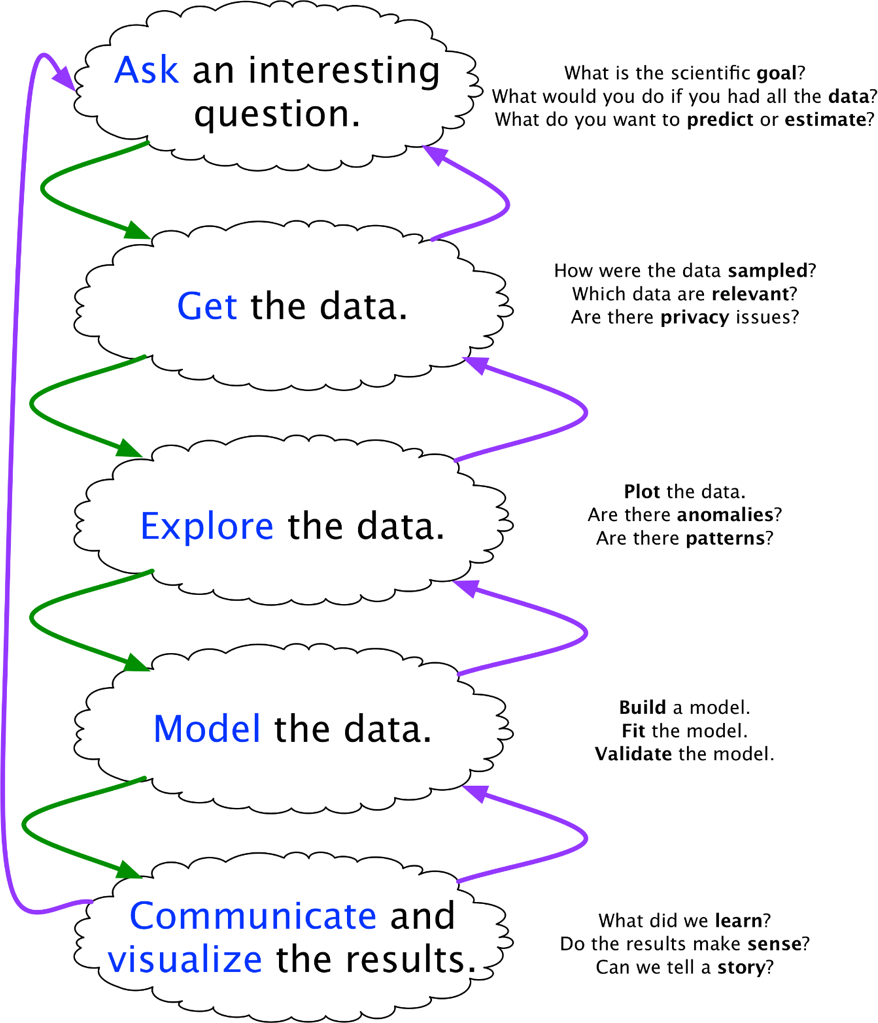

J. Blitzstein and H. Pfister (who teach a well-rated data science course at Harvard) provide their own workflow diagram, but the similarities are easy to spot (see Figure 13.7).

Figure 13.7: Blitzstein and Pfister’s data science workflow [reference lost].

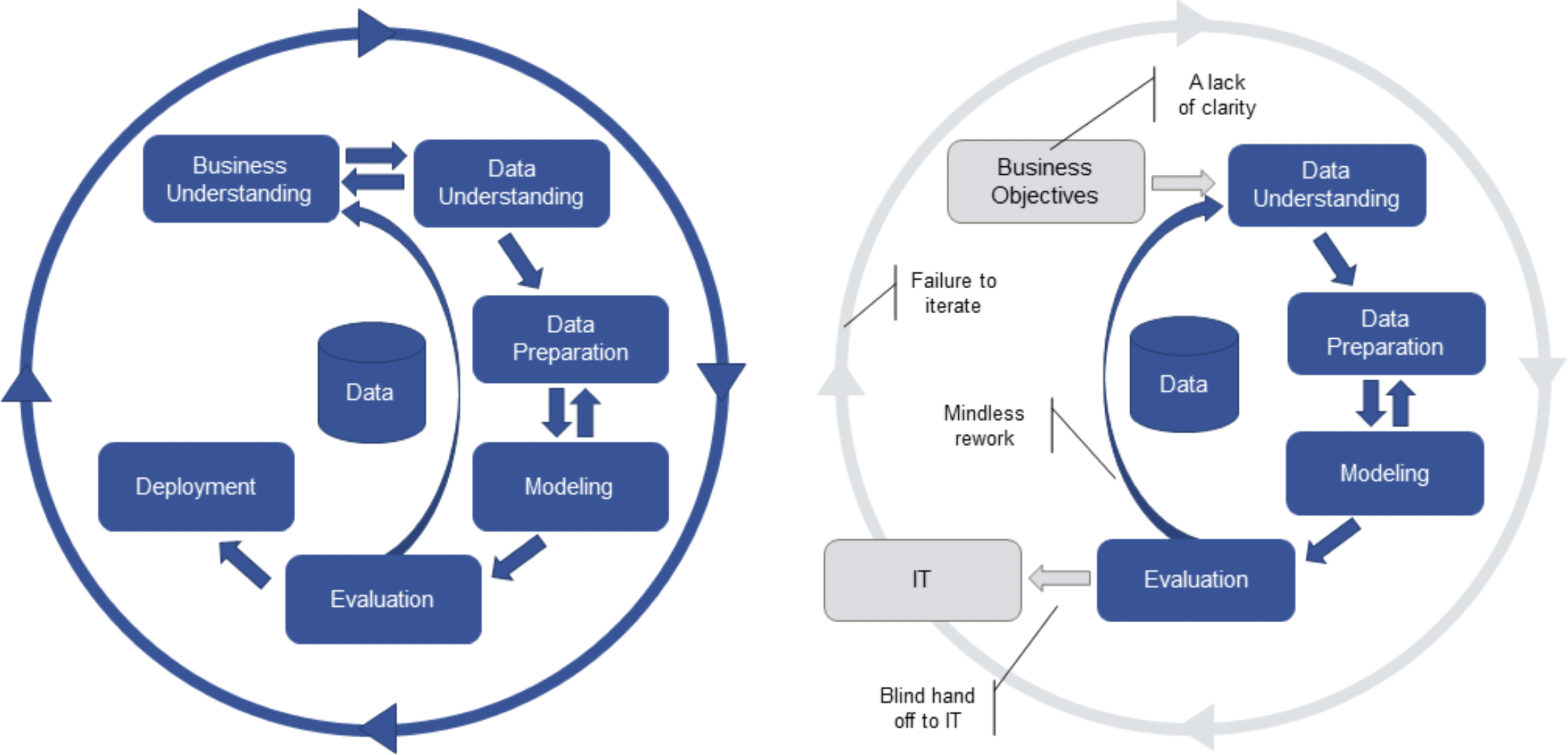

The Cross Industry Standard Process, Data Mining is another such framework, with projects consisting of 6 steps:

business understanding

data understanding

data preparation

modeling

evaluation

deployment

The process is iterative and interactive – the dependencies are highlighted in Figure 13.8.

Figure 13.8: Theoretical (on the left) and corrupted (on the righ) CRISP-DM processes [70].

In practice, data analysis is often corrupted by:

lack of clarity;

mindless rework;

blind hand-off to IT, and

failure to iterate.

CRISP-DM has a definite old-hat flavour (as witnessed by the use of the outdated expression “data mining”), but it can be useful to check off its sub-components, if only as a sanity check.

- Business Understanding

understanding the business goal

assessing the situation

translating the goal in a data analysis objective

developing a project plan

- Data Understanding

considering data requirements

collecting and exploring data

- Data Preparation

selection of appropriate data

data integration and formatting

data cleaning and processing

- Modeling

selecting appropriate techniques

splitting into training/testing sets

exploring alternatives methods

fine tuning model settings

- Evaluation

evaluation of model in a business context

model approval

- Deployment

reporting findings

planning the deployment

deploying the model

distributing and integrating the results

developing a maintenance plan

reviewing the project

planning the next steps

All these approaches have a common core: data science projects are iterative and (often) non-sequential.

Helping the clients and/or stakeholders recognize this central truth will make it easier for analysts and consultants to plan the data science process and to obtain actionable insights for organizations and sponsors.

The main take-away from this section, however, is that there is a lot of real estate in the process before we can even start talking about modeling and analysis – in truth, data analysis is not solely about data analysis.

13.4.2 Data Collection, Storage, Processing, and Modeling

Data enters the data science pipeline by first being collected. There are various ways to do this:

data may be collected in a single pass;

it may be collected in batches, or

it may be collected continuously.

The mode of entry may have an impact on the subsequent steps, including on how frequently models, metrics, and other outputs are updated.

Once it is collected, data must be stored. Choices related to storage (and processing) must reflect:

how the data is collected (mode of entry);

how much data there is to store and process (small vs. big), and

the type of access and processing that will be required (how fast, how much, by whom).

Unfortunately, stored data may go stale (both figuratively, as in addresses no longer accurate, names having changed, etc., and literally, as in physical decay of the data and storage space); regular data audits are recommended.

The data must be processed before it can be analyzed. This is discussed in detail in Data Preparation, but the key point is that raw data has to be converted into a format that is amenable to analysis, by

identifying invalid, unsound, and anomalous entries;

dealing with missing values;

transforming the variables and the datasets so that they meet the requirements of the selected algorithms.

In contrast, the analysis step itself is almost anti-climactic – simply run the selected methods/algorithms on the processed data. The specifics of this procedure depend, of course, on the choice of method/algorithm.

We will not yet get into the details of how to make that choice25, but data science teams should be familiar with a fair number of techniques and approaches:

data cleaning

descriptive statistics and correlation

probability and inferential statistics

regression analysis (linear and other variants)

survey sampling

bayesian analysis

classification and supervised learning

clustering and unsupervised learning

anomaly detection and outlier analysis

time series analysis and forecasting

optimization

high-dimensional data analysis

stochastic modeling

distributed computing

etc.

These only represent a small slice of the analysis pie; it is difficult to imagine that any one analyst/data scientist could master all (or even a majority of them) at any moment, but that is one of the reasons why data science is a team activity (more on this in Roles and Responsibilities).

13.4.3 Model Assessment and Life After Analysis

Before applying the findings from a model or an analysis, one must first confirm that the model is reaching valid conclusions about the system of interest.

All analytical processes are, by their very nature, reductive – the raw data is eventually transformed into a small(er) numerical outcome (or summary) by various analytical methods, which we hope is still related to the system of interest (see Conceptual Frameworks for Data Work).

Data science methodologies include an assessment (evaluation, validation) phase. This does not solely provide an analytical sanity check (i.e., are the results analytically compatible with the data?); it can be used to determine when the system and the data science process have stepped out of alignment. Note that past successes can lead to reluctance to re-assess and re-evaluate a model (the so-called tyranny of past success); even if the analytical approach has been vetted and has given useful answers in the past, it may not always do so.

At what point does one determine that the current data model is out-of-date? At what point does one determine that the current model is no longer useful? How long does it take a model to react to a conceptual shift?26 This is another reason why regular audits are recommended – as long as the analysts remain in the picture, the only obstacle to performance evaluation might be the technical difficulty of conducting said evaluation.

When an analysis or model is ‘released into the wild’ or delivered to the client, it often take on a life of its own. When it inevitably ceases to be current, there may be little that (former) analysts can do to remedy the situation.

Data analysts and scientists rarely have full (or even partial) control over model dissemination; consequently, results may be misappropriated, misunderstood, shelved, or failed to be updated, all without their knowledge. Can conscientious analysts do anything to prevent this?

Unfortunately, there is no easy answer, short of advocating for analysts and consultants to not solely focus on data analysis – data science projects afford an opportunity to educate clients and stakeholders as to the importance of these auxiliary concepts.

Finally, because of analytic decay, it is crucial not to view the last step in the analytical process as a static dead end, but rather as an invitation to return to the beginning of the process.

13.4.4 Automated Data Pipelines

In the service delivery context, the data analysis process is typically implemented as an automated data pipeline, to enable the analysis process to occur repeatedly and automatically.

Data pipelines are usually consist of 9 components (5 stages and 4 transitions, as in Figure @ref(fig:automated_pipeline)):

data collection

data storage

data preparation

data analysis

data presentation

Each of these components must be designed and then implemented. Typically, at least one pass of the data analysis process has to be done manually before the implementation is completed.

We will return to this topic in Structuring and Organizing Data).

13.5 Non-Technical Aspects of Data Work

The main skill set of a data scientist is the ability to apply quantitative methods to business problems in order to obtain actionable insight.

As mentioned previously, it is impossible for any given individual to have expertise in every field of mathematics, statistics, and computer science. In our experience, the best outputs are achieved when a small team of data scientists and consultants possesses expertise in 2 or 3 areas, a decent understanding of related disciplines, and a passing knowledge in a variety of other domains.

This requires analysts to:

keep up with trends;

implement knowledge redundancies on the team;

become conversant in non-expertise areas, and

to know where to find information (online, in books, or from external resources).

In this section, however, we focus on the non-technical aspects of quantitative work. Note that these are not just bells and whistles; analysts that neglect them will see their project fail, no matter how cleverly their analyses were conducted (see [71] for more details).

13.5.1 The Data Science Framework

The perfect data scientist is both reliable and extremely skilled; but in a pinch, it’s much preferable to be merely good and reliable than to be great but flaky. (B. Rayfield, paraphrased)

Data scientists’ duties could include some of the following:

making recommendations to improve products or services;

implementing solutions;

breathing new life into a failing project;

training colleagues,

etc.

More specifically, good data scientists are expected to:

have business acumen;

learn how to manage projects from inception to completion while working with various people, on various projects, and understanding that these people could also be working on various projects;

be able to slot into various team roles, recognize when to take the lead and when to take a backseat, when to focus on building consensus and when to focus on getting the work done;

seek personal and professional development, which means that the learning never stops;

always display professionalism (externally and internally), take ownership of failures and share the credit in successes, treat colleagues, stakeholders, and clients with respect, and demand respect for teammates, stakeholders, and clients as well;

act according to their ethical system;

hone their analytical, predictive, and creative thinking skills;

rely on their emotional intelligence, as it is not sufficient to have a high IQ and recognize stated and tacit colleagues’ and clients’ needs, and

communicate effectively with clients, stakeholders, and colleagues, to manage projects and deliver results.

13.5.2 Multiple “I”s Approach to Quantitative Work

While technical and quantitative proficiency (or expertise) is of course necessary to do good quantitative work, it is not sufficient – optimal real-world solutions may not always be the optimal academic or analytical solutions. This can be a difficult pill to swallow for individuals that have spent their entire education on purely quantitative matters.

The analysts’ focus should then shift to the delivery of useful analyses, obtained via the Multiple “I”s approach to data science:

intuition – understanding the data and the analysis context;

initiative – establishing an analysis plan;

innovation – searching for new ways to obtain results, if required;

insurance – trying more than one approach, even when the first approach worked;

interpretability – providing explainable results;

insights – providing actionable results;

integrity – staying true to the analysis objectives and results;

independence – developing self-learning and self-teaching skills;

interactions – building strong analyses through (often multi-disciplinary) teamwork;

interest – finding and reporting on interesting results;

intangibles – putting a bit of yourself in the results and deliverables, and thinking “outside the box”;

inquisitiveness – not simply asking the same questions over and over again.

Data scientists should not only heed the Multiple “I”s at the delivery stage of the process – they can inform every other stage leading to it.

13.5.3 Roles and Responsibilities

To leverage Big Data efficiently, an organization needs business analysts, data scientists, and big data developers and engineers. [De Mauro, Greco, Grimaldi [73]]

A data analyst or a data scientist (in the singular) is unlikely to get meaningful results – there are simply too many moving parts to any data project.

Successful projects require teams of highly-skilled individuals who understand the data, the context, and the challenges faced by their teammates.27

Depending on the scope of the project, the team’s size could vary from a few to several dozens (or more!) – it is typically easier to manage small-ish teams (with 1-4 members, say).

Figure 13.9: A data science team in action, warts and all [Meko Deng, 2017].

Our experience as consultants and data scientists has allowed us to identify the following quantitative/data work roles.28

- Project Managers / Team Leads

have to understand the process to the point of being able to recognize whether what is being done makes sense, and to provide realistic estimates of the time and effort required to complete tasks. Team leads act as interpreters between the team and the clients/stakeholders, and advocate for the team.29 They might not be involved with the day-to-day aspects of the projects but are responsible for the project deliverables.

- Domain Experts / SMEs

are, quite simply, authorities in a particular area or topic. Not “authority” in the sense that their word is law, but rather, in the sense that they have a comprehensive understanding of the context of the project, either from the client/stakeholder side, or from past experience. SMEs can guide the data science team through the unexpected complications that arise from the disconnect between data science team and the people “on-the-ground”, so to speak.

- Data Translators

have a good grasp on the data and the data dictionary, and help SMEs transmit the underlying context to the data science team.

- Data Engineers / Database Specialists

work with clients and stakeholders to ensure that the data sources can be used down the line by the data science team. They may participate in the analyses, but do not necessarily specialize in esoteric methods and algorithms. Most data science activities require the transfer of some client data to the analysis team. In many instances, this can be as simple as sending a

.csvfile as an e-mail attachment. In other instances, there are numerous security and size issues that must be tackled before the team can gain access to the data.- Data Scientists

are team members who work with the processed data to build sophisticated models that provide actionable insights. They have a sound understanding of algorithms and quantitative methods, and of how they can be applied to a variety of data scenarios. They typically have 2 or 3 areas of expertise and can be counted on to catch up on new material quickly.

- Computer Engineers

design and build computer systems and other similar devices. They are also involved in software development, which is frequently used to deploy data science solutions.

- Artificial Intelligence/Machine Learning Quality Assurance/Quality Control (AI/ML QA/QC) Specialists

design testing plans for solutions that implement AI/ML models; in particular, they should help the data science team determine whether the models are able to learn.

- Communication Specialists

are team members who can communicate the actionable insights to managers, policy analysts, decision-makers and other stake holders. They participate in the analyses, but do not necessarily specialize in esoteric methods and algorithms. They should keep on top of popular accounts of quantitative results. They are often data translators, as well.

Another complication is provided by the fact that data science projects can be downright stressful. In an academic environment, the pace is significantly looser, but

deadlines still exist (exams, assignments, theses),

work can pile up (multiple courses, TAs, etc.)

In the workplace, there are two major differences:

a data science project can only really receive 1 of 3 “grades”: A+ (exceeded expectations), A- (met expectation), or F (didn’t meet expectations);

while project quality is crucial, so is timeliness – missing a deadline is just as damaging as turning in uninspired or flawed work; perfect work delivered late may cost the client a sizeable amount of money.

Sound project management and scheduling can help alleviate some of the stress related to these issues. These are the purview of project managers and team leads, as is the maintenance of the quality of team interactions, which can make or break a project:

treat colleagues/clients with respect AT ALL TIMES – that includes emails, Slack conversations, watercooler conversations, meetings, progress reports, etc.;

keep interactions cordial and friendly – you do not have to like your teammates, but you are all pulling in the same direction;

keep the team leader/team abreast of developments and hurdles – delays may affect the project management plan in a crucial manner (plus your colleagues might be able to offer suggestions), and

respond to requests and emails in a timely manner (within reason, of course).

13.5.4 Non-Technical Data Cheat Sheet

We will end this section with a 12-point TL;DR (too long; didn’t read) snippet that summarizes the profession. These were collected (sometimes rather painfully) throughout the years (see [72] for more details).

Business solutions are not always academic solutions.

The data and models don’t always support the stakeholder/client’s hopes, wants, and needs.

Timely communication is key – with the client and stakeholders, and with your team.

Data scientists need to be flexible (within reason), and willing and able to learn something new, quickly.

Not every problem calls for data science methods.

We should learn from both from our good and our bad experiences.

Manage projects and expectations.

Maintain a healthy work-life balance.

Respect the client, the project, the methods, and ther team.

Data science is not about how smart we are; it is about how we can provide actionable insight.

When what the client wants can’t be done, offer alternatives.

“There ain’t no such thing as a free lunch.”

13.6 Getting Insight From Data

With all of the appropriate context now in mind, we can finally turn to the main attraction, data analysis proper. Let us start this section with a few definitions, in order to distinguish between some of the common categories of data analysis.

What is Data Analysis?

We view finding patterns in data as being data analysis’s main goal. Alternatively, we could describe the data analysis process as using data to:

answer specific questions;

help in the decision-making process;

create models of the data;

describe or explain the situation or system under investigation;

etc.

While some practitioners include other analytical-like activities, such as testing (scientific) hypotheses, or carrying out calculations on data, we think of those as separate activities.

What is Data Science?

One of the challenges of working in the data science field is that nearly all quantitative work can be described as data science (often to a ridiculous extent).

Our simple definition paraphrase T. Kwartler: data science is the collection of processes by which we extract useful and actionable insights from data. Robinson [74] further suggests that these insights usually come via visualization and (manual) inferential analysis.

The noted data scientist H.Mason thinks of the discipline as “the working intersection of statistics, engineering, computer science, domain expertise, and”hacking" [75]).

What is Machine Learning?

Starting in the 1940s, researchers began to take seriously the idea that machines could be taught to learn, adapt and respond to novel situations.

A wide variety of techniques, accompanied by a great deal of theoretical underpinning, were created in an effort to achieve this goal.

Machine learning is typically used in a second stage, to obtain “predictions” (or “advice”), while reducing the operator’s analytical, inferential and decisional workload (although it is still present to some extent) [74].

What is Artificial/Augmented Intelligence?

The science fiction answer is that artificial intelligence is non-human intelligence that has been engineered rather than one that has evolved naturally. Practically speaking, this translates to “computers carrying out tasks that only humans can do”.

A.I. attempts to remove the need for oversight, allowing for automatic “actions” to be taken by a completely unattended system.

These goals are laudable in an academic setting, but we believe that stakeholders (and humans, in general) should not seek to abdicate all of their agency in the decision-making process; as such, we follow the lead of various thinkers and suggest further splitting A.I. into general A.I. (who would operate independently of human intelligence) and augmented intelligence (which enhances human intelligence).

13.6.1 Asking the Right Questions

Definitions aside, however, data analysis, data science, machine learning, and artificial intelligence are about asking questions and providing answers to these questions. We might ask various types of questions, depending on the situation.

Our position is that, from a quantitative perspective, there are only really three types of questions:

analytics questions

data science questions, and

quantitative methods questions.

Analytics questions could be something as simple as:

how many clicks did a specific link on my website get?

Data science questions tend to be more complex – we might ask something along the lines of:

if we know, historically, when or how often people click on links, can we predict how many people from Winnipeg will access a specific page on our website within the next three hours?

Whereas analytics-type questions are typically answered by counting things, data science-like questions are answered by using historical patterns to make predictions.

Quantitative methods questions might, in our view, be answered by making predictions but not necessarily based on historical data. We could build a model from first principles – the “physics” of the situation, as it were – to attempt to figure out what might happen.

For instance, if we thought there was a correlation between the temperature in Winnipeg and whether or not people click on the links in our website, then we might build a model that predicts “how many people from Winnipeg will access a page in the next week?”, say, by trying to predict the weather instead,30 which is not necessarily an easy task.

Analytics models do not usually predict or explain anything – they just report on the data, which is itself meant to represent the situation. A data mining or a data science model tends to be predictive, but not necessarily explanatory – it shows the existence of connections, of correlations, of links, but without explaining why the connections exist.

In a quantitative method model, we may start by assuming that we know what the links are, what the connections are – which presumably means that we have an idea as to why these connections exist31 – and then we try to explore the consequences of the existence of these connections and these links.

This leads to a singular realization that we share with new data scientists and analysts (potentially the single most important piece of advice they will receive in their quantitative career, and we are only half-joking when we say it):

not every situation calls for analytics, data science, statistical analysis, quantitative methods, machine learning, or A.I.

Take the time to identify instances where more is asked out of the data than what it can actually yield, and be prepared to warn stakeholders, as early as possible, when such a situation is encountered.

If we cannot ask the right questions of the data, of the client, of the situation, and so on, any associated project is doomed to fail from the very beginning. Without questions to answer, analysts are wasting their time, running analyses for the sake of analysis – the finish line cannot be reached if there is no finish line.

In order to help clients/stakeholders, data analysts and scientists need:

questions to answer,

questions that can be answered by the types of methods and skills at their disposal, and

answers that will be recognized as answers.

“How many clicks did this link get?” is a question that is easily answerable if we have a dataset of links and clicks, but it might not be a question that the client cares to see answered.

Data analysts and scientists often find themselves in a situation where they will ask the types of questions that can be answered with the available data, but the answers might not prove actually useful.

From a data science perspective, the right question is one that leads to actionable insights. And it might mean that old data is discared and new data is collected in order to answer it. Analysts should beware: given the sometimes onerous price tag associated to data collection, it is not altogether surprising that there will sometimes be pression from above to keep working with the available data.

Stay strong – analysis on the wrong dataset is the wrong analysis!

13.6.1.1 The Wrong Questions

Wrong questions might be:

questions that are too broad or too narrow;

questions that no amount of data could ever answer;

questions for which data cannot reasonably be obtained, etc.

One of the issues with “wrong” questions is that they do not necessarily “break the pipeline”:

in the best-case scenario, stakeholders, clients, colleagues will still recognize the answers as irrelevant.

in the worst-case scenario, policies will erroneously be implemented (or decisions made) on the basis of answers that have not been identified as misleading and/or useless.

13.6.2 Structuring and Organizing Data

Let us now resume the discussion that was cut short in What Is Data? and From Objects and Attributes to Datasets.

13.6.2.1 Data Sources

We cannot have insights from data without data. As with many of the points we have made, this may seem trivially obvious, but there are many aspects of data acquisition, structuring, and organization that have a sizable impact on what insights can be squeezed from data.

More specifically, there are a number of questions that can be considered:

why do we collect data?

what can we do with data?

where does data come from?

assuming we collect data so we can have a collection of data, what does “a collection” of data look like?

how can we describe data?

do we need to distinguish between data, information, knowledge?32

Historically, data has had three functions:

record keeping – people/societal management (!);

science – new general knowledge, and

intelligence – business, military, police, social (?), domestic (?), personal (!)

Traditionally, each of these functions has

used different sources of information;

collected different types of data, and

had different data cultures and terminologies.

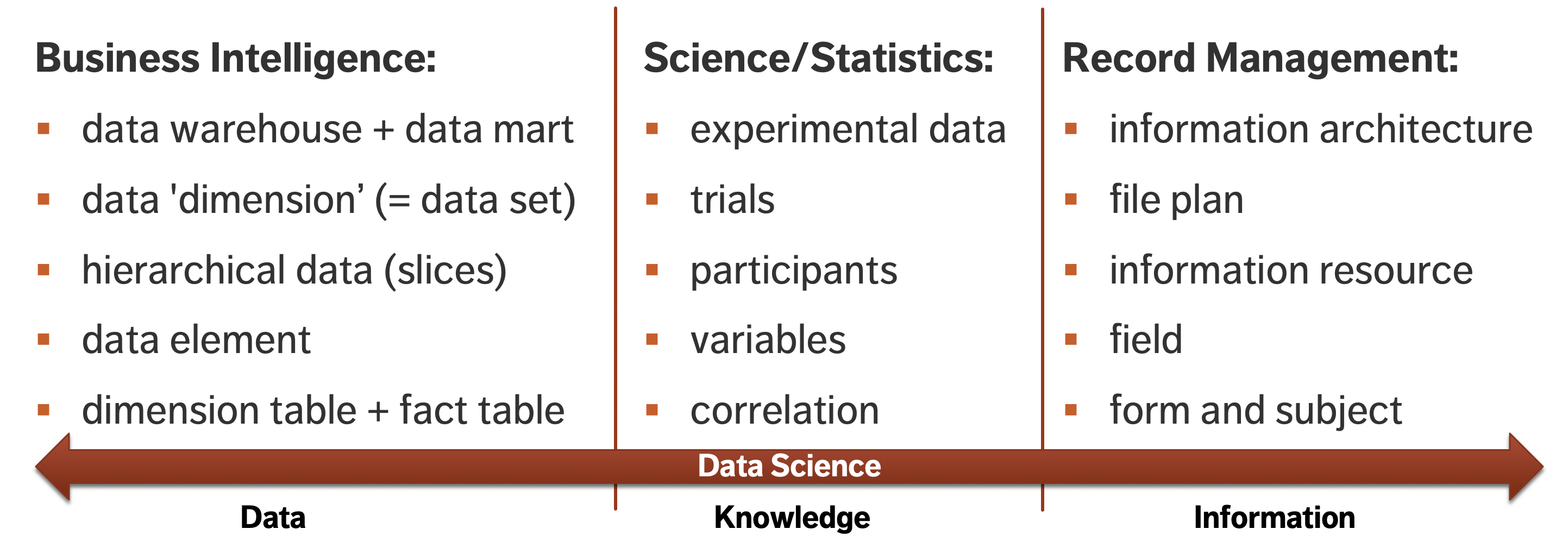

As data science is an interdisciplinary field, it should come as no surprise that we may run into all of them on the same project (see Figure 13.10).

Figure 13.10: Different data cultures and terms.

Ultimately, data is generated from making observations about and taking measurements of the world. In the process of doing so, we are already imposing particular conceptualizations and assumptions on our raw experience.

More concretely, data comes from a variety of sources, including:

records of activity,

(scientific) observations,

sensors and monitoring, and,

more frequently lately, from computers themselves.

As discussed in Section The Analog/Digital Data Dichotomy, although data may be collected and recorded by handed, it is fast becoming a mostly digital phenomenon.

Computer science (and information science) has its own theoretical, fundamental viewpoint about data and information, operating over data in a fundamental sense – 1s and 0s that represent numbers, letters, etc. Pragmatically, the resulting data is now stored on computers, and is accessible through our world-wide computer network.

While data is necessarily a representation of something else, analysts should endeavour to remember that the data itself still has physical properties: it takes up physical space and requires energy to work with.

In keeping with this physical nature, data also has a shelf life – it ages over time. We use the phrase “rotten data” or “decaying data” in one of two senses:

literally, as the data storage medium might decay, but also

metaphorically, as when it no longer accurately represents the relevant objects and relationships (or even when those objects no longer exist in the same way) – compare with “analytical decay” (see Model Assessment and Life After Analysis).

Useful data must stay ‘fresh’ and ‘current’, and avoid going ‘stale’ – but that is both context- and model-dependent!

13.6.2.2 Before the Data

The various data-using disciplines share some core (systems) concepts and elements, which should resonate with the systems modeling framework previously discussed in Conceptual Frameworks for Data Work:

all objects have attributes, whether concrete or abstract;

for multiple objects, there are relationships between these objects/attributes, and

all these elements evolve over time.

The fundamental relationships include:

part–whole;

is–a;

is–a–type–of;

cardinality (one-to-one, one-to-many, many-to-many),

etc.,

while object-specific relationships include:

ownership;

social relationship;

becomes;

leads-to,

etc.

13.6.2.3 Objects and Attributes

We can examine concretely the ways in which objects have properties, relationships and behaviours, and how these are captured and turned into data through observations and measurements, via the apple and sandwich example of What Is Data?.

There, we made observations of an apple instance, labeled the type of observation we made, and provided a value describing the observation. We can further use these labels when observing other apple instances, and associate new values for these new apple instances.

Regarding the fundamental and object specified relationships, we might be able to see that:

an apple is a type of fruit,

a sandwich is part of a meal,

this apple is owned by Jen,

this sandwich becomes fuel,

etc.

It is worth noting that while this all seems tediously obvious to adult humans, it is not so from the perspective of a toddler, or an artificial intelligence. Explicitly, “understanding” requires a basic grasp of:

categories,

instances,

types of attributes,

values of attributes, and

which of these are important or relevant to a specific situation or in general terms.

13.6.2.4 From Attributes to Datasets

Were we to run around in an apple orchard, measuring and jotting down the height, width and colour of 83 different apples completely haphazardly on a piece of paper, the resulting data would be of limited value; although it would technically have been recorded, it would be lacking in structure.

We would not be able to tell which values were heights and which were widths, and which colours or which widths were associated with which heights, and vice-versa. Structuring the data using lists, tables, or even tree structures allows analysts to record and preserve a number of important relationships:

those between object types and instances, property, attribute types (sometimes also called fields, features or dimensions), and values,

those between one attribute value and another value (i.e., both of these values are connected to this object instance),

those between attribute types, in the case of hierarchical data, and

those between the objects themselves (e.g., this car is owned by this person).

Tables, also called flat files, are likely the most familiar strategy for structuring data in order to preserve and indicate relationships. In the digital age, however, we are developing increasingly sophisticated strategies to store the structure of relationships in the data, and finding new ways to work with these increasingly complex relationship structures.

Formally, a data model is an abstract (logical) description of both the dataset structure and the system, constructed in terms that can be implemented in data management software.

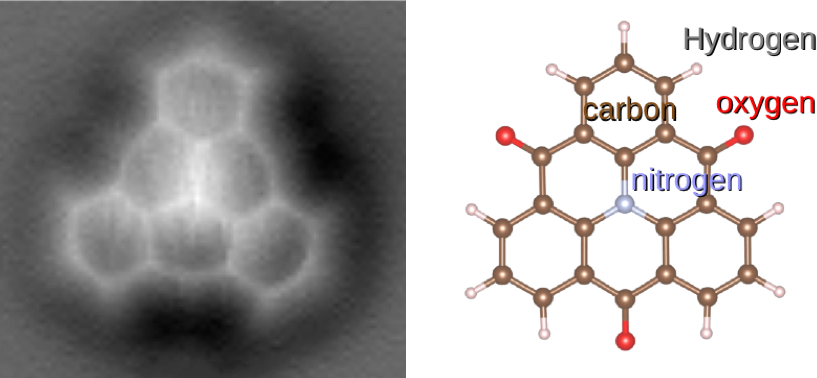

In a sense, data models lie halfway between conceptual models and database implementations. The data proper relates to instances; the model to object types. Ontologies provide an alternative representation of the system: simply put, they are structured, machine-readable collections of facts about a domain.33

In a sense, an ontology is an attempt to get closer to the level of detail of a full conceptual model, while keeping the whole machine-readable (see Figure 13.11 for an example).

Figure 13.11: Representation of Langerhans cells in the Cell Ontology [78].

Every time we move from a conceptual model to a specific type of model (a data model, a knowledge model), we lose some information. One way to preserve as much context as possible in these new models is to also provide rich metadata – data about the data! Metadata is crucial when it comes to successfully working with and across datasets. Ontologies can also play a role here, but that is a topic for another day.

Typically data is stored in a database. A major motivator for some of the new developments in types of databases and other data storing strategies is the increasing availability of unstructured and (so-called) ‘BLOB’ data.

Structured data is labeled, organized, and discrete, with a pre-defined and constrained form. With that definition, for instance, data that is collected via an e-form that only uses drop-down menus is structured.

Unstructured data, by comparison, is not organized, and does not appear in a specific pre-defined data structure – the classical example is text in a document. The text may have to subscribe to specific syntactic and semantic rules to be understandable, but in terms of storage (where spelling mistakes and meaning are irrelevant), it is highly unstructured since any data entry is likely to be completely different from another one in terms of length, etc.

The acronym “BLOB” stands for Binary Large Object data, such as images, audio files, or general multi-media files. Some of these files can be structured-like (all pictures taken from a single camera, say), but they are usually quite unstructured, especially in multi-media modes.

Not every type of database is well-suited to all data types. Let us look at four currently popular database options in terms of fundamental data and knowledge modeling and structuring strategies:

key-value pairs (e.g. JSON);

triples (e.g. resource description framework – RDF));

graph databases, and

relational databases.

13.6.2.5 Key-Value Stores