Module 4 A Survey of Optimization

We start by looking at some of the most common types of single-objective optimization problems that arise in practice, and popular techniques for solving them. The following two toy problems introduce some of the fundamental notions.

Let $S$ be the set of all four-letter English words. What is the maximum number of l’s a word in $S$ can have?

There are numerous four-letter words that contain the letter “l”, for example “line”, “long”, “tilt”, and “full”. From this short list alone, we know the maximum number of l’s is at least 2 and at most 4. As “llll” is not an English word, the maximum number cannot be 4. Can the maximum number be 3? Yes, because “lull” is a four-letter word with three l’s.

This example illustrates some fundamental ideas in optimization. In order to say that 3 is the correct answer, we need to

search for a word that has three l’s, and

provide an argument that rules out any value higher than 3.

In this example, the only possible value higher than 3 is 4, which was easily ruled out. Unfortunately, that is not always easy to do, even in similar contexts.

For instance, if the problem were to find the maximum number of y’s (instead of l’s), would the same approach work?

A pirate lands on an island with a knapsack that can hold 50kg of treasure. She finds a cave with the following items:

| Item | Weight | Value | Value/kg |
|---|---|---|---|
| iron shield | 20 kg | $2800.00 | $140.00/kg |
| gold chest | 40 kg | $4400.00 | $110.00/kg |
| brass sceptre | 30 kg | $1200.00 | $40.00/kg |

Which items can she bring back home in order to maximize her reward without breaking the knapsack?

If the pirate does not take the gold chest, she can take both the iron shield and the brass sceptre for a total value of $4000. If she takes the gold chest, she cannot take any of the remaining items. However, the value of the gold chest is $4400, which is larger than the combined value of the iron shield and the brass sceptre. Hence, the pirate should just take the gold chest.

Here, we performed a case analysis and exhausted all the promising possibilities to arrive at our answer. Note that a greedy strategy that chooses items in descending value per weight would give us the sub-optimal solution of taking the iron shield and brass sceptre.

Even though there are problems for which the greedy approach would return an optimal solution, the second example is not such a problem. The general version of this problem is the classic binary knapsack problem and is known to be NP-hard (informally, NP-hard optimization problems are problems for which no polynomial-time algorithm is known, and none is believed to exist: when the problem size is large, the run time of known exact methods explodes).
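The case analysis and the greedy strategy for the pirate example can be compared with a short sketch. The function names `brute_force` and `greedy` are illustrative choices, not part of any library; the data is taken from the table of items.

```python
from itertools import combinations

# Items from the pirate example: name -> (weight in kg, value in dollars)
ITEMS = {
    "iron shield": (20, 2800),
    "gold chest": (40, 4400),
    "brass sceptre": (30, 1200),
}
CAPACITY = 50  # kg


def brute_force(items, capacity):
    """Try every subset of items and keep the most valuable one that fits."""
    best_value, best_subset = 0, ()
    names = list(items)
    for r in range(len(names) + 1):
        for subset in combinations(names, r):
            weight = sum(items[n][0] for n in subset)
            value = sum(items[n][1] for n in subset)
            if weight <= capacity and value > best_value:
                best_value, best_subset = value, subset
    return best_value, set(best_subset)


def greedy(items, capacity):
    """Add items in descending value-per-kg order while they still fit."""
    chosen, remaining, total = set(), capacity, 0
    by_density = sorted(items, key=lambda n: items[n][1] / items[n][0], reverse=True)
    for name in by_density:
        weight, value = items[name]
        if weight <= remaining:
            chosen.add(name)
            remaining -= weight
            total += value
    return total, chosen


print(brute_force(ITEMS, CAPACITY))  # gold chest only, value 4400
print(greedy(ITEMS, CAPACITY))       # shield and sceptre, value 4000
```

Exhaustive enumeration is only practical for a handful of items, since the number of subsets doubles with every item added.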

Many real-world optimization problems are NP-hard. Despite the theoretical difficulty, practitioners often devise methods that return “good-enough solutions” using approximation methods and heuristics. There are also ways to obtain bounds to gauge the quality of the solutions obtained. We will be looking at these issues at a later stage.

4.1 Single-Objective Optimization Problem

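A typical single-objective optimization problem consists of a domain set $\mathcal{D}$, an objective function $f:\mathcal{D}\to\mathbb{R}$, and predicates $C_i$ on $\mathcal{D}$, where $i=1,\ldots,m$ for some non-negative integer $m$, called constraints.

We want to find, if possible, an element $x\in\mathcal{D}$ such that $C_i(x)$ holds for $i=1,\ldots,m$ and the value of $f(x)$ is either as high (in the case of maximization) or as low (in the case of minimization) as possible. Compactly, single-objective optimization problems are written down as:

$$\begin{array}{rl} \min & f(x) \\ \text{s.t.} & C_i(x), \quad i=1,\ldots,m \\ & x\in\mathcal{D} \end{array}$$

in the case of minimizing $f$, or

$$\begin{array}{rl} \max & f(x) \\ \text{s.t.} & C_i(x), \quad i=1,\ldots,m \\ & x\in\mathcal{D} \end{array}$$

in the case of maximizing $f$.

Here, “s.t.” is an abbreviation for “subject to.” To be technically correct, $\min$ should be replaced with $\inf$ (and $\max$ with $\sup$) since the minimum value is not necessarily attained. However, we will abuse notation and ignore this subtle distinction.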

Some common domain sets include

$\mathbb{R}^n_+$ (the set of $n$-tuples of non-negative real numbers)

$\mathbb{Z}^n_+$ (the set of $n$-tuples of non-negative integers)

$\{0,1\}^n$ (the set of binary $n$-tuples)

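The Binary Knapsack Problem (BKP) can be formulated using the notation we have just introduced. Suppose that there are $n$ items, with item $i$ having weight $w_i$ and value $v_i$ for $i=1,\ldots,n$. Let $K$ denote the capacity of the knapsack.

Then the BKP can be formulated as:

$$\begin{array}{rl} \max & \sum_{i=1}^n v_i x_i \\ \text{s.t.} & \sum_{i=1}^n w_i x_i \le K \\ & x_i\in\{0,1\}, \quad i=1,\ldots,n \end{array}$$

In the above problem, there is only one constraint given by the inequality modeling the capacity of the knapsack. For the pirate example discussed previously, the BKP is:

$$\begin{array}{rl} \max & 2800x_1+4400x_2+1200x_3 \\ \text{s.t.} & 20x_1+40x_2+30x_3 \le 50 \\ & x_1,x_2,x_3\in\{0,1\} \end{array}$$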

4.1.1 Feasible and Optimal Solutions

An element $x\in\mathcal{D}$ satisfying all the constraints (that is, $C_i(x)$ holds for each $i=1,\ldots,m$) is called a feasible solution and its objective function value is $f(x)$. For a minimization (resp. maximization) problem, a feasible solution $x^*$ such that $f(x^*)\le f(x)$ (resp. $f(x^*)\ge f(x)$) for every feasible solution $x$ is called an optimal solution.

The objective function value of an optimal solution, if it exists, is the optimal value of the optimization problem. If an optimal value exists, it is by necessity unique, but the problem can have multiple optimal solutions. Consider, for instance, the following example: This problem has an optimal solution for every , but a unique optimal value of 1.

4.1.2 Infeasible and Unbounded Problems

It is possible that there exists no element such that holds for all . In such a case, the optimization problem is said to be infeasible. The following problem, for instance, is infeasible: Indeed, any solution must be simultaneously non-negative and smaller than , which is patently impossible. An optimization problem that is not infeasible can still fail to have an optimal solution, however.

For instance, the problem is not infeasible, but the does not exist since the objective function can take on values larger than any candidate maximum. Such a problem is said to be unbounded.

On the other hand, the problem has a positive objective function value for every feasible solution. Even though the objective function value approaches as , there is no feasible solution with an objective function value of 0. Note that this problem is not unbounded as the objective function value is bounded below by 0.

4.1.3 Possible Tasks Involving Optimization Problems

Given an optimization problem, the most natural task is to find an optimal solution (provided that one exists) and to demonstrate that it is optimal. However, depending on the context of the problem, one might be tasked to find:

a feasible solution (or show that none exists);

a local optimum;

a good bound on the optimal value;

all global solutions;

a “good” (but not necessarily optimal) solution, quickly;

a “good” solution that is robust to small changes in problem data, and/or

the $k$ best solutions.

In many contexts, the last three tasks are often more important than finding optimal solutions.

For example, if the problem data comes from measurements or forecasts, one needs to have a solution that is still feasible when deviations are taken into account.

Additionally, producing multiple “good” solutions could allow decision makers to choose a solution that has desirable properties (such as political or traditional requirements) but that is not represented by, or difficult to represent with, problem constraints.

4.2 Calculus Sidebar and Lagrange Multipliers

Optimization is quite possibly the most commonly used application of the derivative.

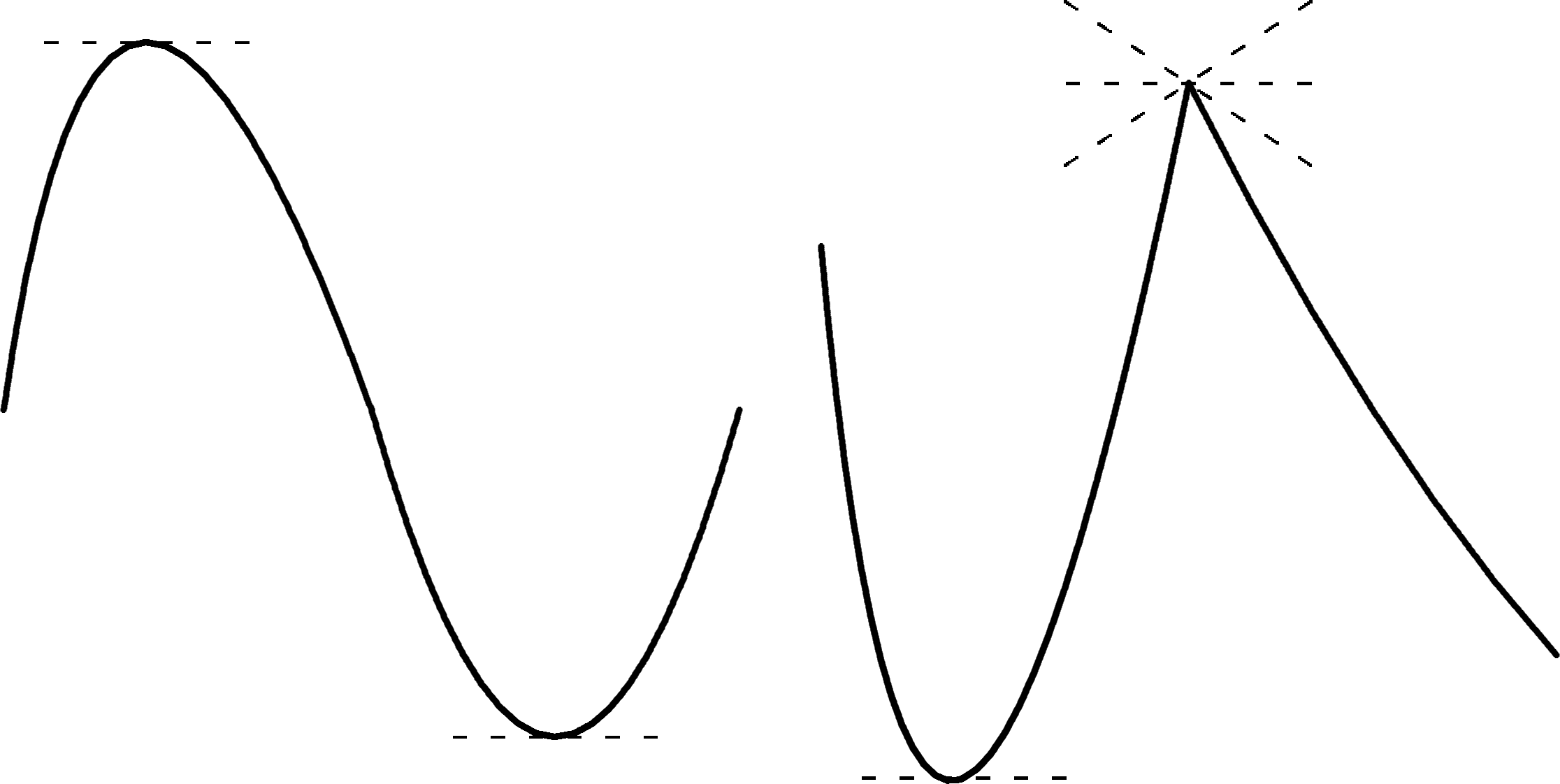

Recall that a function $f:[a,b]\to\mathbb{R}$ has a critical point at $x^*\in(a,b)$ if either $f'(x^*)=0$ or $f'(x^*)$ is undefined (see Figure 4.1).

Figure 4.1: Critical points for continuous functions of a single real variable.

If additionally $f$ is continuous, then the optimal solution of the problem of maximizing (or minimizing) $f(x)$ subject to $x\in[a,b]$ is found at one (or possibly, many) of the following feasible solutions: $x=a$, $x=b$, or $x=x^*$, where $x^*$ is a critical point of $f$ in $(a,b)$.

This can be extended fairly easily to multi-dimensional domains, with the following result.

Theorem: Let $f:D\to\mathbb{R}$ be a continuous function, where $D$ is a closed and bounded subset of $\mathbb{R}^n$. Then $f$ reaches its maximum (resp. minimum) value either at a critical point of $f$ in the interior of $D$, or somewhere on $\partial D$, the boundary of $D$.

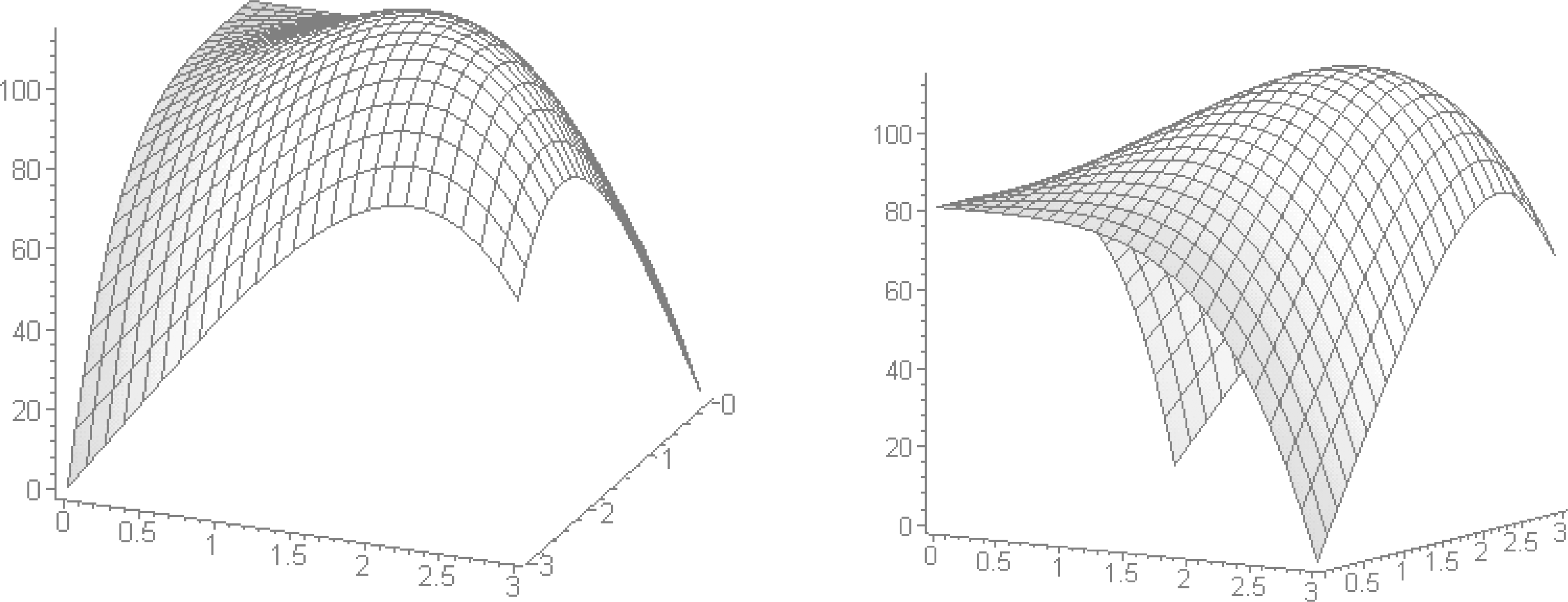

Example: Consider a company that sells gadgets and gizmos. If the company’s monthly profits are expressed (in 1000$ dollars) according to where and represent, respectively, the number of gadgets and gizmos sold monthly (in 10,000s of units), and if the company can produce up to 30,000 units of both gadgets and gizmos monthly, what is the optimal number of each items that the company must sell in order to maximize its monthly profits? The monthly profit function is shown in Figure 4.2.

Figure 4.2: Monthly profit function for the gadgets and gizmos example.

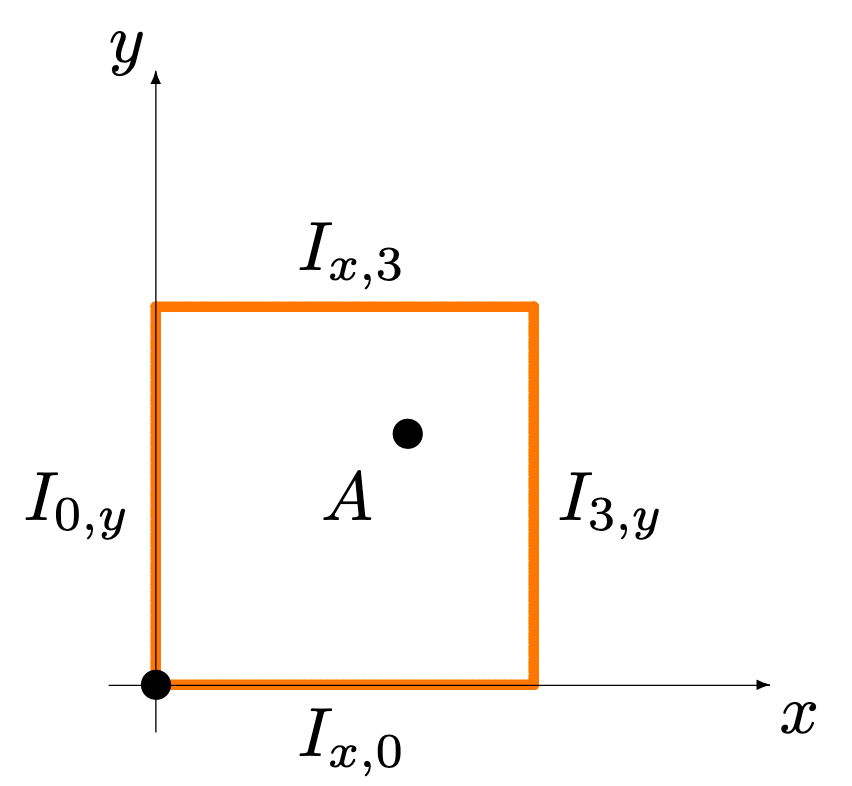

Since is continuous, the maximum value is reached at a critical value in or somewhere on the boundary

Figure 4.3: Boundary of the domain for the gadgets and gizmos example.

But is smooth; the gradient is thus always defined, and the only critical points are those for which . At such a point, , which, upon substitution in yields which is to say .

Only can potentially yield a critical point in , however. When , we must have : the only critical point of in is thus , and the monthly profit function value at that point is

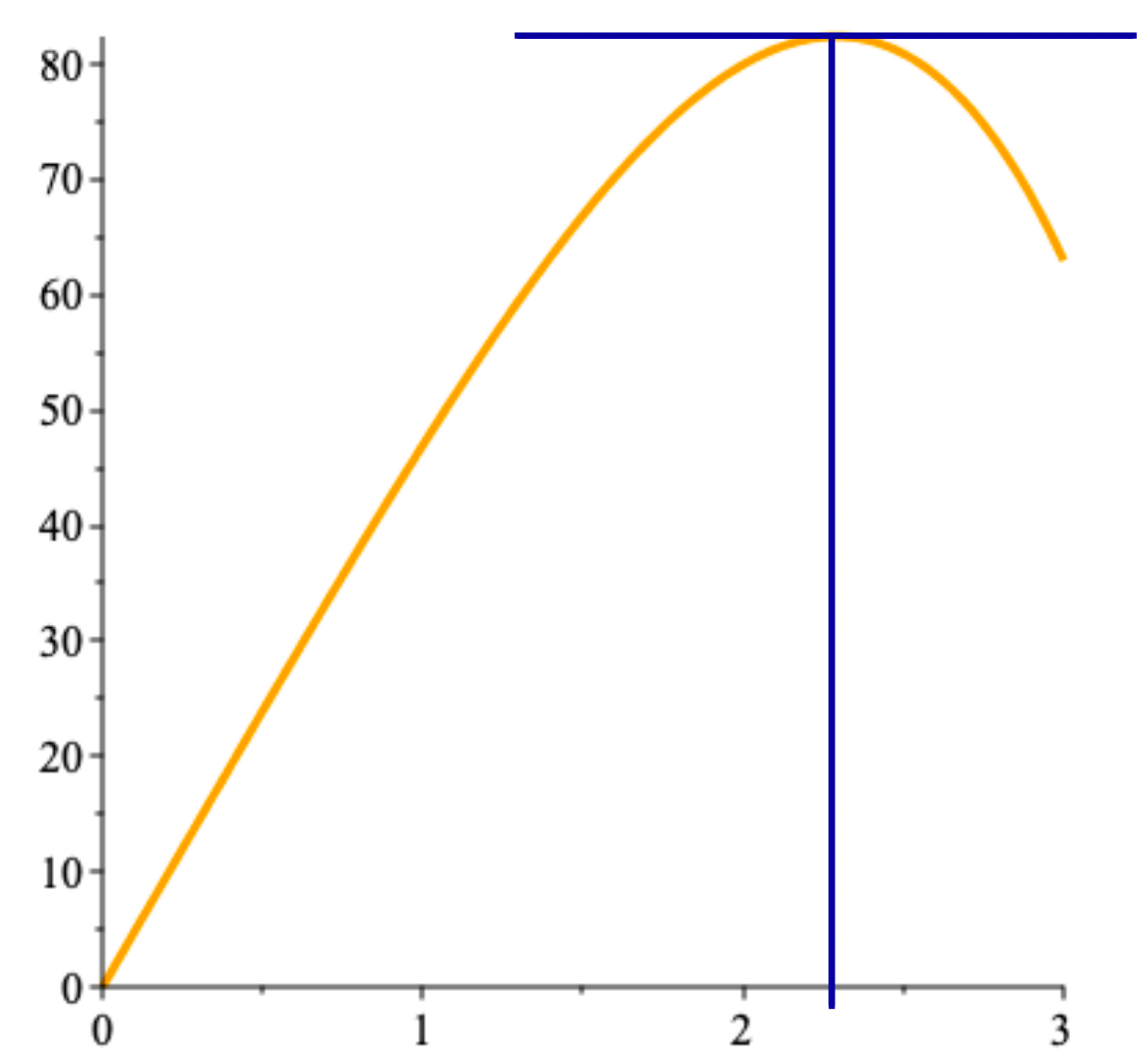

On the boundary , the objective function reduces to one of the following four forms: These are easy to optimize, being continuous functions of a single real variable; and are maximized at the origin, with the objective function taking the value 81 there, while and are maximized at , with the objective function taking the value there (see Figure 4.4).

Figure 4.4: Profile for and in the gadgets and gizmos example.

Combining all this information, we conclude that the company will maximize its monthly profits at 113,000$ if it sells 20,000 units of both the gadgets and the gizmos.
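The conclusion can be sanity-checked numerically with a coarse grid search. This is only a sketch: the profit function $81+16xy-x^4-y^4$ used below is an assumption, chosen because it reproduces the values quoted in the example (81 at the origin and 113 when 20,000 units of each product are sold); the names `profit` and `grid_maximum` are illustrative.

```python
def profit(x, y):
    """Assumed monthly profit in thousands of dollars; x and y are in 10,000s of units."""
    return 81 + 16 * x * y - x**4 - y**4


def grid_maximum(steps=300):
    """Evaluate the profit on a uniform grid over [0, 3] x [0, 3] and return (value, x, y)."""
    best = (float("-inf"), None, None)
    for i in range(steps + 1):
        for j in range(steps + 1):
            x, y = 3 * i / steps, 3 * j / steps
            candidate = (profit(x, y), x, y)
            if candidate[0] > best[0]:
                best = candidate
    return best


value, x, y = grid_maximum()
print(value, x, y)
```

A grid search gives no proof of optimality, but it is a cheap way to catch algebra mistakes in a critical-point analysis.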

While the approach we just presented works in this case, there are many instances for which it can be substantially more difficult to find the optimal value on .

The method of Lagrange multipliers can simplify the computations, to some extent. Consider the problem where are continuous and differentiable on the (closed) region described by the constraints , 1

If the problem is feasible and bounded, then the optimal value is reached either at a critical point of in or at a point for which where are the Lagrange multipliers of the problem.

Example: consider a factory that produces various types of deluxe pickle jars. The monthly number of jars of a specific kind of pickled radish that can be produced at the factory is given by where is the number of dedicated canning machines, and is the monthly number of employee-hours spent on the pickled radish.

The pay rate for the employees is 100$/hour (the pickles are extra deluxe, apparently), and the monthly maintenance cost for each canning machine is .

If the factory owners want to maintain monthly production at 36,000 jars of pickled radish, what combination of number of canning machines and employee-hour will minimize the total production costs? The optimization problem is

The objective function is linear and so has no critical point. The feasability region can be described by the constraints and . Points of interest on the boundary are obtained by solving the Lagrange equation since , with

Numerically, there is only one solution, namely The objective function at that point takes on the value and this value must either be the maximum or the minimum of the objective function subject to the constraints of the problem.

We know, however, that the point belongs to (as ; since then is indeed the minimal solution of the problem, and the minimal value of the objective function subject to the constraints is .

In practice, the value for has to be an integer (unless we consider using a different number of canning machines at various times during the month), so we might pick:

a sub-optimal canning machines, which yields

a sub-optimal employee-hours,

both of which yield a sub-optimal monthly operating cost of

This departure from optimality would nevertheless be quite likely to be acceptable to the factory owners.

Given how straightforward the method is, it might seem that there is no real need to say anything else – why would anybody ever use something other than Lagrange multipliers to solve optimization problems?

One of the issues is that when the number of constraints is too high relative to the dimension of the domain, which is usually the case in real-life situations, there may not be a finite number of candidate solutions on the boundary, which makes this approach useless.

The method also fails when the gradient of the constraint vanishes at some critical location.

Another difficulty that might arise is that the system of equations could be ill-conditioned, or highly non-linear, and numerical solutions could be hard to obtain.

Numerical methods can also be used; we will discuss those briefly in future sections.

4.3 Classification of Optimization Problems and Types of Algorithms

The computational difficulty of optimization problems, then, depends on the properties of the domain set, constraints, and the objective function.

4.3.1 Classification

Problems without constraints are said to be unconstrained. For example, least-squares minimization in statistics can be formulated as an unconstrained problem, and so can

Problems with linear constraints (i.e., linear inequalities or equalities) and a linear objective function form an important class of problems in linear programming.

Linear programming problems are by far the easiest to solve in the sense that efficient algorithms exist both in theory and in practice. Linear programming is also the backbone for solving more complex models [1].

Convex problems are problems with a convex domain set $\mathcal{D}$, which is to say a set such that $$tx+(1-t)y\in\mathcal{D}$$ for all $x,y\in\mathcal{D}$ and for all $t\in[0,1]$, and convex constraints and objective function $f$, which is to say, $$f(tx+(1-t)y)\le tf(x)+(1-t)f(y)$$ for all $x,y\in\mathcal{D}$ and for all $t\in[0,1]$.

Convex optimization problems have the property that every local optimum is also a global optimum. Such a property permits the development of effective algorithms that could also work well in practice. Linear programming is a special case of convex optimization.

Nonconvex problems (such as problems involving integer variables and/or nonlinear constraints that are not convex) are the hardest problems to solve. In general, nonconvex problems are NP-hard. Such problems often arise in scheduling and engineering applications. In the rest of the report, we will primarily focus on linear programming and on nonconvex problems whose constraints and objective function are linear, but whose domain set requires some or all of the variables to take integer values. These problems cover a large number of applications in operations research, which are often discrete in nature. We will not discuss optimization problems that arise in statistical learning and engineering applications that are modeled as nonconvex continuous models since they require different sets of techniques and methods; more information is available in [2].

4.3.2 Algorithms

We will not go into the details of algorithms for solving the problems discussed, as consultants and analysts are expected to be using off-the-shelf solvers for the various tasks, but it could prove useful for analysts to know of the various types of algorithms or methods that exist for solving optimization problems.

Algorithms fall into three families: heuristics, exact, and approximate methods.

Heuristics are normally quick to execute but do not provide guarantees of optimality. For example, the greedy heuristic for the Knapsack Problem is very quick but does not always return an optimal solution. In fact, no guarantee exists for the “validity” of a solution either.

Other heuristic methods include ant colony, particle swarm, and evolutionary algorithms, just to name a few. There are also heuristics that are stochastic in nature and come with proofs of convergence to an optimal solution. Simulated annealing and multiple random starts are such heuristics.

Unfortunately, there is no guarantee on the running time to reach optimality and there is no way to identify when one has reached an optimum point.

Exact methods return a global optimum after a finite run time.

However, most exact methods can only guarantee that constraints are approximately satisfied (though the potential violations fall below some pre-specified tolerance). It is therefore possible for the returned solutions to be infeasible for the actual problem.

There also exist exact methods that fully control the error. When using such a method, an optimum is usually given as a box guaranteed to contain an optimal solution rather than a single element.

Returning boxes rather than single elements is helpful in cases, for example, where the optimum cannot be expressed exactly as a vector of floating point numbers.

Such exact methods are used mostly in academic research and in areas such as medicine and avionics where the tolerance for errors is practically zero.

Approximate methods eventually zoom in on sub-optimal solutions, while providing a guarantee: this solution is at most $\varepsilon$ away from the optimal solution, say.

In other words, approximate methods also provide a proof of solution quality.

4.4 Linear Programming

Linear programming (LP) was developed independently by G.B. Dantzig and L. Kantorovich in the first half of the 20th century to solve resource planning problems. Even though linear programming is insufficient for many modern-day applications in operations research, it was used extensively in economic and military contexts in the early days.

To motivate some key ideas in linear programming, we begin with a small example.

Example: A roadside stand sells lemonade and lemon juice. Each unit of lemonade requires 1 lemon and 2 litres of water to prepare, and each unit of lemon juice requires 3 lemons and 1 litre of water to prepare. Each unit of lemonade gives a profit of 3 dollars upon selling, and each unit of lemon juice gives a profit of 2 dollars upon selling. With 6 lemons and 4 litres of water available, how many units of lemonade and lemon juice should be prepared in order to maximize profit?

If we let $x$ and $y$ denote the number of units of lemonade and lemon juice, respectively, to prepare, then the profit is the objective function, given by $3x+2y$ dollars. Note that there are a number of constraints that $x$ and $y$ must satisfy:

$x$ and $y$ should be non-negative;

the number of lemons needed to make $x$ units of lemonade and $y$ units of lemon juice is $x+3y$ and cannot exceed 6;

the number of litres of water needed to make $x$ units of lemonade and $y$ units of lemon juice is $2x+y$ and cannot exceed 4.

Hence, to determine the maximum profit, we need to maximize $3x+2y$ subject to $x$ and $y$ satisfying the constraints $x+3y\le 6$, $2x+y\le 4$, $x\ge 0$, and $y\ge 0$. A more compact way to write the problem is as follows:

$$\begin{array}{rl} \max & 3x+2y \\ \text{s.t.} & x+3y\le 6 \\ & 2x+y\le 4 \\ & x\ge 0,\ y\ge 0 \\ & (x,y)\in\mathbb{R}^2 \end{array}$$

It is customary to omit the specification of the domain set in linear programming since the variables always take on real numbers. Hence, we can simply write

$$\begin{array}{rl} \max & 3x+2y \\ \text{s.t.} & x+3y\le 6 \\ & 2x+y\le 4 \\ & x\ge 0,\ y\ge 0 \end{array}$$

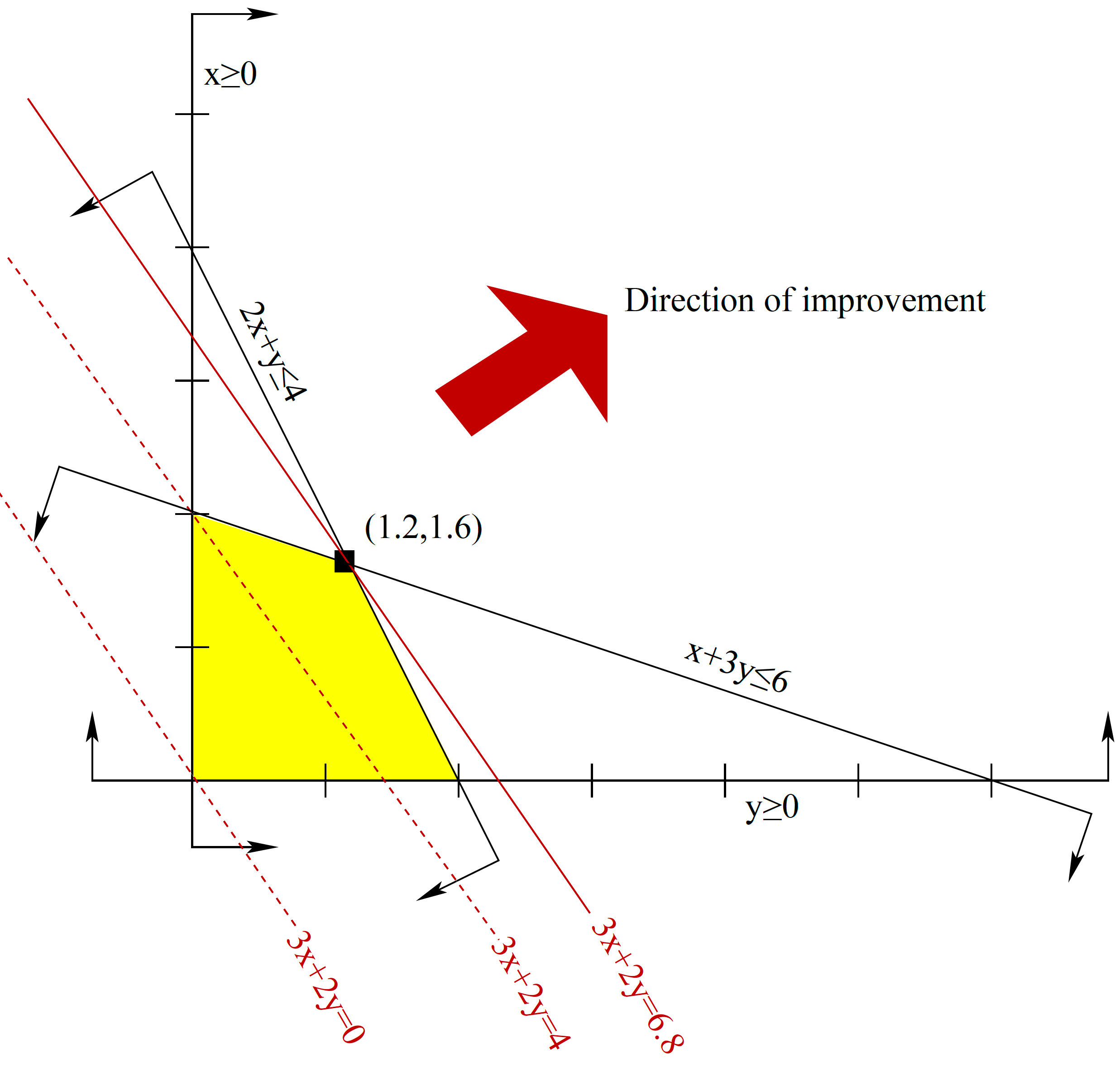

We can solve the above maximization problem graphically, as follows. We first sketch the set of $(x,y)$ satisfying the constraints, called the feasible region, on the $xy$-plane.

We then take the objective function $3x+2y$ and turn it into the equation of a line $3x+2y=z$, where $z$ is a parameter. Note that as the value of $z$ increases, the line defined by the equation $3x+2y=z$ moves in the direction of the normal vector $(3,2)$. We call this direction the direction of improvement. Determining the maximum value of the objective function, called the optimal value, subject to the constraints amounts to finding the maximum value of $z$ so that the line defined by the equation $3x+2y=z$ still intersects the feasible region.

Figure 4.5 shows the lines $3x+2y=z$ for several values of $z$.

Figure 4.5: Graphical solution for the lemonade and lemon juice optimization problem; the feasible region is shown in yellow, and level curves of the objective function in red.

We can see that if $z$ is greater than 6.8, the line defined by $3x+2y=z$ will not intersect the feasible region. Hence, the profit cannot exceed 6.8 dollars.

As the line $3x+2y=6.8$ does intersect the feasible region, $6.8$ is the maximum value for the objective function. Note that there is only one point in the feasible region that lies on the line $3x+2y=6.8$, namely $(x,y)=(1.2,1.6)$. In other words, to maximize profit, we want to prepare 1.2 units of lemonade and 1.6 units of lemon juice.
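The graphical argument can be mirrored in code. For a two-variable linear program with a bounded feasible region, an optimal solution sits at a corner of that region, so it suffices to intersect the constraint lines pairwise and compare the feasible intersection points. The sketch below does this in exact arithmetic; the names `vertices` and `best_vertex` are illustrative, and $x$, $y$ stand for the units of lemonade and lemon juice.

```python
from fractions import Fraction
from itertools import combinations

# Each constraint is (a, b, c), meaning a*x + b*y <= c.
CONSTRAINTS = [
    (1, 3, 6),   # lemons: x + 3y <= 6
    (2, 1, 4),   # water: 2x + y <= 4
    (-1, 0, 0),  # x >= 0
    (0, -1, 0),  # y >= 0
]


def vertices(constraints):
    """Intersect every pair of constraint lines and keep the feasible points."""
    points = set()
    for (a1, b1, c1), (a2, b2, c2) in combinations(constraints, 2):
        det = a1 * b2 - a2 * b1
        if det == 0:
            continue  # parallel lines never meet
        # Cramer's rule for the 2x2 system a1*x + b1*y = c1, a2*x + b2*y = c2
        x = Fraction(c1 * b2 - c2 * b1, det)
        y = Fraction(a1 * c2 - a2 * c1, det)
        if all(a * x + b * y <= c for a, b, c in constraints):
            points.add((x, y))
    return points


def best_vertex(constraints, profit=(3, 2)):
    """Return the feasible corner with the largest profit 3x + 2y."""
    return max(vertices(constraints), key=lambda p: profit[0] * p[0] + profit[1] * p[1])


x, y = best_vertex(CONSTRAINTS)
print(x, y, 3 * x + 2 * y)  # 6/5 8/5 34/5
```

Enumerating corners does not scale beyond tiny problems, which is why practical solvers rely on the methods discussed later in this module.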

This solution method can hardly be regarded as rigorous because we relied on a picture to conclude that $3x+2y\le 6.8$ for all $(x,y)$ satisfying the constraints. But we can also obtain this result algebraically.

Note that multiplying both sides of the constraint $x+3y\le 6$ by $0.2$ yields $$0.2x+0.6y\le 1.2,$$ and multiplying both sides of the constraint $2x+y\le 4$ by $1.4$ yields $$2.8x+1.4y\le 5.6.$$ Hence, any $(x,y)$ that satisfies both must also satisfy $$(0.2x+0.6y)+(2.8x+1.4y)\le 1.2+5.6,$$ which simplifies to $3x+2y\le 6.8$, as desired.
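The multipliers in such a proof need not be guessed. Writing $u$ and $v$ for the (hypothetical) multipliers of the lemon and water constraints, the combined inequality has left-hand side $3x+2y$ exactly when $u+2v=3$ and $3u+v=2$. A minimal sketch that solves this small system in exact arithmetic:

```python
from fractions import Fraction


def certificate_multipliers():
    """Solve u + 2v = 3 and 3u + v = 2 by Cramer's rule."""
    det = 1 * 1 - 2 * 3                 # determinant of [[1, 2], [3, 1]]
    u = Fraction(3 * 1 - 2 * 2, det)    # multiplier for x + 3y <= 6
    v = Fraction(1 * 2 - 3 * 3, det)    # multiplier for 2x + y <= 4
    return u, v


u, v = certificate_multipliers()
bound = 6 * u + 4 * v  # right-hand side of the combined inequality
print(u, v, bound)     # 1/5 7/5 34/5
```

Both multipliers are non-negative, which is what allows the two inequalities to be scaled and added without reversing their direction.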

It is always possible to find an algebraic proof like the one above for linear programming problems, which adds to their appeal. To describe the full result, it is convenient to call on duality, a central notion in mathematical optimization.

4.4.1 Linear Programming Duality

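Let (P) denote the following linear programming problem:

$$\begin{array}{rl} \min & c^\top x \\ \text{s.t.} & Ax\ge b \end{array}$$

where $A\in\mathbb{R}^{m\times n}$, $b\in\mathbb{R}^m$, and $c\in\mathbb{R}^n$ (inequality on tuples is applied component-wise).

Then for every $y\in\mathbb{R}^m$ with $y\ge 0$ (that is, all components of $y$ are non-negative), the inferred inequality $y^\top Ax\ge y^\top b$ is valid for all $x$ satisfying $Ax\ge b$.

Furthermore, if $y^\top A=c^\top$, the inferred inequality becomes $c^\top x\ge y^\top b$, making $y^\top b$ a lower bound on the optimal value of (P). To obtain the largest possible bound, we can solve

$$\begin{array}{rl} \max & y^\top b \\ \text{s.t.} & y^\top A=c^\top \\ & y\ge 0 \end{array}$$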

This problem is called the dual problem of (P), and (P) is called the primal problem. A remarkable result relating (P) and its dual (P’) is the Duality Theorem for Linear Programming: if (P) has an optimal solution, then so does its dual problem (P’), and the optimal values of the two problems are the same.

A weaker result follows easily from the discussion above: the objective function value of a feasible solution to the dual problem (P’) is a lower bound on the objective function value of a feasible solution to (P). This result is known as weak duality. Despite the fact that it is a simple result, its significance in practice cannot be overlooked because it provides a way to gauge the quality of a feasible solution to (P).

For example, suppose we have at hand a feasible solution to (P) with objective function value 3 and a feasible solution to the dual problem (P’) with objective function value 2. Then we know that the objective function value of our current solution to (P) is within $3/2=1.5$ times the actual optimal value since the optimal value cannot be less than $2$.

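In general, a linear programming problem can have a more complicated form. Let $A\in\mathbb{R}^{m\times n}$, $b\in\mathbb{R}^m$, $c\in\mathbb{R}^n$. Let $a^{(i)\top}$ denote the $i$th row of $A$, $A_j$ denote the $j$th column of $A$, and (P) denote the minimization problem, with variables in the tuple $x=(x_1,\ldots,x_n)$, given as follows:

the objective function to be minimized is $c^\top x$;

the constraints are $a^{(i)\top}x\ \sim_i\ b_i$, where $\sim_i$ is $\le$, $\ge$, or $=$ for $i=1,\ldots,m$, and

for each $j\in\{1,\ldots,n\}$, $x_j$ is constrained to be non-negative, non-positive, or free.

Then the dual problem (P’) is defined to be the maximization problem, with variables in the tuple $y=(y_1,\ldots,y_m)$, given as follows:

the objective function to be maximized is $y^\top b$;

for $j=1,\ldots,n$, the $j$th constraint is $y^\top A_j\le c_j$ if $x_j$ is constrained to be non-negative, $y^\top A_j\ge c_j$ if $x_j$ is constrained to be non-positive, and $y^\top A_j=c_j$ if $x_j$ is free;

and for each $i\in\{1,\ldots,m\}$, $y_i$ is constrained to be non-negative if $\sim_i$ is $\ge$; $y_i$ is constrained to be non-positive if $\sim_i$ is $\le$; $y_i$ is free if $\sim_i$ is $=$.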

The following table can help remember the correspondences:

| Primal (min) | Dual (max) |
|---|---|
| $\ge$ constraint | $\ge 0$ variable |
| $\le$ constraint | $\le 0$ variable |
| $=$ constraint | free variable |
| $\ge 0$ variable | $\le$ constraint |
| $\le 0$ variable | $\ge$ constraint |
| free variable | $=$ constraint |

Below is an example of a primal-dual pair of problems based on the above definition.

Consider the primal problem: Here, , , and .

Since the primal problem has three constraints, the dual problem has three variables:

the first constraint in the primal is an equation, so the corresponding variable in the dual is free;

the second constraint in the primal is a $\ge$-inequality, so the corresponding variable in the dual is non-negative;

the third constraint in the primal is a $\le$-inequality, so the corresponding variable in the dual is non-positive.

Since the primal problem has three variables, the dual problem has three constraints:

the first variable in the primal is non-negative, so the corresponding constraint in the dual is a $\le$-inequality;

the second variable in the primal is free, so the corresponding constraint in the dual is an equation;

the third variable in the primal is non-positive, so the corresponding constraint in the dual is a $\ge$-inequality.

Hence, the dual problem is:

In some references, the primal problem is always a maximization problem – in that case, what we have considered to be a primal problem is their dual problem and vice-versa.

Note that the Duality Theorem for Linear Programming remains true for the more general definition of the primal-dual pair of linear programming problems.

4.4.2 Methods for Solving Linear Programming Problems

There are currently two families of methods used by modern-day linear programming solvers: simplex methods and interior-point methods.

We will not get into the technical details of these methods, except to say that the algorithms in either family are iterative, that there is no known simplex method that runs in polynomial time, but efficient polynomial-time interior-point methods abound in practice. We might wonder why anyone would still use simplex methods, given that they are not polynomial-time methods: simply put, simplex methods are in general more memory-efficient than interior-point methods, and they tend to return solutions that have few nonzero entries.

More concretely, suppose that we want to solve the following problem:

$$\begin{array}{rl} \min & c^\top x \\ \text{s.t.} & Ax=b \\ & x\ge 0 \end{array}$$

For ease of exposition, we assume that $A$ has full row rank. Then, each iteration of a simplex method maintains a current solution $x$ that is basic, in the sense that the columns of $A$ corresponding to the nonzero entries of $x$ are linearly independent. In contrast, interior-point methods will maintain $x>0$ throughout (whence the name “interior point”).

When we use an off-the-shelf linear programming solver, the choice of method is usually not too important since solvers have good default settings. Simplex methods are typically used in settings where a problem needs to be re-solved after minor changes in the problem data, or in problems with the additional integrality constraints discussed in the next section.

4.5 Mixed-Integer Linear Programming

While the simplicity of linear programming (and duality) makes it an appealing tool, its modeling power is insufficient in many real-life applications (for example, there is no simple linear programming formulation of the BKP).

Fortunately, allowing the domain set to restrict one or more variables to integer values drastically extends the modeling power. The price we pay is that there is no guarantee that the problems can be solved in polynomial time.

Example: Recall the lemonade and lemon juice problem introduced in the previous section: there is a unique optimal solution at $(x,y)=(1.2,1.6)$ for a profit of $6.8$.

But this solution requires the preparation of fractional units of lemonade and lemon juice. What if the number of prepared units needs to be integers?

The solution is to add integrality constraints:

$$\begin{array}{rl} \max & 3x+2y \\ \text{s.t.} & x+3y\le 6 \\ & 2x+y\le 4 \\ & x\ge 0,\ y\ge 0 \\ & x,y\in\mathbb{Z} \end{array}$$

This problem is no longer a linear programming problem; rather, it is an integer linear programming problem. Note that we can solve this problem via a case analysis. The second and third inequalities tell us that the possible values for $x$ are $0$, $1$, and $2$.

If $x=0$, the first inequality gives $3y\le 6$, implying that $y\le 2$. Since we are maximizing $3x+2y$, we want $y$ to be as large as possible; $(x,y)=(0,2)$ satisfies all the constraints with an objective function value of $4$.

If $x=1$, the first inequality gives $3y\le 5$, implying that $y\le 1$. Note that $(x,y)=(1,1)$ satisfies all the constraints with an objective function value of $5$.

If $x=2$, the second inequality gives $y\le 0$. Note that $(x,y)=(2,0)$ satisfies all the constraints with an objective function value of $6$.

Thus, $(x,y)=(2,0)$ is an optimal solution. How does this compare to the solution of the LP problem of the previous section, both in terms of location of the solution and value of the objective function?
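The case analysis amounts to enumerating a small grid of integer points, which is easy to sketch in code. The function name `solve_integer_lemonade` is illustrative, and $x$, $y$ again stand for the units of lemonade and lemon juice.

```python
def solve_integer_lemonade():
    """Enumerate integer points and return (profit, x, y) for the best feasible one."""
    best = None
    for x in range(0, 3):      # 2x + y <= 4 with y >= 0 forces x in {0, 1, 2}
        for y in range(0, 3):  # x + 3y <= 6 with x >= 0 forces y in {0, 1, 2}
            if x + 3 * y <= 6 and 2 * x + y <= 4:
                candidate = (3 * x + 2 * y, x, y)
                if best is None or candidate > best:
                    best = candidate
    return best


print(solve_integer_lemonade())  # (6, 2, 0)
```

The integer optimum $(2,0)$ is not obtained by rounding the fractional optimum $(1.2,1.6)$: rounding down gives $(1,1)$ with profit 5, and rounding up gives the infeasible point $(2,2)$.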

A mixed-integer linear programming problem (MILP) is a problem of minimizing or maximizing a linear function of finitely many variables subject to finitely many linear constraints, with at least one of the variables required to take on integer values.

If all the variables are required to take on integer values, the problem is called a pure integer linear programming problem or simply an integer linear programming problem. Normally, we assume the problem data to be rational numbers to rule out pathological cases.

Many solution methods for solving MILPs have been devised and some of them first solve the linear programming relaxation of the original problem, which is the problem obtained from the original problem by dropping all the integrality requirements on the variables.

For instance, if (MP) denotes the following mixed-integer linear programming problem: then the linear programming relaxation (P1) of (MP) is:

Observe that the optimal value of (P1) is a lower bound for the optimal value of (MP) since the feasible region of (P1) contains all the feasible solutions to (MP), thus making it possible to find a feasible solution to (P1) with objective function value which is better than the optimal value of (MP).

Hence, if an optimal solution to the linear programming relaxation happens to be a feasible solution to the original problem, then it is also an optimal solution to the original problem. Otherwise, there is an integer variable having a nonintegral value $v$.

What we then do is to create two new sub-problems as follows:

one requiring the variable to be at most the greatest integer less than $v$,

the other requiring the variable to be at least the smallest integer greater than $v$.

This is the basic idea behind the branch-and-bound method. We now illustrate these ideas on (MP). Solving the linear programming relaxation (P1), we find that is an optimal solution to (P1). Note that is not a feasible solution to (MP) because is not an integer. We now create two sub-problems (P2) and (P3).

(P2) is obtained from (P1) by adding the constraint and (P3) is obtained from (P1) by adding the constraint Hence, (P2) is the problem and (P3) is the problem

Note that any feasible solution to (MP) must be a feasible solution to either (P2) or (P3). Using the help of a solver, one can see that (P2) is infeasible. The problem (P3), however, has an optimal solution at , which is also feasible for (MP). Hence, is an optimal solution of (MP).

In many instances, there are multiple choices for the variable on which to branch, and for which sub-problem to solve next. These choices can have an impact on the total computation time. Unfortunately, there are no hard-and-fast rules at the moment to determine the best branching path. This is an area of ongoing research.

4.5.1 Cutting Planes

Difficult MILP problems often cannot be solved by branch-and-bound methods alone. A technique that is typically employed in solvers is to add valid inequalities to strengthen the linear programming relaxation.

Such inequalities, known as cutting planes, are known to be satisfied by all the feasible solutions to the original problem but not by all the feasible solutions to the initial linear programming relaxation.

Example: consider the following PILP problem:

An optimal solution to the linear programming relaxation is given by Note that adding the inequalities and yields , or equivalently, Since is an integer for every feasible solution , is a valid inequality for the original problem, but is violated by . Hence, is a cutting plane.

Adding this to the linear programming relaxation, we have which, upon solving, yields as an optimal solution.

Since all the entries are integers, this is also an optimal solution to the original problem. In this example, adding a single cutting plane solved the problem. In practice, one often needs to add numerous cutting planes and then continue with branch-and-bound to solve nontrivial MILP problems.

Many methods for generating cutting planes exist – the problem of generating effective cutting planes efficiently is still an active area of research [3].

4.6 A Sample of Useful Modeling Techniques

So far, we have discussed the kinds of optimization problems that can be solved and certain methods available for solving them. Practical success, however, depends upon the effective translation and formulation of a problem description into a mathematical programming problem, which often turns out to be as much an art as it is a science.

We will not be discussing formulation techniques in detail (see [4] for a deep dive into the topic) – instead, we highlight modeling techniques that often arise in business applications, which our examples have not covered so far.

4.6.1 Activation

Sometimes, we may want to set a binary variable $y$ to 1 whenever some other variable $x$ is positive. Assuming that $x$ is bounded above by $M$, the inequality $x \leq My$ will model the condition. Note that if there is no valid upper bound on $x$, the condition cannot be modeled using a linear constraint.
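The activation condition can be checked by brute force on a small range. In this minimal sketch, the names `x` (the bounded variable), `y` (the binary variable) and `M` (the upper bound) are labels assumed here, and the constraint is taken to be x ≤ M·y with x restricted to non-negative integers for the enumeration.

```python
def satisfies_activation(x, y, M):
    """True if (x, y) satisfies 0 <= x <= M, y in {0, 1}, and x <= M * y."""
    return 0 <= x <= M and y in (0, 1) and x <= M * y

M = 5  # assumed upper bound on x
feasible = [(x, y) for x in range(M + 1) for y in (0, 1)
            if satisfies_activation(x, y, M)]

# Whenever x is positive, every feasible pair has y == 1.
forced_on = all(y == 1 for x, y in feasible if x > 0)
print(feasible, forced_on)
```

Note that the constraint only forces the binary variable to 1 when the other variable is positive; when that variable is 0, both values of the binary variable remain feasible, so it is usually the objective (for example, a fixed cost on the binary variable) that drives it to 0.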

4.6.2 Disjunction

Sometimes, we want to satisfy at least one of a list of inequalities; that is, To formulate such a disjunction using linear constraints, we assume that, for , there is a lower bound on for all . Note that such bounds automatically exist when is a bounded set, which is often the case in applications.

The disjunction can now be formulated as the following system where is a new 0-1 variable for :

Note that reduces to when , and to when , which holds for all

Therefore, is an activation for the constraint, and at least one is activated because of the constraint
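One way to write out the construction described above is sketched below. The notation is an assumption made here: the $i$-th inequality is written $a_i^{\mathsf T} x \ge b_i$ for $i = 1, \ldots, m$, $D$ denotes the set over which $x$ ranges, $M_i$ is a lower bound on $a_i^{\mathsf T} x$ over $D$, and $y_i$ is the new 0-1 variable.

```latex
\begin{align*}
a_i^{\mathsf T} x &\ge b_i\, y_i + M_i\,(1 - y_i), & i &= 1, \ldots, m,\\
\sum_{i=1}^{m} y_i &\ge 1,\\
y_i &\in \{0, 1\}, & i &= 1, \ldots, m.
\end{align*}
```

With $y_i = 1$ the first constraint becomes $a_i^{\mathsf T} x \ge b_i$, and with $y_i = 0$ it becomes $a_i^{\mathsf T} x \ge M_i$, which is satisfied by every $x \in D$ by the choice of $M_i$.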

4.6.3 Soft Constraints

Sometimes, we may be willing to pay a price to allow specific constraints to be violated (perhaps they represent “nice-to-have” conditions instead of “must-be-met” conditions). Such constraints are referred to as soft constraints.

There are situations in which having soft constraints is advisable, say when enforcing all constraints results in an infeasible problem, but a solution is nonetheless needed.

We illustrate the idea on a modified BKP. As usual, there are items and item has weight and value for . The capacity of the knapsack is denoted by . Suppose that we prefer not to take more than items, but that the preference is not an actual constraint.

We assign a penalty for its violation and use the following formulation:

Here, is a non-negative number of our choosing. As we are maximizing is pushed towards 0 when is relatively large. Therefore, the problem will be biased towards solutions that try to violate as little as possible.

Experimentation is required to determine what value to select for ; the general rule is that if violation is costly in practice, we should set to be (relatively) high; otherwise, we set it to a moderate value relative to the coefficients of the variables in the objective function.

Note that when , the constraint has no effect because can take on any positive value without incurring a penalty.
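The effect of the penalty can be seen on a tiny instance solved by enumeration. This is a minimal sketch under assumptions: the data, the function name `soft_knapsack`, and the parameter names (`max_items` for the preferred item limit, `penalty` for the price per excess item) are all hypothetical, and the excess is modeled as the number of items taken beyond the preferred limit.

```python
from itertools import product

def soft_knapsack(weights, values, capacity, max_items, penalty):
    """Brute-force a binary knapsack with a soft limit on the item count.

    Objective: total value minus penalty * excess, where excess is the
    number of items taken beyond max_items. Because we are maximizing,
    the smallest admissible excess, max(0, count - max_items), is optimal.
    Returns (best objective, best selection as a 0-1 tuple).
    """
    best_obj, best_x = None, None
    for x in product((0, 1), repeat=len(weights)):
        if sum(w * xi for w, xi in zip(weights, x)) > capacity:
            continue  # the capacity constraint stays hard
        excess = max(0, sum(x) - max_items)
        obj = sum(v * xi for v, xi in zip(values, x)) - penalty * excess
        if best_obj is None or obj > best_obj:
            best_obj, best_x = obj, x
    return best_obj, best_x

# Hypothetical data: four items that all fit, preferred limit of two items.
weights, values, capacity, max_items = [3, 2, 2, 1], [9, 5, 4, 2], 8, 2

no_penalty = soft_knapsack(weights, values, capacity, max_items, penalty=0)
moderate = soft_knapsack(weights, values, capacity, max_items, penalty=3)
high = soft_knapsack(weights, values, capacity, max_items, penalty=10)
print(no_penalty, moderate, high)
```

With no penalty the preference is ignored and all four items are taken; a moderate penalty tolerates one extra item because its value still outweighs the price; a high penalty makes the soft constraint behave like a hard one.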

4.7 Software Solvers

A wide variety of solvers exist for all kinds of optimization problems. The NEOS Server is a free online service that hosts many solvers and is a great resource for experimenting with different solvers on small problems.

For large or computationally challenging problems, it is advisable to use a solver installed on a dedicated private machine/server. Commercial solvers can also prove useful:

There are popular open-source solvers as well, although they are not as powerful as the commercial tools:

We mention in passing that learning how to use any of these solvers effectively requires a significant time investment. In addition, it is common to build optimization models using a modeling system such as GAMS or LINDO, or a modeling language such as AMPL, ZIMPL, or JuMP.

Note that in the data science and machine learning context, more straightforward methods like gradient descent, stochastic gradient descent and Newton’s method are usually sufficient for most applications.

References

[1] D. Bertsimas and J. Tsitsiklis, Introduction to linear optimization, 1st ed. Athena Scientific, 1997.

[2] D. P. Bertsekas, Nonlinear programming. Athena Scientific, 1999.

[3] G. Cornuéjols, “Valid inequalities for mixed integer linear programs,” Math. Program., vol. 112, no. 1, pp. 3–44, Mar. 2008. Available: http://dblp.uni-trier.de/db/journals/mp/mp112.html#Cornuejols08

[4] H. P. Williams, “Model building in linear and integer programming,” in Computational mathematical programming, 1985, pp. 25–53.

Strictly speaking, differentiability is not required on the entirety of .