Module 16 Machine Learning 101

Data is not information, information is not knowledge, knowledge is not understanding, understanding is not wisdom. [C. Stoll (attributed), Nothing to Hide: Privacy in the 21st Century, 2006]

One of the challenges of working in the data science (DS), machine learning (ML) and artificial intelligence (AI) fields is that nearly all quantitative work can be described with some combination of the terms DS/ML/AI (often to a ridiculous extent).

Robinson [74] suggests that their relationships follow an inclusive hierarchical structure:

in a first stage, DS provides “insights” via visualization and (manual) inferential analysis;

in a second stage, ML yields “predictions” (or “advice”), while reducing the operator’s analytical, inferential and decisional workload (although it is still present to some extent), and

in the final stage, AI removes the need for oversight, allowing for automatic “actions” to be taken by a completely unattended system.

The goals of artificial intelligence are laudable in an academic setting, but in practice, we believe that stakeholders should not seek to abdicate all of their agency in the decision-making process; as such, we follow the lead of various thinkers and suggest further splitting AI into “general AI” (which we will not be pursuing) and “augmented intelligence” (which can be viewed as ML “on steroids”).

With this in mind, our definition of the DS/ML/AI approach is that it consists of quantitative processes (what H. Mason has called “the working intersection of statistics, engineering, computer science, domain expertise, and”hacking" [75]) that can help users learn actionable insights about their situation without completely abdicating their decision-making responsibility.

In this module, we will take a brief look at:

the fundamentals of data science;

supervised learning and classification, with a focus on decision trees and naïve Bayes classifiers;

unsupervised learning and clustering, with a focus on means and hierarchical clustering, and

some of the common issues and challenges encountered during the data science and machine learning process.

Later modules will discuss other technical aspects of machine learning. The interested reader can obtain more information from [76], [85], [87], [128], [129].

16.1 Statistical Learning

We learn from failure, not from success! [B. Stoker, Dracula]

As humans, we learn (at all stages) by first taking in our environment, and then by:

answering questions about it;

testing hypotheses;

creating concepts;

making predictions;

creating categories, and

classifying and grouping its various objects and attributes.

In a way, the main concept of DS/ML/AI is to try to teach our machines (and thus, ultimately, ourselves) to gleam insight from data, and how to do this properly and efficiently, free of biases and pre-conceived notions – in other words, can we design algorithms that can learn?51

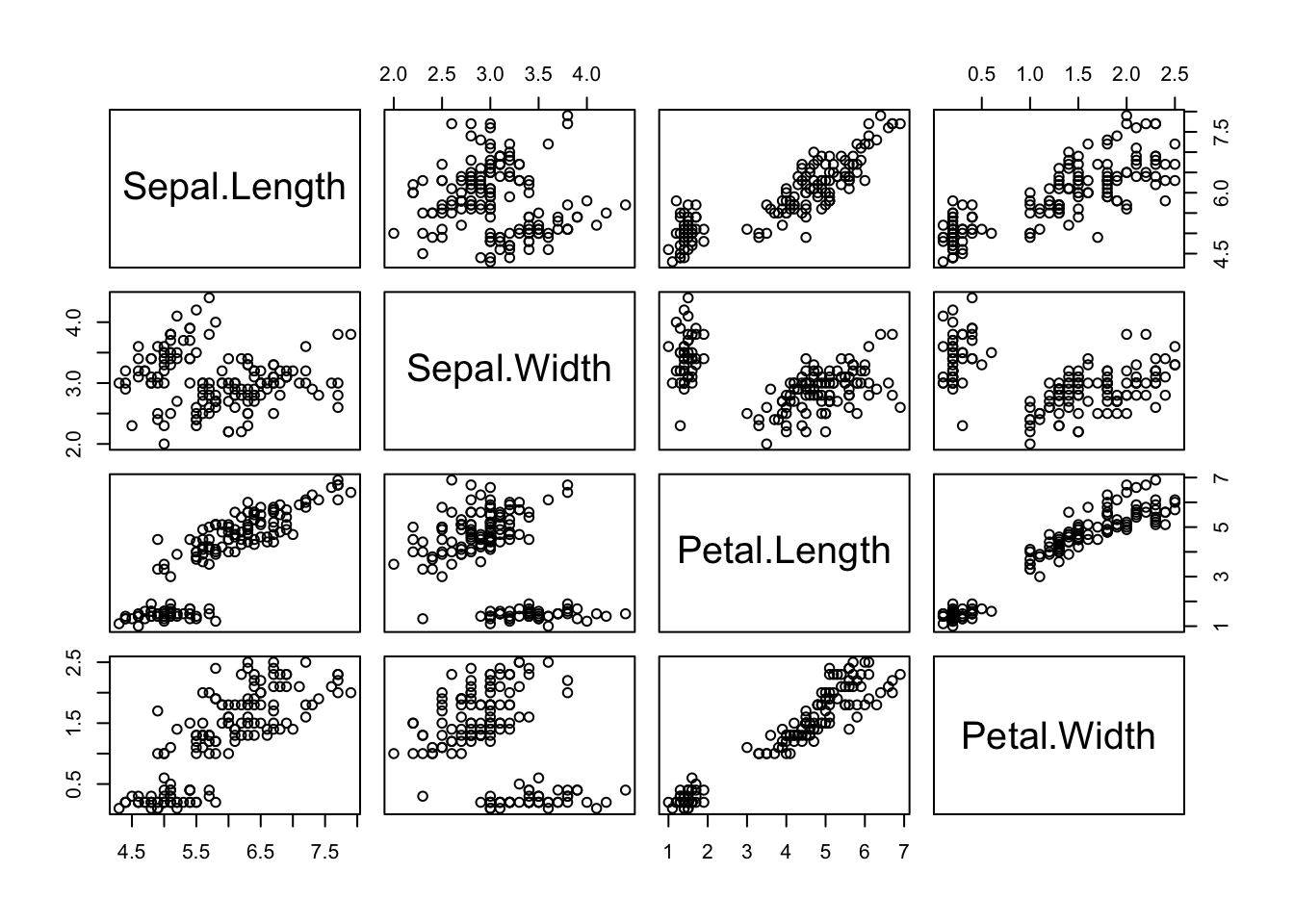

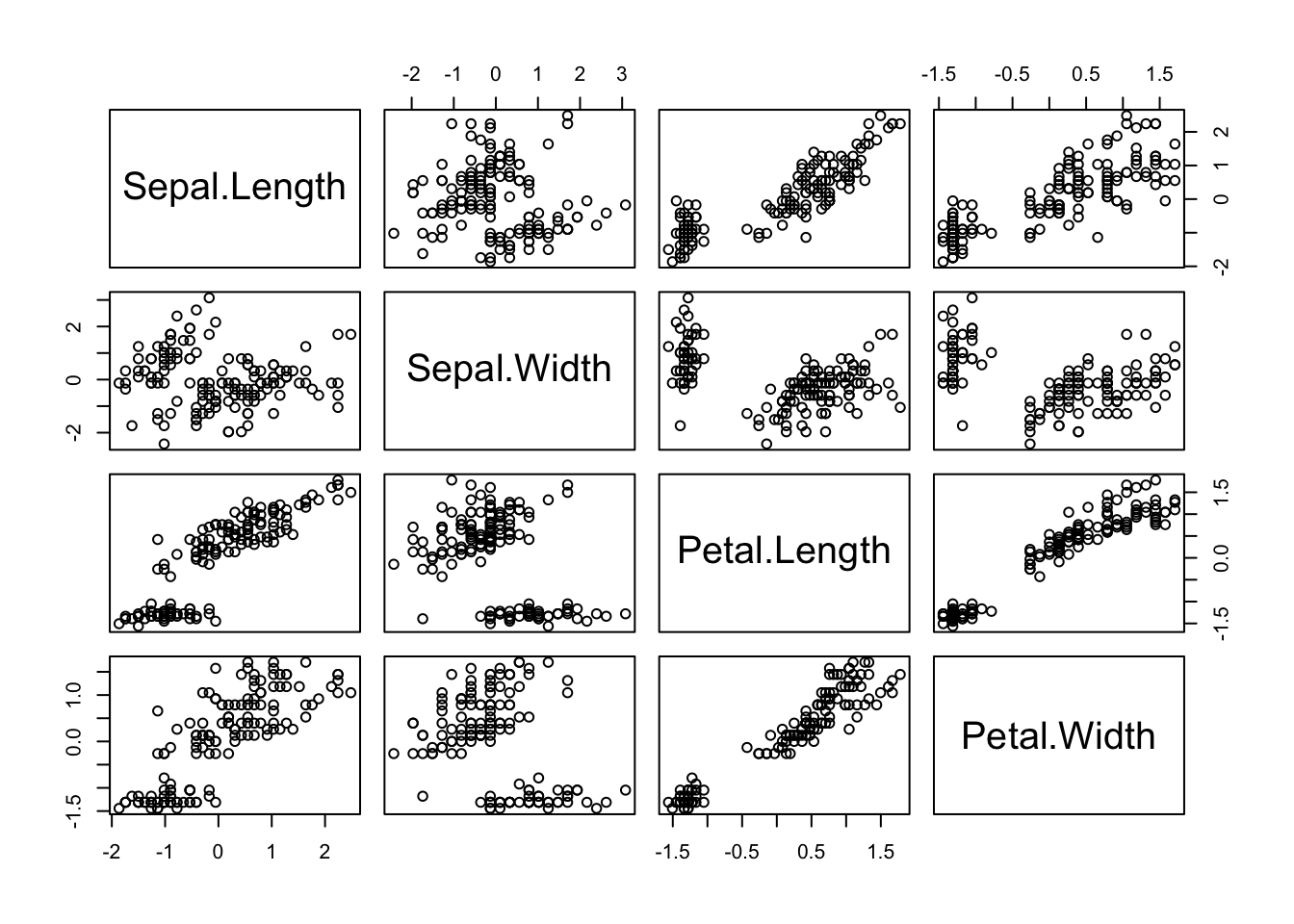

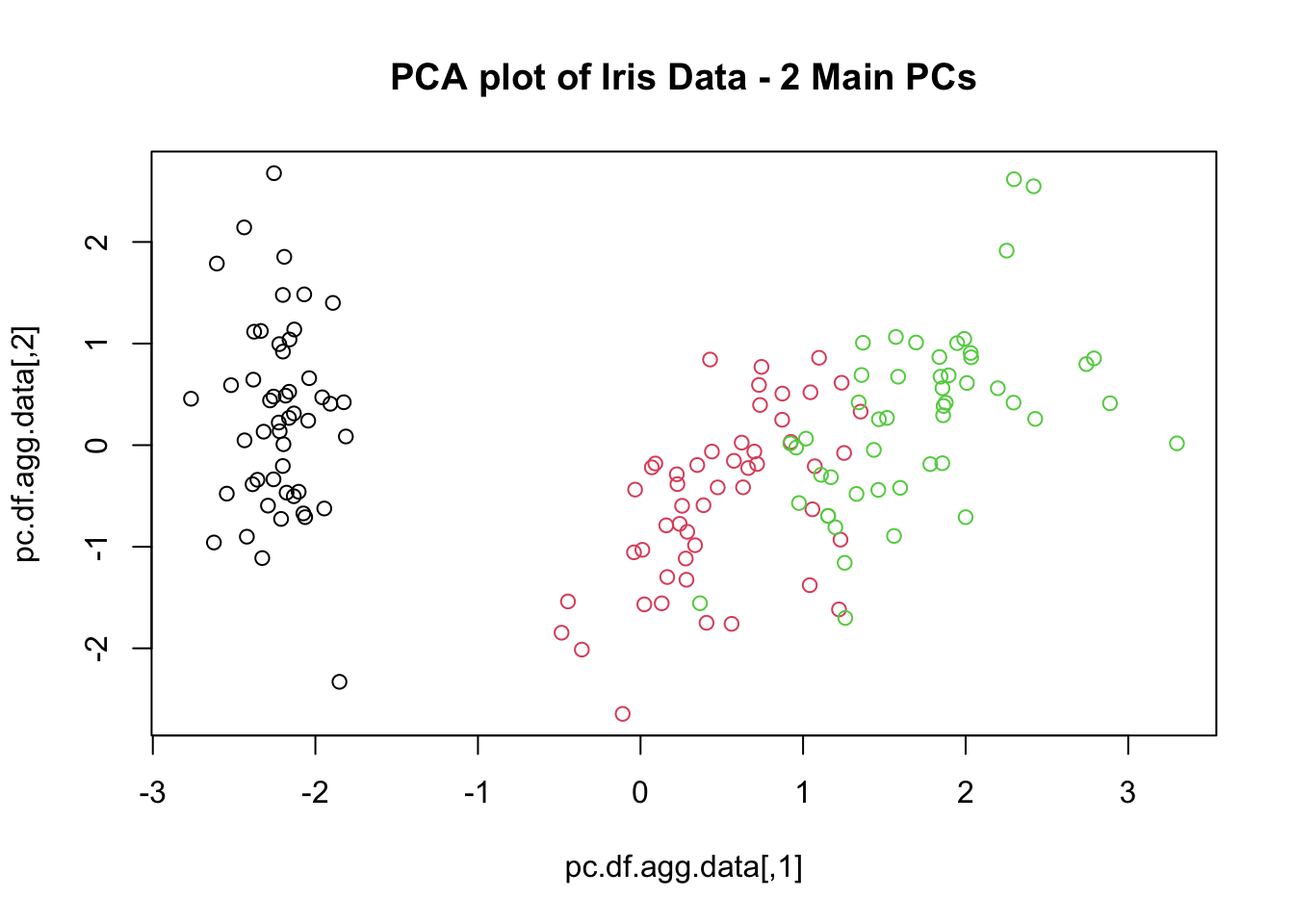

In that context, the simplest DS/ML/AI method is exploring the data (or a representative sample) to:

provide a summary through basic statistics – mean, mode, histograms, etc.;

make its multi-dimensional structure evident through data data visualization; and

look for consistency, considering what is in there and what is missing.

16.1.1 Types of Learning

In the data science context, more sophisticated approaches traditionally fall into a supervised or an unsupervised learning framework.

Supervised Learning

Supervised learning is akin to “learning with a teacher.” Typical tasks include classification, regression, rankings, and recommendations.

In supervised learning, algorithms use labeled training data to build (or train) a predictive model (i.e. “students give an answer to each exam question based on what they learned from worked-out examples provided by the teacher/textbook”); each algorithm’s performance is evaluated using test data for which the label is known but not used in the prediction (i.e. “the teacher provides the correct answers and marks the exam questions using the key”.)

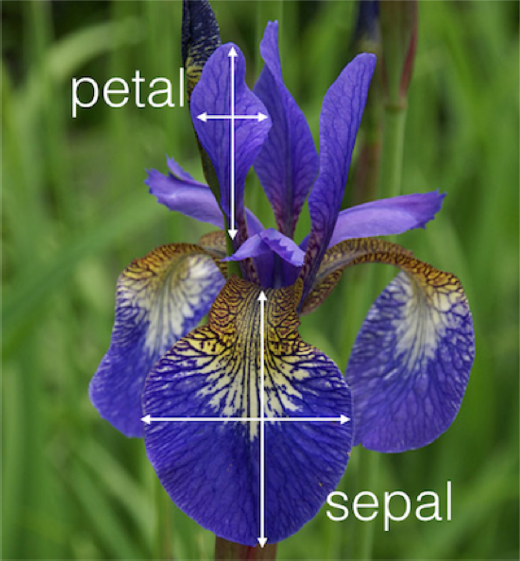

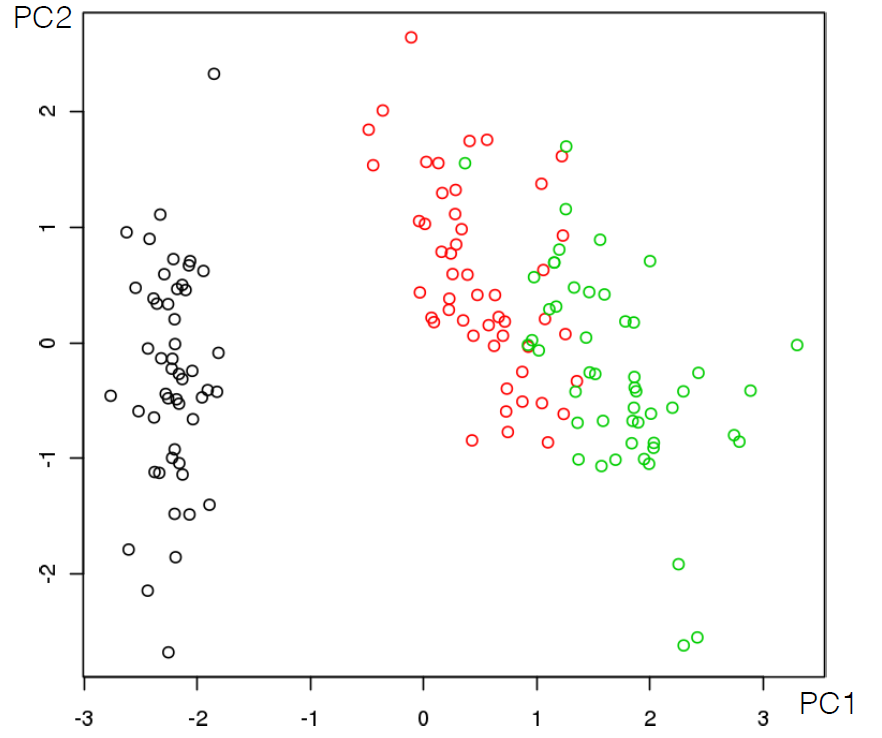

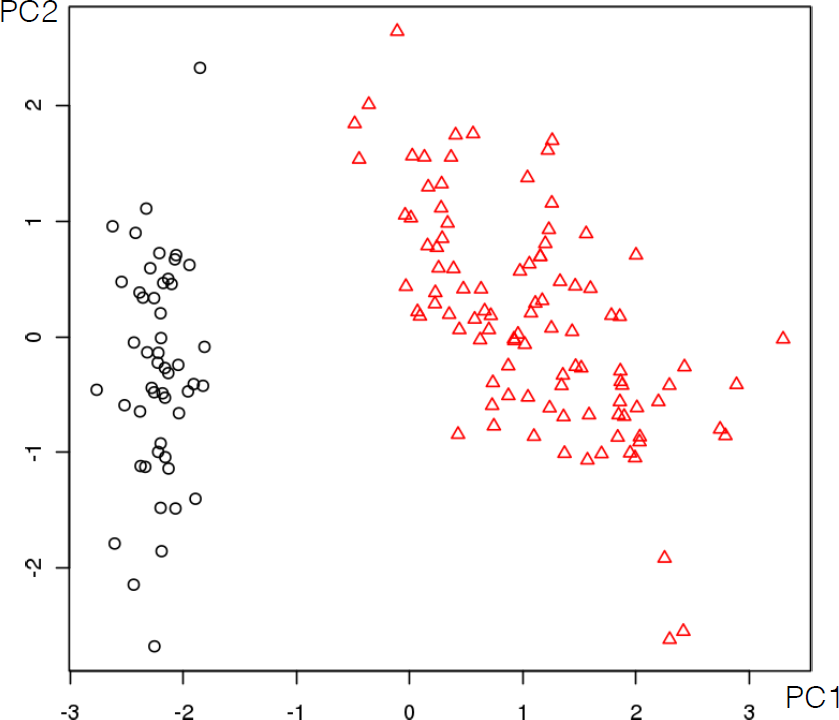

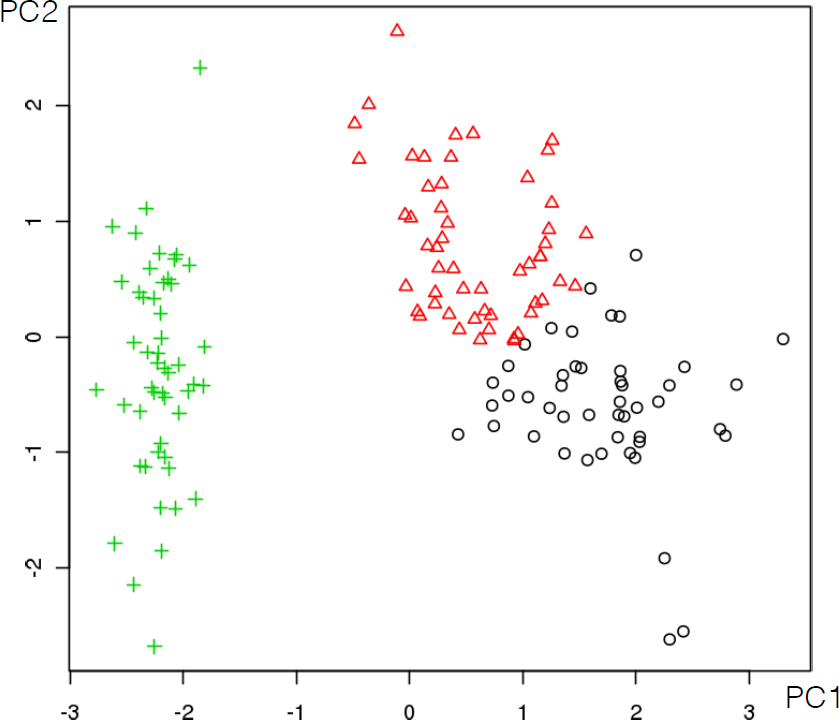

In supervised learning, there are fixed targets against which to train the model (such as age categories, or plant species) – the categories (and their number) are known prior to the analysis.

Unsupervised Learning

Unsupervised learning, on the other hand, is akin to “self-learning by grouping similar exercises together as a study guide.” Typical tasks include clustering, association rules discovery, link profiling, and anomaly detection. Unsupervised algorithms use unlabeled data to find natural patterns in the data (i.e. “the teacher is not involved in the discovery process”); the drawback is that accuracy cannot be evaluated with the same degree of satisfaction (i.e. “students might end up with different groupings”).

In unsupervised learning, we don’t know what the target is, or even if there is one – we are simply looking for natural groups in the data (such as junior students who like literature, have longish hair, and know how to cook vs. students who are on a sports team and have siblings vs. financial professionals with a penchant for superhero movies, craft beer and Hello Kitty backpack vs. … ).

Other Learning Frameworks

Some data science techniques fit into both camps; others can be either supervised or unsupervised, depending on how they are applied, but there are other conceptual approaches, especially for AI tasks:

semi-supervised learning in which some data points have labels but most do not, which often occurs when acquiring data is costly (“the teacher provides worked-out examples and a list of unsolved problems to try out; the students try to find similar groups of unsolved problems and compare them with the solved problems to find close matches”), and

reinforcement learning, where an agent attempts to collect as much (short-term) reward as possible while minimizing (long-term) regret (“embarking on a Ph.D. with an advisor… with all the highs and the lows and maybe a diploma at the end of the process?”).

16.1.2 Data Science and Machine Learning Tasks

Outside of academia, DS/ML/AI methods are only really interesting when they help users ask and answer useful questions. Compare, for instance:

Analytics – “How many clicks did this link get?”

Data Science – “Based on the previous history of clicks on links of this publisher’s site, can I predict how many people from Manitoba will read this specific page in the next three hours?” or “Is there a relationship between the history of clicks on links and the number of people from Manitoba who will read this specific page?”

Quantitative Methods – “We have no similar pages whose history could be consulted to make a prediction, but we have reasons to believe that the number of hits will be strongly correlated with the temperature in Winnipeg. Using the weather forecast over the next week, can we predict how many people will access the specific page during that period?”

Data science and machine learning models are usually predictive (not explanatory): they show connections, and exploit correlations to make predictions, but they don’t reveal why such connections exist.

Quantitative methods, on the other hand, usually assume a certain level of causal understanding based on various first principles. That distinction is not always understood properly by clients and consultants alike. Common data science tasks (with representative questions) include [77]:

classification and probability estimation – which undergraduates are likely to succeed at the graduate level?

value estimation – how much is a given client going to spend at a restaurant?

similarity matching – which prospective clients are most similar to a company’s establishes best clients?

clustering – do signals from a sensor form natural groups?

association rules discovery – what books are commonly purchased together at an online retailer?

profiling and behaviour description – what is the typical cell phone usage of a certain customer’s segment?

link prediction – J. and K. have 20 friends in common: perhaps they’d be great friends?

A classic example is provided by the UCI Machine Learning Repository Mushroom Dataset [130]. Consider Amanita muscaria (commonly known as the fly agaric), a specimen of which is shown below.

Figure 16.1: Amanita muscaria (fly agaric), in the wild. Does it look dangerous to you?

Is it edible, or poisonous? There is a simple way to get an answer – eat it, wait, and see: if you do not die or get sick upon ingestion, it was edible; otherwise it was poisonous.

This test in unappealing for various reasons, however. Apart from the obvious risk of death, we might not learn much from the experiment; it is possible that this specific specimen was poisonous due to some mutation or some other factor (or that you had a pre-existing condition which combined with the fungus to cause you discomfort, etc.), and that fly agaric is actually edible in general (unlikely, but not impossible).

A predictive model, which would use features of a vast collection of mushroom species and specimens (including whether they were poisonous or edible) could help shed light on the matter: what do poisonous mushrooms have in common? What properties do edible mushrooms share?52

For instance, let’s say that Amanita muscaria has the following features:

habitat: woods;

gill size: narrow;

spores: white;

odor: none;

cap color: red.

We do not know a priori whether it is poisonous or edible. Is the available information sufficient to answer the question? Not on its own, no.53

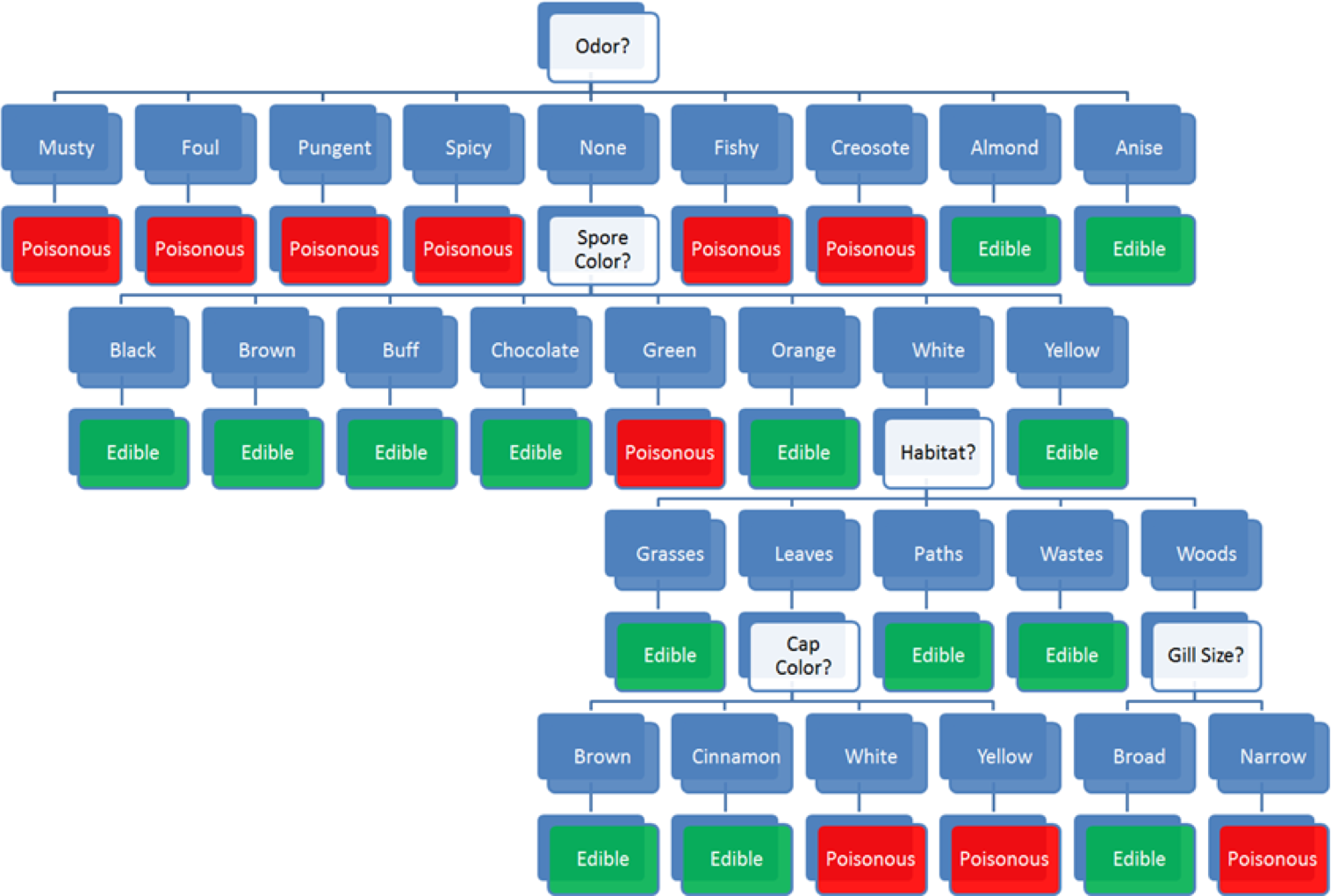

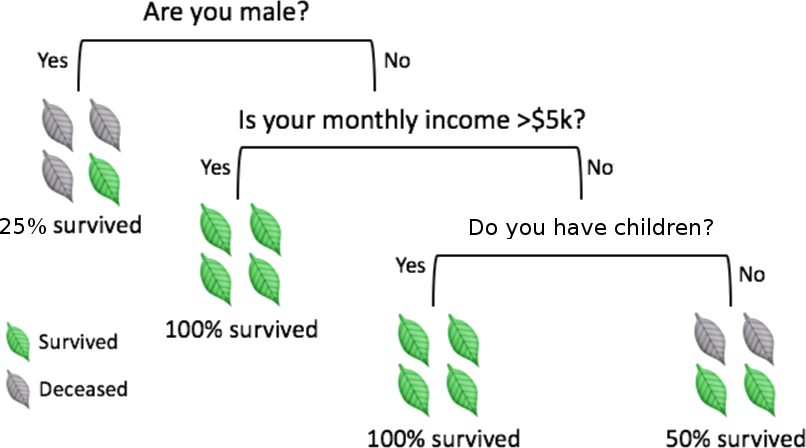

But we could use past data, with correct edible or poisonous labels and the same set of predictors to build various supervised classification models to attempt to answer the question. A simple such model, a decision tree, is shown on the left in Figure @(fig:DT-mushroom1).

Figure 16.2: Decision tree for the mushroom classification problem [author unknown].

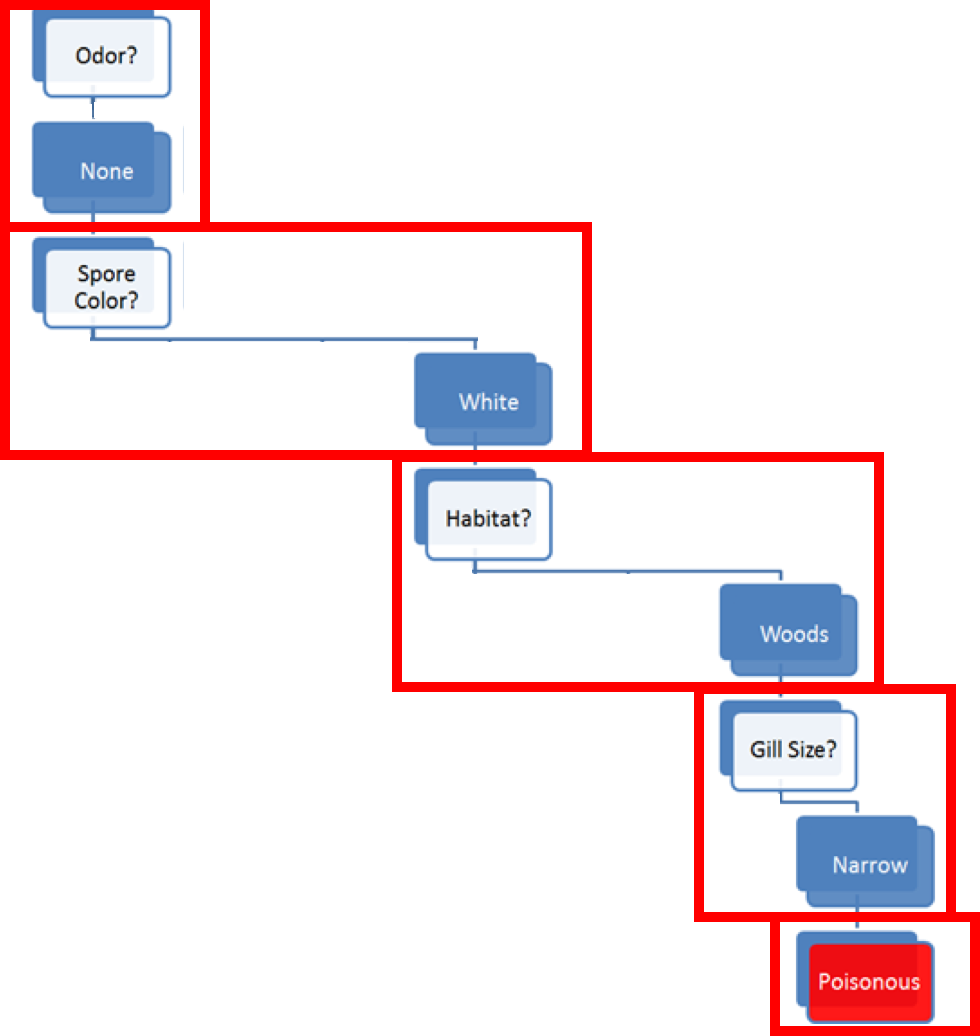

The model prediction for Amanita muscaria follows the decision path shown in Figure 16.3:

some mushroom odors (musty, spicy, etc.) are associated with poisonous mushrooms, some (almond, anise) with edible mushrooms, but there are mushrooms with no specific odor in either category – for mushroom with ‘no odor’ (as it he case with Amanita muscaria), odor does not provide enough information for proper classification and we need to incorporate additional features into the decision path;

among mushrooms with no specific odor, some spore colours (black, etc.) are associated with edible mushrooms, some (almond, anise) with poisonous mushrooms, but there are mushrooms with ‘white’ spores in either category – the combination ‘no odor and white spores’ does not provide enough information to classify Amanita muscaria and we need to incorporate additional features into the decision path;

among mushrooms of no specific odor with white spores, some habitats (grasses, paths, wastes) are associated with edible mushrooms, but there are mushrooms in either category that are found in the ‘woods’ – the combination ‘no odor, white spores, found in the woods’ does not provide enough information to classify Amanita muscaria and we need to incorporate additional features into the decision path;

among white-spored forest mushroom with no specific odor, a broad gill size is associated with edible mushrooms, whereas a ‘narrow’ gill size is associated with poisonous mushrooms – as Amanita muscaria is a narrow-gilled, white-spored forest mushroom with no specific odor, the decision path predicts that it is poisonous.

Note that the cap color does not affect the decision path, however.54

Figure 16.3: Decision path for Amanita muscaria.

The decision tree model does not explain why this particular combinations of features is associated with poisonous mushrooms – the decision path is not causal.

At this point, a number of questions naturally arise:

Would you have trusted an edible prediction?

How are the features measured?

What is the true cost of making a mistake?

Is the data on which the model is built representative?

What data is required to build trustworthy models?

What do we need to know about the model in order to trust it?

16.2 Association Rules Mining

Correlation isn’t causation. But it’s a big hint. [E. Tufte]

16.2.1 Overview

Association rules discovery is a type of unsupervised learning that finds connections among the attributes and levels (and combinations thereof) of a dataset’s observations. For instance, we might analyze a (hypothetical) dataset on the physical activities and purchasing habits of North Americans and discover that

runners who are also triathletes (the premise) tend to drive Subarus, drink microbrews, and use smart phones (the conclusion), or

individuals who have purchased home gym equipment are unlikely to be using it 1 year later, say.

But the presence of a correlation between the premise and the conclusion does not necessarily imply the existence of a causal relationship between them. It is rather difficult to “demonstrate” causation via data analysis; in practice, decision-makers pragmatically (and often erroneously) focus on the second half of Tufte’s rejoinder, which basically asserts that “there’s no smoke without fire.”

Case in point, while being a triathlete does not cause one to drive a Subaru, Subaru Canada thinks that the connection is strong enough to offer to reimburse the registration fee at an IRONMAN 70.3 competition (since at least 2018)! [131]

Market Basket Analysis

Association rules discovery is also known as market basket analysis after its original application, in which supermarkets record the contents of shopping carts (the baskets) at check-outs to determine which items are frequently purchased together.

For instance, while bread and milk might often be purchased together, that is unlikely to be of interest to supermarkets given the frequency of market baskets containing milk or bread (in the mathematical sense of “or”).

Knowing that a customer has purchased bread does provide some information regarding whether they also purchased milk, but the individual probability that each item is found, separately, in the basket is so high to begin with that this insight is unlikely to be useful.

If 70% of baskets contain milk and 90% contain bread, say, we would expect at least of all baskets to contain milk and bread, should the presence of one in the basket be totally independent of the presence of the other.

If we then observe that 72% of baskets contain both items (a 1.15-fold increase on the expected proportion, assuming there is no link), we would conclude that there was at best a weak correlation between the purchase of milk and the purchase of bread.

Sausages and hot dog buns, on the other hand, which we might suspect are not purchased as frequently as milk and bread, might still be purchased as a pair more often than one would expect given the frequency of baskets containing sausages or buns.

If 10% of baskets contain sausages, and 5% contain buns, say, we would expect that of all baskets would contain sausages and buns, should the presence of one in the basket be totally independent of the presence of the other.

If we then observe that 4% of baskets contain both items (an 8-fold increase on the expected proportion, assuming there is no link), we would obviously conclude that there is a strong correlation between the purchase of sausages and the purchase of hot dog buns.

It is not too difficult to see how this information could potentially be used to help supermarkets turn a profit: announcing or advertising a sale on sausages while simultaneously (and quietly) raising the price of buns could have the effect of bringing in a higher number of customers into the store, increasing the sale volume for both items while keeping the combined price of the two items constant.55

A (possibly) apocryphal story shows the limitations of association rules: a supermarket found an association rule linking the purchase of beer and diapers and consequently moved its beer display closer to its diapers display, having confused correlation and causation.

Purchasing diapers does not cause one to purchase beer (or vice-versa); it could simply be that parents of newborns have little time to visit public houses and bars, and whatever drinking they do will be done at home. Who knows? Whatever the case, rumour has it that the experiment was neither popular nor successful.

Applications

Typical uses include:

finding related concepts in text documents – looking for pairs (triplets, etc) of words that represent a joint concept: {San Jose, Sharks}, {Michelle, Obama}, etc.;

detecting plagiarism – looking for specific sentences that appear in multiple documents, or for documents that share specific sentences;

identifying biomarkers – searching for diseases that are frequently associated with a set of biomarkers;

making predictions and decisions based on association rules (there are pitfalls here);

altering circumstances or environment to take advantage of these correlations (suspected causal effect);

using connections to modify the likelihood of certain outcomes (see immediately above);

imputing missing data,

text autofill and autocorrect, etc.

Causation and Correlation

Association rules can automate hypothesis discovery, but one must remain correlation-savvy (which is less prevalent among quantitative specialists than one might hope, in our experience).

If attributes and are shown to be correlated in a dataset, there are four possibilities:

and are correlated entirely by chance in this particular dataset;

is a relabeling of (or vice-versa);

causes (or vice-versa), or

some combination of attributes (which may not be available in the dataset) cause both and .

Siegel [132] illustrates the confusion that can arise with a number of real-life examples:

Walmart has found that sales of strawberry Pop-Tarts increase about seven-fold in the days preceding the arrival of a hurricane;

Xerox employees engaged in front-line service and sales-based positions who use Chrome and Firefox browsers perform better on employment assessment metrics and tend to stay with the company longer, or

University of Cambridge researchers found that liking “Curly Fries” on Facebook is predictive of high intelligence.

It can be tempting to try to explain these results (again, from [132]): perhaps

when faced with a coming disaster, people stock up on comfort or nonperishable foods;

the fact that an employee takes the time to install another browser shows that they are an informed individual and that they care about their productivity, or

an intelligent person liked this Facebook page first, and her friends saw it, and liked it too, and since intelligent people have intelligent friends (?), the likes spread among people who are intelligent.

While these explanations might very well be the right ones (although probably not in the last case), there is nothing in the data that supports them. Association rules discovery finds interesting rules, but it does not explain them. The point cannot be over-emphasized: correlation does not imply causation.

Analysts and consultants might not have much control over the matter, but they should do whatever is in their power so that the following headlines do not see the light of day:

“Pop-Tarts” get hurricane victims back on their feet;

Using Chrome of Firefox improves employee performance, or

Eating curly fries makes you more intelligent.

Definitions

A rule is a statement of the form “if (the premise) then (the conclusion)” built from any logical combinations of a dataset attributes.

In practice, a rule does not need to be true for all observations in the dataset – there could be instances where the premise is satisfied but the conclusion is not.

In fact, some of the “best” rules are those which are only accurate 10% of the time, as opposed to rules which are only accurate is only 5% of the time, say. As always, it depends on the context. To determine a rule’s strength, we compute various rule metrics, such as the:

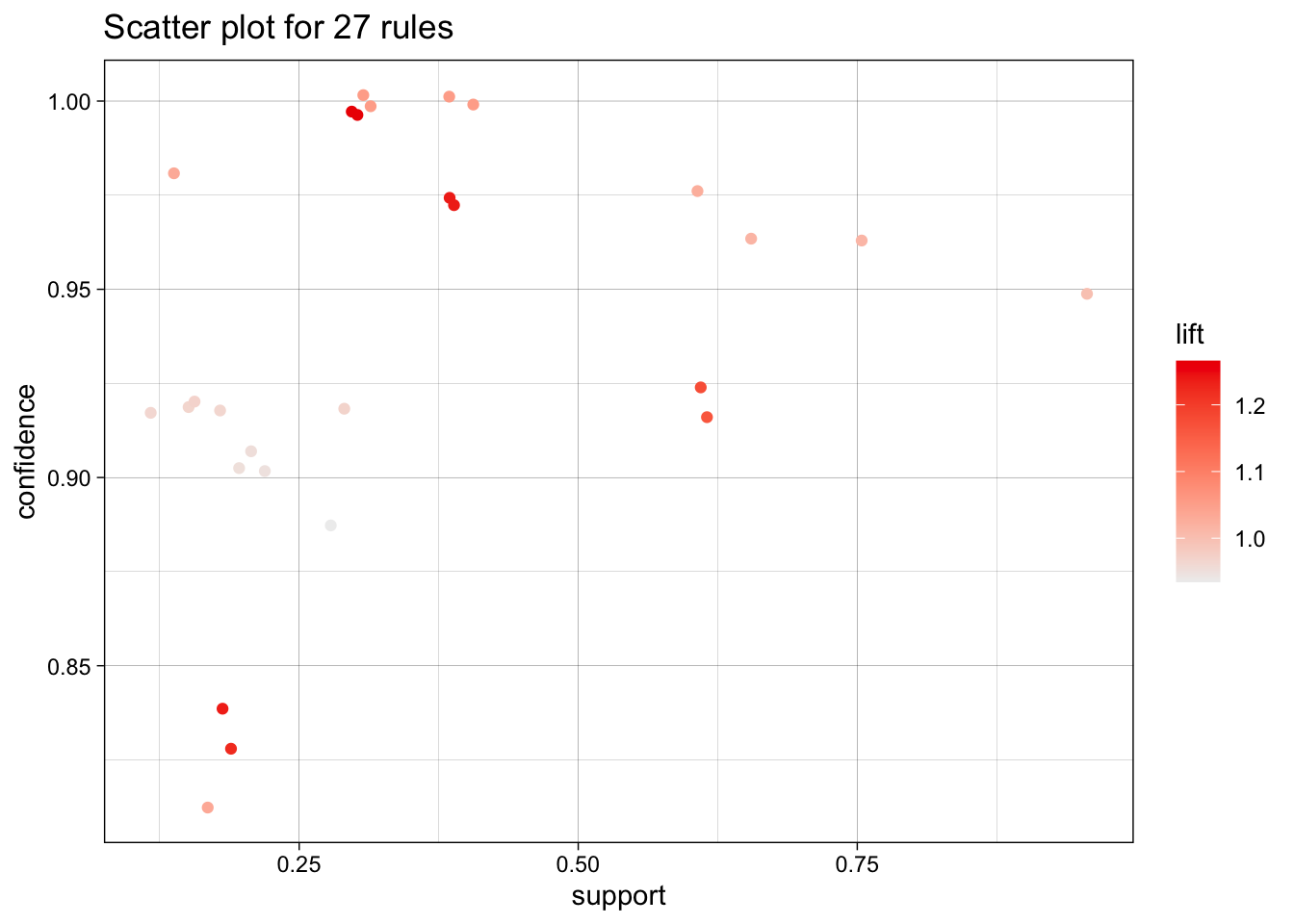

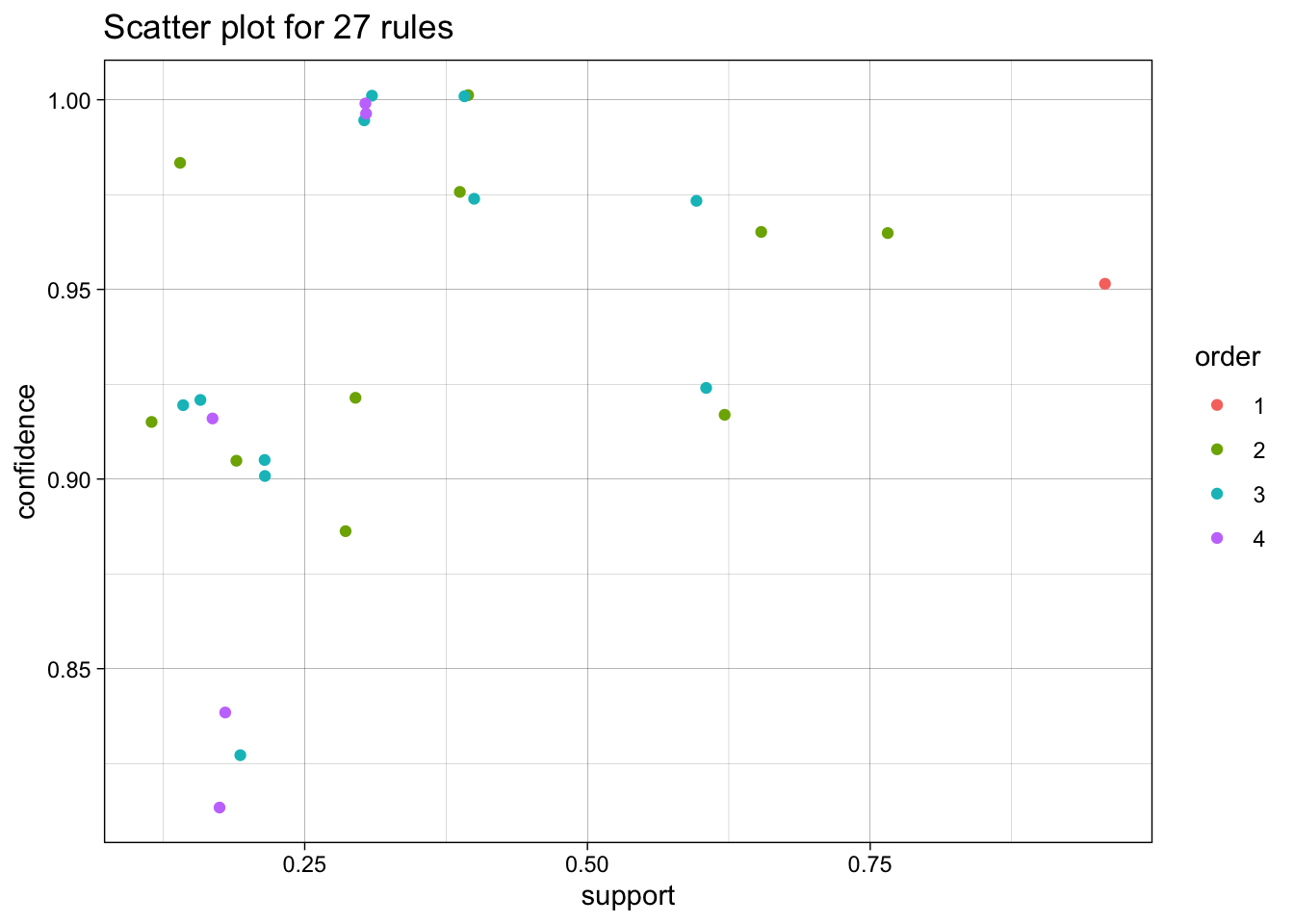

support, which measures the frequency at which a rule occurs in a dataset – low coverage values indicate rules that rarely occur;

confidence, which measures the reliability of the rule: how often does the conclusion occur in the data given that the premises have occurred – rules with high confidence are “truer”, in some sense;

interest, which measures the difference between its confidence and the relative frequency of its conclusion – rules with high absolute interest are … more interesting than rules with small absolute interest;

lift, which measures the increase in the frequency of the conclusion which can be explained by the premises – in a rule with a high lift (), the conclusion occurs more frequently than it would if it was independent of the premises;

conviction [134], all-confidence [135], leverage [136], collective strength [137], and many others [138], [139].

In a dataset with observations, let represent the count of the dataset’s observations for which property holds. This is all the information that is required to compute a rule’s evaluation metrics:

Music Dataset

A simple example will serve to illustrate these concepts. Consider a (hypothetical) music dataset containing data for Chinese music lovers and a candidate rule RM:

“If an individual is born before 1986 (), then they own a copy of Teresa Teng’s The Moon Represents My Heart, in some format ()”.

Let’s assume further that

individuals were born before 1986;

individuals own a copy of The Moon Represents My Heart, and

individuals were born before 1986 and own a copy of The Moon Represents My Heart.

We can easily compute the 5 metrics for RM: These values are easy to interpret: RM occurs in 18% of the dataset’s instances, and it holds true in 70% of the instances where the individual was born prior to 1986.

This would seem to make RM a meaningful rule about the dataset – being older and owning that song are linked properties. But if being younger and not owning that song are not also linked properties, the statement is actually weaker than it would appear at a first glance.

As it happens, RM’s lift is 1.2, which can be rewritten as i.e. 56% of younger individuals also own the song.

The ownership rates between the two age categories are different, but perhaps not as significantly as one would deduce using the confidence and support alone, which is reflected by the rule’s “low” interest, whose value is 0.11.

Finally, the rule’s conviction is 1.36, which means that the rule would be incorrect 36% more often if and were completely independent.

All this seems to point to the rule RM being not entirely devoid of meaning, but to what extent, exactly? This is a difficult question to answer.56

It is nearly impossible to provide hard and fast thresholds: it always depends on the context, and on comparing evaluation metric values for a rule with the values obtained for some other of the dataset’s rules. In short, evaluation of a lone rule is meaningless.

In general, it is recommended to conduct a preliminary exploration of the space of association rules (using domain expertise when appropriate) in order to determine reasonable threshold ranges for the specific situation; candidate rules would then be discarded or retained depending on these metric thresholds.

This requires the ability to “easily” generate potentially meaningful candidate rules.

16.2.2 Generating Rules

Given association rules, it is straightforward to evaluate them using various metrics, as discussed in the previous section.

The real challenge of association rules discovery lies in generating a set of candidate rules which are likely to be retained, without wasting time generating rules which are likely to be discarded.

An itemset (or instance set) for a dataset is a list of attributes and values. A set of rules can be created from the itemset by adding “IF … THEN” blocks to the instances.

As an example, from the instance set

we can create the 7 following item rules:

IF AND ) THEN ;

IF AND ) THEN ;

IF AND THEN ;

IF THEN ( AND );

IF THEN AND ;

IF THEN AND ;

IF THEN ( AND AND );

the 6 following item rules:

IF THEN ;

IF THEN ;

IF THEN ;

IF THEN ( AND );

IF THEN AND ;

IF THEN AND ;

and the 3 following item rules:

IF THEN ;

IF THEN ;

IF THEN .

In practice, we usually only consider rules with the same number of items as there are members in the itemset: in the example above, for instance, the item rules could be interpreted as emerging from the 3separate itemsets

and the item rules as arising from the 3 separate itemsets

Note that rules of the form (or IF THEN ) are typically denoted simply by .

Now, consider an itemset with members (that is to say, attribute/level pairs). In an item rule derived from , each of the members appears either in the premise or in the conclusion; there are thus such rules, in principle.

The rule where each member is part of the premise (i.e., the rule without a conclusion) is nonsensical and is not allowed; we can derive exactly item rules from . Thus, the number of rules increases exponentially when the number of features increases linearly.

This combinatorial explosion is a problem – it instantly disqualifies the brute force approach (simply listing all possible itemsets in the data and generating all rules from those itemsets) for any dataset with a realistic number of attributes.

How can we then generate a small number of promising candidate rules, in general?

16.2.3 The A Priori Algorithm

The a priori algorithm is an early attempt to overcome that difficulty. Initially, it was developed to work for transaction data (i.e. goods as columns, customer purchases as rows), but every reasonable dataset can be transformed into a transaction dataset using dummy variables.

The algorithm attempts to find frequent itemsets from which to build candidate rules, instead of building rules from all possible itemsets.

It starts by identifying frequent individual items in the database and extends those that are retained into larger and larger item supersets, who are themselves retained only if they occur frequently enough in the data.

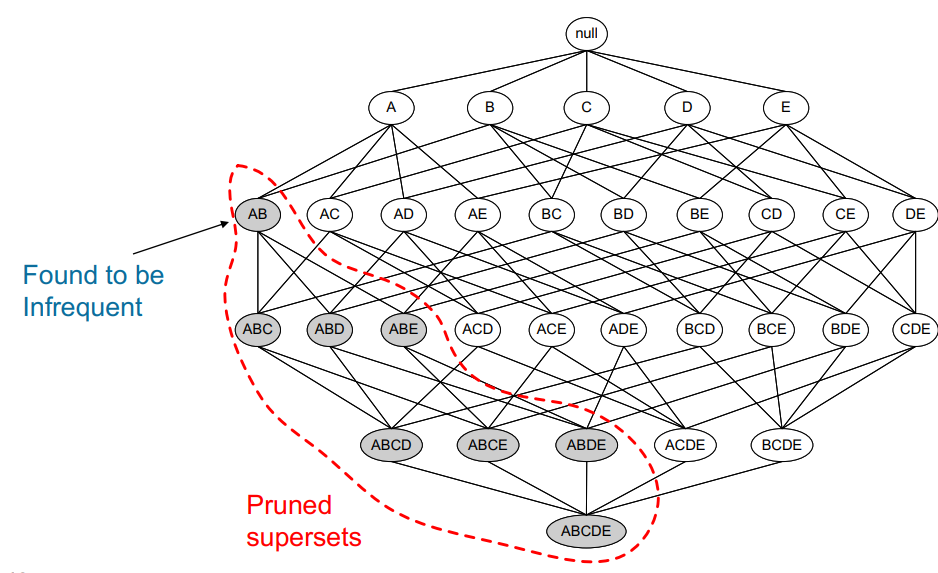

The main idea is that “all non-empty subsets of a frequent itemset must also be frequent” [140], or equivalently, that all supersets of an infrequent itemset must also be infrequent (see Figure 16.4).

Figure 16.4: Pruned supersets of an infrequent itemset in the a priori network of a dataset with 5 items [140]; no rule would be generated from the grey itemsets.

In the technical jargon of machine learning, we say that a priori uses a bottom-up approach and the downward closure property of support.

The memory savings arise from the fact that the algorithm prunes candidates with infrequent sub-patterns and removes them from consideration for any future itemset: if a itemset is not considered to be frequent enough, any itemset containing it is also infrequent (see Figure 16.5 for another illustration).

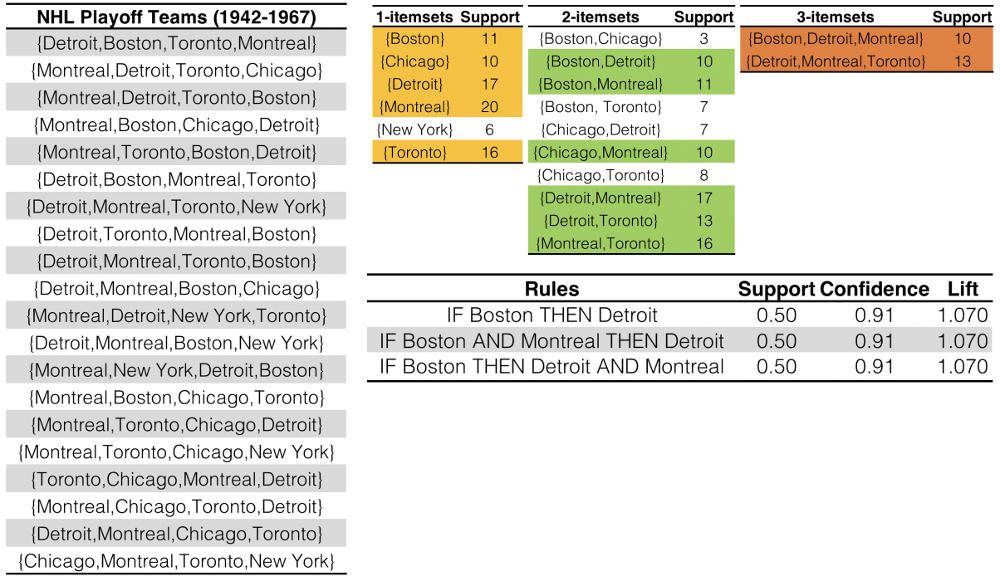

Figure 16.5: Association rules for NHL playoff teams (1942-1967).

A list of the 4 teams making the playoffs each year is shown on the left (). Frequent itemsets are generated using the a priori algorithms, with a support threshold of 10. We see that there are frequent itemsets, top row, in yellow (New York made the playoffs times – no larger frequent itemset can contain New York). 6 frequent itemsets are found in the subsequent list of ten itemsets, top row, in green (note the absence of New York). Only 2 frequent itemsets are found, top row, in orange. Candidate rules are generated from the shaded itemsets; the rules retained by the thresholds are shown in the table on the bottom row – the main result is that when Boston made the playoffs, it was not surprising to see Detroit also make the playoffs (the presence or absence of Montreal in a rule is a red herring, as Montreal made the playoffs every year in the data. Are these rules meaningful at all?

Of course, this process requires a support threshold input, for which there there is no guaranteed way to pick a “good” value; it has to be set sufficiently high to minimize the number of frequent itemsets that are being considered, but not so high that it removes too many candidates from the output list; as ever, optimal threshold values are dataset-specific.

The algorithm terminates when no further itemsets extensions are retained, which always occurs given the finite number of levels in categorical datasets.

Strengths: easy to implement and to parallelize [141];

Limitations: slow, requires frequent data set scans, not ideal for finding rules for infrequent and rare itemsets.

More efficient algorithms have since displaced it in practice (although the a priori algorithm retains historical value):

Max-Miner tries to identify frequent itemsets without enumerating them – it performs jumps in itemset space instead of using a bottom-up approach;

Eclat is faster and uses depth-first search, but requires extensive memory storage (a priori and eclat are both implemented in the

Rpackagearules[135]).

16.2.4 Validation

How reliable are association rules? What is the likelihood that they occur entirely by chance? How relevant are they? Can they be generalised outside the dataset, or to new data streaming in?

These questions are notoriously difficult to solve for association rules discovery, but statistically sound association discovery can help reduce the risk of finding spurious associations to a user-specified significance level [138], [139]. We end this section with a few comments:

Since frequent rules correspond to instances that occur repeatedly in the dataset, algorithms that generate itemsets often try to maximize coverage. When rare events are more meaningful (such as detection of a rare disease or a threat), we need algorithms that can generate rare itemsets. This is not a trivial problem.

Continuous data has to be binned into categorical data to generate rules. As there are many ways to accomplish that task, the same dataset can give rise to completely different rules. This could create some credibility issues with clients and stakeholders.

Other popular algorithms include: AIS, SETM, aprioriTid, aprioriHybrid, PCY, Multistage, Multihash, etc.

Additional evaluation metrics can be found in the

arulesdocumentation [135].

16.2.5 Case Study: Danish Medical Data

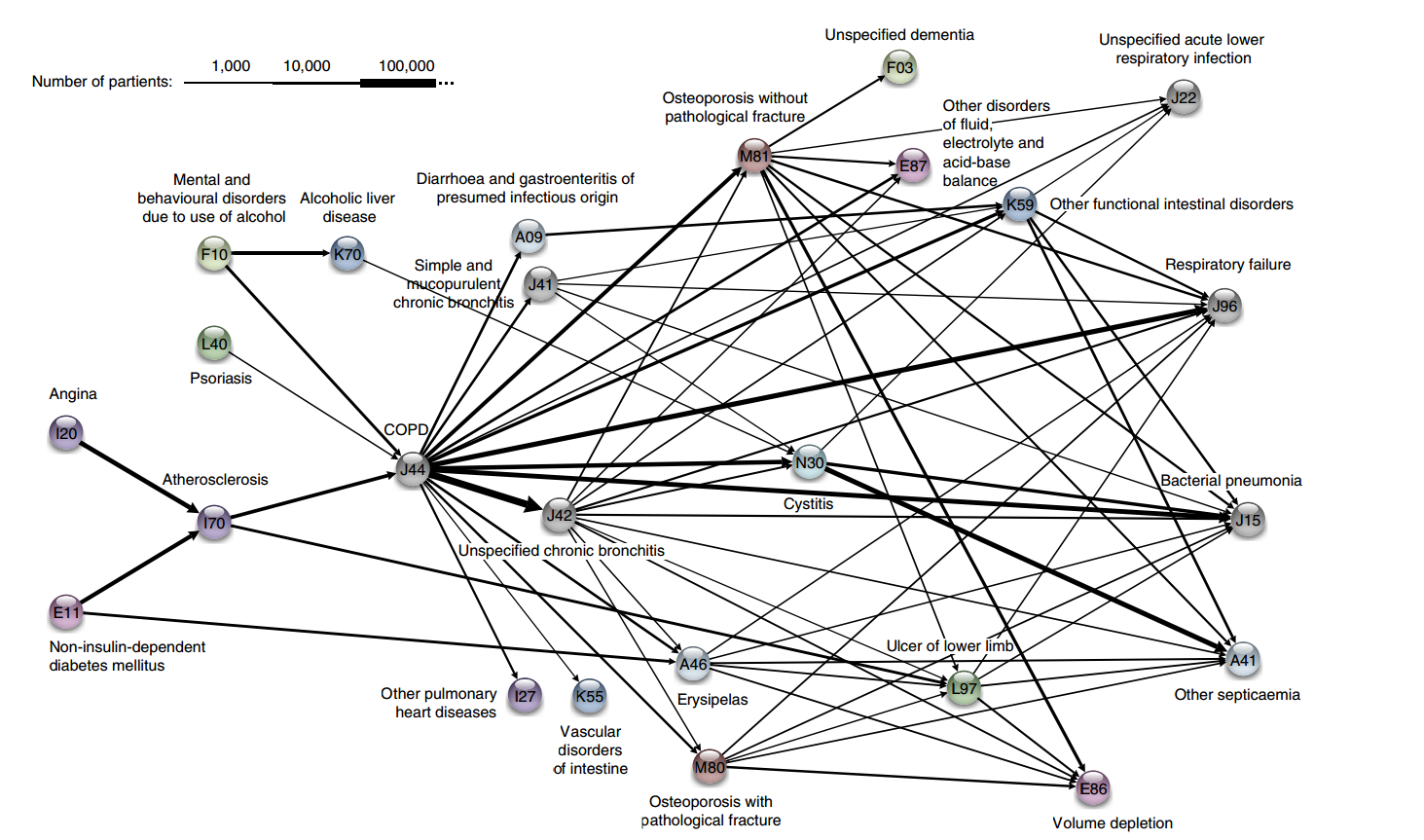

In temporal disease trajectories condensed from population wide registry data covering 6.2 million patients [62], A.B. Jensen et al. study diagnoses in the Danish population, with the help of association rules mining and clustering methods.

Objectives

Estimating disease progression (trajectories) from current patient state is a crucial notion in medical studies. Such trajectories had (at the time of publication) only been analyzed for a small number of diseases, or using large-scale approaches without consideration for time exceeding a few years. Using data from the Danish National Patient Registry (an extensive, long-term data collection effort by Denmark), the authors sought connections between different diagnoses: how does the presence of a diagnosis at some point in time allow for the prediction of another diagnosis at a later point in time?

Methodology

The authors took the following methodological steps:

compute the strength of correlation for pairs of diagnoses over a 5 year interval (on a representative subset of the data);

test diagnoses pairs for directionality (one diagnosis repeatedly occurring before the other);

determine reasonable diagnosis trajectories (thoroughfares) by combining smaller (but frequent) trajectories with overlapping diagnoses;

validate the trajectories by comparison with non-Danish data;

cluster the thoroughfares to identify a small number of central medical conditions (key diagnoses) around which disease progression is organized.

Data

The Danish National Patient Registry is an electronic health registry containing administrative information and diagnoses, covering the whole population of Denmark, including private and public hospital visits of all types: inpatient (overnight stay), outpatient (no overnight stay) and emergency. The data set covers 15 years, from January ’96 to November ’10 and consists of 68 million records for 6.2 million patients.

Challenges and Pitfalls

Access to the Patient Registry is protected and could only be granted after approval by the Danish Data Registration Agency the National Board of Health.

Gender-specific differences in diagnostic trends are clearly identifiable (pregnancy and testicular cancer do not have much cross-appeal), but many diagnoses were found to be made exclusively (or at least, predominantly) in different sites (inpatient, outpatient, emergency ward), which suggests the importance of stratifying by site as well as by gender.

In the process of forming small diagnoses chains, it became necessary to compute the correlations using large groups for each pair of diagnoses. For close to 1 million diagnosis pairs, more than 80 million samples would have been required to obtain significant values while compensating for multiple testing, which would have translated to a few thousand years’ worth of computer running time. A pre-filtering step was included to avoid this pitfall.57

Project Summaries and Results

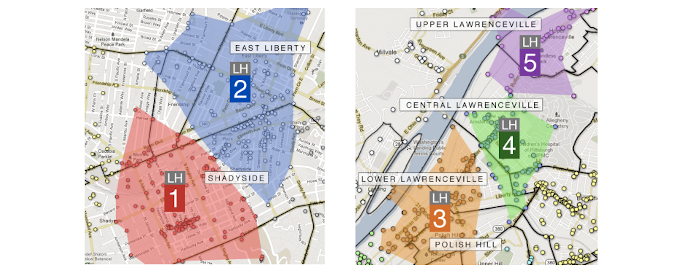

The dataset was reduced to 1,171 significant trajectories. These thoroughfares were clustered into patterns centred on 5 key diagnoses central to disease progression:

diabetes;

chronic obstructive pulmonary disease (COPD);

cancer;

arthritis, and

cerebrovascular disease.

Early diagnoses for these central factors can help reduce the risk of adverse outcome linked to future diagnoses of other conditions.

Two author quotes illustrate the importance of these results:

“The sooner a health risk pattern is identified, the better we can prevent and treat critical diseases.” [S. Brunak]

“Instead of looking at each disease in isolation, you can talk about a complex system with many different interacting factors. By looking at the order in which different diseases appear, you can start to draw patterns and see complex correlations outlining the direction for each individual person.” [L.J. Jensen]

Among the specific results, the following “surprising” insights were found:

a diagnosis of anemia is typically followed months later by the discovery of colon cancer;

gout was identified as a step on the path toward cardiovascular disease, and

COPD is under-diagnosed and under-treated.

The disease trajectories cluster for COPD, for instance, is shown in Figure 16.6.

Figure 16.6: The COPD cluster showing five preceding diagnoses leading to COPD and some of the possible outcomes [62].

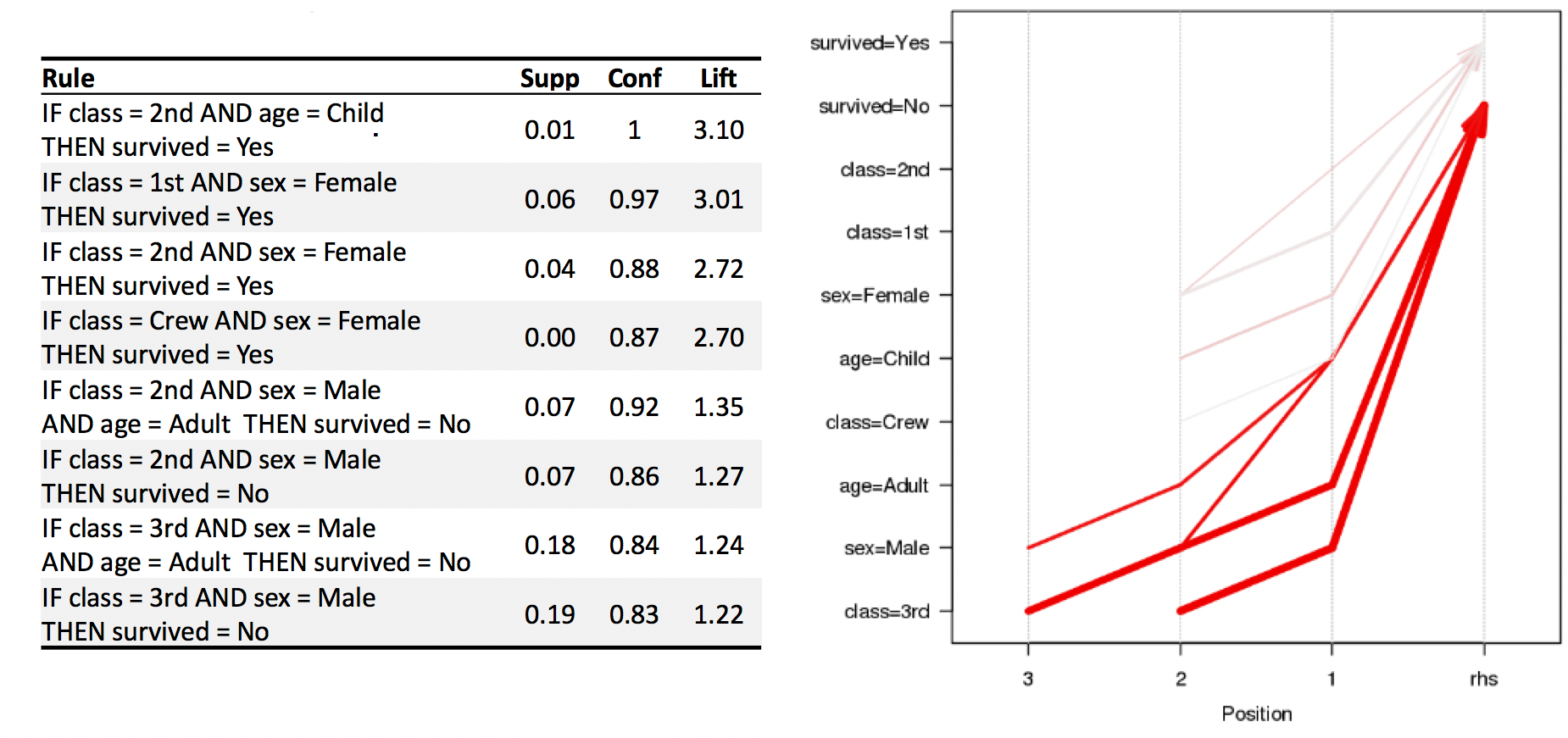

16.2.6 Toy Example: Titanic Dataset

Compiled by Robert Dawson in 1995, the Titanic dataset consists of 4 categorical attributes for each of the 2201 people aboard the Titanic when it sank in 1912 (some issues with the dataset have been documented, but we will ignore them for now):

class (1st class, 2nd class, 3rd class, crewmember)

age (adult, child)

sex (male, female)

survival (yes, no)

The natural question of interest for this dataset is:

“How does survival relate to the other attributes?”

This is not, strictly speaking, an unsupervised task (as the interesting rules’ structure is fixed to conclusions of the form or ).

For the purpose of this example, we elect not to treat the problem as a predictive task, since the situation on the Titanic has little bearing on survival for new data – as such, we use fixed-structure association rules to describe and explore survival conditions on the Titanic (compare with [142]).

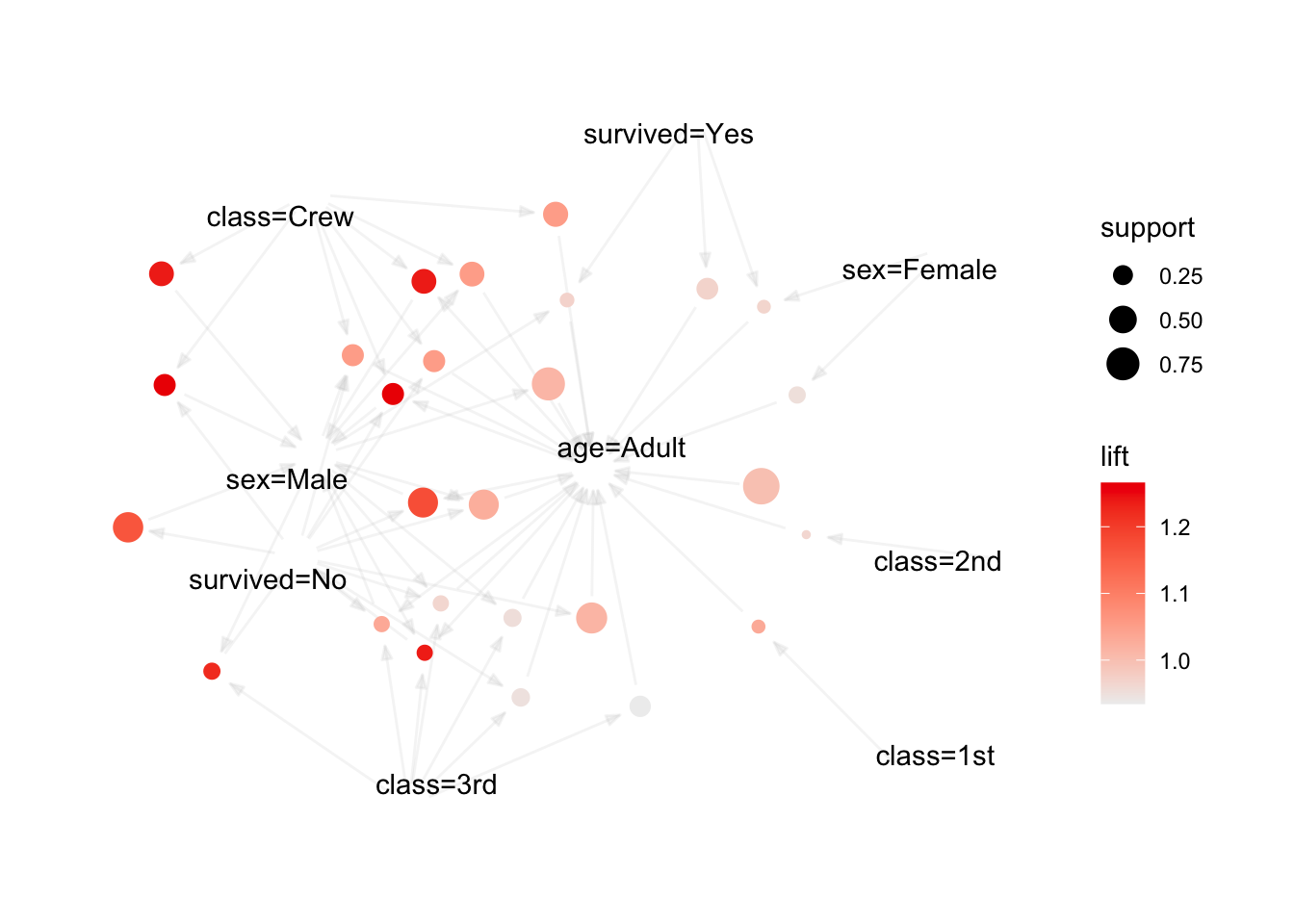

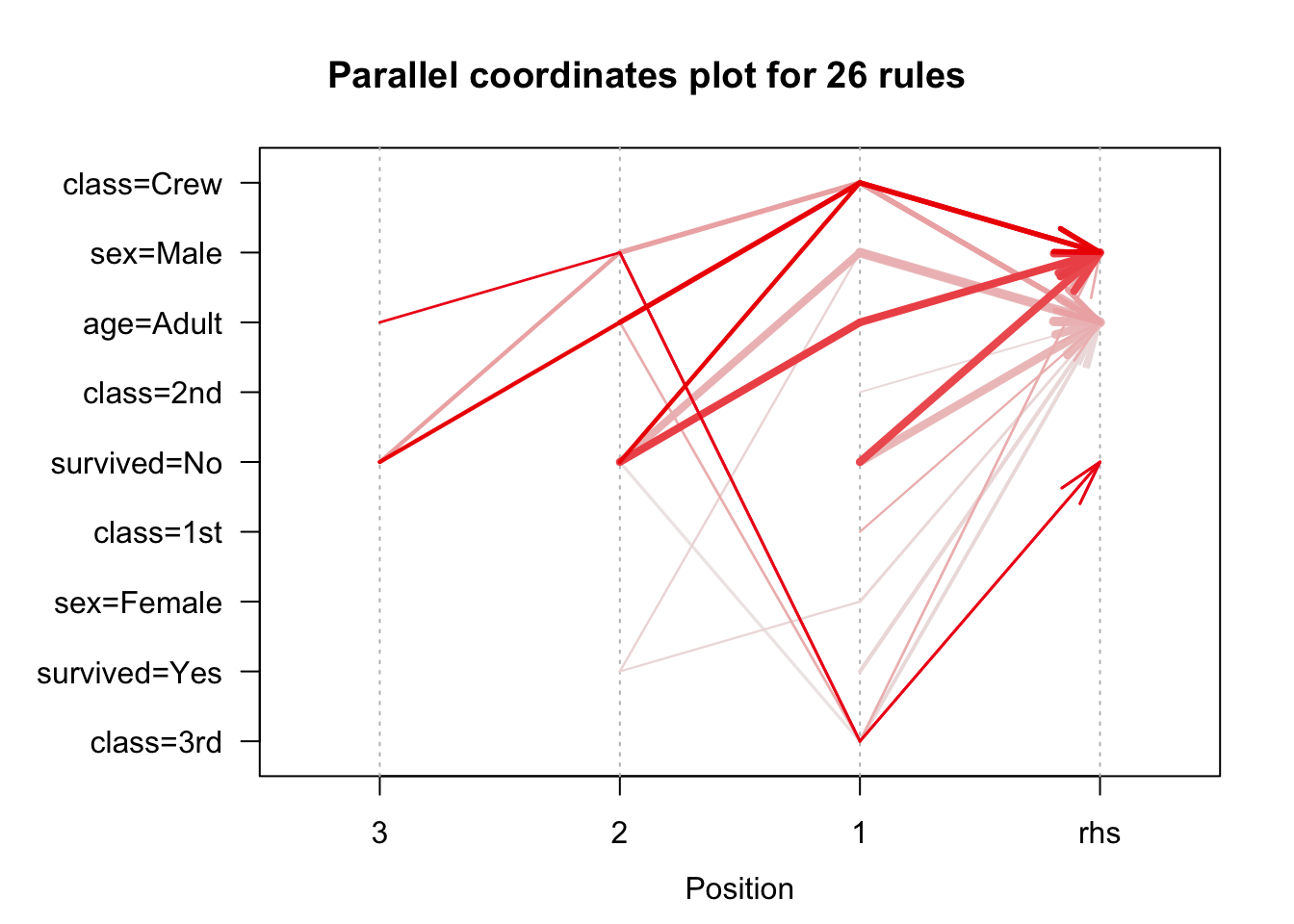

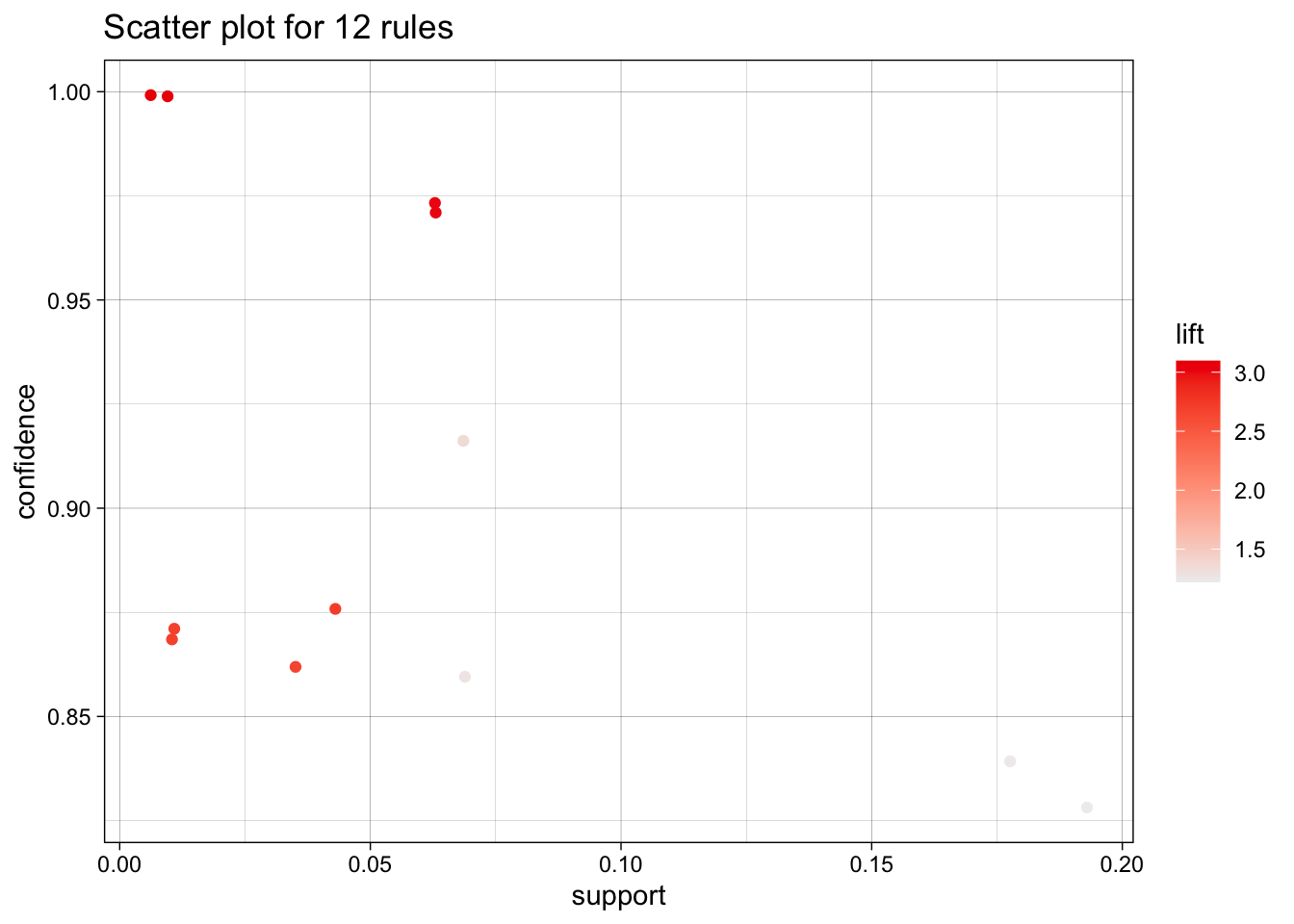

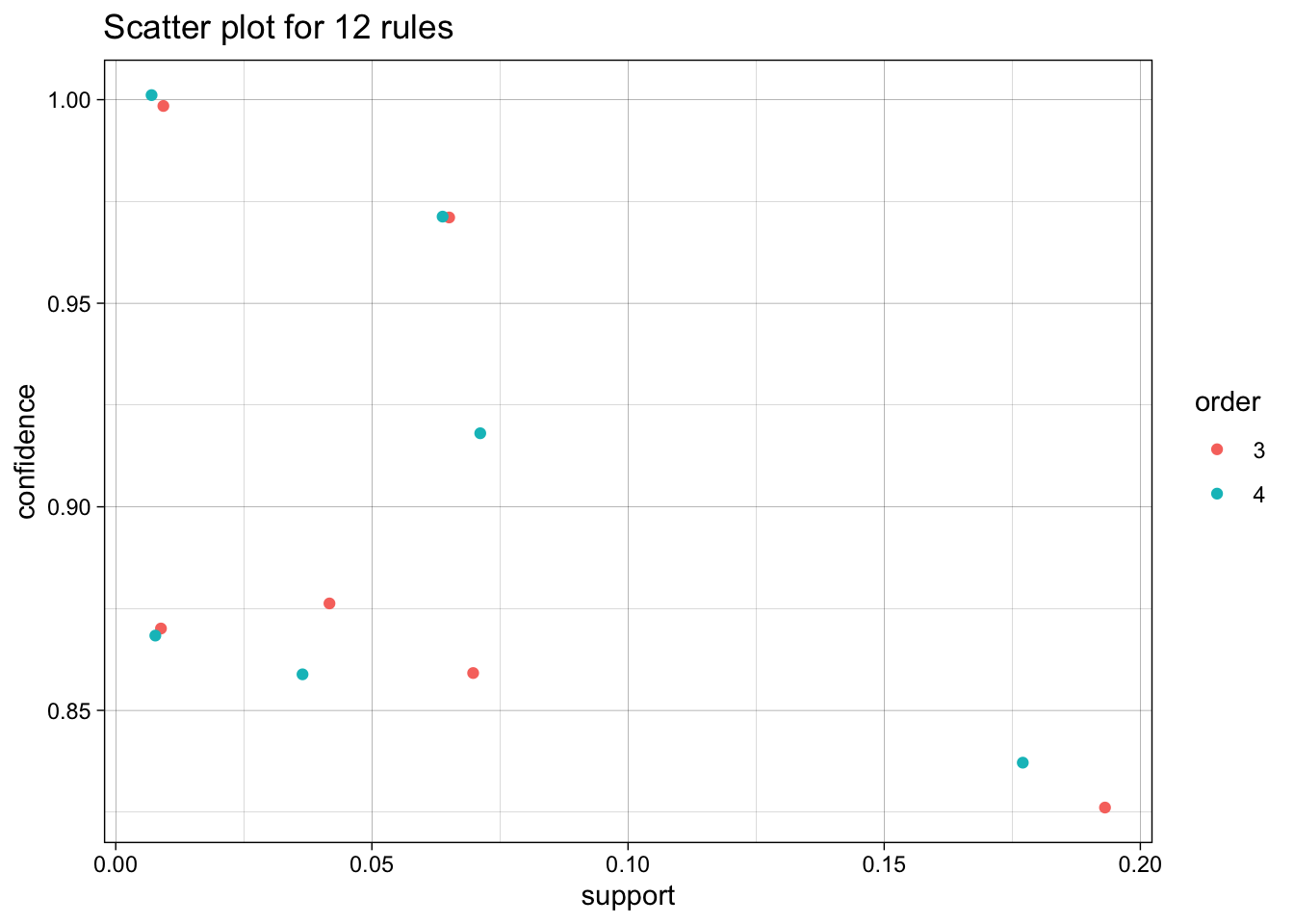

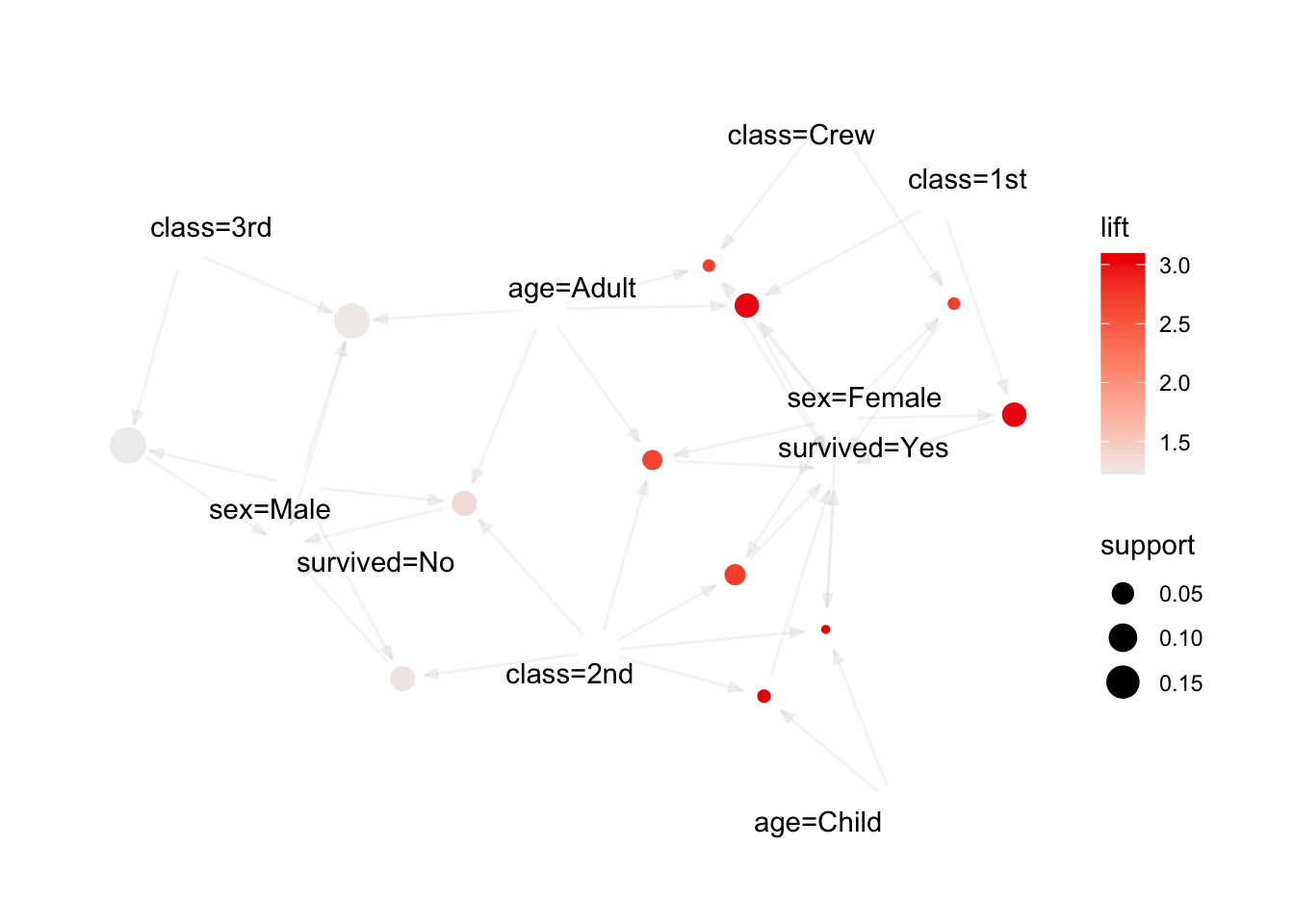

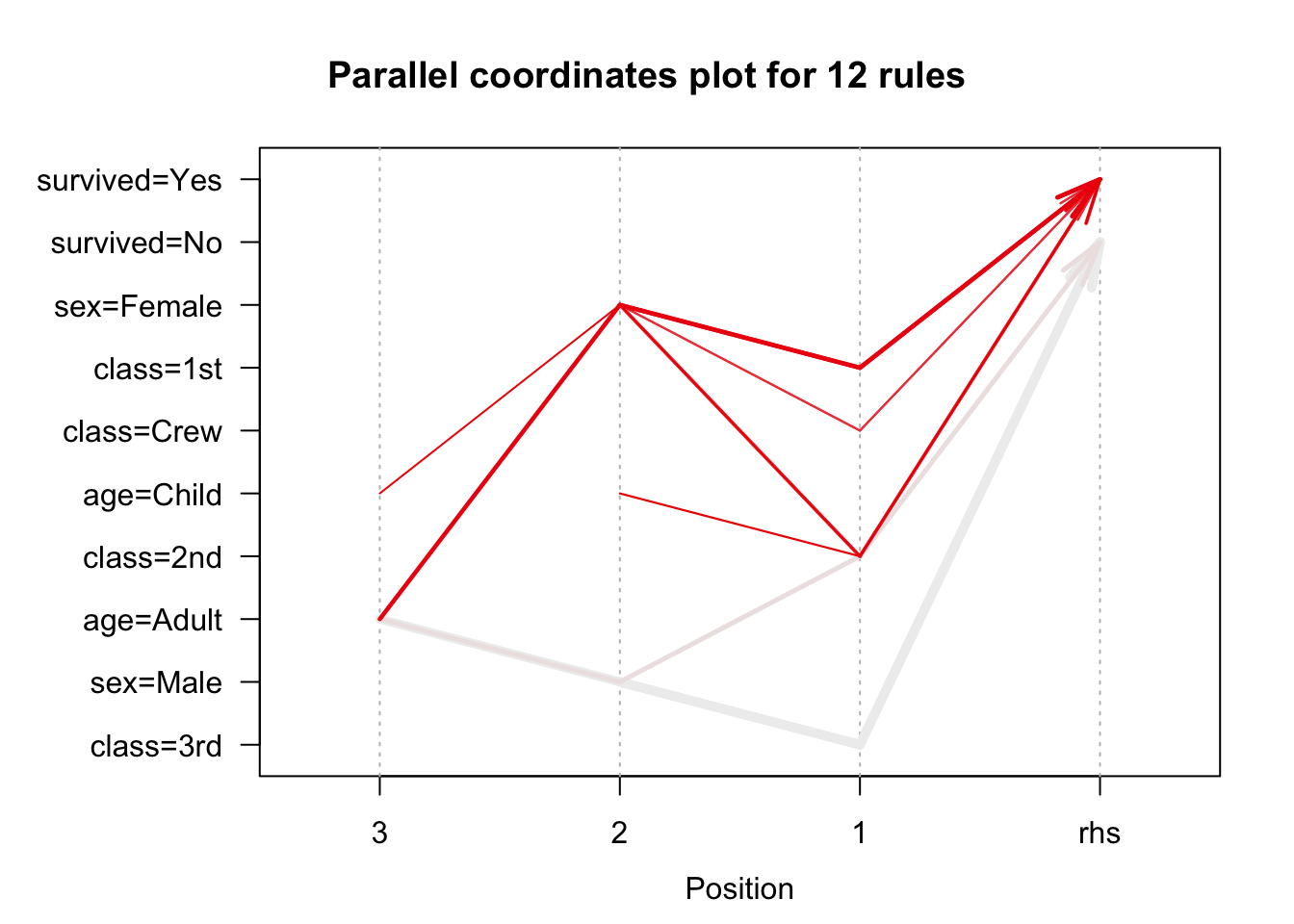

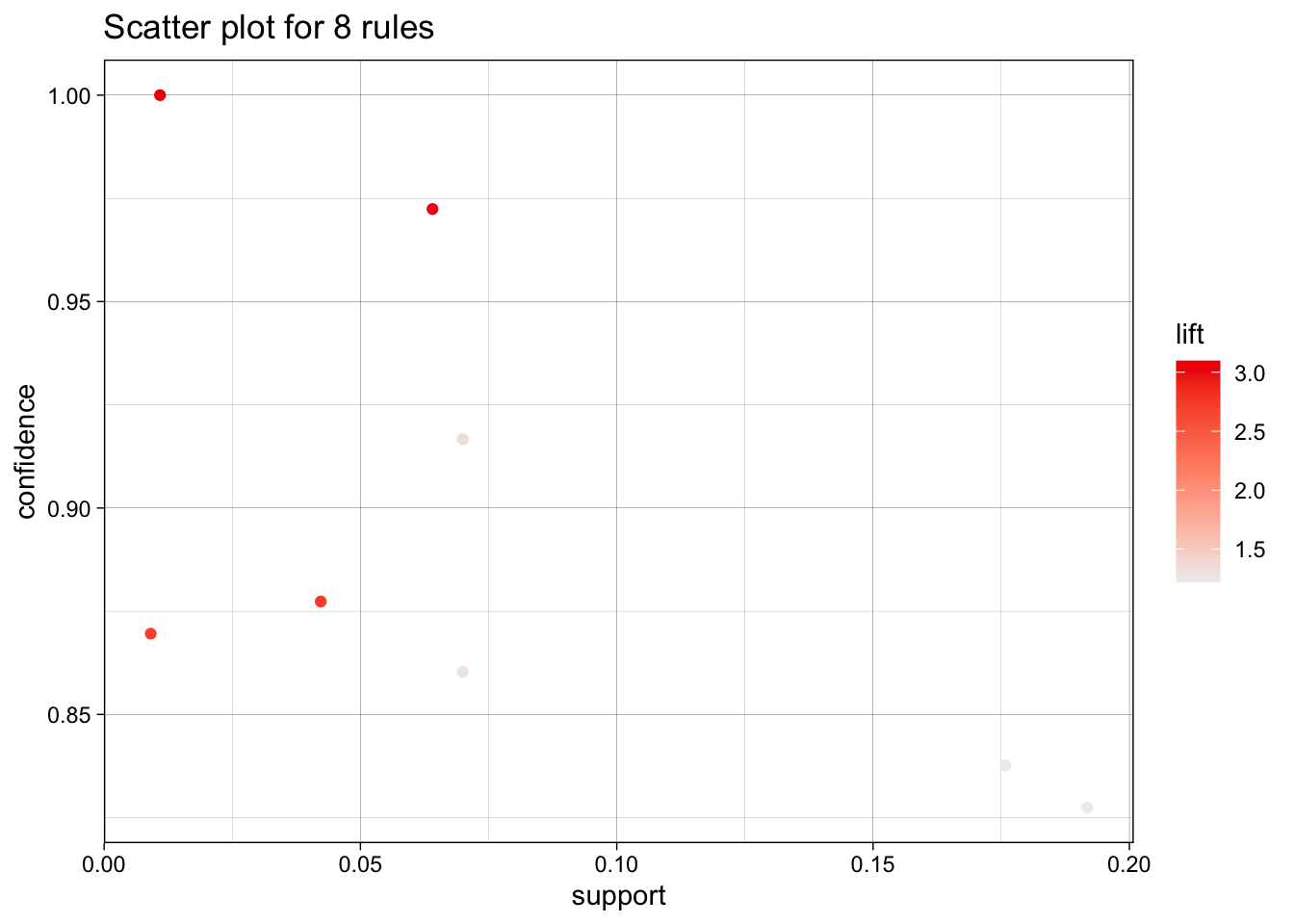

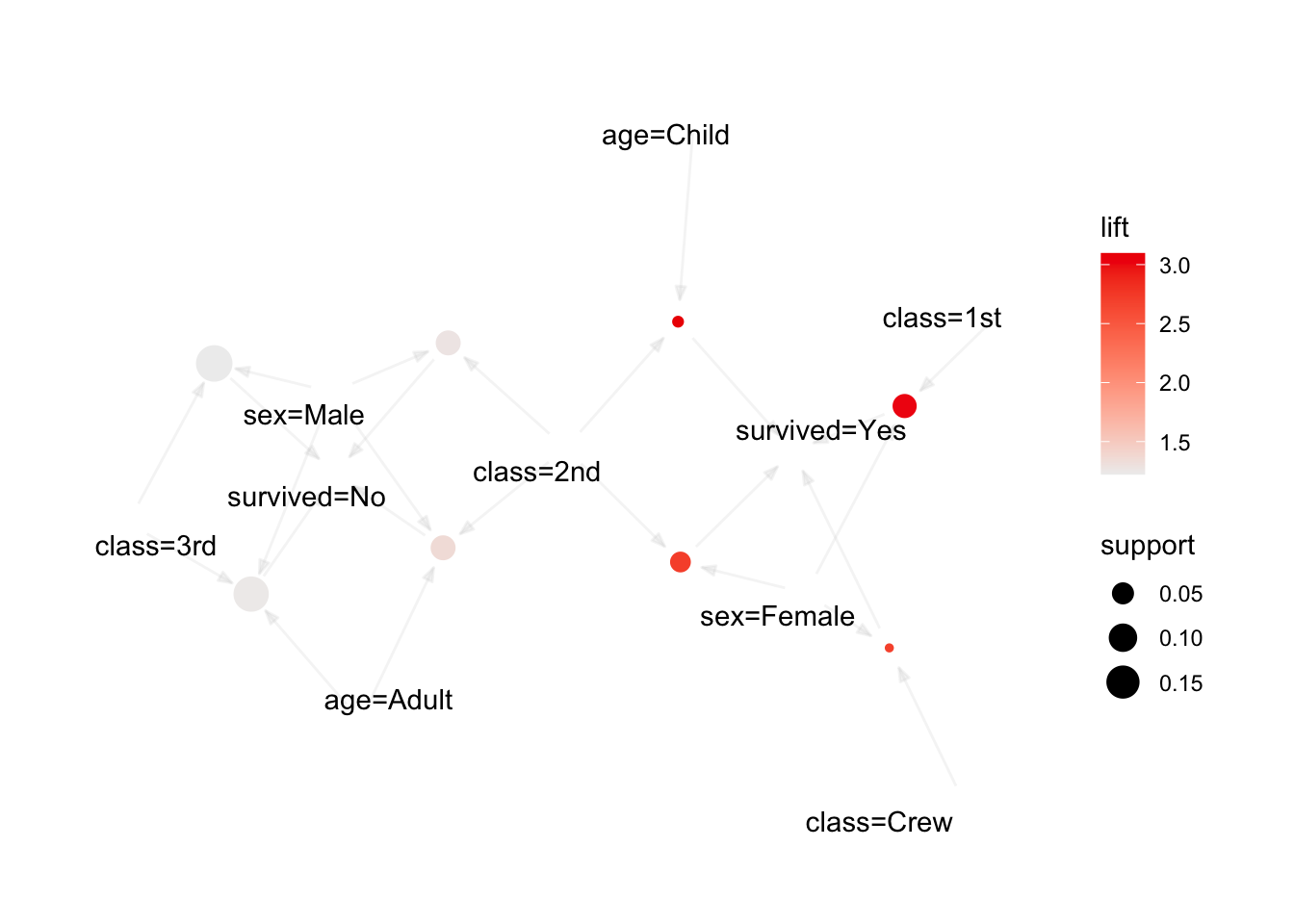

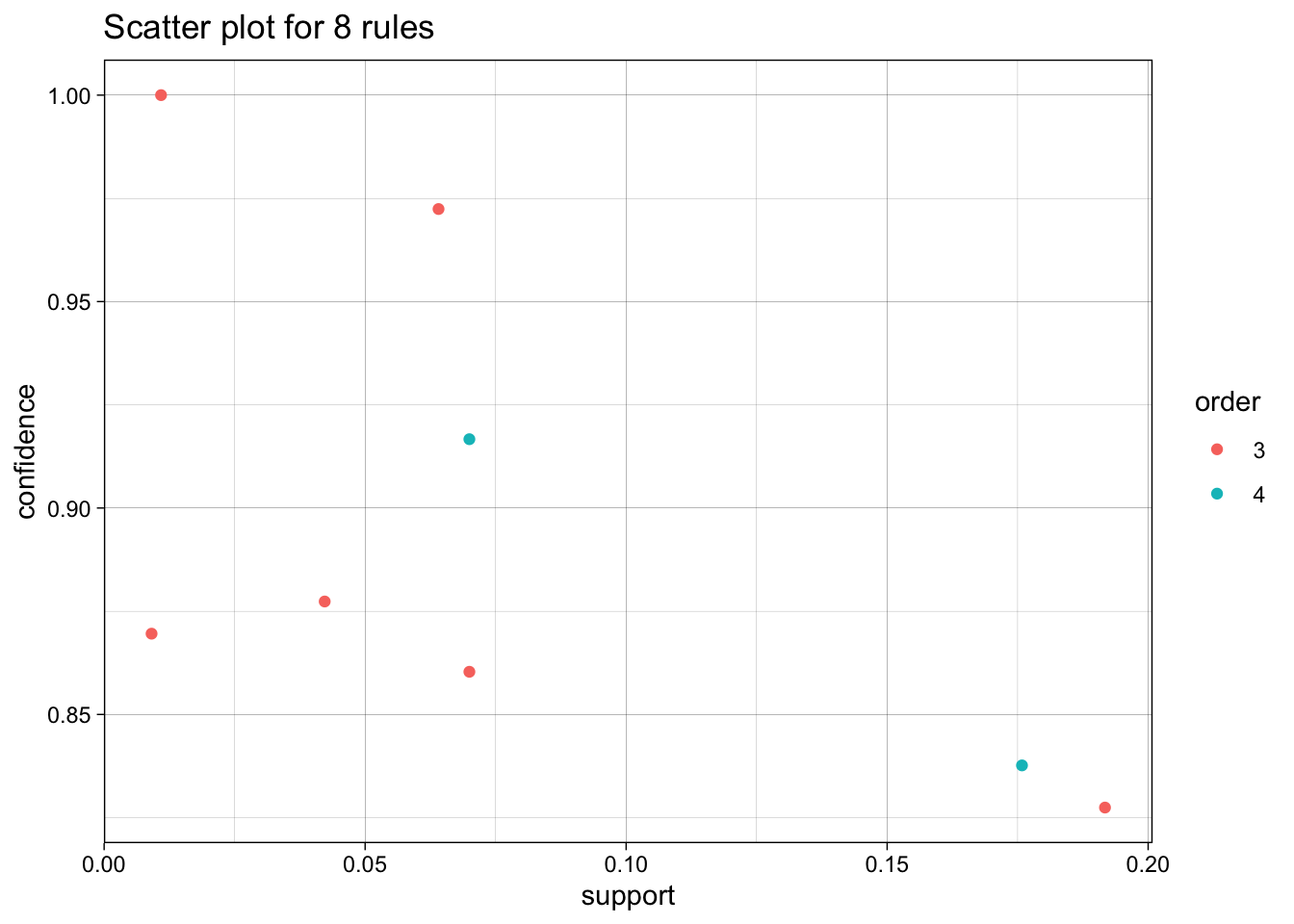

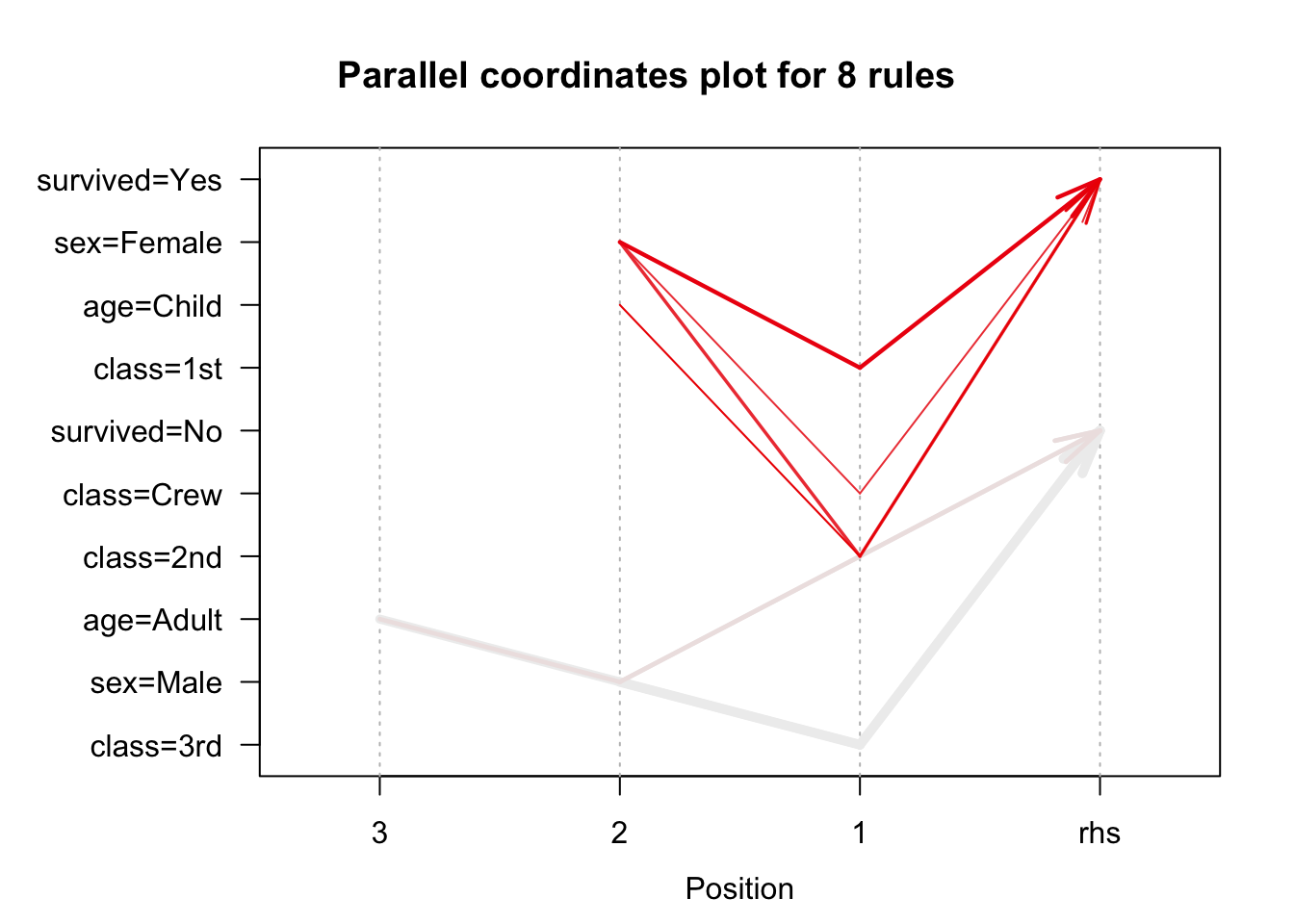

We use the arules implementation of the a priori algorithm in R to generate and prune candidate rules, eventually leading to 8 rules (the results are visualized in Figure 16.7). Who survived? Who didn’t?58

Figure 16.7: Visualization of the 8 Titanic association rules with parallel coordinates.

We show how to obtain these rules via R in Association Rules Mining: Titanic Dataset.

16.3 Classification and Value Estimation

The diversity of problems that can be addressed by classification algorithms is significant, and covers many domains. It is difficult to comprehensively discuss all the methods in a single book. [C.C. Aggarwal [86]]

16.3.1 Overview

The principles underlying classification, regression and class probability estimation are well-known and straightforward. Classification is a supervised learning endeavour in which a sample set of data (the training set) is used to determine rules and patterns that divide the data into predetermined groups, or classes. The training data usually consists of a randomly selected subset of the labeled data.59

In the testing phase, the model is used to assign a class to observations in the testing set, in which the label is hidden, in spite of being actually known.

The performance of the predictive model is then evaluated by comparing the predicted and the values for the testing set observations (but never using the training set observations). A number of technical issues need to be addressed in order to achieve optimal performance, among them: determining which features to select for inclusion in the model and, perhaps more importantly, which algorithm tochoose.

The mushroom (classification) model from Data Science and Machine Learning Tasks provides a clean example of a classification model, albeit one for which no detail regarding the training data and choice of algorithm were made available.

Applications

Classification and value estimation models are among the most frequently used of the data science models, and form the backbone of what is also known as predictive analytics. There are applications and uses in just about every field of human endeavour, such as:

medicine and health science – predicting which patient is at risk of suffering a second, and this time fatal, heart attack within 30 days based on health factors (blood pressure, age, sinus problems, etc.);

social policies – predicting the likelihood of required assisting housing in old age based on demographic information/survey answers;

marketing/business – predicting which customers are likely to cancel their membership to a gym based on demographics and usage;

in general, predicting that an object belongs to a particular class, or organizing and grouping instances into categories, or

enhancing the detection of relevant objects:

avoidance – “this object is an incoming vehicle”;

pursuit – “this borrower is unlikely to default on her mortgage”;

degree – “this dog is 90% likely to live until it’s 7 years old”;

economics – predicting the inflation rate for the coming two years based on a number of economic indicators.

Concrete Examples

Some concrete examples may provide a clearer picture of the types of supervised learning problems that quantitative consultants may be called upon to solve.

A motor insurance company has a fraud investigation department that studies up to 20% of all claims made, yet money is still getting lost on fraudulent claims. To help better direct the investigators, management would like to determine, using past data, if it is possible to predict

whether a claim is likely to be fraudulent?

whether a customer is likely to commit fraud in the near future?

whether an application for a policy is likely to result in a fraudulent claim?

the amount by which a claim will be reduced if it is fraudulent?

Customers who make a large number of calls to a mobile phone company’s customer service number have been identified as churn risks. The company is interested in reducing said churn. Can they predict

the overall lifetime value of a customer?

which customers are more likely to churn in the near future?

what retention offer a particular customer will best respond to?

In every classification scenario, the following questions must be answered before embarking on analysis:

What kind of data is required?

How much of it?

What would constitute a predictive success?

What are the risks associated with a predictive modeling approach?

These have no one-size-fits-all answers. In the absence of testing data, classification models cannot be used for predictive tasks, but may still be useful for descriptive tasks.

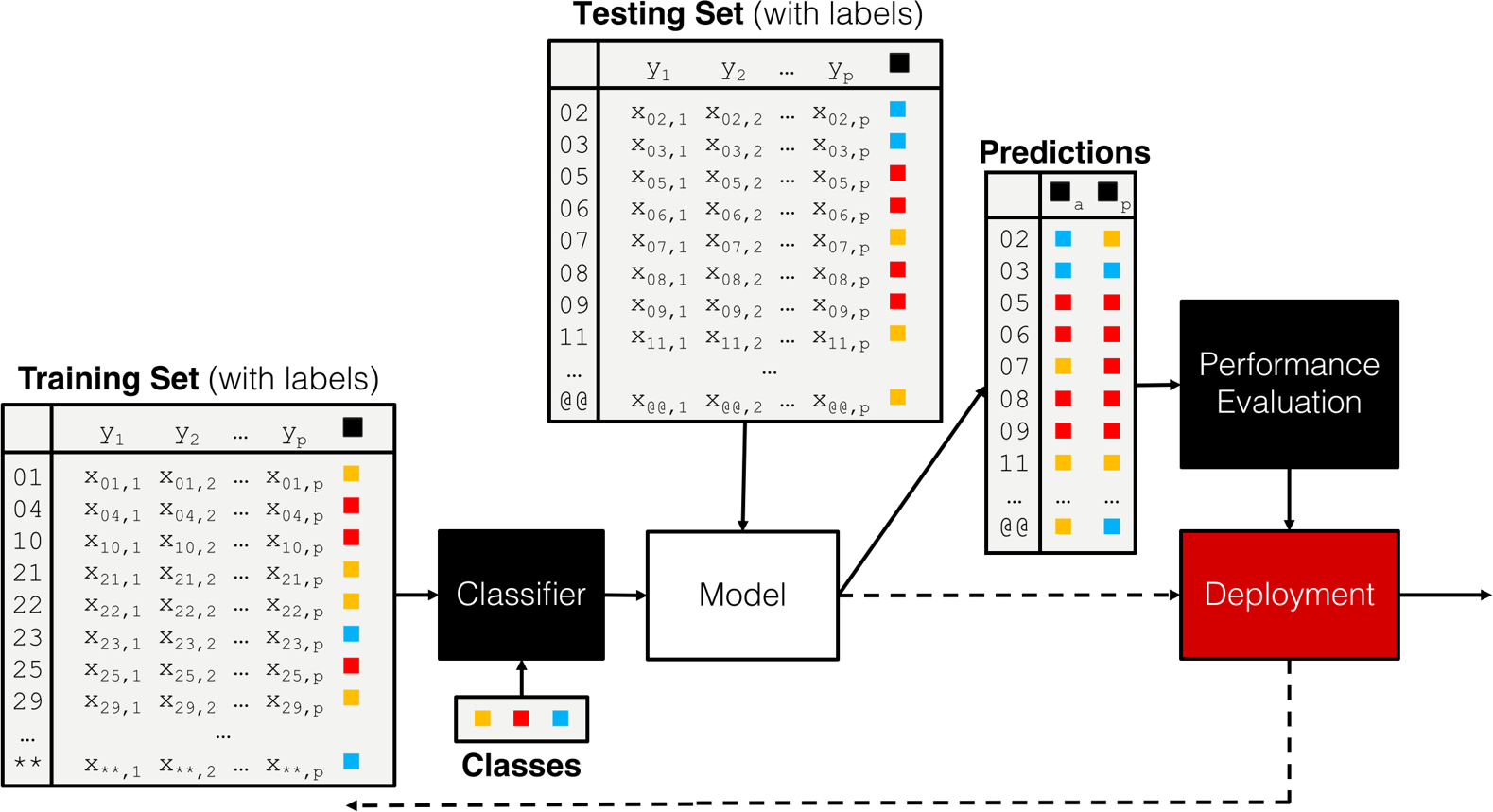

When testing data exists, the process is often quite similar, independently of the choice of the algorithm (see the classification pipeline shown in Figure 16.8).

Figure 16.8: A classification pipeline, including training set, testing set, performance evaluation, and (eventual) deployment.

16.3.2 Classification Algorithms

The number of classification algorithms is truly staggering – it often seems as though new algorithms and variants are put forward on a monthly basis, depending on the task and on the type of data [86].

While some of them tend to be rather esoteric, there is a fairly small number of commonly-used workhorse algorithms/approaches that data scientists and consultants should at least have at their command (full descriptions are available in [77], [85], [147]):

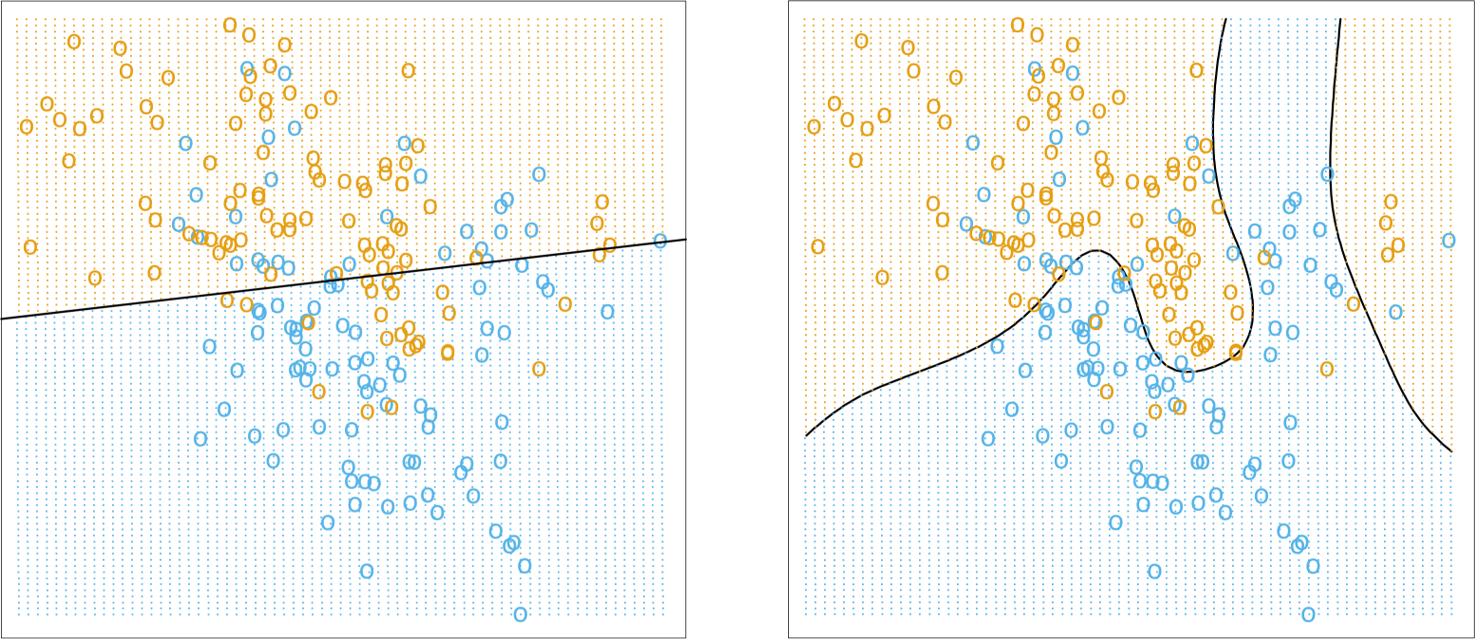

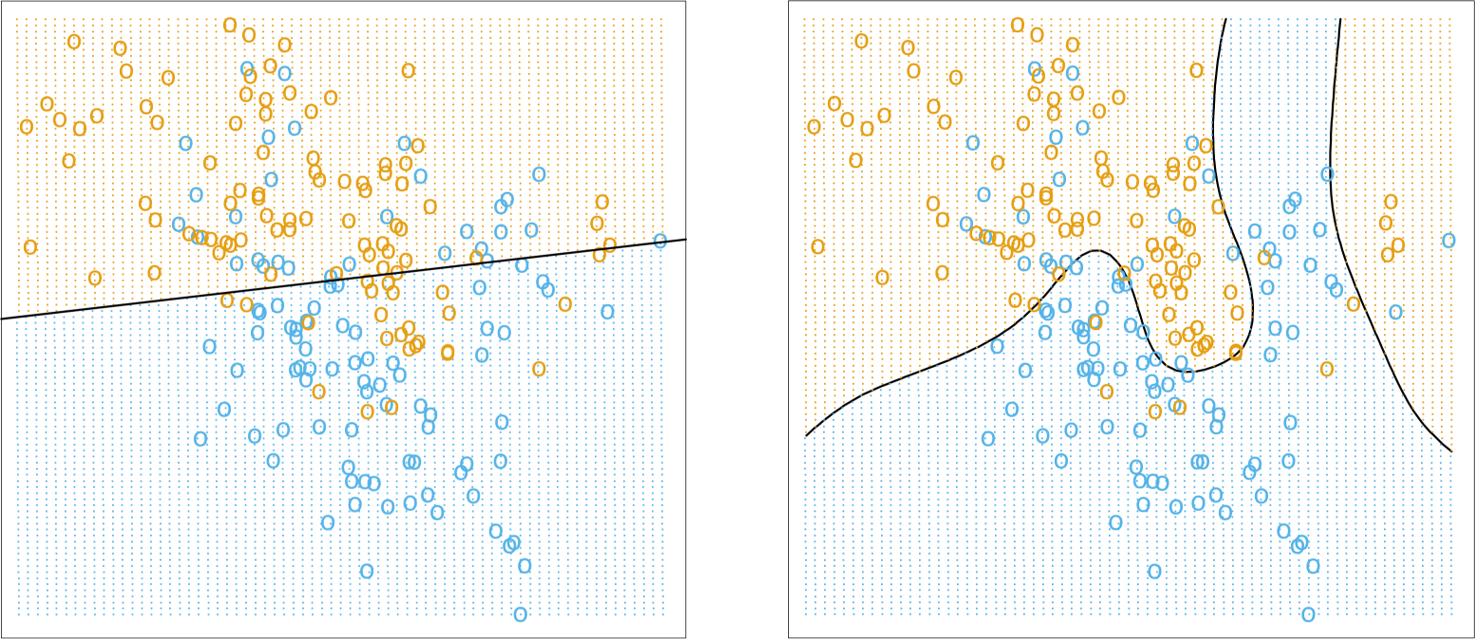

logistic regression and linear regression are classical models which are often used by statisticians but rarely in a classification setting (the estimated coefficients are often used to determine the features’ importance); one of their strengths is that the machinery of standard statistical theory (hypothesis testing, confidence intervals, etc.) is still available to the analyst, but they are easily affected by variance inflation in the presence of predictor multi-colinearity, and the stepwise variable selection process that is typically used is problematic – regularization methods would be better suited in general [148] (see Figure (fig:class3) for illustrations);

neural networks have become popular recently due to the advent of deep learning; they might provide the prototypical example of a black box algorithm as they are hard to interpret; another issue is that they require a fair amount of data to train properly – we will have more to say on the topic in a later chapter;

decision trees are perhaps the most commons of all data science algorithms, but they tend to overfit the data when they are not pruned correctly, a process which often has to be done manually (see Figure (fig:class3) for an illustration) – we shall discuss the pros an cons of decision trees in general in Decision Trees;

naı̈ve Bayes classifiers have known quite a lot of success in text mining applications (more specifically as the basis of powerful spam filters), but, embarrassingly, no one is quite sure why they should work as well as they do given that one of their required assumptions (independence of priors) is rarely met in practice (see Figure (fig:class3) for an illustration);

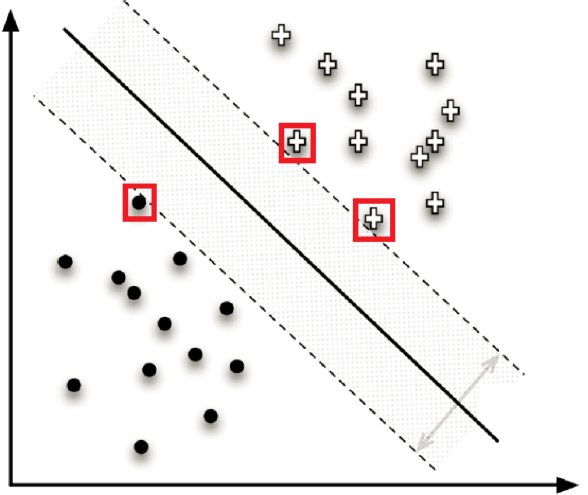

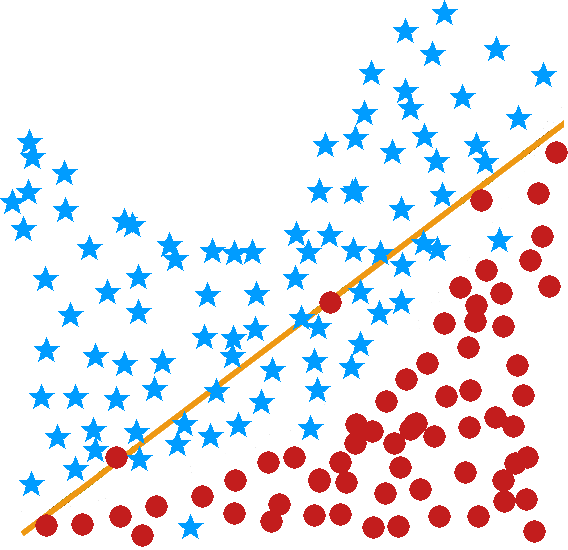

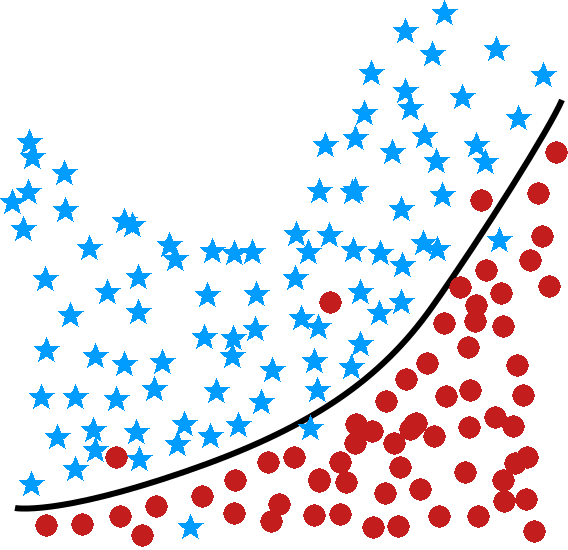

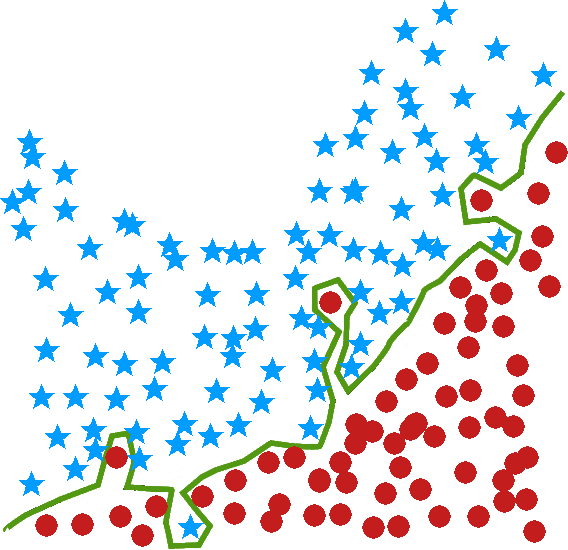

support vector machines attempt to separate the dataset by “fitting” as wide of a “tube” as possible through the classes (subjected to a number of penalty constraints); they have also known successes, most notably in the field of digital handwriting recognition, but their decision boundaries (the tubes in question) tend to be non-linear and quite difficult to interpret; nevertheless, they may help mitigate some of the difficulties associated with big data (see Figure (fig:class2) for an illustration);

nearest neighbours classifiers basically implement a voting procedure and require very little assumptions about the data, but they are not very stable as adding training points may substantially modify the boundary (see Figures (fig:class3) and (fig:class2) for illustrations);

Boosting methods [129], [149] and Bayesian methods ([5], [150], [151]) are also becoming more popular.

Figure 16.9: Illustrations of various classifiers (linear regression, top left; optimal Bayes, top right; 1NN and 15NN, middle left and right, respectively, on an artificial dataset (from [85]); decision tree depicting the chances of survival for various disasters (fictional, based on [152]). Note that linear regression is more stable, simpler to describe, but less accurate than NN and optimal Bayes.

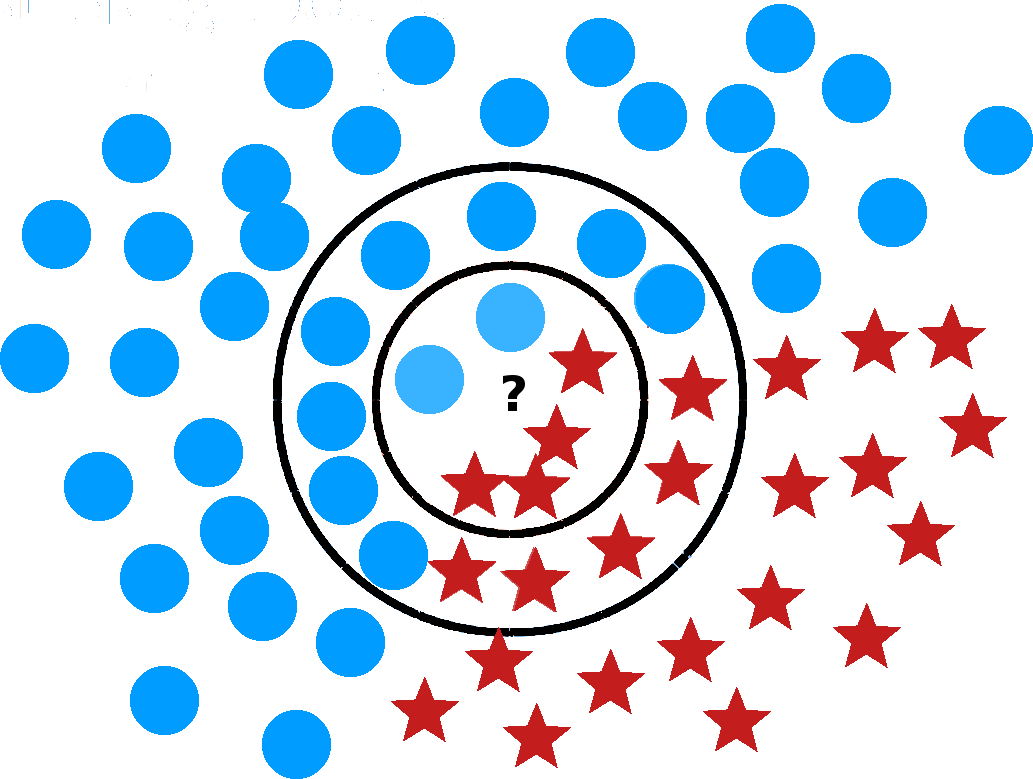

Figure 16.10: Illustration of a nearest neighbour (left) and a support vector machines classifier (right, based on [77]). What is the 6NN prediction for the location marked by a question mark? What about the 19NN prediction?

16.3.3 Decision Trees

In order to highlight the relative simplicity of most classification algorithms, we will discuss the workings of ID3, a historically significant decision tree algorithm.60

Classification trees are perhaps the most intuitive of all supervised methods: classification is achieved by following a path up the tree, from its root, through its branches, and ending at its leaves (alghough typically the tree is depicted with its root at the top and its leaves at the bottom).

To make a prediction for a new instance, it suffices to follow the path down the tree, reading the prediction directly once a leaf is reached. It sounds simple enough in theory, but in practice, creating the tree and traversing it might be time-consuming if there are too many variables in the dataset (due to the criterion that is used to determine how the branches split).

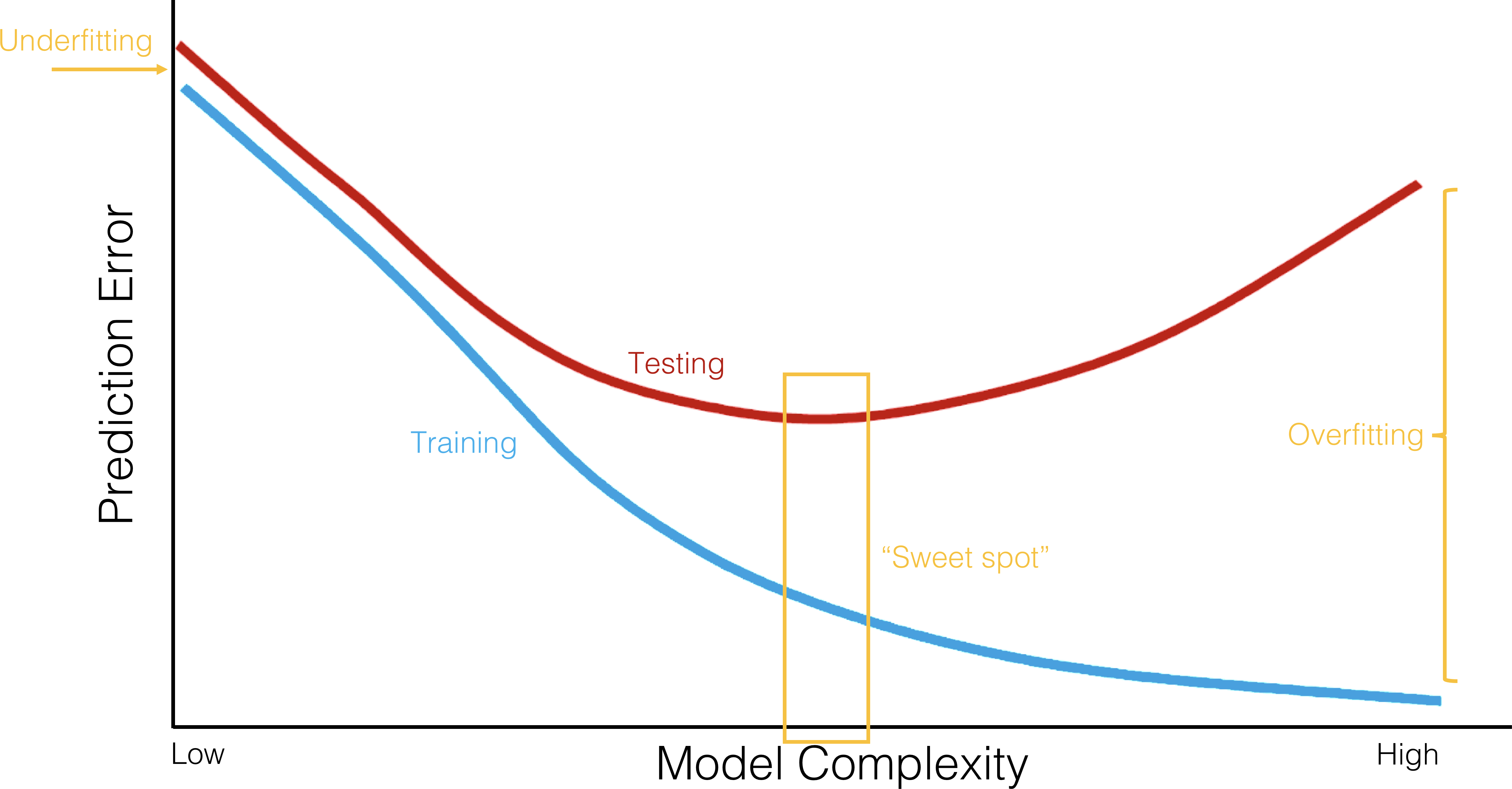

Prediction accuracy can be a concern in trees whose growth is unchecked. In practice, the criterion of purity at the leaf-level (that is to say, all instances in a leaf belong to the same leaf) is linked to bad prediction rates for new instances. Other criteria are often used to prune trees, which may lead to impure leaves.

How do we grow such trees?

For predictive purposes, we need a training set and a testing set upon which to evaluate the tree’s performance. Ross Quinlan’s Iterative Dichotomizer 3 (a precursor to the widely-used C4.5 and C5.0) follows a simple procedure:

split the training data (parent) set into (children) subsets, using the different levels of a particular attribute;

compute the information gain for each subset;

select the most advantageous split, and

repeat for each node until some leaf criterion is met.

Entropy is a measure of disorder in a set . Let be the proportion of observations in belonging to category , for . The entropy of is given by If the parent set consisting of records is split into children sets containing records, respectively, then the information gained from the split is The sum term in the information gain equation is a weighted average of the entropy of the children sets.

If the split leads to little disorder in the children, then is high; if the split leads to similar disorder in both children and parent, then is low.

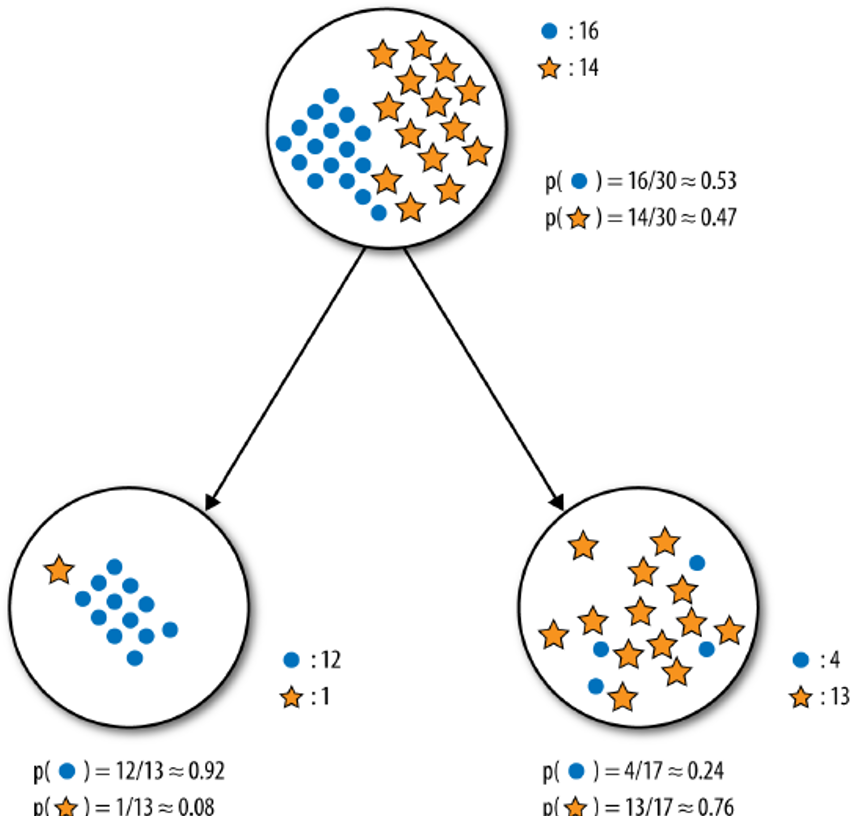

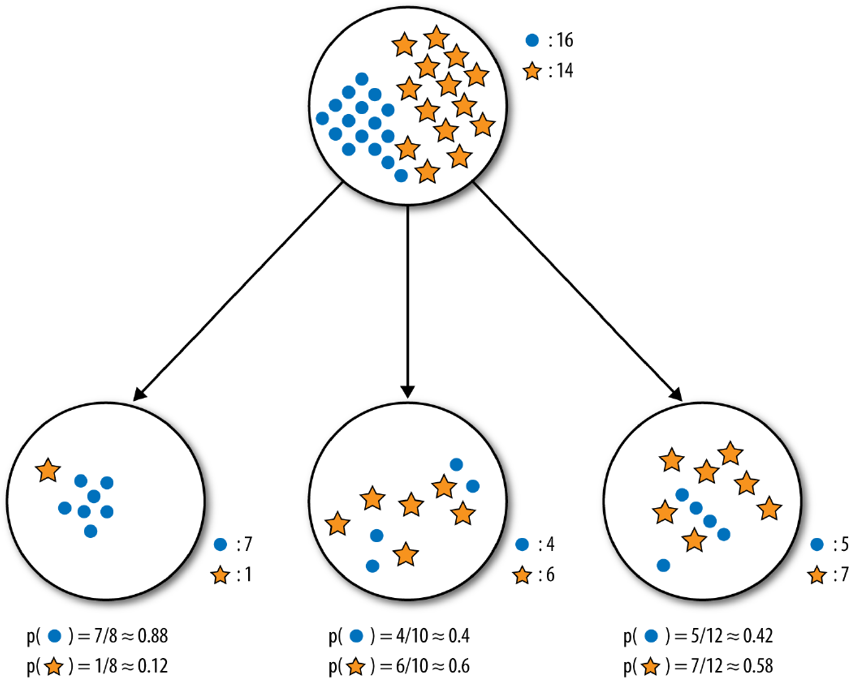

Consider, as in Figure 16.11, two splits shown for a parent set with 30 observations separated into 2 classes: and .

Visually, it appears as though the binary split does a better job of separating the classes. Numerically, the entropy of the parent set is For the binary split (on the left), leading to the children set (left) and (right), the respective entropies are and so that the information gained by that split is

On its own, this value is not substantially meaningful – it is only in comparison to the information gained from other splits that it becomes useful.

A similar computation for the ternary split leads to , which is indeed smaller than the information gained by the binary split – of these two options, ID3 would select the first as being most advantageous.

Decision trees have numerous strengths: they

are easy to interpret, providing, as they do, a white box model – predictions can always be explained by following the appropriate paths;

can handle numerical and categorical data simultaneously, without first having to “binarise” the data;

can be used with incomplete datasets, if needed (although there is still some value in imputing missing observations);

allow for built-in feature selection as less relevant features do not tend to be used as splitting features;

make no assumption about independence of observations, underlying distributions, multi-colinearity, etc., and can thus be used without the need to verify assumptions;

lend themselves to statistical validation (in the form of cross-validation), and

are in line with human decision-making approaches, especially when such decisions are taken deliberately.

On the other hand, they are

not usually as accurate as other more complex algorithms, nor as robust, as small changes in the training data can lead to a completely different tree, with a completely different set of predictions;[This can become problematic when presenting the results to a client whose understanding of these matters is slight.]

particularly vulnerable to overfitting in the absence of pruning — and pruning procedures are typically fairly convoluted (some algorithms automate this process, using statistical tests to determine when a tree’s “full” growth has been achieved), and

biased towards categorical features with high number of levels, which may give such variables undue importance in the classification process.

Information gain tends to grow small trees in its puruit of pure leaves, but it is not the only splitting metric in use (Gini impurity, variance reduction, etc.).

Notes

ID3 is a precursor of C4.5, perhaps the most popular decision tree algorithm on the market. There are other tree algorithms, such as C5.0, CHAID, MARS, conditional inference trees, CART, etc., each grown using algorithms with their own strengths and weaknesses.

Regression trees are grown in a similar fashion, but with a numerical response variable (predicted inflation rate, say), which introduces some complications [85], [129].

Decision trees can also be combined together using boosting algorithms (such as AdaBoost) or random forests, providing a type of voting procedure also known as ensemble learning – an individual tree might make middling predictions, but a large number of judiciously selected trees are likely to make good predictions, on average [85], [129], [149] – we will re-visit these concepts in a later chapter.

Additionally:

since classification is linked to probability estimation, approaches that extend the basic ideas of regression models could prove fruitful;

rare occurrences are often more interesting and more difficult to predict and identify than regular instances – historical data at Fukushima’s nuclear reactor prior to the 2011 meltdown could not have been used to learn about meltdowns, for obvious reasons;61

with big datasets, algorithms must also consider efficiency – thankfully, decision trees are easily parallelizable.

16.3.4 Performance Evaluation

As a consequence of the (so-called) No-Free-Lunch Theorem, no single classifier can be the best performer for every problem. Model selection must take into account:

the nature of the available data;

the relative frequencies of the classification sub-groups;

the stated classification goals;

how easily the model lends itself to interpretation and statistical analysis;

how much data preparation is required;

whether it can accommodate various data types and missing observations;

whether it performs well with large datasets, and

whether it is robust against small data departures from theoretical assumptions.

Past success is not a guarantee of future success – it is the analyst’s responsibility to try a variety of models. But how can the “best” model be selected?

When a classifier attempts to determine what kind of music a new customer would prefer, there is next to no cost in making a mistake; if, on the other hand, the classifier attempts to determine the presence or absence of cancerous cells in lung tissue, mistakes are more consequential. Several metrics can be used to assess a classifier’s performance, depending on the context.

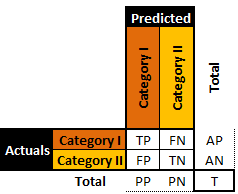

Binary classifiers (presented in the abstract in Figure 16.12) are simpler and have been studied far longer than multi-level classifiers; consequently, a larger body of evaluation metrics is available for these classifiers.

In the medical literature, for instance, , , and stand for True Positives, True Negatives, False Positives, and False Negatives, respectively.

Figure 16.12: A general binary classifier.

A perfect classifier would be one for which both , but in practice, that rarely ever happens (if at all).

Traditional performance metrics include:

sensitivity:

specificity:

precision:

negative predictive value:

false positive rate:

false discovery rate:

false negative rate:

accuracy:

score:

MCC:

informedness/ROC:

markedness:

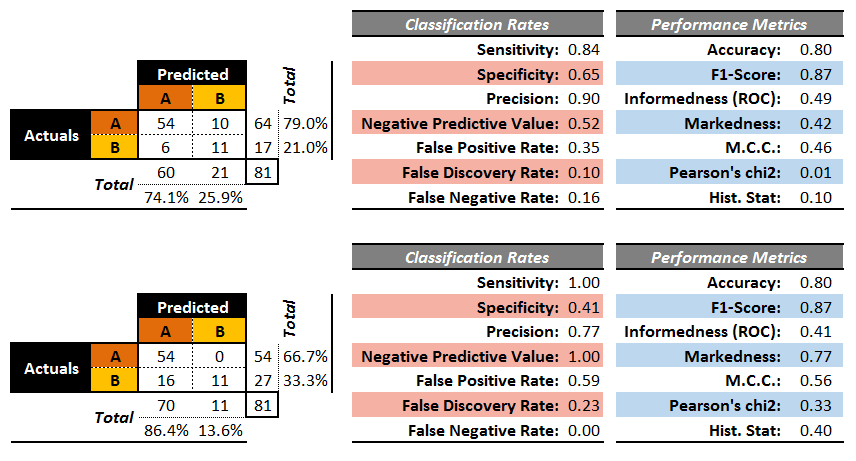

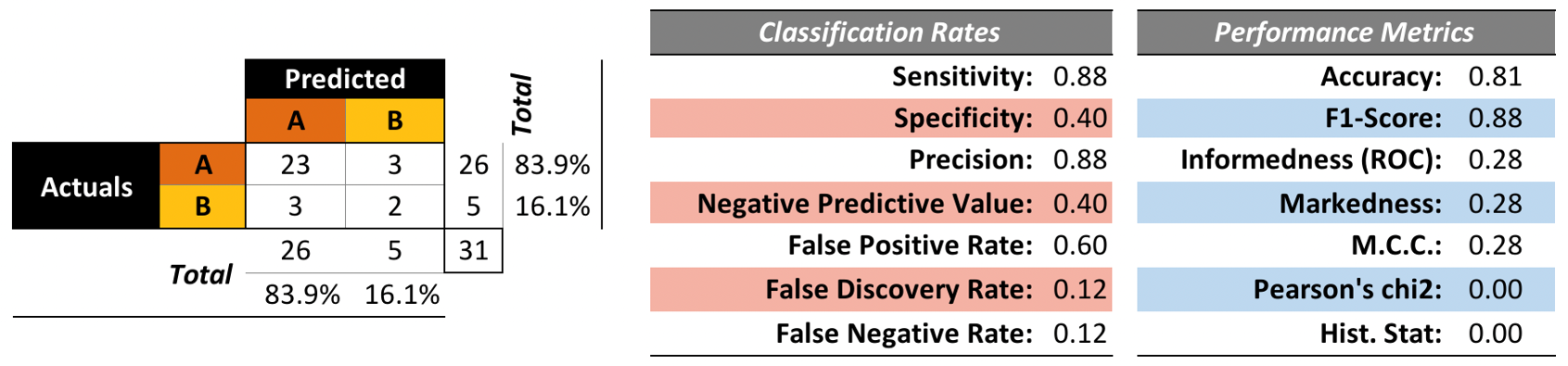

The confusion matrices of two artificial binary classifers for a testing set are shown in 16.13.

Figure 16.13: Performance metrics for two (artificial) binary classifiers.

Both classifiers have an accuracy of , but while the second classifier sometimes makes a wrong prediction for , it never does so for , whereas the first classifier makes erroneous predictions for both and .

On the other hand, the second classifier mistakenly predicts occurrence as 16 times while the first one only does so 6 times. So which one is best?

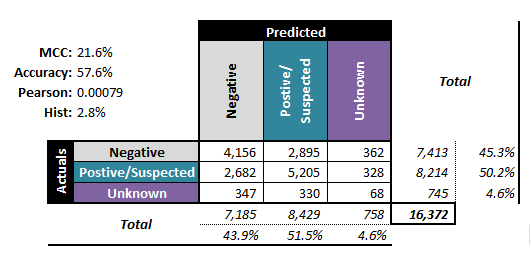

The performance metrics alone do not suffice to answer the question: the cost associated with making a mistake must also be factored in. Furthermore, it could be preferable to select performance evaluation metrics that generalize more readily to multi-level classifiers (see Figure 16.14 for examples of associated confusion matrices).

Figure 16.14: Performance metrics for (artificial) multi-level classifiers: ternary - left; senary - right (personal files).

The accuracy is the proportion of correct predictions amid all the observations; its value ranges from 0% to 100%. The higher the accuracy, the better the match, and yet, a predictive model with high accuracy may nevertheless be useless thanks to the Accuracy Paradox (see rare occurrence footnote).

The Matthews Correlation Coefficient (MCC), on the other hand, is a statistic which is of use even when the classes are of very different sizes. As a correlation coefficient between the actual and predicted classifications, its range varies from to .

If , the predicted and actual responses are identical,while if , the classifier performs no better than arandom prediction (“flip of a coin”).

It is also possible to introduce two non-traditional performance metrics (which are nevertheless well-known statistical quantities) to describe how accurately a classifier preserves the classification distribution (rather than how it behaves on an observation-by-observation basis):

Pearson’s :

Hist:

Note, however, that these are non-standard performance metrics. For a given number of levels, the smaller these quantities, the more similar the actual and predicted distributions.

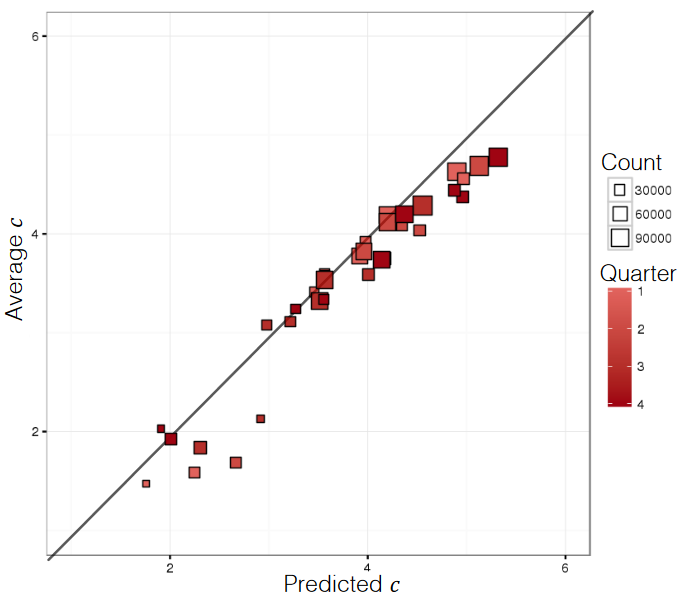

For numerical targets with predictions , the confusion matrix is not defined, but a number of classical performance evaluation metrics can be used on the testing set: the

mean squared and mean absolute errors

normalized mean squared/mean absolute errors

mean average percentage error

correlation , which is based on the notion that for good models, the predicted values and the actual values should congregate around the lines (see Figure @ref(fig:predictions for an illustration).

Figure 16.15: Predicted and actual numerical responses (personal file).

As is the case for classification, an isolated value estimation performance metric does not provide enough of a rationale for model validation/selection. One possible exception: normalized evaluation metrics do provide some information about the relative quality of performance (see [85], [129]).

16.3.5 Case Study: Minnesota Tax Audit

Large gaps between revenue owed (in theory) and revenue collected (in practice) are problematic for governments. Revenue agencies implement various fraud detection strategies (such as audit reviews) to bridge that gap.

Since business audits are rather costly, there is a definite need for algorithms that can predict whether an audit is likely to be successful or a waste of resources.

In Data Mining Based Tax Audit Selection: A Case Study of a Pilot Project at the Minnesota Department of Revenue [63], Hsu et al. study the Minnesota Department of Revenue’s (DOR) tax audit selection process with the help of classification algorithms.

Objective

The U.S. Internal Revenue Service (IRS) estimated that there were large gaps between revenue owed and revenue collected for 2001 and for 2006. Using DOR data, the authors sought to increase efficiency in the audit selection process and to reduce the gap between revenue owed and revenue collected.

Methodology

The authors took the following steps:

data selection and separation: experts selected several hundred cases to audit and divided them into training, testing and validating sets;

classification modeling using MultiBoosting, Naïve Bayes, C4.5 decision trees, multilayer perceptrons, support vector machines, etc;

evaluation of all models was achieved by testing the model on the testing set – models originally performed poorly on the testing set until the size of the business being audited was recognized to have an effect, leading to two separate tasks (large and small businesses);

model selection and validation was done by comparing the estimated accuracy between different classification model predictions and the actual field audits. Ultimately, MultiBoosting with Naïve Bayes was selected as the final model; the combination also suggested some improvements to increase audit efficiency.

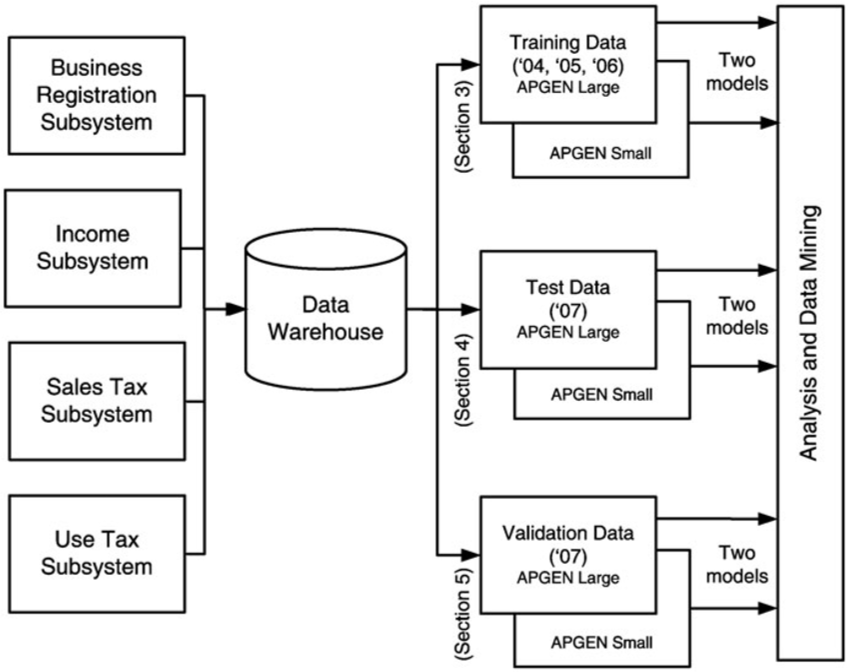

Data

The data consisted of selected tax audit cases from 2004 to 2007, collected by the audit experts, which were split into training, testing and validation sets:

the training data set consisted of Audit Plan General (APGEN) Use Tax audits and their results for the years 2004-2006;

the testing data consisted of APGEN Use Tax audits conducted in 2007 and was used to test or evaluate models (for Large and Smaller businesses) built on the training dataset,

while validation was assessed by actually conducting field audits on predictions made by models built on 2007 Use Tax return data processed in 2008.

None of the sets had records in common (see Figure 16.16).

Figure 16.16: Data sources for APGEN mining [63]. Note the 6 final sets which feed the Data Analysis component.

Strengths and Limitations of Algorithms

The Naïve Bayes classification scheme assumes independence of the features, which rarely occurs in real-world situations. This approach is also known to potentially introduce bias to classification schemes. In spite of this, classification models built using Naïve Bayes have a successfully track record.

MultiBoosting is an ensemble technique that uses committee (i.e. groups of classification models) and “group wisdom” to make predictions; unlike other ensemble techniques, it is different from other ensemble techniques in the sense that it forms a committee of sub-committees (i.e., a group of groups of classification models), which has a tendency to reduce both bias and variance of predictions (see [86], [149] for more information on these topics).

Procedures

Classification schemes need a response variable for prediction: audits which yielded more than $500 per year in revenues during the audit period were classified as Good; the others were Bad. The various models were tested and evaluated by comparing the performances of the manual audits (which yield the actual revenue) and the classification models (the predicted classification).

The procedure for manual audit selection in the early stages of the study required:

DOR experts selecting several thousand potential cases through a query;

DOR experts further selecting several hundreds of these cases to audit;

DOR auditors actually auditing the cases, and

calculating audit accuracy and return on investment (ROI) using the audits results.

Once the ROIs were available, data mining started in earnest. The steps involved were:

Splitting the data into training, testing, and validating sets.

Cleaning the training data by removing “bad” cases.

Building (and revising) classification models on the training dataset. The first iteration of this step introduced a separation of models for larger businesses and relatively smaller businesses according to their average annual withholding amounts (the threshold value that was used is not revealed in [63]).

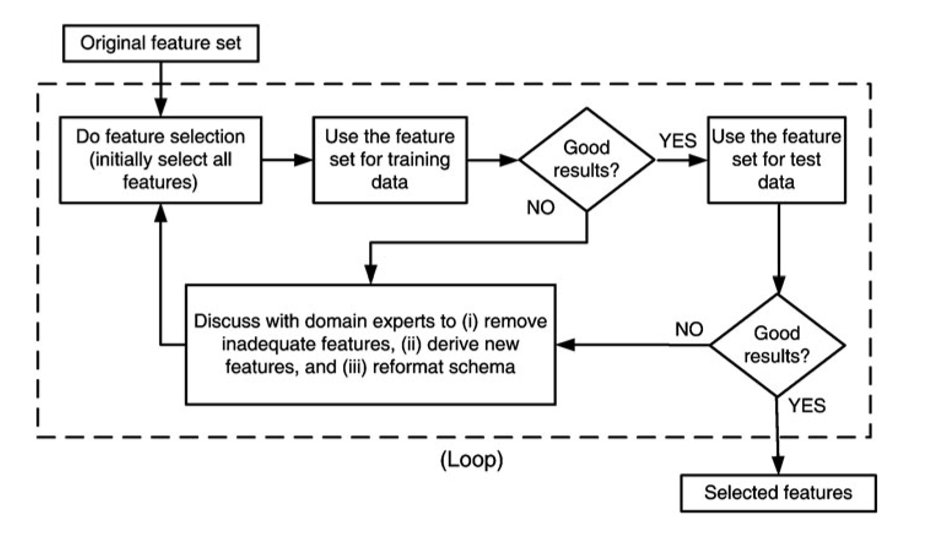

Selecting separate modeling features for the APGEN Large and Small training sets. The feature selection process is shown in Figure 16.17.

Building classification models on the training dataset for the two separate class of business (using C4.5, Naïve Bayes, multilayer perceptron, support vector machines, etc.), and assessing the classifiers using precision and recall with improved estimated ROI:

Figure 16.17: Feature selection process [63]. Note the involvement of domain experts.

Results, Evaluation and Validation

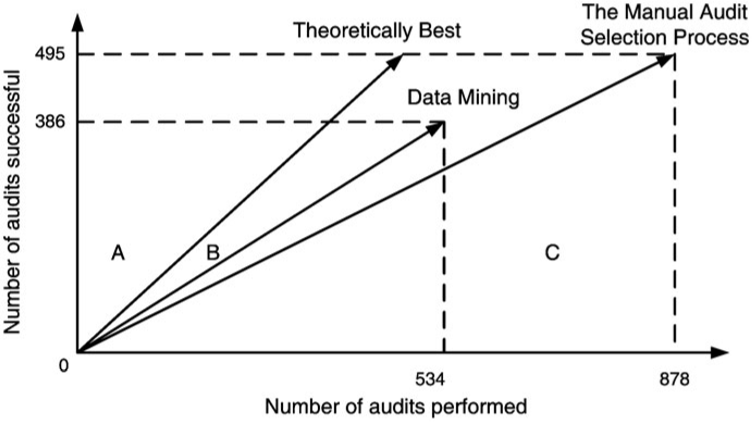

The models that were eventually selected were combinations of MultiBoosting and Naïve Bayes (C4.5 produced interpretable results, but its performance was shaky). For APGEN Large (2007), experts had put forward 878 cases for audit (495 of which proved successful), while the classification model suggested 534 audits (386 of which proved successful). The theoretical best process would find 495 successful audits in 495 audits performed, while the manual audit selection process needed 878 audits in order to reach the same number of successful audits.

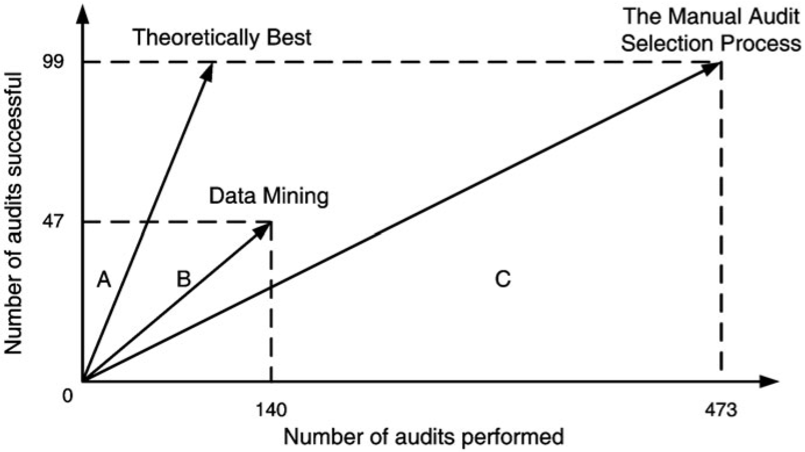

For APGEN Small (2007), 473 cases were recommended for audit by experts (only 99 of which proved successful); in contrast, 47 out of the 140 cases selected by the classification model were successful. The theoretical best process would find 99 successful audits in 99 audits performed, while the manual audit selection process needed 473 audits in order to reach the same number of successful audits.

In both cases, the classification model improves on the manual audit process: roughly 685 data mining audits to reach 495 successful audits of APGEN Large (2007), and 295 would be required to reach 99 successful audits for APGEN Small (2007), as can be seen in Figure 16.18.

Figure 16.18: Audit resource deployment efficiency [63]. Top: APGEN Large (2007). Bottom: APGEN Small (2007). In both cases, the Data Mining approach was more efficient (the slope of the Data Mining vector is ‘closer’ to the Theoretical Best vector than is the Manual Audit vector).

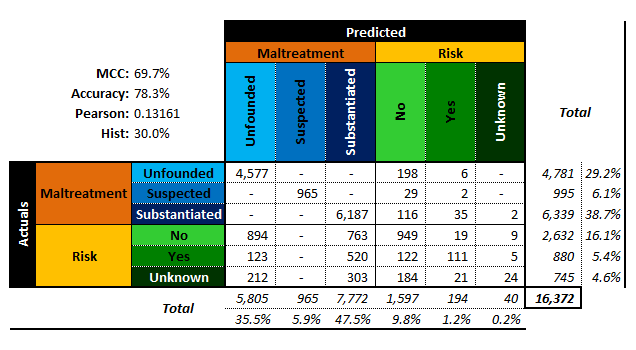

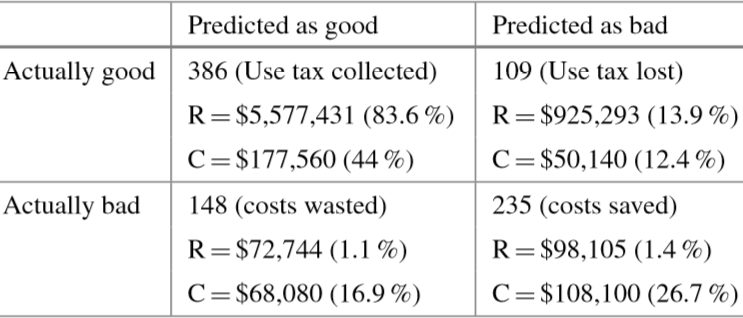

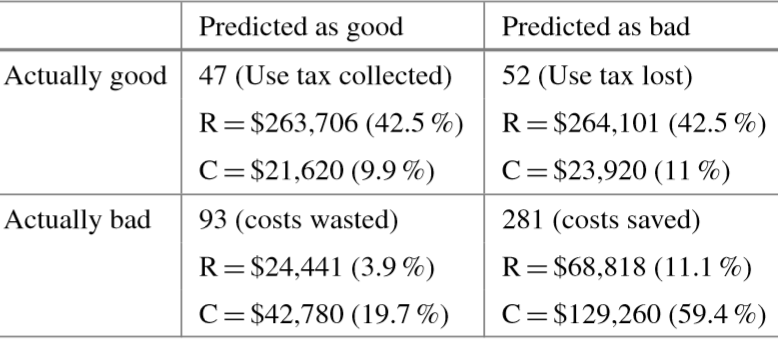

Figure 16.19 presents the confusion matrices for the classification model on both the APGEN Large and Small 2007 datasets.

Figure 16.19: Confusion matrices for audit evaluation [63]. Top: APGEN Large (2007). Bottom: APGEN Small (2007). stands for revenues, for collection costs.

The revenue and collection cost entries can be read as follows: the 47 successful audits which were correctly identified by the model for APGEN Small (2007) correspond to cases consuming 9.9% of collection costs but generating 42.5% of the revenues. Similarly, the 281 bad audits correctly predicted by the model represent notable collection cost savings. These are associated with 59.4% of collection costs but they generate only 11.1% of the revenues.

Once the testing phase of the study was conpleted, the DOR validated the data mining-based approach by using the models to select cases for actual field audits in a real audit project. The prior success rate of audits for APGEN Use tax data was 39% while the model was predicting a success rate of 56%; the actual field success rate was 51%.

Take-Aways

A substantial number of models were churned out before the team made a final selection. Past performance of a model family in a previous project can be used as a guide, but it provides no guarantee regarding its performance on the current data – remember the No Free Lunch (NFL) Theorem [153]: nothing works best all the time!

There is a definite iterative feel to this project: the feature selection process could very well require a number of visits to domain experts before the feature set yields promising results. This is a valuable reminder that the data analysis team should seek out individuals with a good understand of both data and context. Another consequence of the NFL is that domain-specific knowledge has to be integrated in the model in order to beat random classifiers, on average [154].

Finally, this project provides an excellent illustration that even slight improvements over the current approach can find a useful place in an organization – data science is not solely about Big Data and disruption!

16.3.6 Toy Example: Kyphosis Dataset

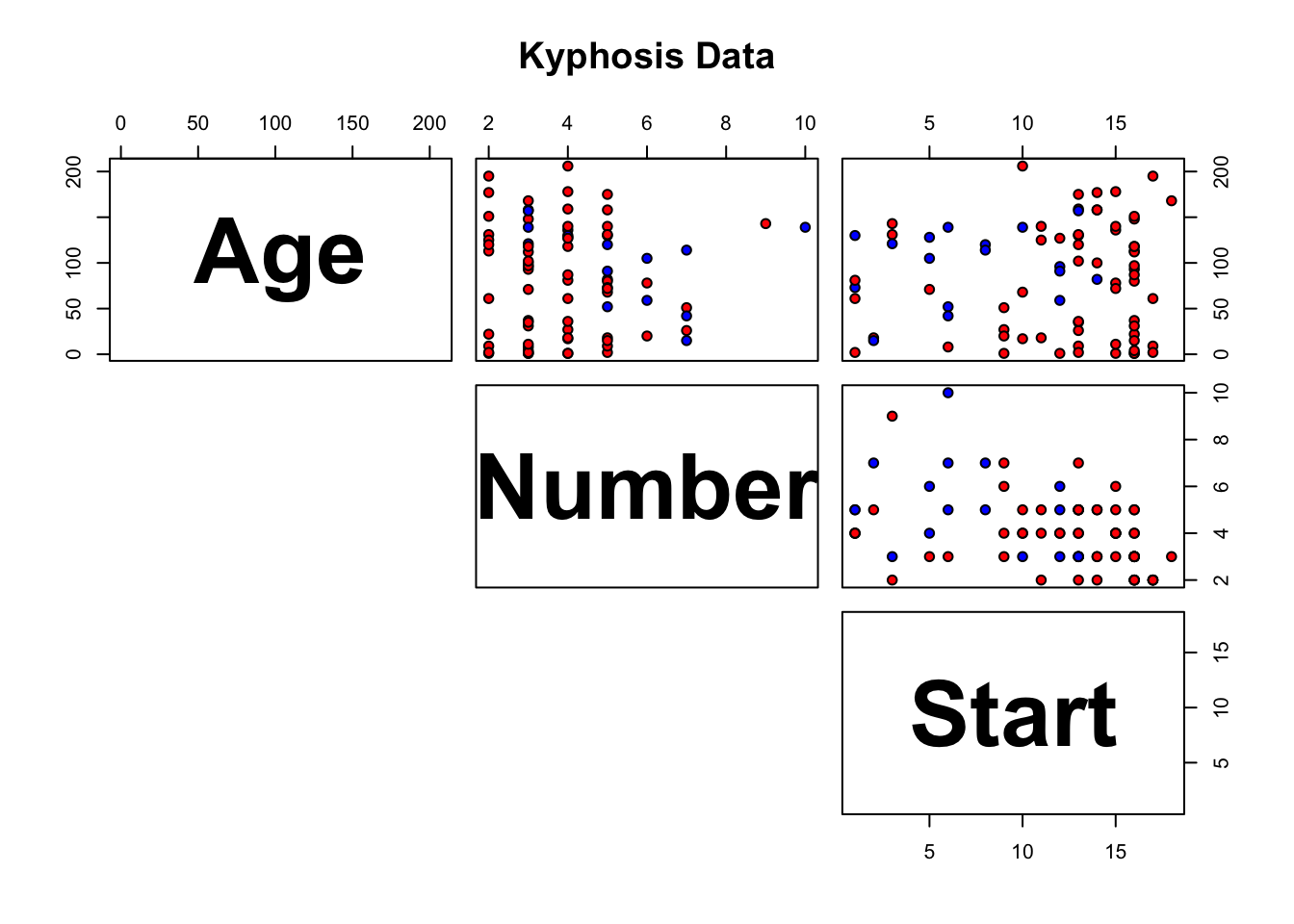

As a basic illustration of these concepts, consider the following example. Kyphosis is a medical condition related to an excessive convex curvature of the spine. Corrective spinal surgery is at times performed on children.

A dataset of 81 observations and 4 attributes has been collected (we have no information on how the data was collected and how representative it is likely to be, but those details can be gathered from [155]).

The attributes are:

kyphosis (absent or present after presentation)

age (at time of operation, in months)

number (of vertebrae involved)

start (topmost vertebra operated on)

The natural question of interest for this dataset is:

“how do the three explanatory attributes impact the operation’s success?”

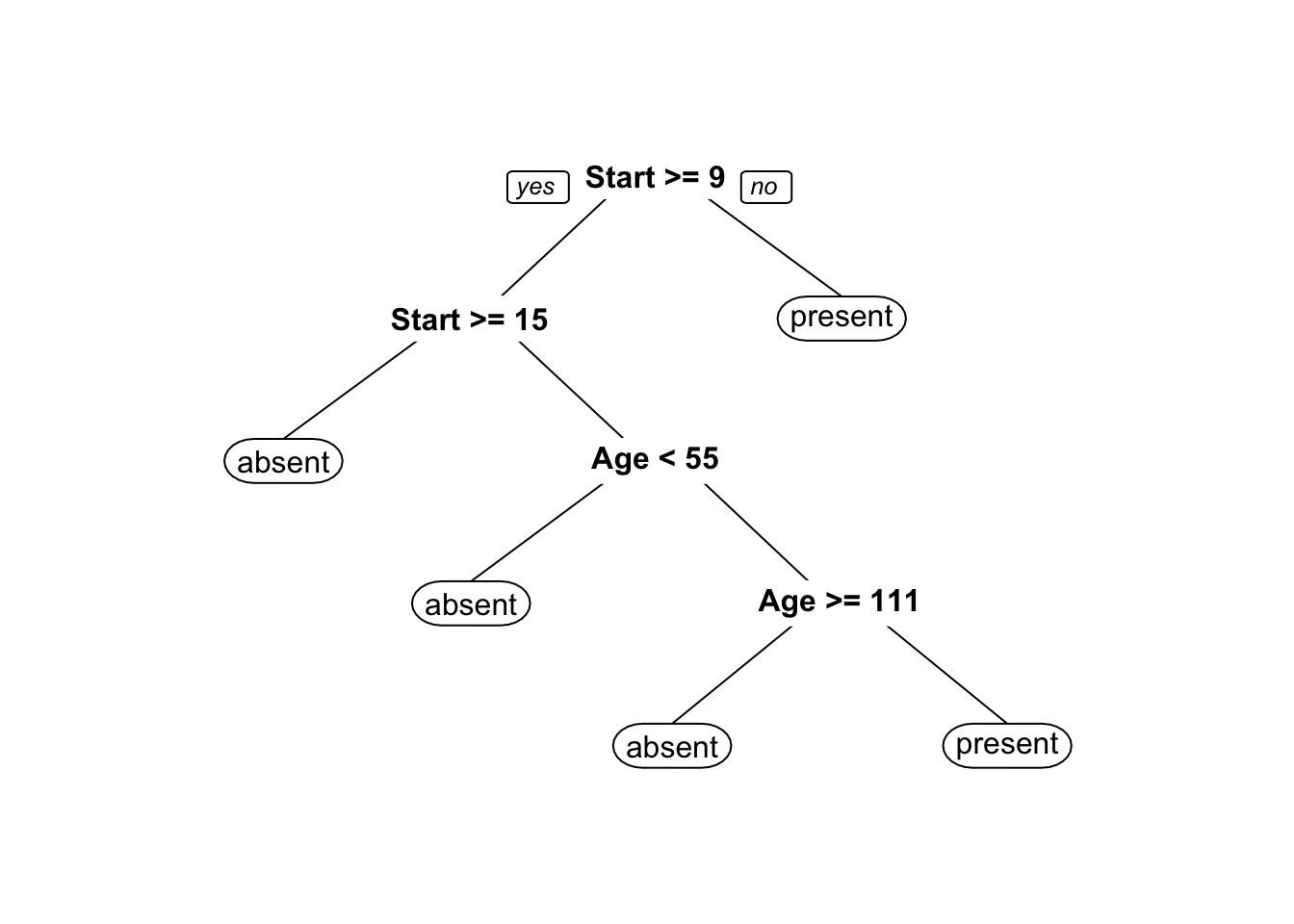

We use the rpart implementation of Classification and Regression Tree (CART) in R to generate a decision tree. Strictly speaking, this is not a predictive supervised task as we treat the entire dataset as a training set for the time being – there are no hold-out testing observations.

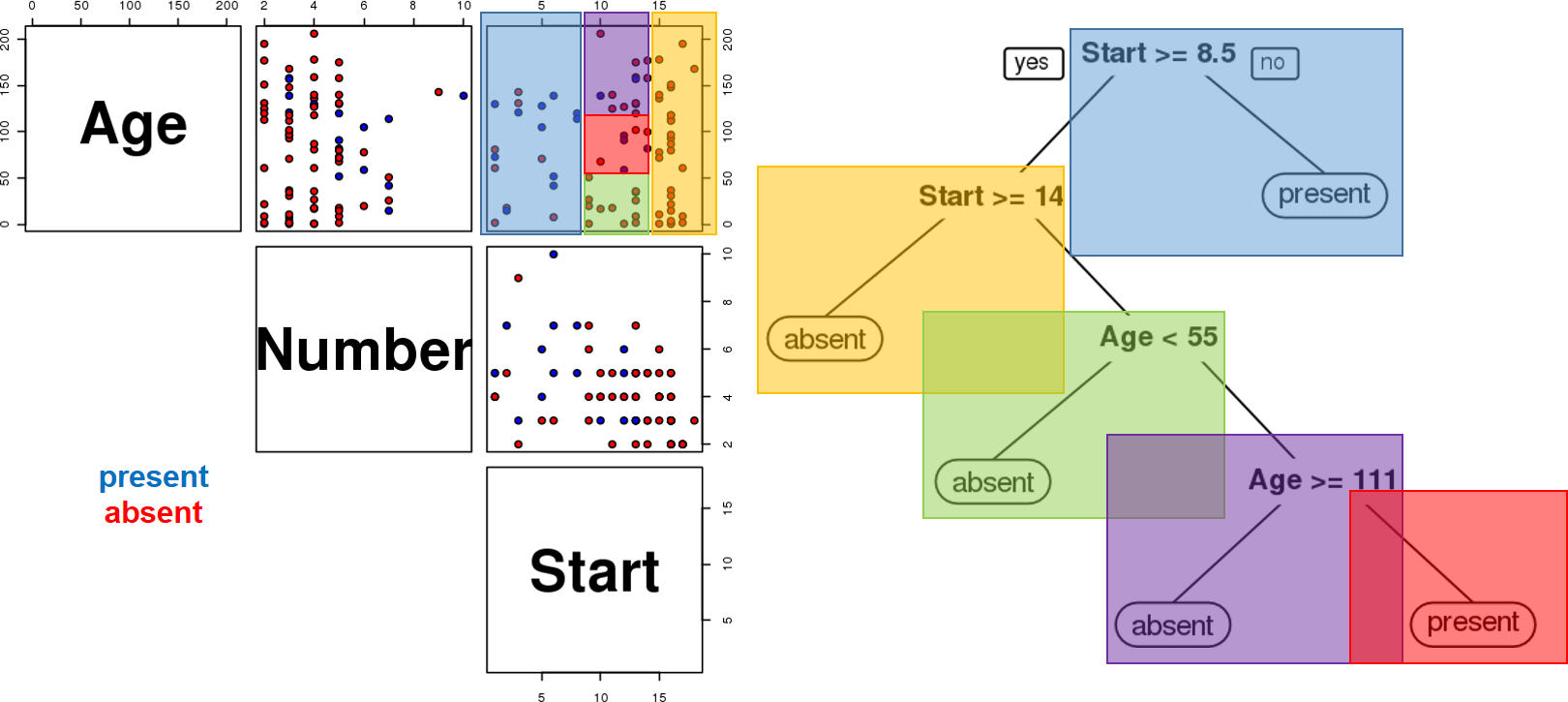

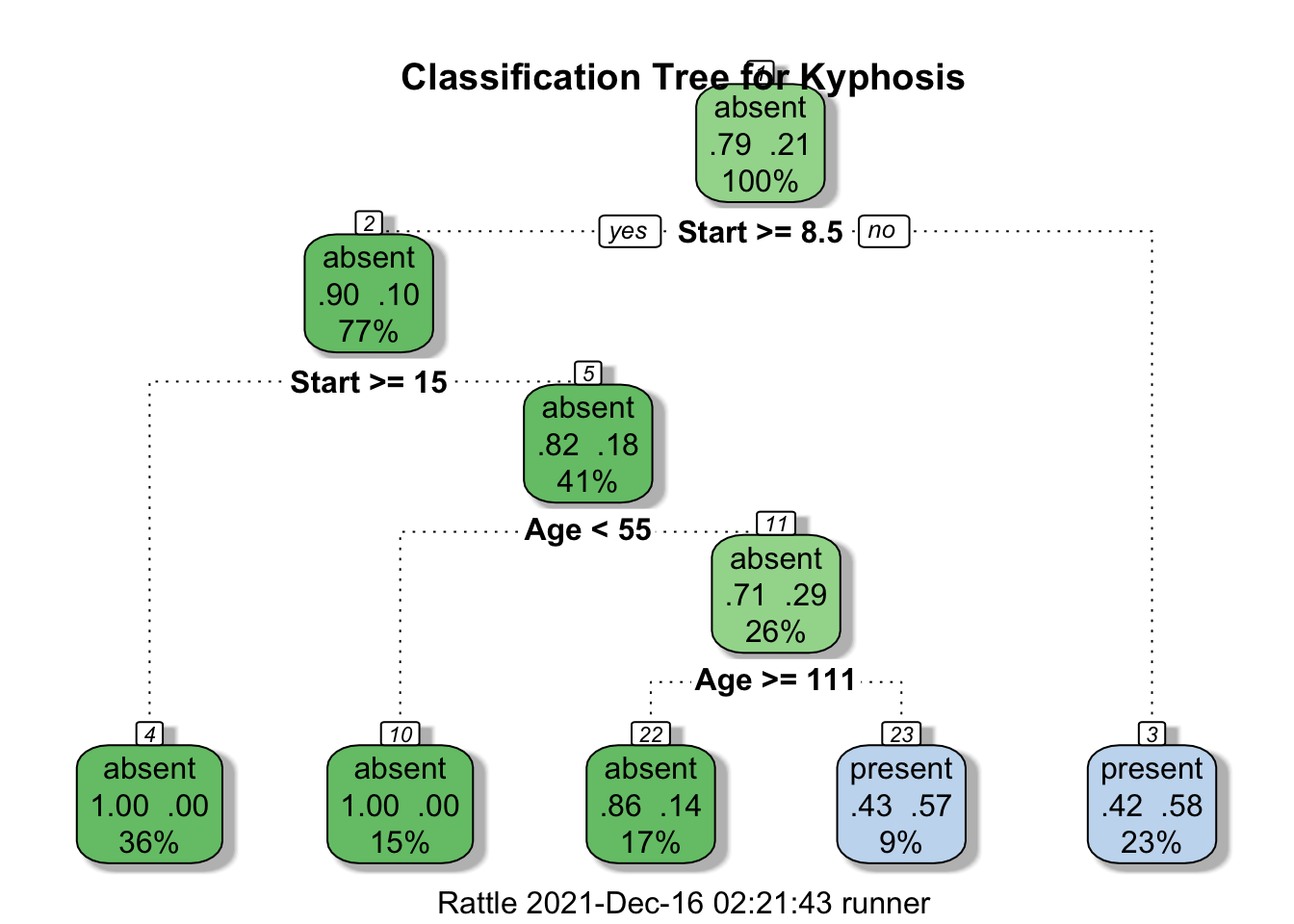

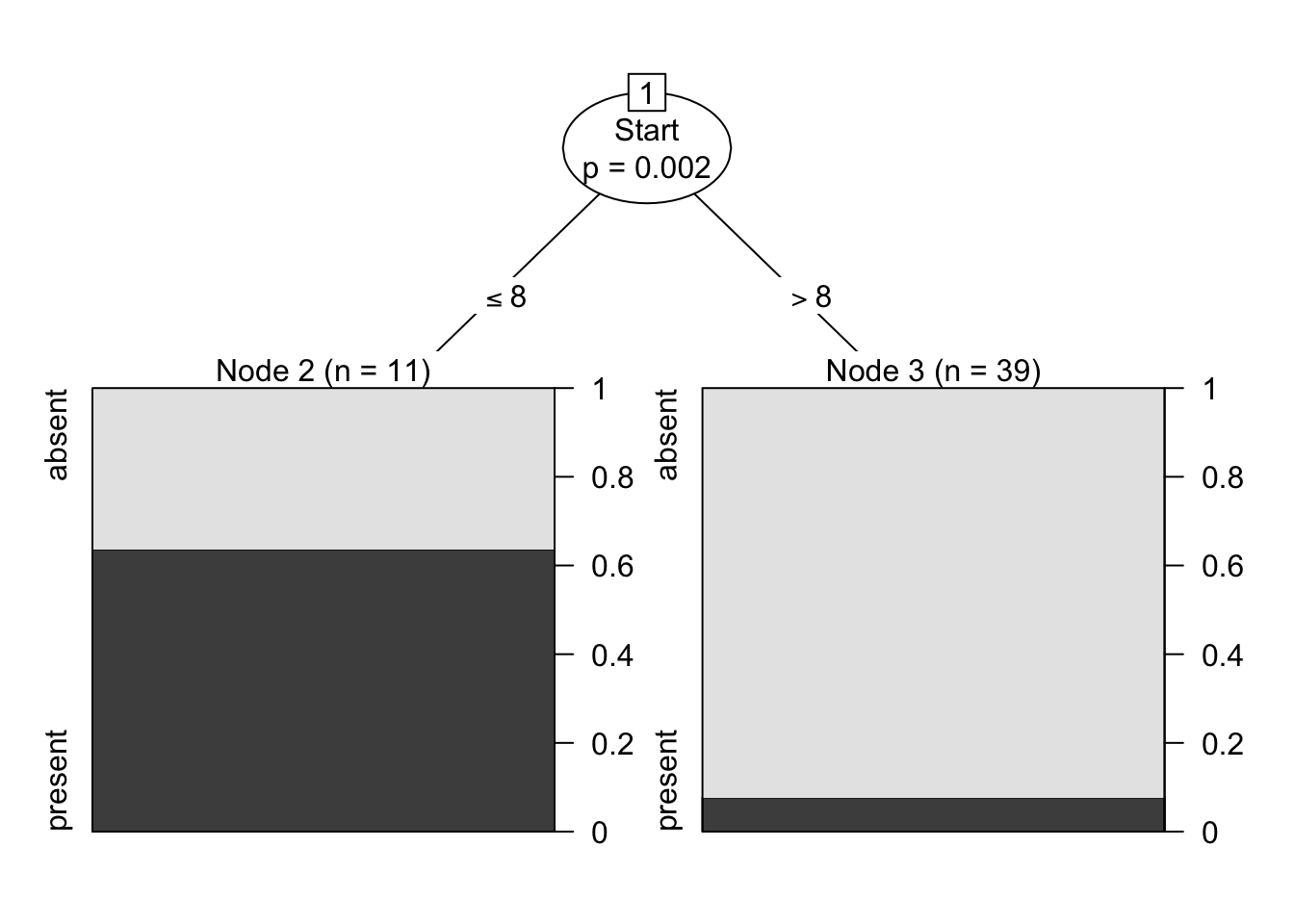

The results are shown in Figure 16.20. Interestingly, it would appear that the variable number does not play a role in determining the success of the operation (for the observations in the dataset).

Figure 16.20: Kyphosis decision tree visualization. Only two features are used to construct the tree. We also note that the leaves are not pure – there are blue and red instances in 3 of the 5 classification regions.

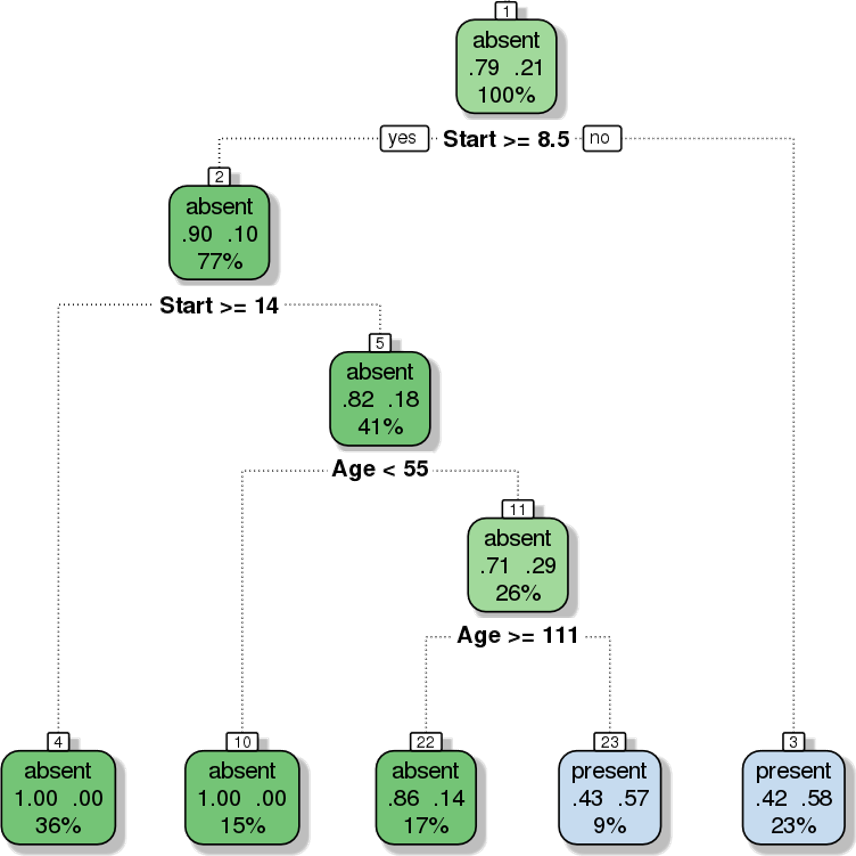

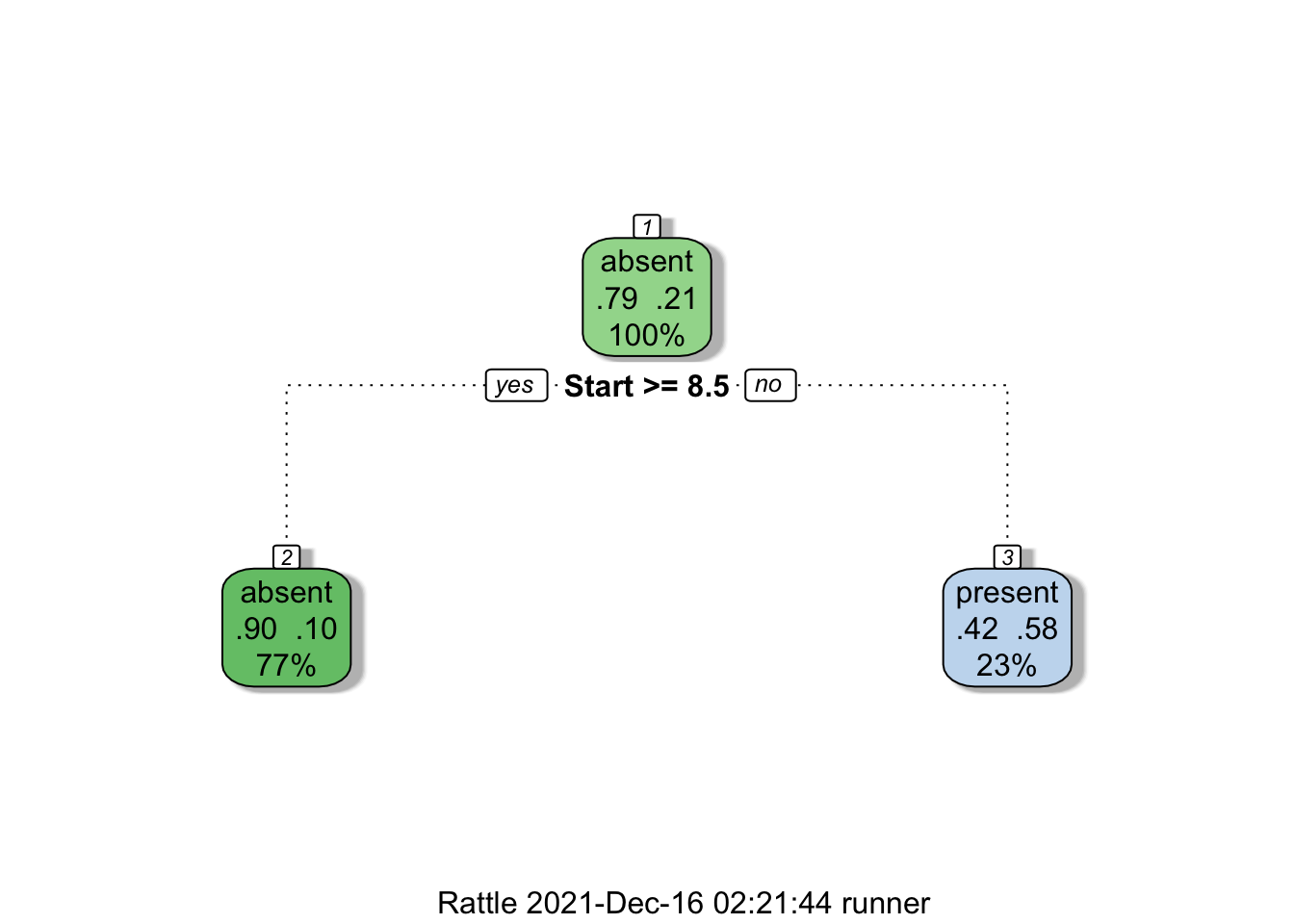

Furthermore, the decision tree visualization certainly indicates that its leaves are not pure (see Figure 16.21). Some additional work suggests that the tree is somewhat overgrown and that it could benefit from being pruned after the first branching point.

Figure 16.21: Pruning a decision tree – the original tree (left) is more accurate/more complex than the pruned tree (right).

At any rate, it remains meangingless to discuss the performance of the tree for predictive purposes if we are not using a holdout testing sample (not to say anything about the hope of generalizing to a larger population).

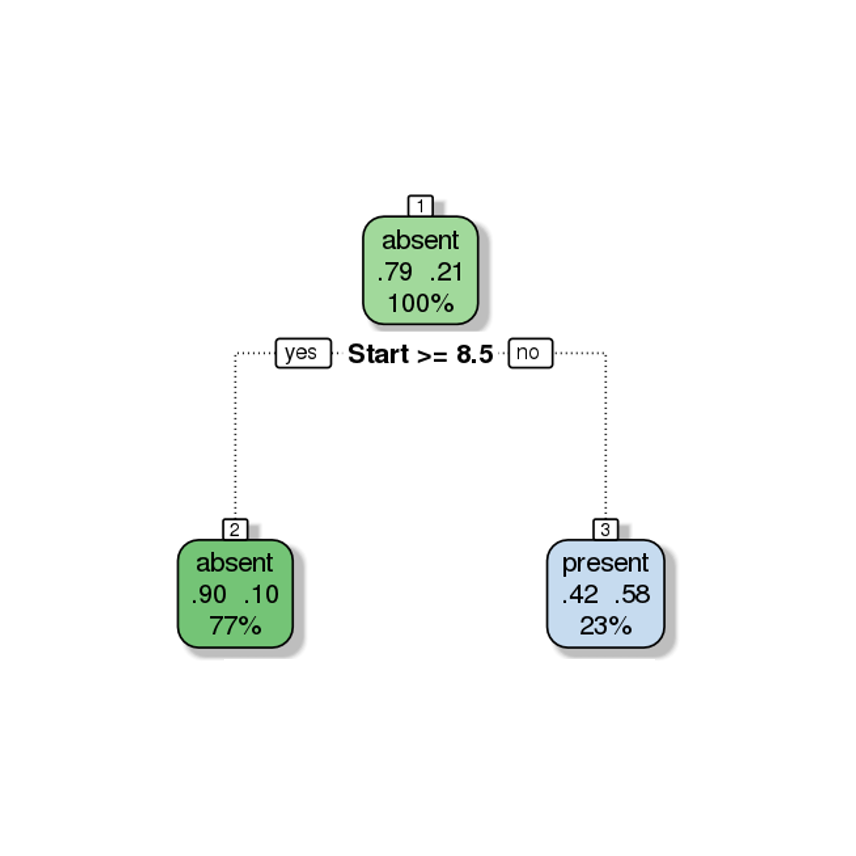

To that end, we trained a model on 50 randomly selected observations and evaluated the performance on the remaining 31 observations (the structure of the tree is not really important at this stage). The results are shown in Figure 16.22. Is the model “good”?

Figure 16.22: Kyphosis decision tree – performance evaluation. The accuracy and score are good, but the false discovery rate and false negative rate are not so great. This tree is good at predicting successful surgeries, but not fantastic at predicting failed surgeries. Is it still useful?

It is difficult to answer this question in the machine learning sense without being able to compare its performance metrics with those of other models (or families of models).62

In Model Selection, we will briefly discuss how estimate a model’s true predictive error rate through cross-validation. We will also discuss a number of other issues that can arise when ML/AI methods are not used correctly.

We show how to obtain these decision trees via R in Classification: Kyphosis Dataset.

16.4 Clustering

Clustering is in the eye of the beholder, and as such, researchers have proposed many induction principles and models whose corresponding optimisation problem can only be approximately solved by an even larger number of algorithms. [V. Estivill-Castro, Why So Many Clustering Algorithms?]

16.4.1 Overview

We can make a variety of quantitative statements about a dataset, at the univariate level. For instance, we can

compute frequency counts for the variables, and

identify measures of centrality (mean, mode, median), and

dispersal (range, standard deviation), among others.

At the multivariate level, the various options include way tabulations, correlation analysis, and data visualization, among others.

While these might provide insights in simple situations, datasets with a large number of variables or with mixed types (categorical and numerical) might not yield to such an approach. Instead, insights might come in the form of aggregation or clustering of similar observations.

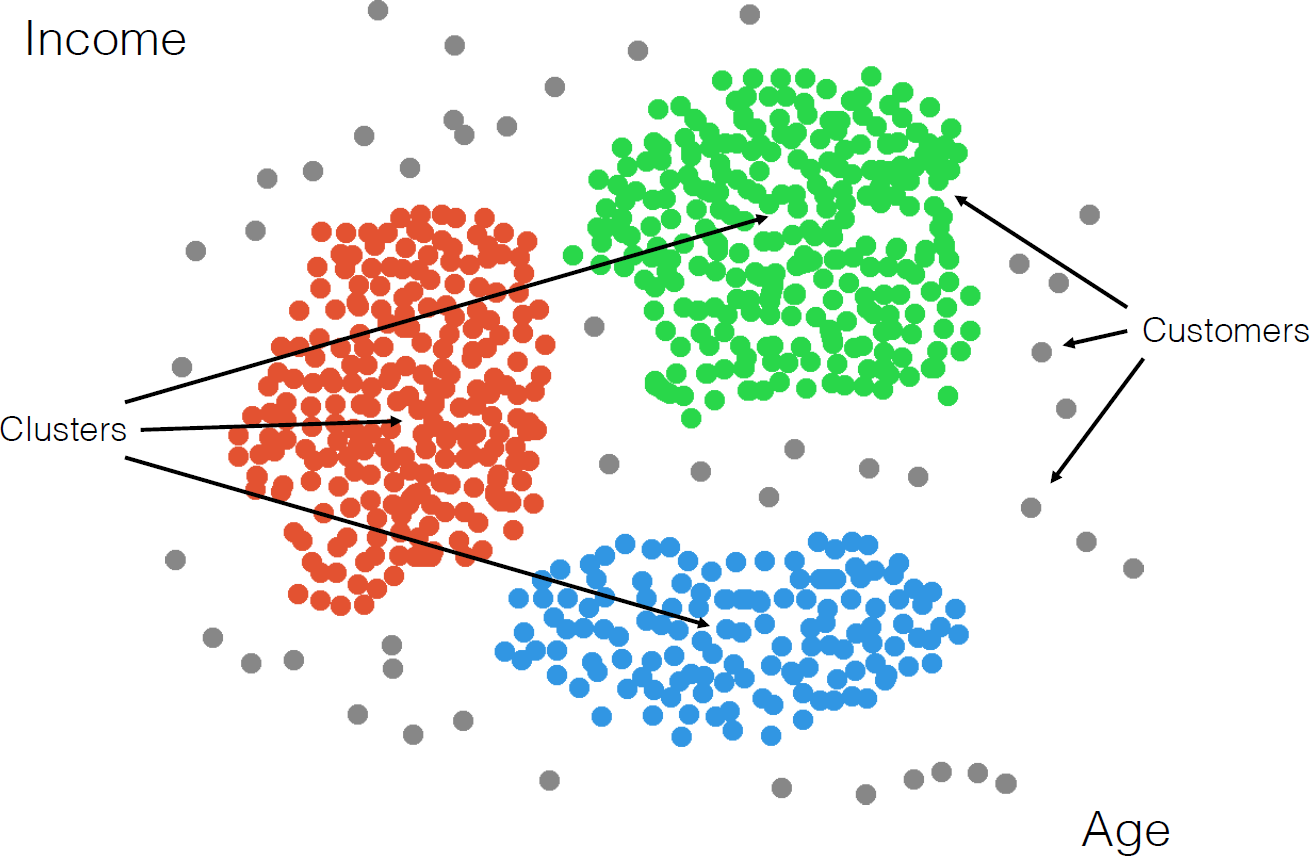

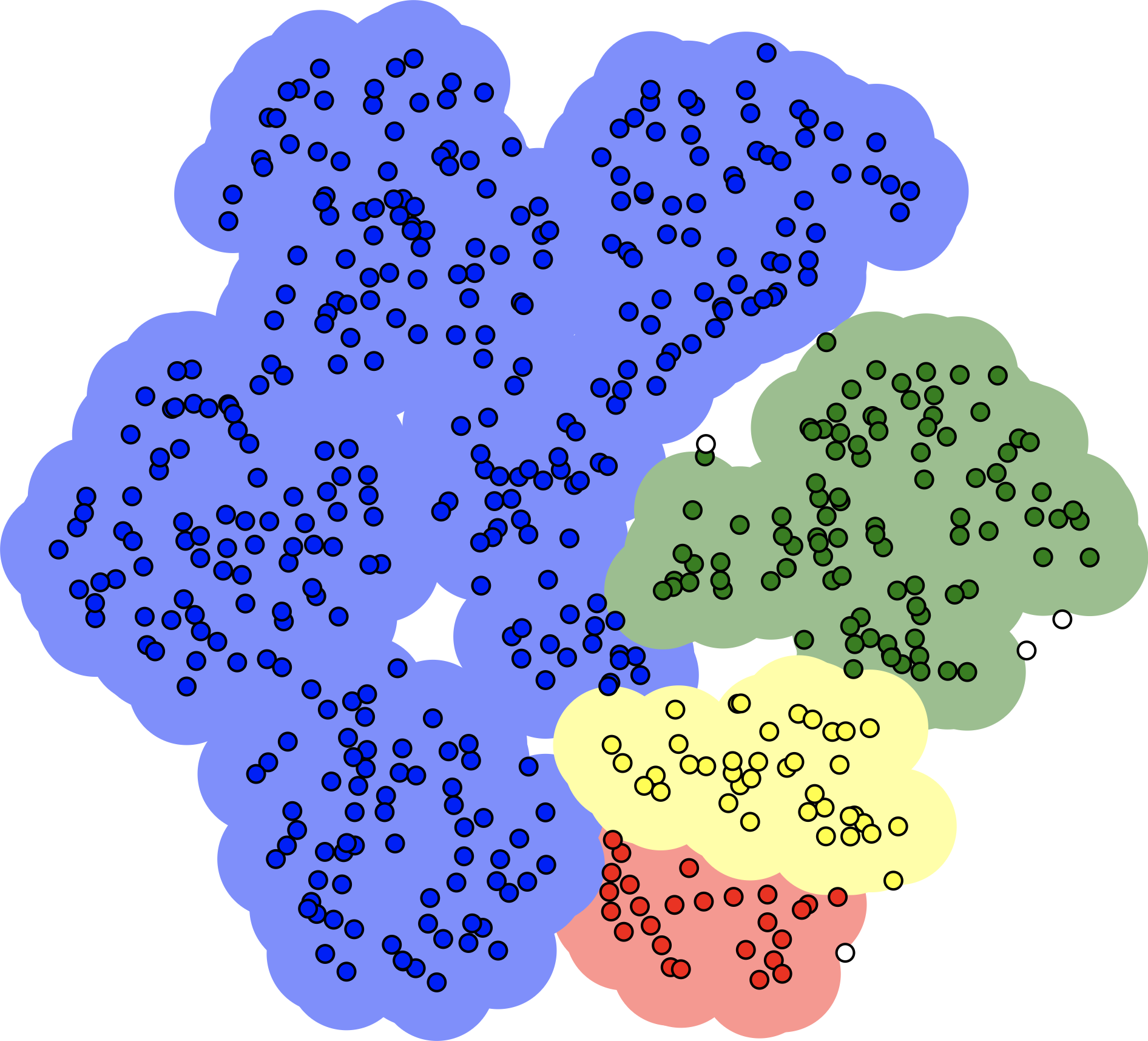

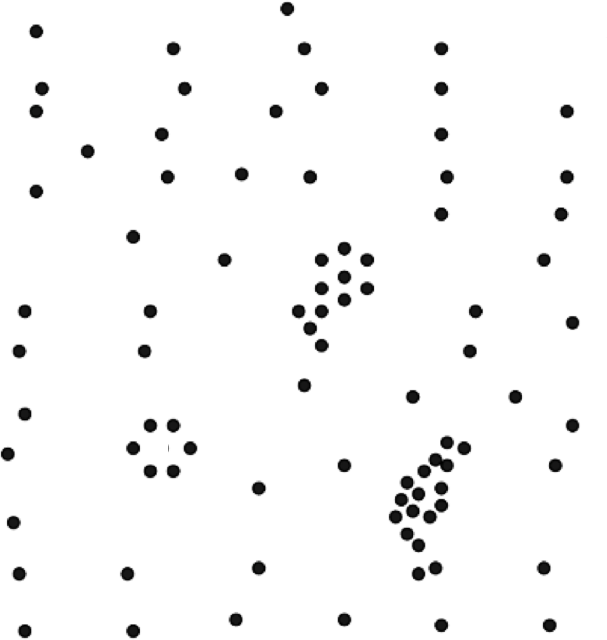

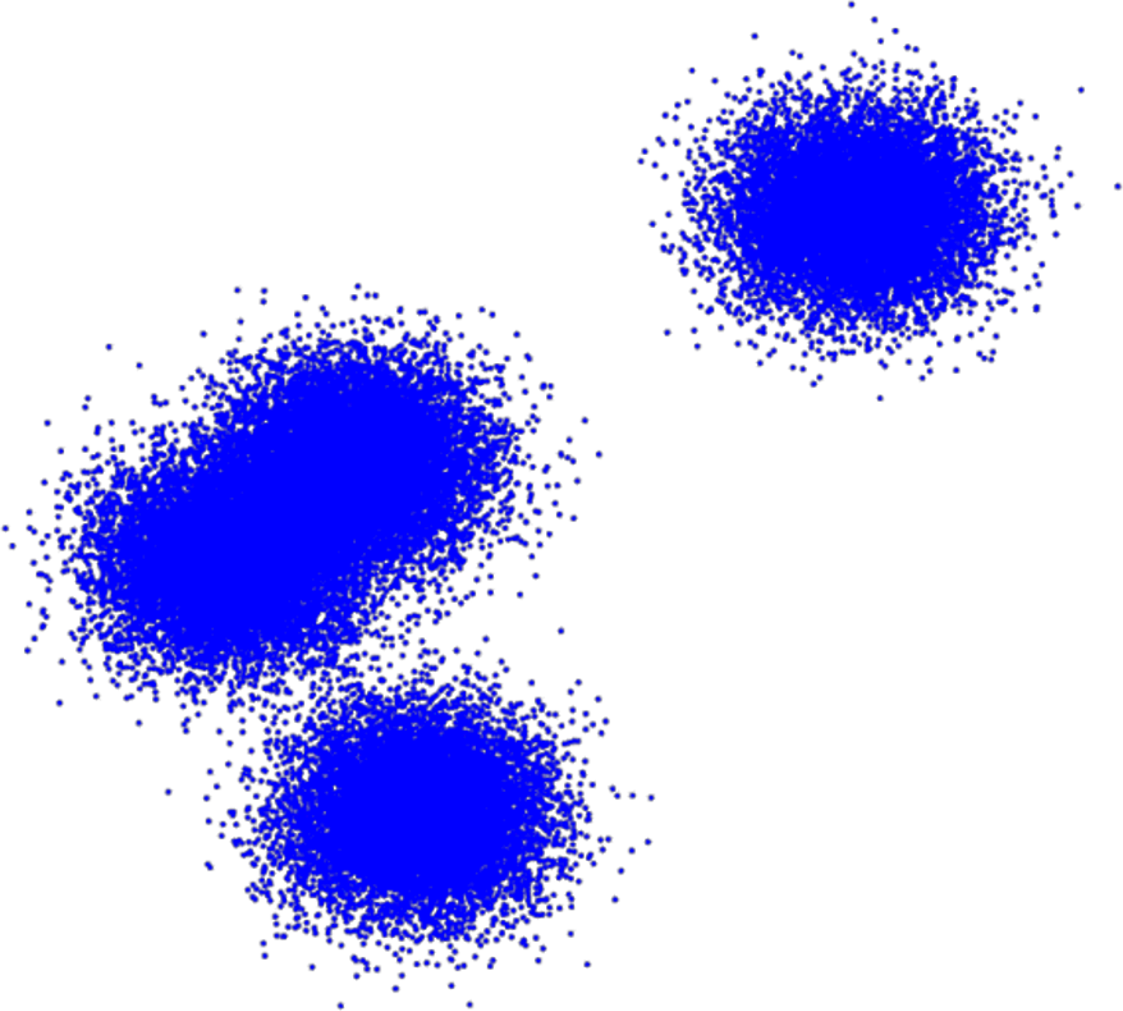

A successful clustering scheme is one that tightly joins together any number of similarity profiles (“tight” in this context refers to small variability within the cluster, see 16.23 for an illustration).

Figure 16.23: Clusters and outliers in an artificial dataset [personal file].

A typical application is one found in search engines, where the listed search results are the nearest similar objects (relevant webpages) clustered around the search item.

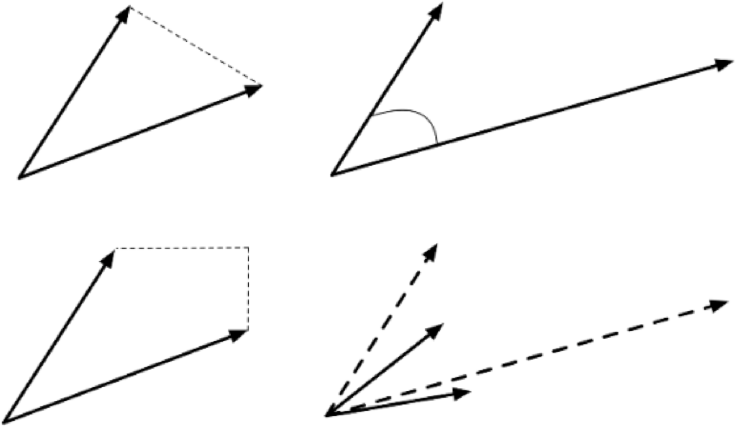

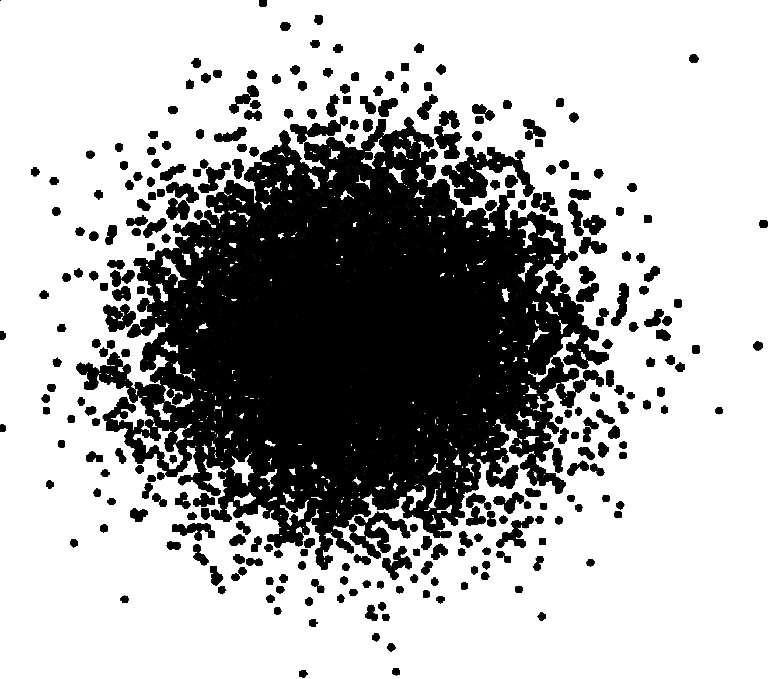

Dissimilar objects (irrelevant webpages) should not appear in the list, being “far” from the search item. Left undefined in this example is the crucial notion of closeness: what does it mean for one observation to be near another one? Various metrics can be used (see 16.24 for some simple examples), and not all of them lead to the same results.

Figure 16.24: Distance metrics between observations: Euclidean (as the crow flies, top left); cosine (direction from a vantage point, top right); Manhattan (taxi-cab, bottom left). Observations should be transformed (scaled, translated) before distance computations (bottom right).

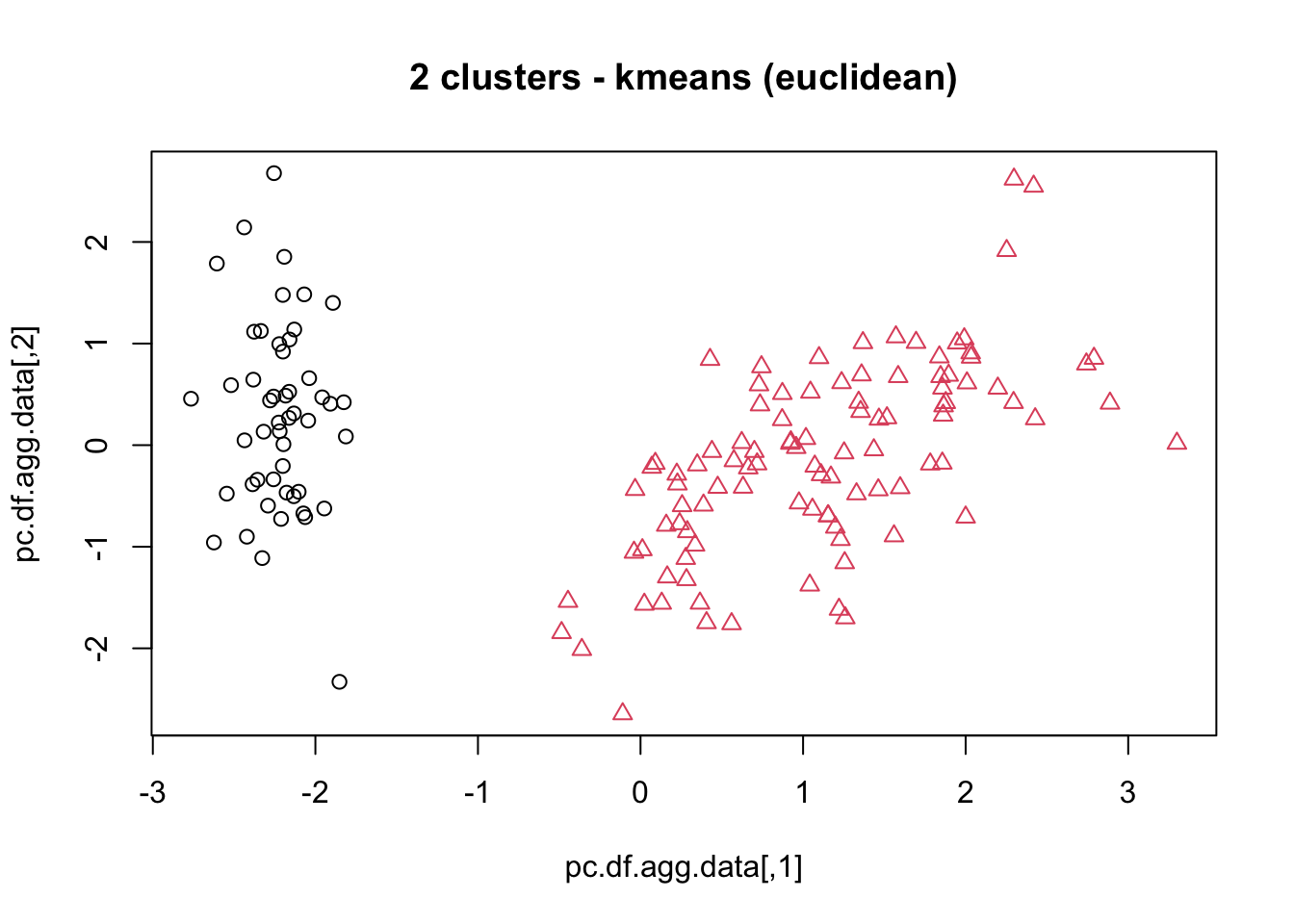

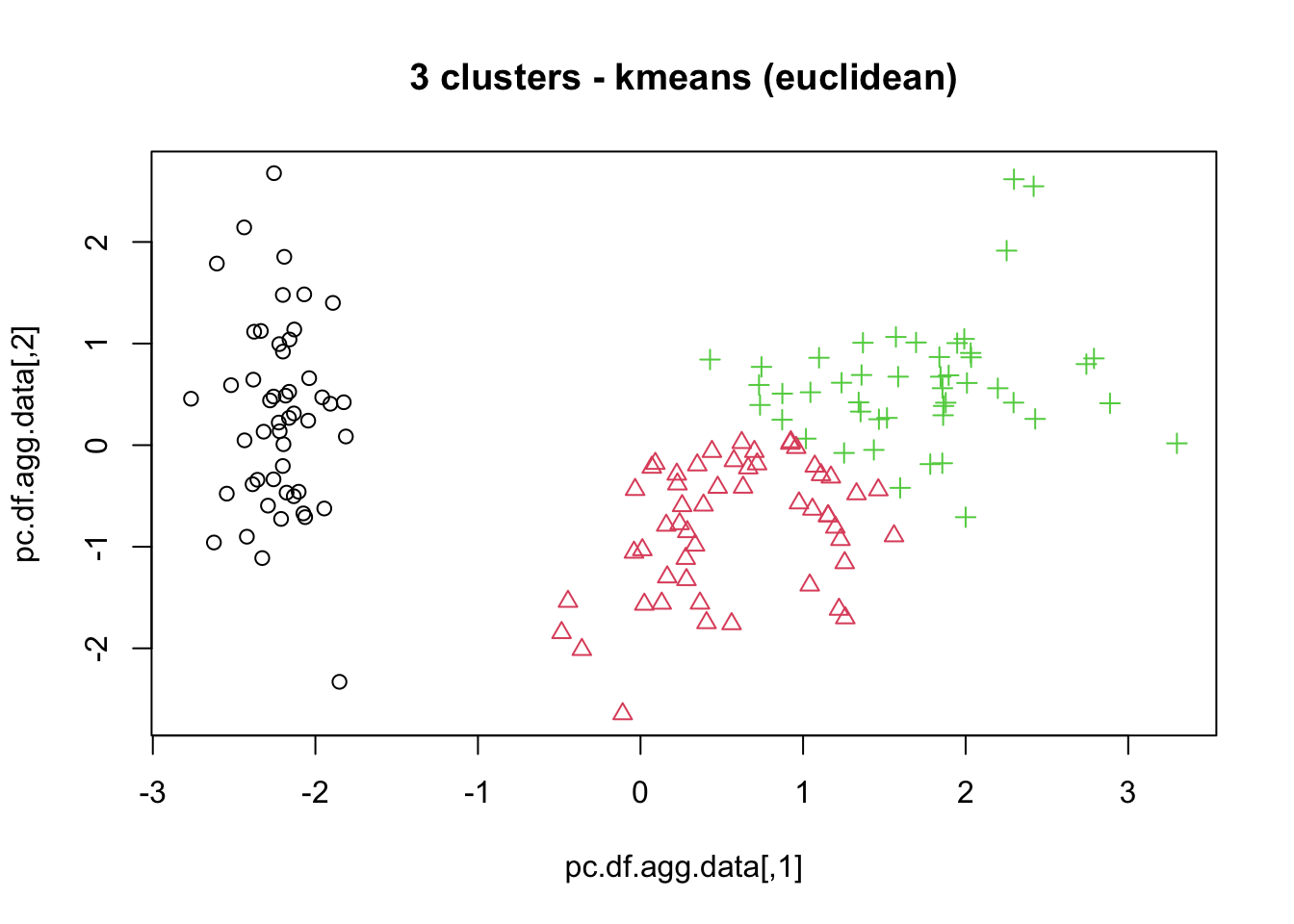

Clustering is a form of unsupervised learning since the cluster labels (and possibly their number) are not determined ahead of the analysis.

The algorithms can be complex and non-intuitive, based on varying notions of similarities between observations, and yet, the temptation to provide a simple a posteriori explanation for the groupings remains strong – we really, really want to reason with the data.63

The algorithms are also (typically) non-deterministic – the same routine, applied twice (or more) to the same dataset, can discover completely different clusters.64

This (potential) non-replicability is not just problematic for validation – it can also leads to client dissatisfaction. If the analyst is tasked with finding customer clusters for marketing purposes and the clusters change every time the client or the stakeholder asks for a report, they will be very confused (and doubtful) unless the stochastic nature of the process has already been explained.

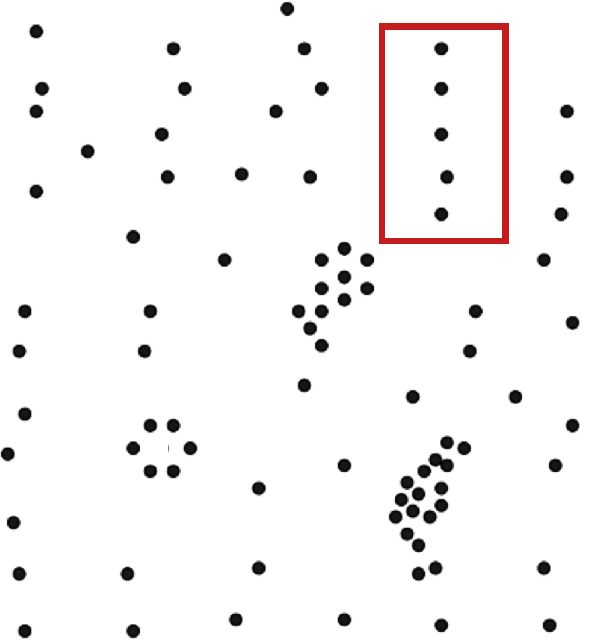

Another interesting aspect of clustering algorithms is that they often find clusters even when there are no natural ways to break down a dataset into constituent parts.

When there is no natural way to break up the data into clusters, the results may be arbitrary and fail to represent any underlying reality of the dataset. On the other hand, it could be that while there was no recognized way of naturally breaking up the data into clusters, the algorithm discovered such a grouping – clustering is sometimes called automated classification as a result.

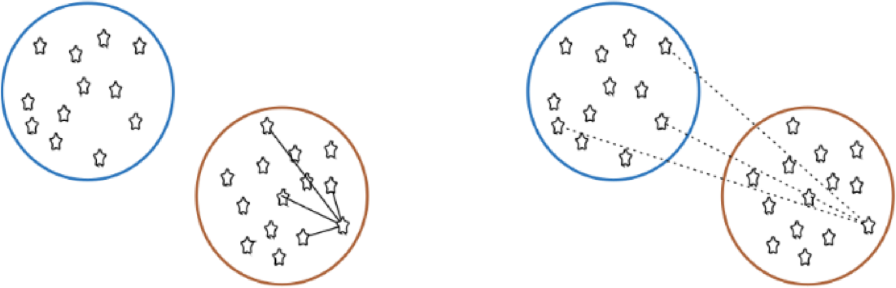

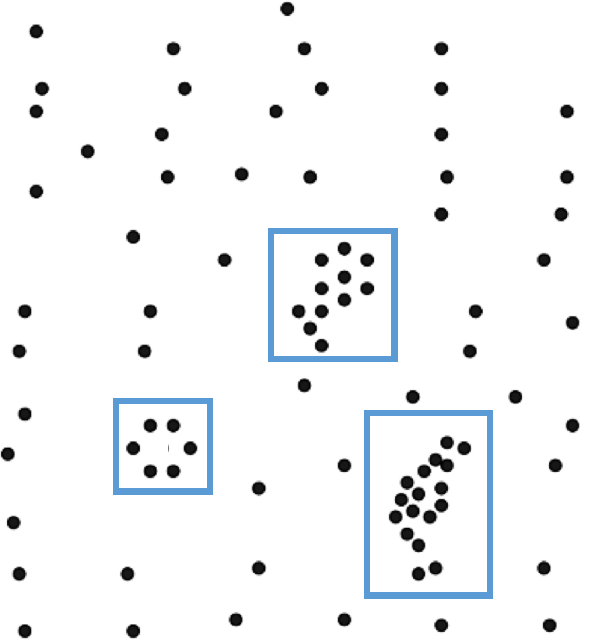

The aim of clustering, then, is to divide into naturally occurring groups. Within each group, observations are similar; between groups, they are dissimilar (see Figure 16.25 for an illustration).

Figure 16.25: Distance to points in own clusters (left, smaller is better) and to points in other clusters (right, larger is better).

As a learning process, clustering is fairly intuitive for human beings – our brains unconsciously search for patterns and they can generally handle messy data with the same relative ease as clean data.

Computers have a harder time of it, however; part of the challenge is that there is no agreed-upon definition of what constitutes a cluster, and so we cannot easily code their recognition into algorithms – to paraphrase Justice Potter Stewart,

“I may not be able to define what a cluster is, but I know one when I see one.”

All clustering algorithms rely on the notion of similarity between observations; in many instances, similarity is obtained via a distance (or metric) , with as , and as . However, there are similarity measures which are not derived from a distance metric.

One additional clustering challenge is that there is no such thing as the distance or the similarity measure between observations – observations which are similar using a specific measure may not be similar at all using another. Commonly-used metrics include:

euclidean,

Manhattan,

cosine,

Canberra,

Haming,

Jaccard,

Pearson,

and so on.

Note, however, that no matter which similarity measure is selected, the data must first be transformed: scaled, centered, etc. (see Figure 16.24). This introduces another layer of arbitrary choices, as there are multiple available options and no canonical way to perform this.

Applications

Frequently, we use clustering and other unsupervised learning tasks as preliminary steps in supervised learning problems, but there exist stand-alone applications as well:

text analysis – grouping similar documents according to their topics, based on the patterns of common and unusual terms;

product recommendations – grouping online purchasers based on the products they have viewed, purchased, liked, or disliked, or grouping products based on customer reviews;

marketing – grouping client profiles based on their demographics and preferences;

social network analysis – recognising communities within large groups of people;

medical imaging – differentiating between different tissue types in a 3D voxel;

genetics – inferring structures in populations;

dividing a larger group (or area, or category) into smaller groups, with members of the smaller groups having similarities of some kind, as analytical tasks may then be solved separately for each of the smaller groups, which may lead to increased accuracy once the separate results are aggregated, or

creating (new) taxonomies on the fly, as new items are added to a group of items, which could allow for easier product navigation on a website like Netflix, for instance.

Plenty of other applications may be found in [61], [64]–[67], [76], [156]–[162].

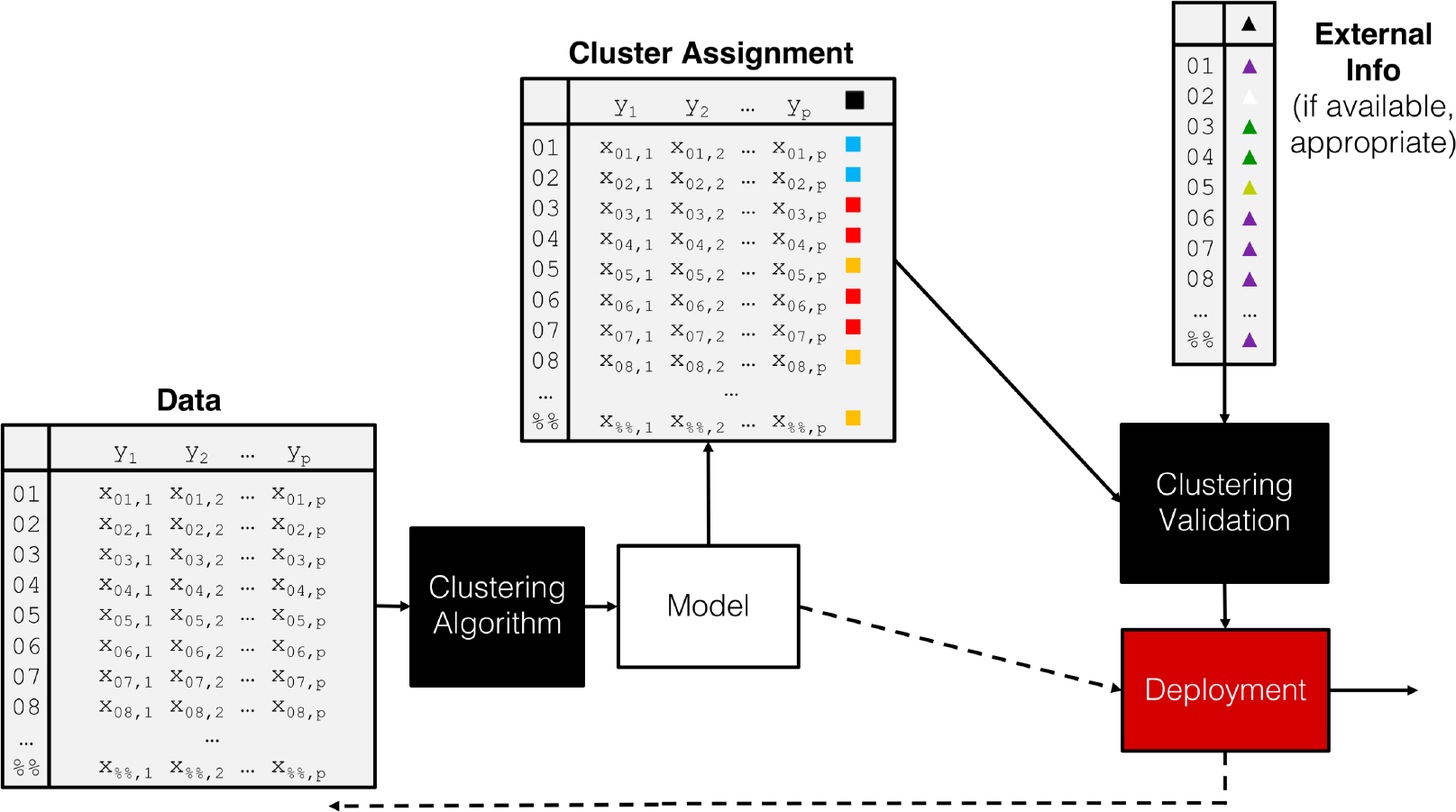

When all is said and done, the clustering process is quite standard, notwithstanding the choice of scaling strategy, similarity measure, and algorithm and parameters (see the pipeline shown in Figure 16.26).

Figure 16.26: A clustering pipeline, including validation and (eventual) deployment.

16.4.2 Clustering Algorithms

As is the case with classification, the number of clustering algorithms is quite high; the Wikipedia page lists 40+ such algorithms as of August 2018 [88]. The choice of algorithms (and associated parameters) is as much an art as it is a science, although domain expertise can come in handy [76]. There is a smaller list of common algorithms thatdata scientists and consultants should have in their toolbox (full descriptions available in [76], [77], [147]):

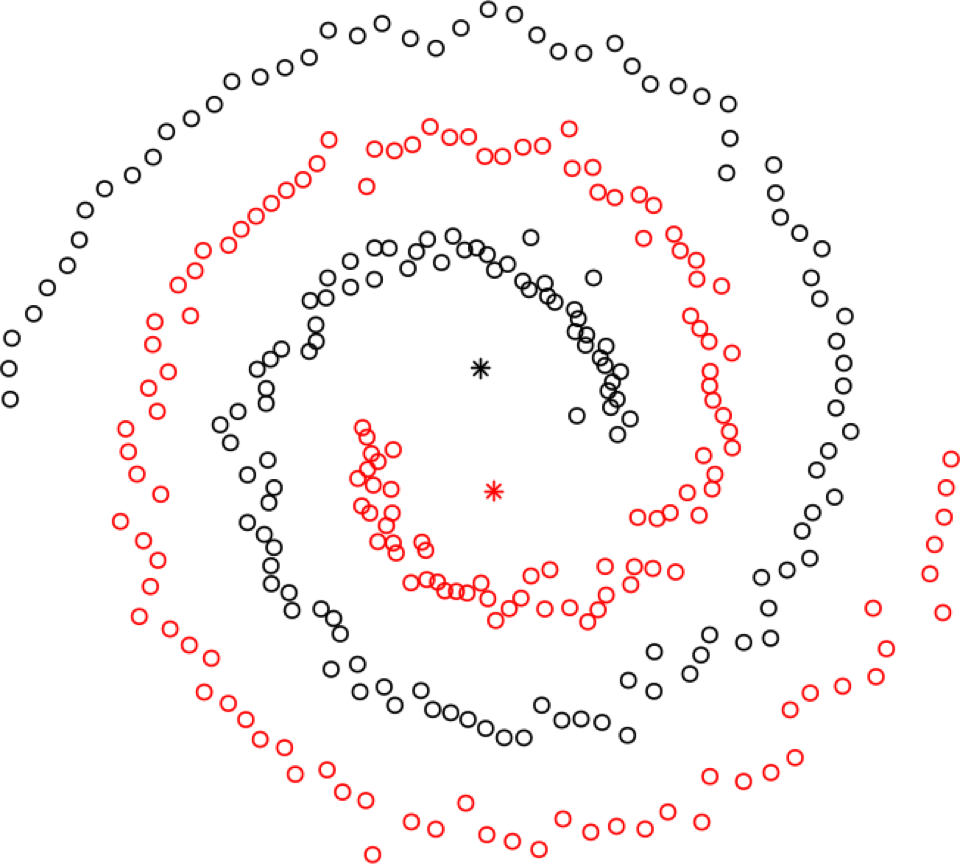

means, close on the heels of decision trees for the title of “most-used data science algorithm”, is a partition clustering method which tends to produce equal-sized clusters; when clients ask for their data to be clustered, they are typically envisioning means with the Euclidean metric; variants include mode (for categorical data), medians (for data with outliers), and means and means for large data sets; the number of clusters (and the similarity measure/distance metric) must be provided by the user; works fairly well for “blob”-like data;

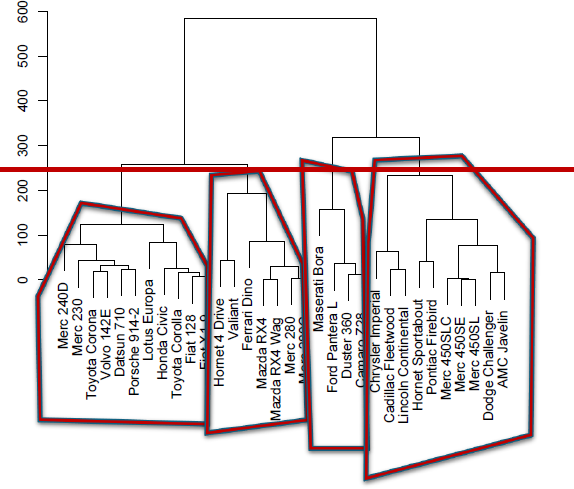

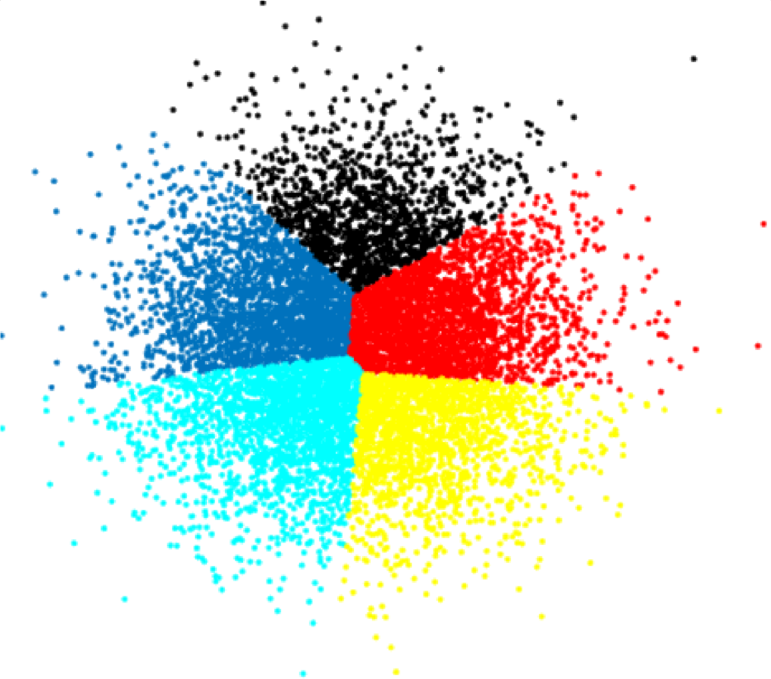

hierarchical clustering is one of the few deterministic algorithms on the market, with divisive (DIANA) and agglomerative (AGNES) versions; no parameters need to be inputted, but the users must select a linkage strategy (roughly speaking, a metric that computes the distance between clusters) and a level at which to read off the clusters (see Figure 16.27 for an an illustration);

density-based spatial clustering (DBSCAN) is a graph-based approach which attempts to identify densely-packed regions in the dataset; its most obvious advantages (and of its variants OPTICS and DENCLUE) are robustness to outliers and not needing to input a number of clusters to search for in the data; the main disadvantage is that the optimal input parameters (neighbourhood radius and minimum number of points to be considered dense) are not easy to derive (see Figure 16.27 for an an illustration);

affinity propagation is another algorithm which selects the optimal number of clusters directly from the data, but it does so by trying and evaluating various scenarios, which may end up being time-consuming;

spectral clustering can be used to recognize non-globular clusters (see Figure 16.27 for an an illustration); these are found by computing eigenvalues of an associated Laplacian matrix – consequently, spectral clustering is fast.

Figure 16.27: Illustration of hierarchical clustering (left), DBSCAN (middle, based on [163]), and spectral clustering (right).

Other methods include latent Dirichlet allocation (used in topics modeling), expectation-maximisation (particularly useful to find gaussian clusters), BIRCH (a local method which does not require the entire dataset to be scanned) and fuzzy clustering (a soft clustering scheme in which the observations have a degree of belonging to each cluster).

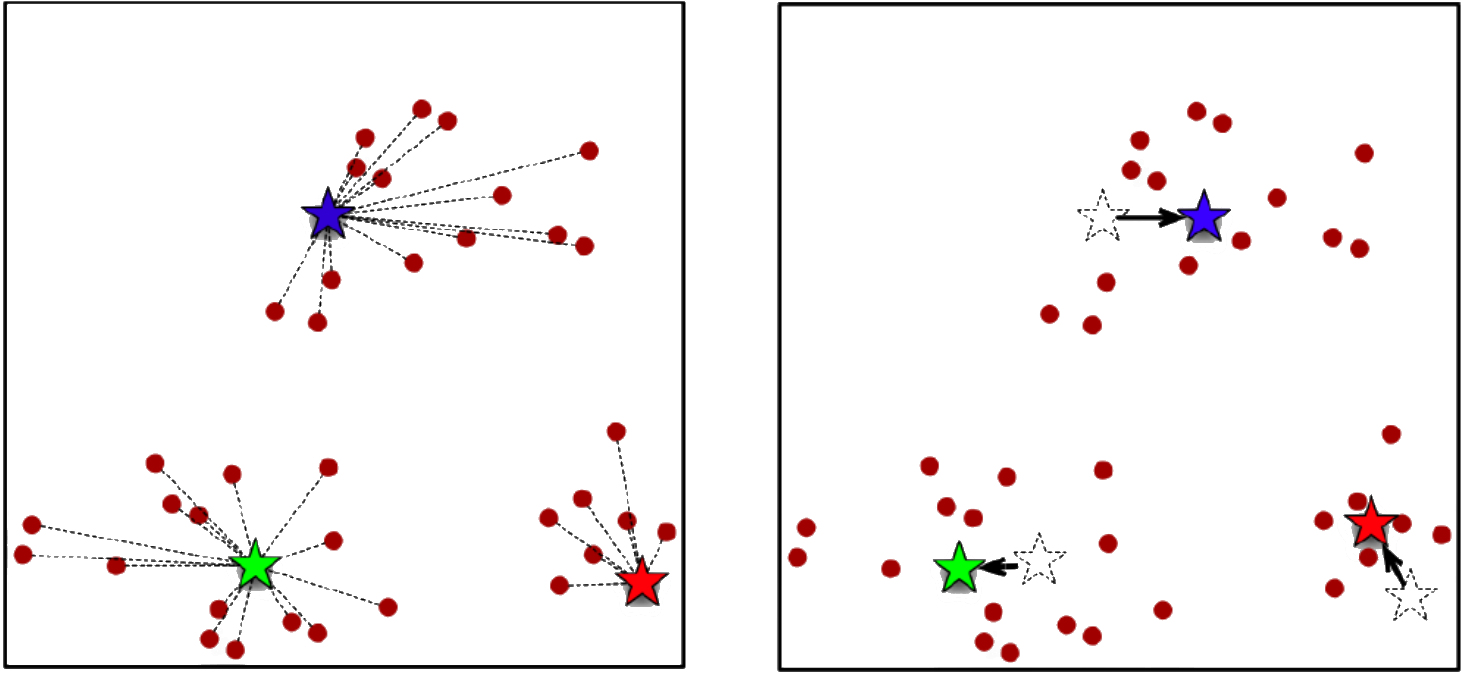

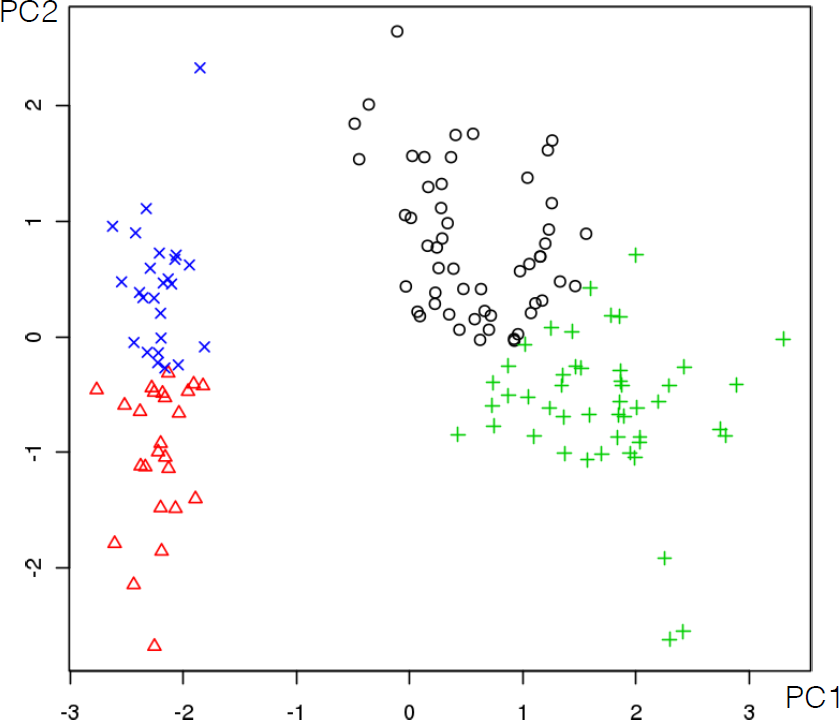

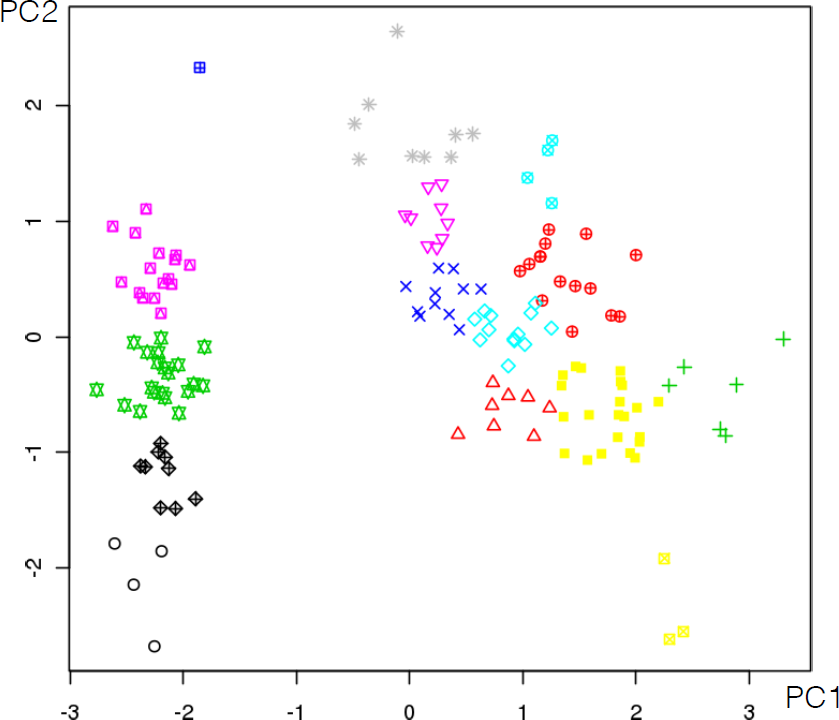

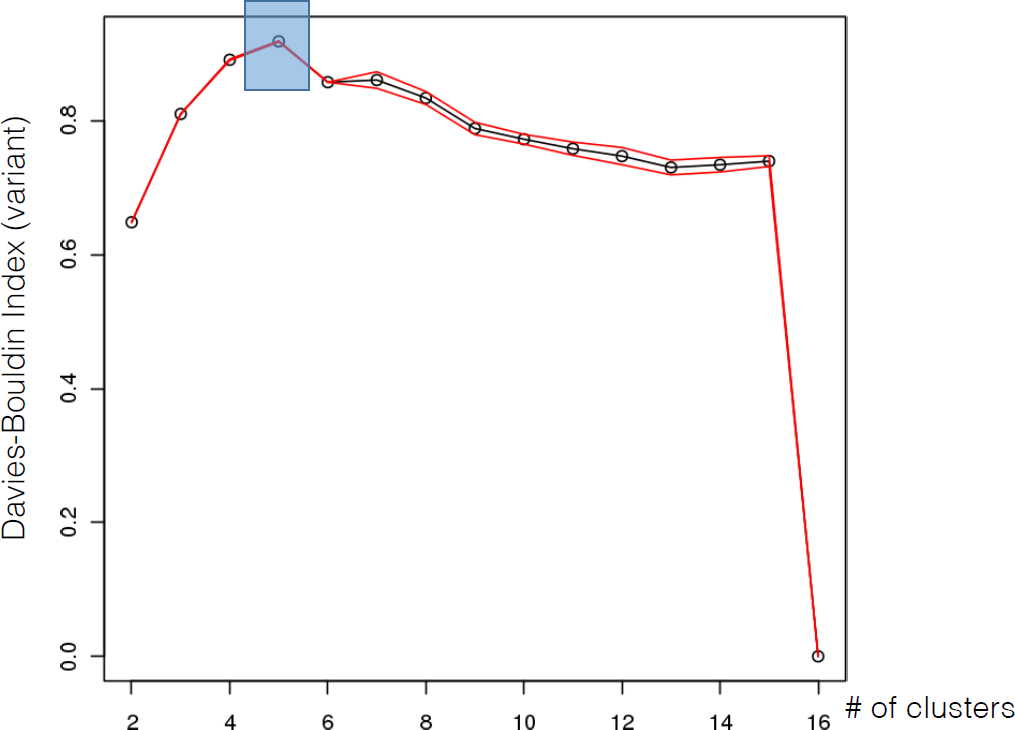

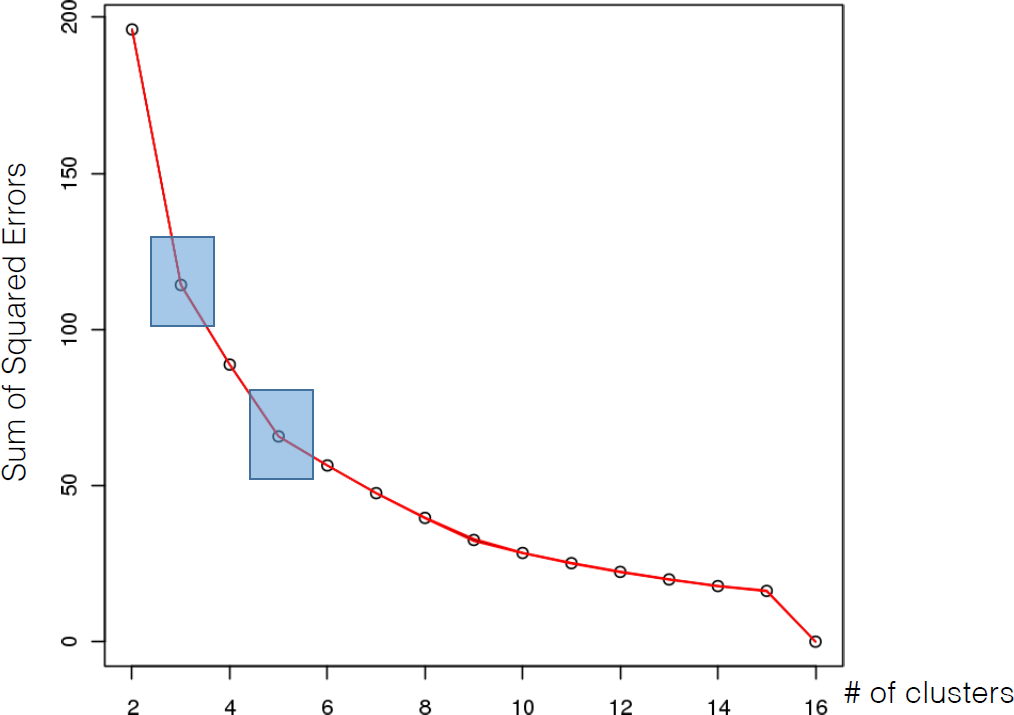

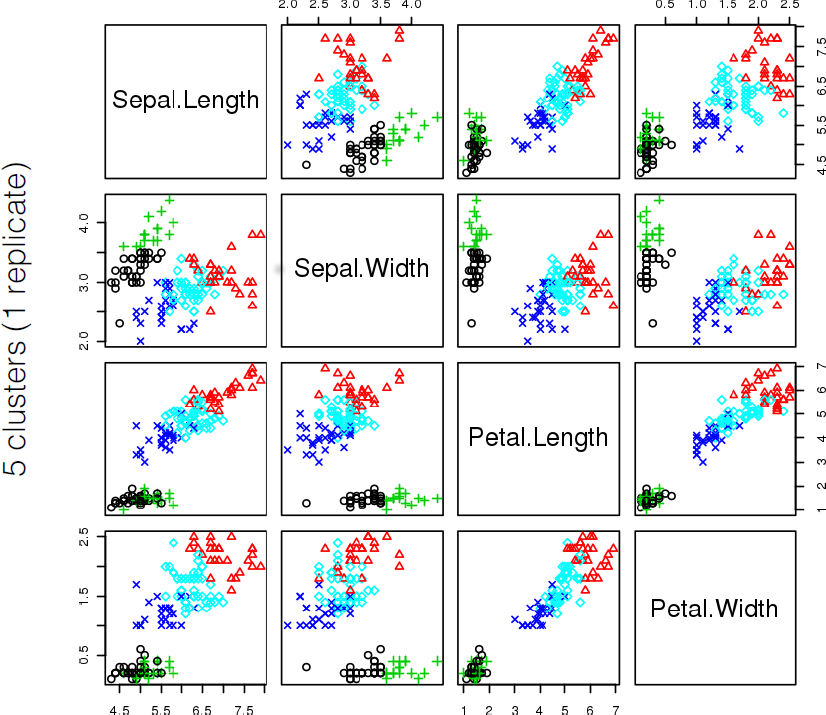

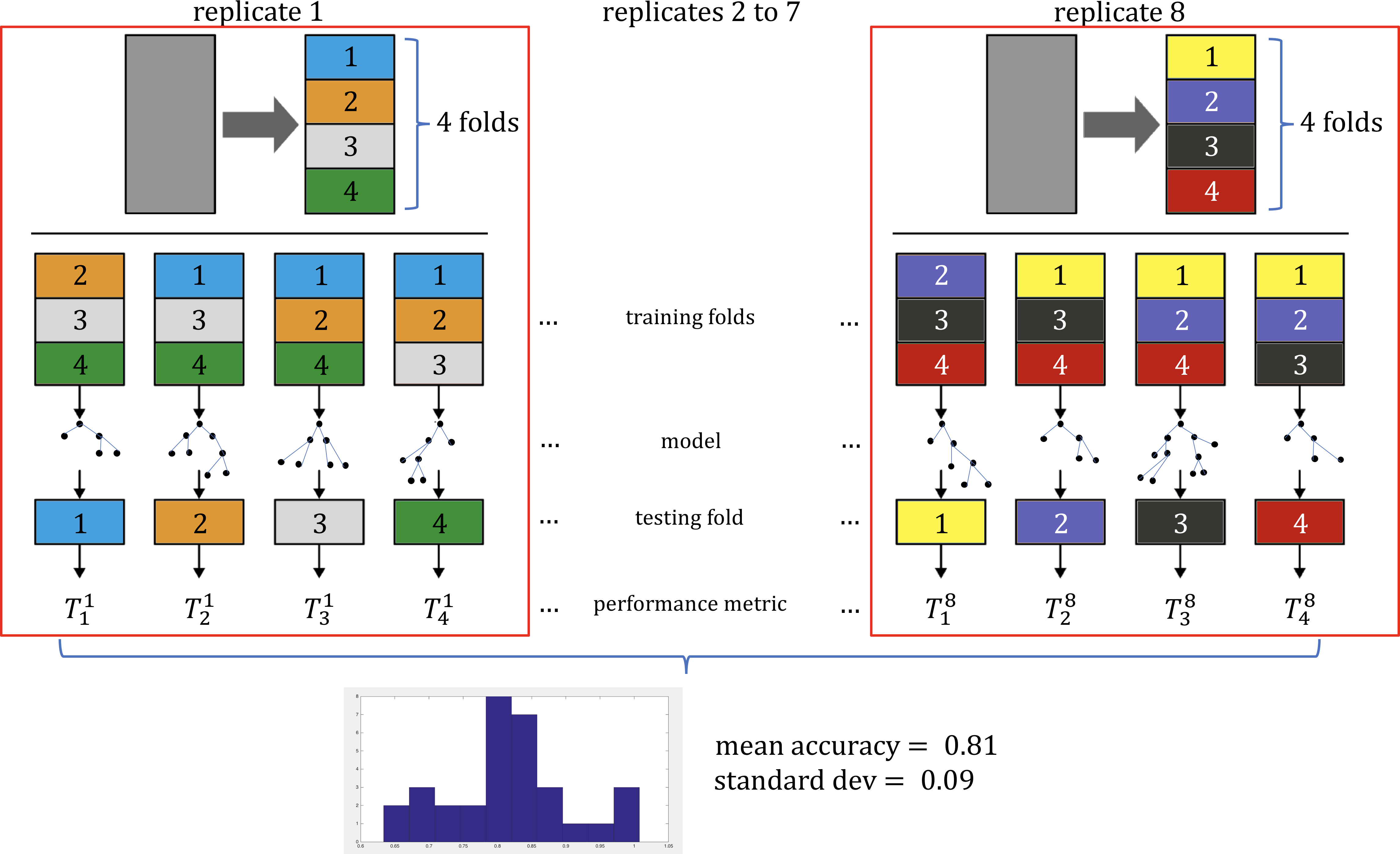

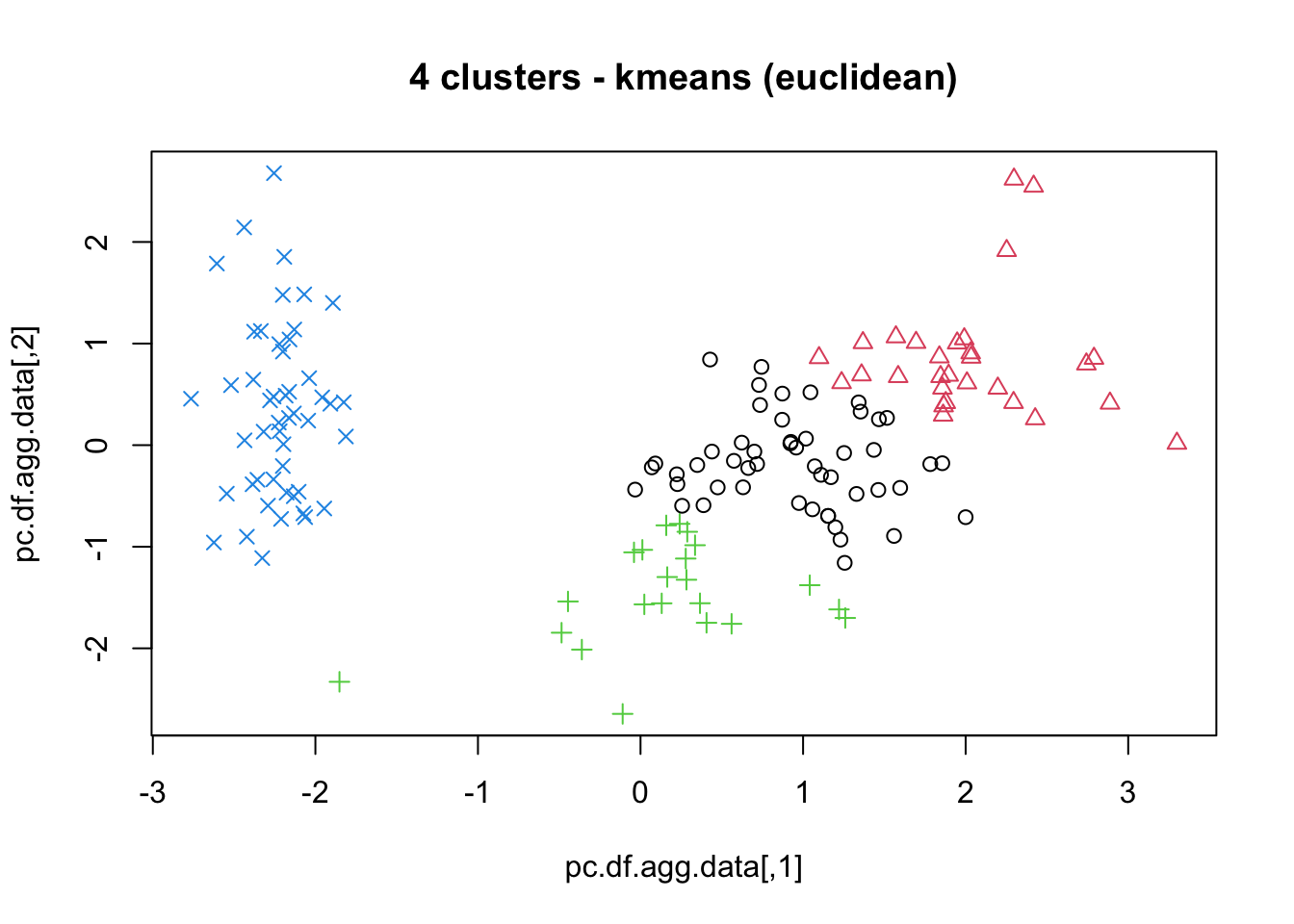

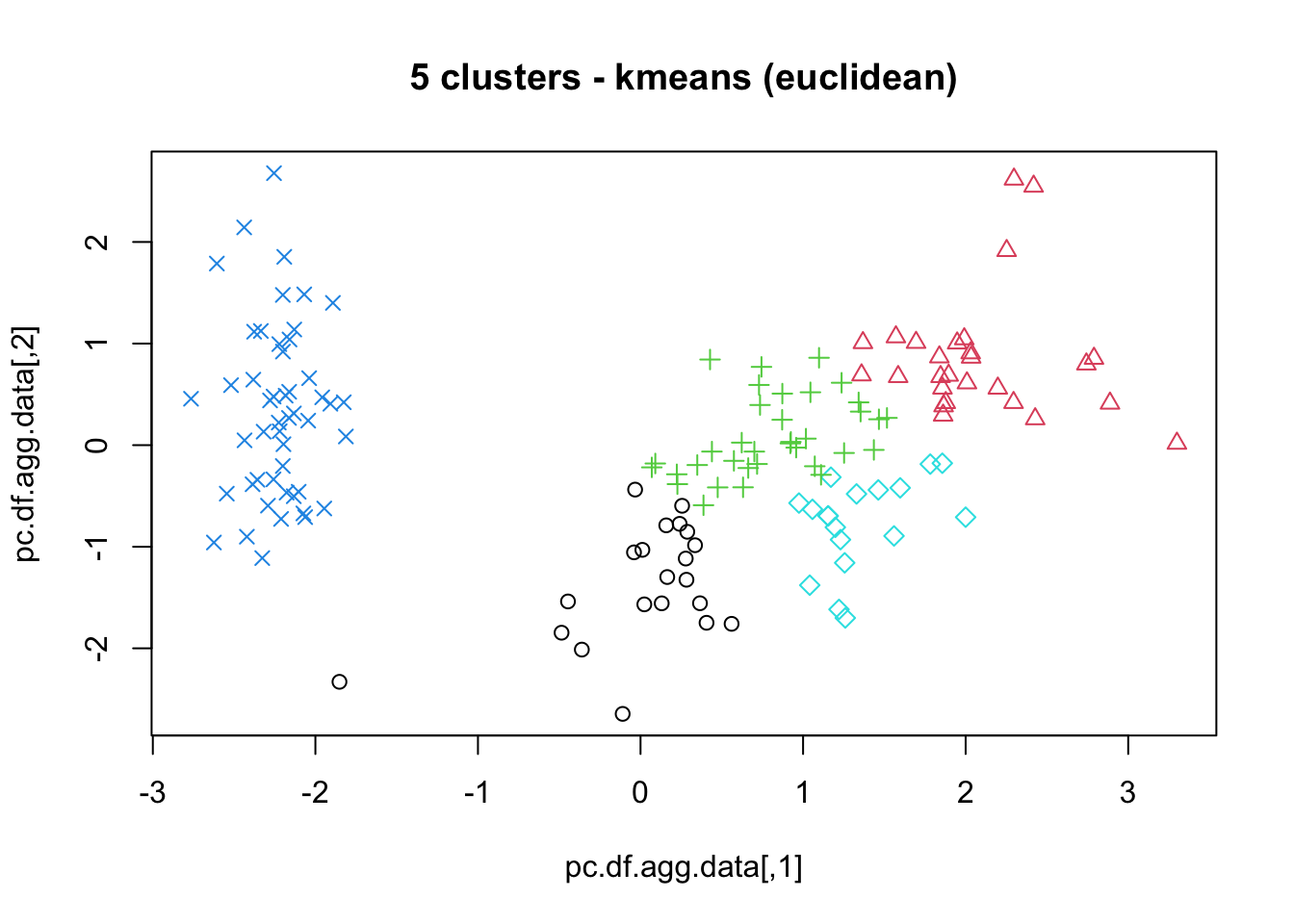

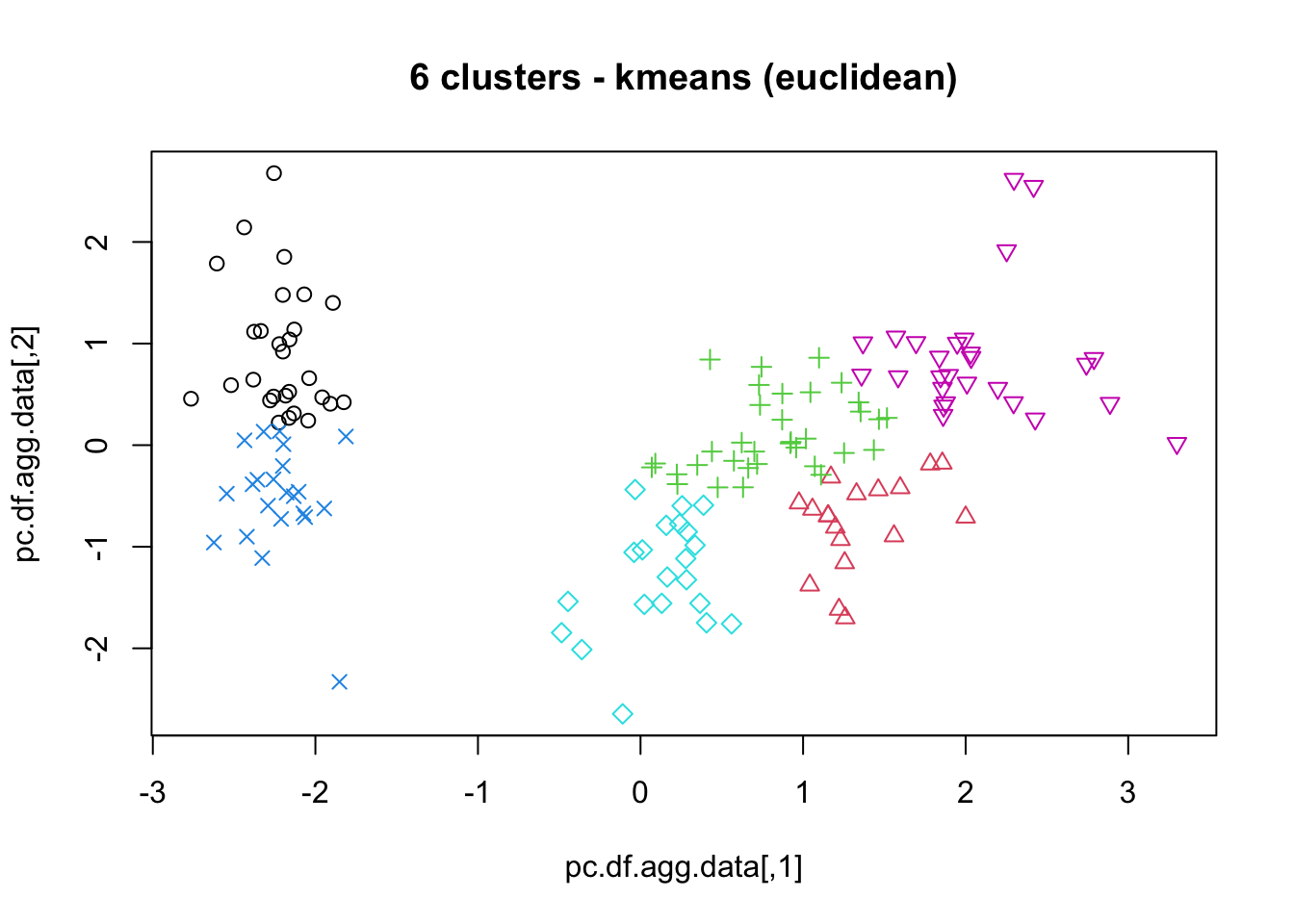

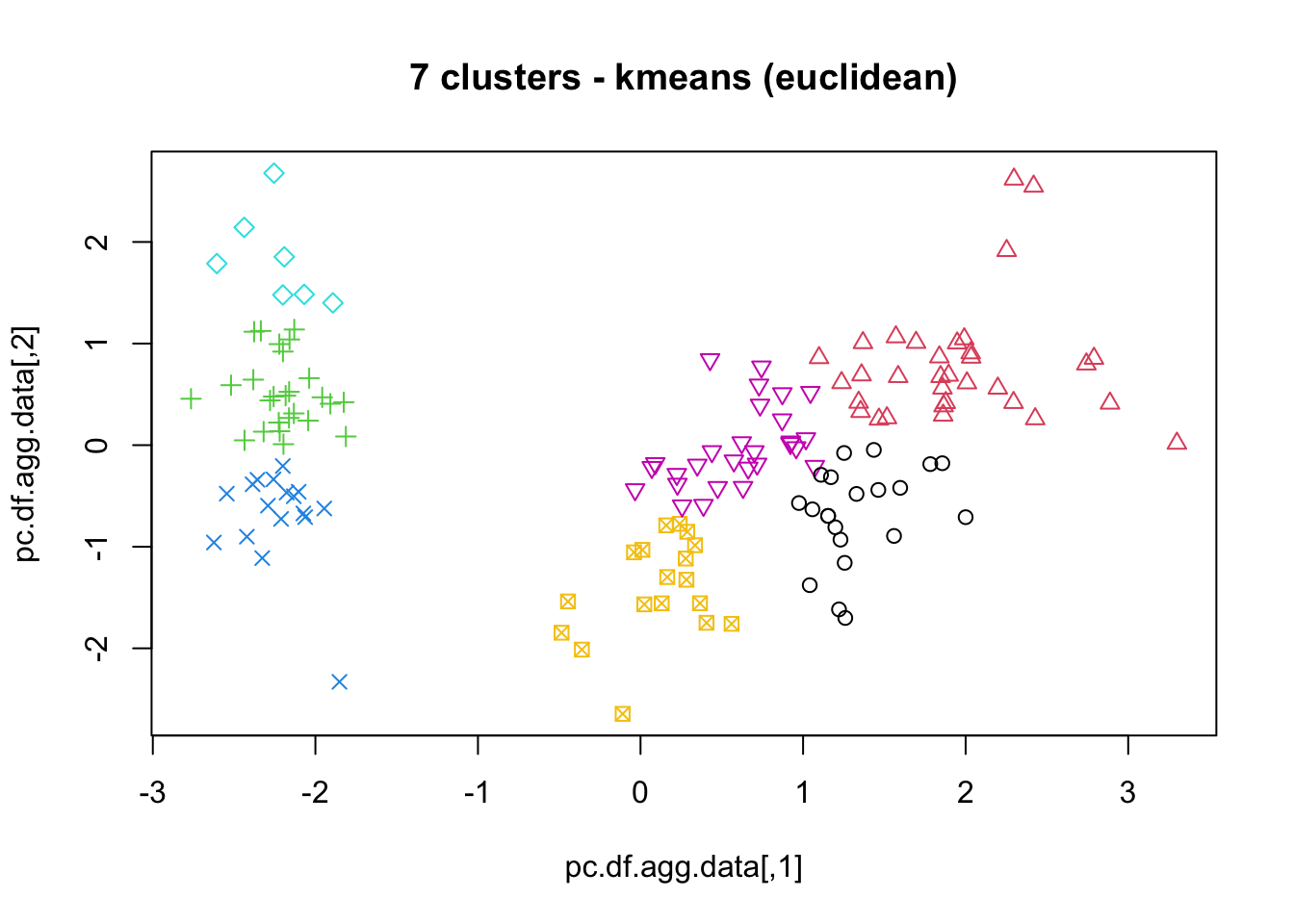

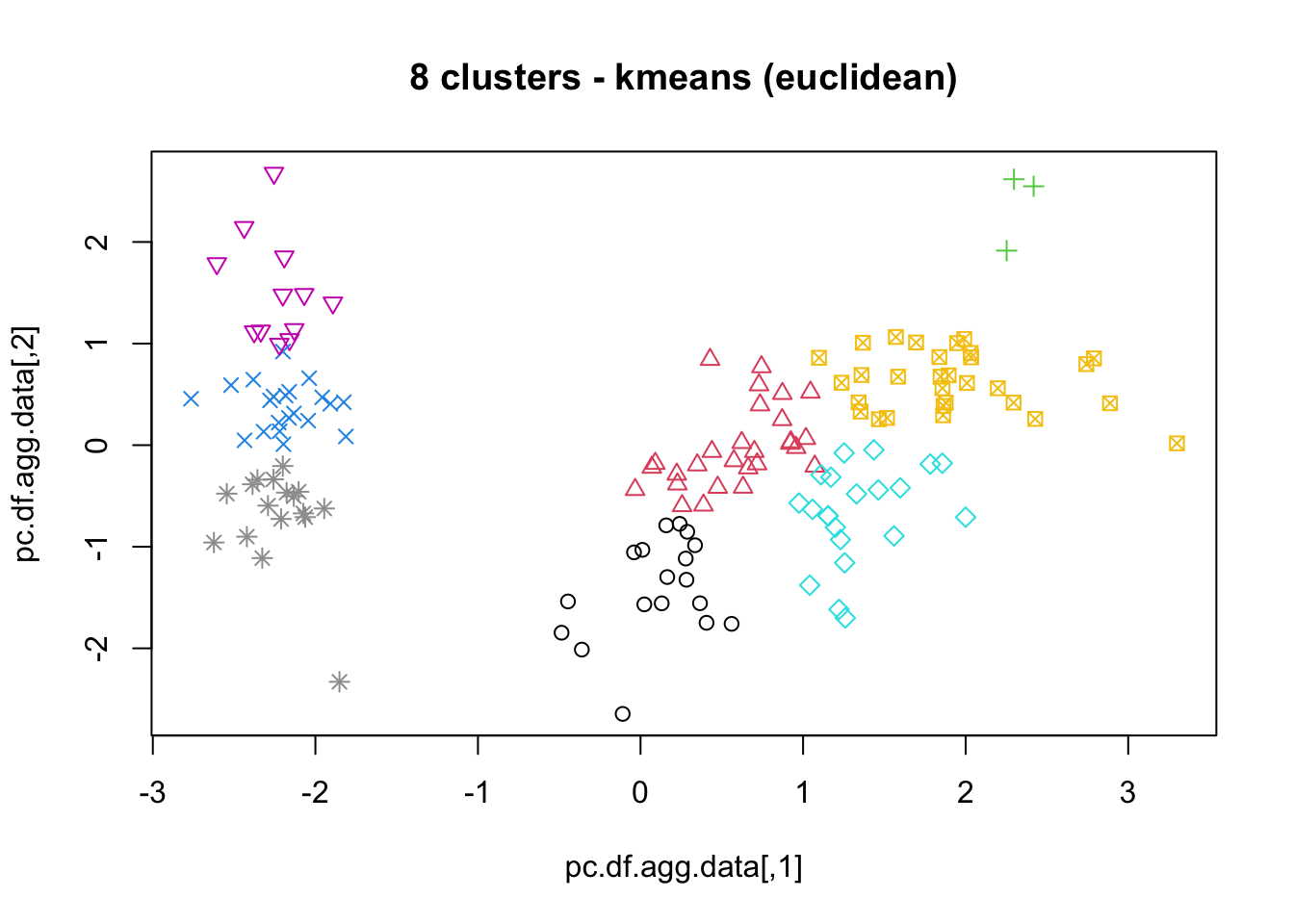

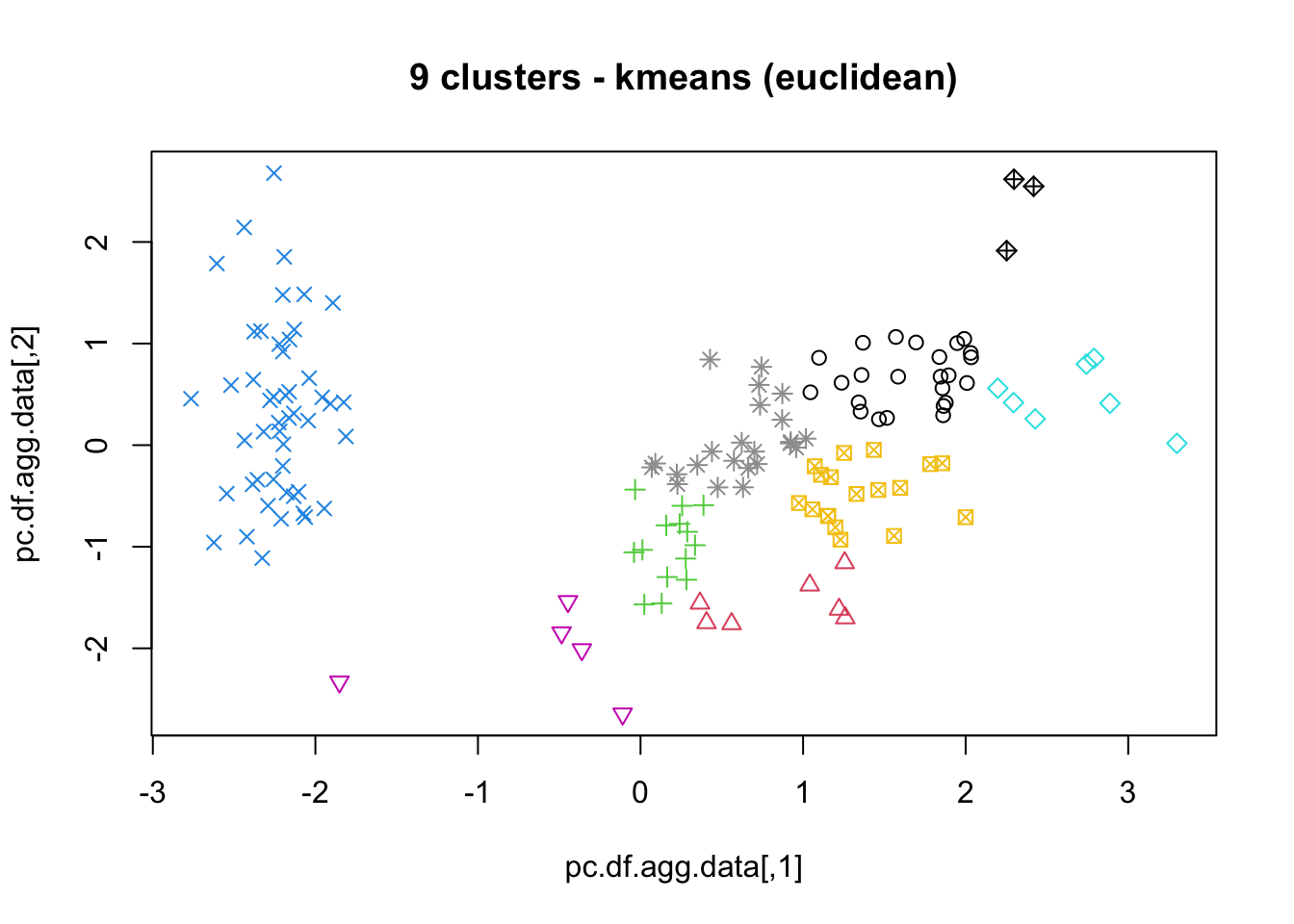

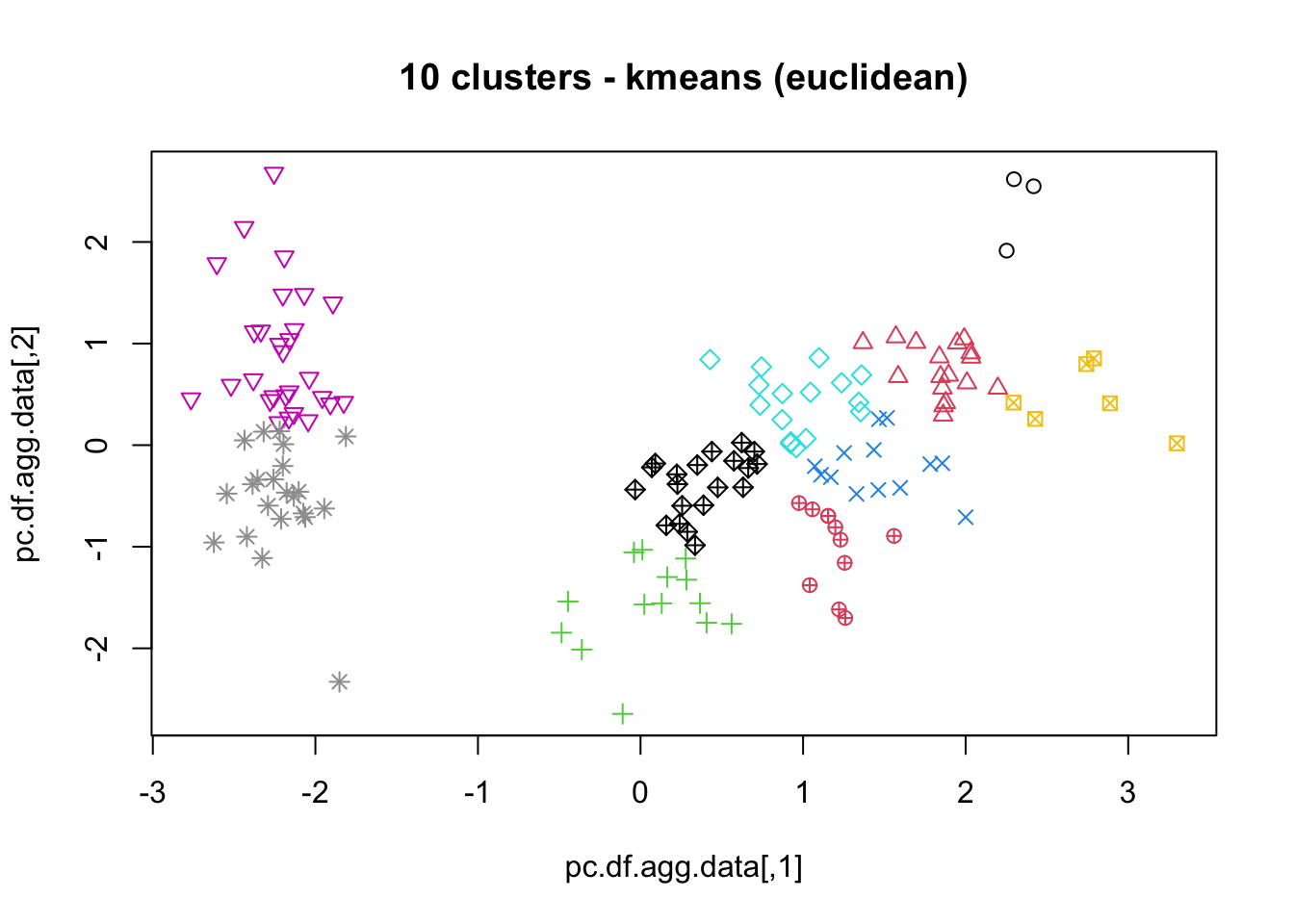

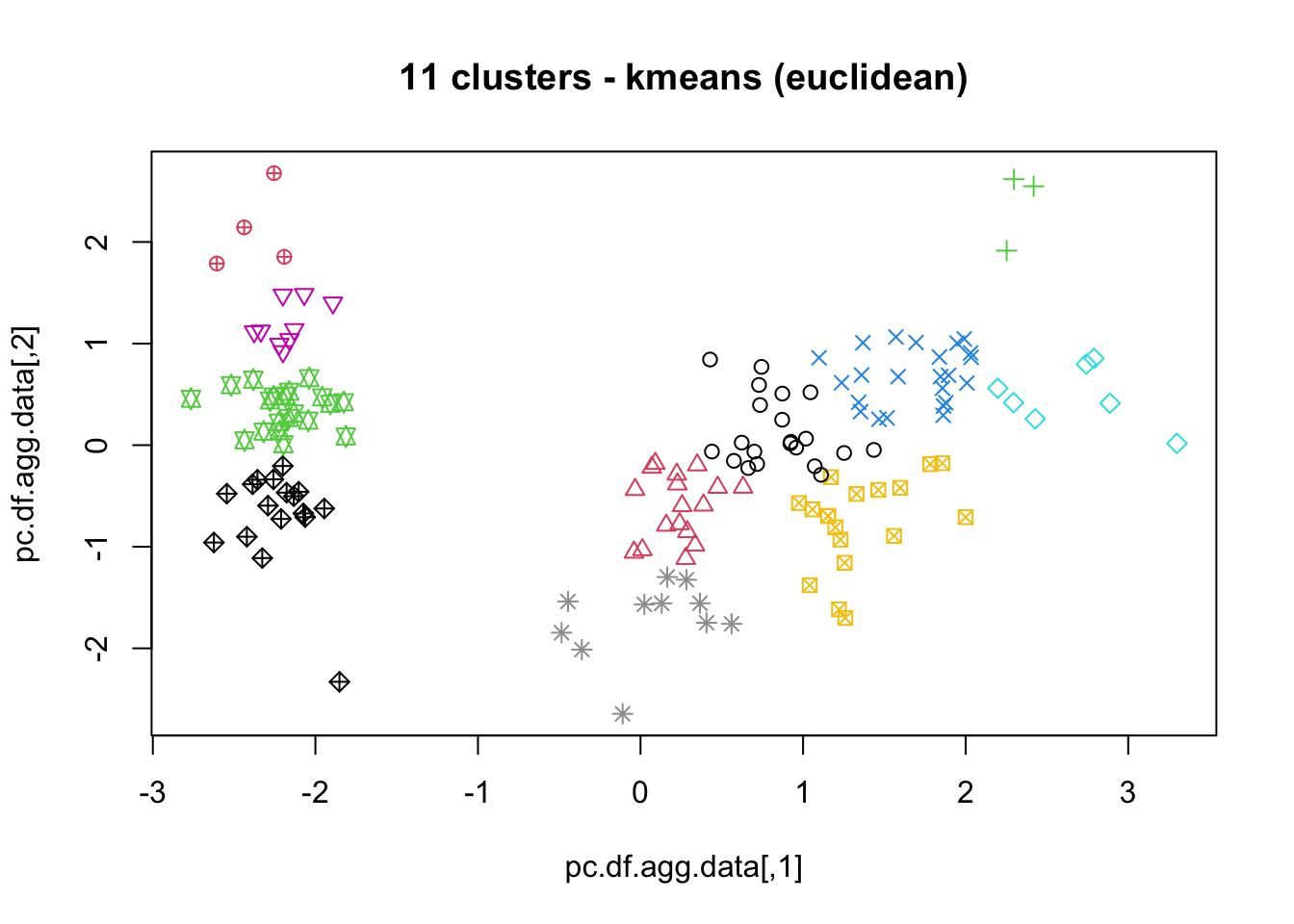

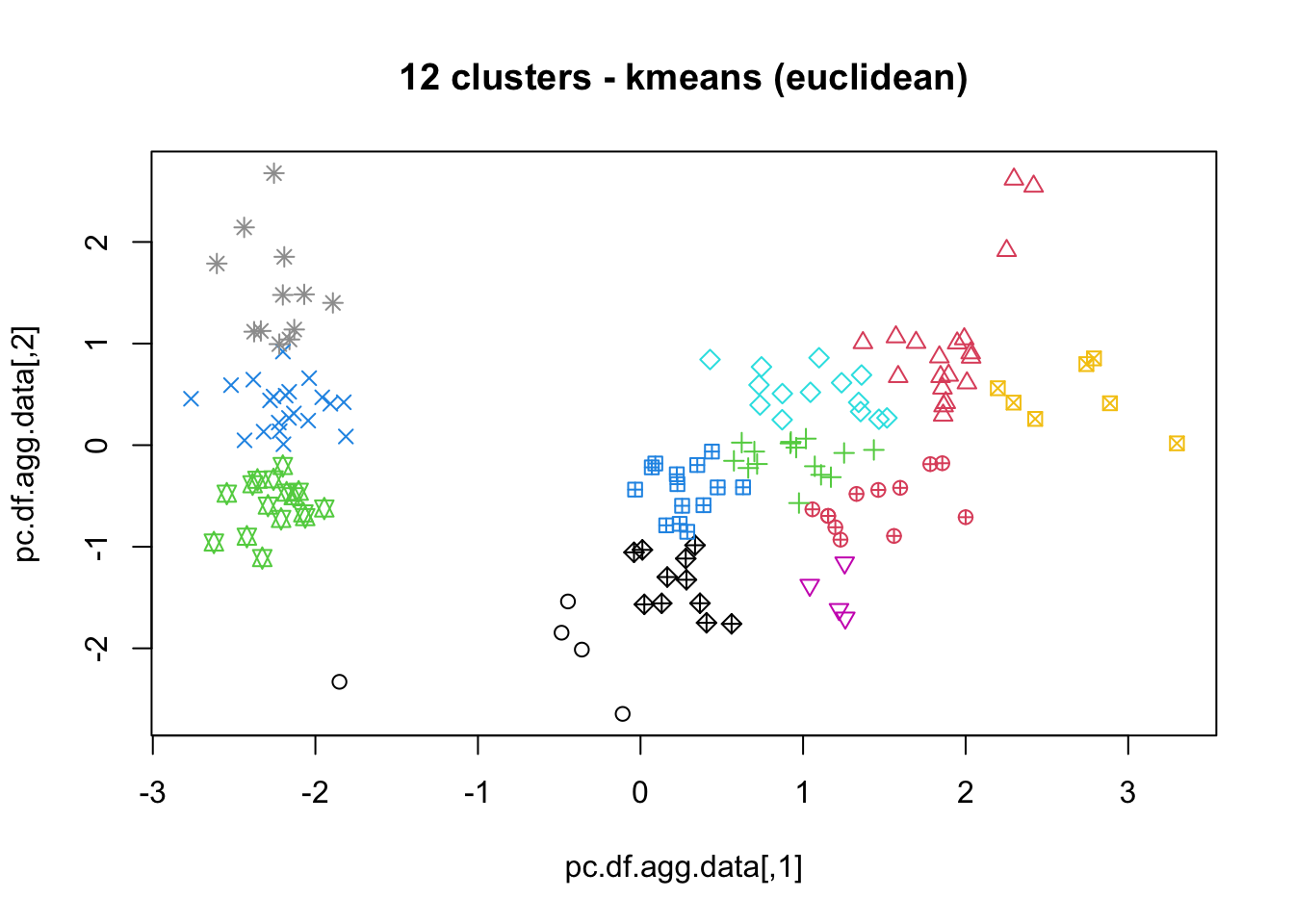

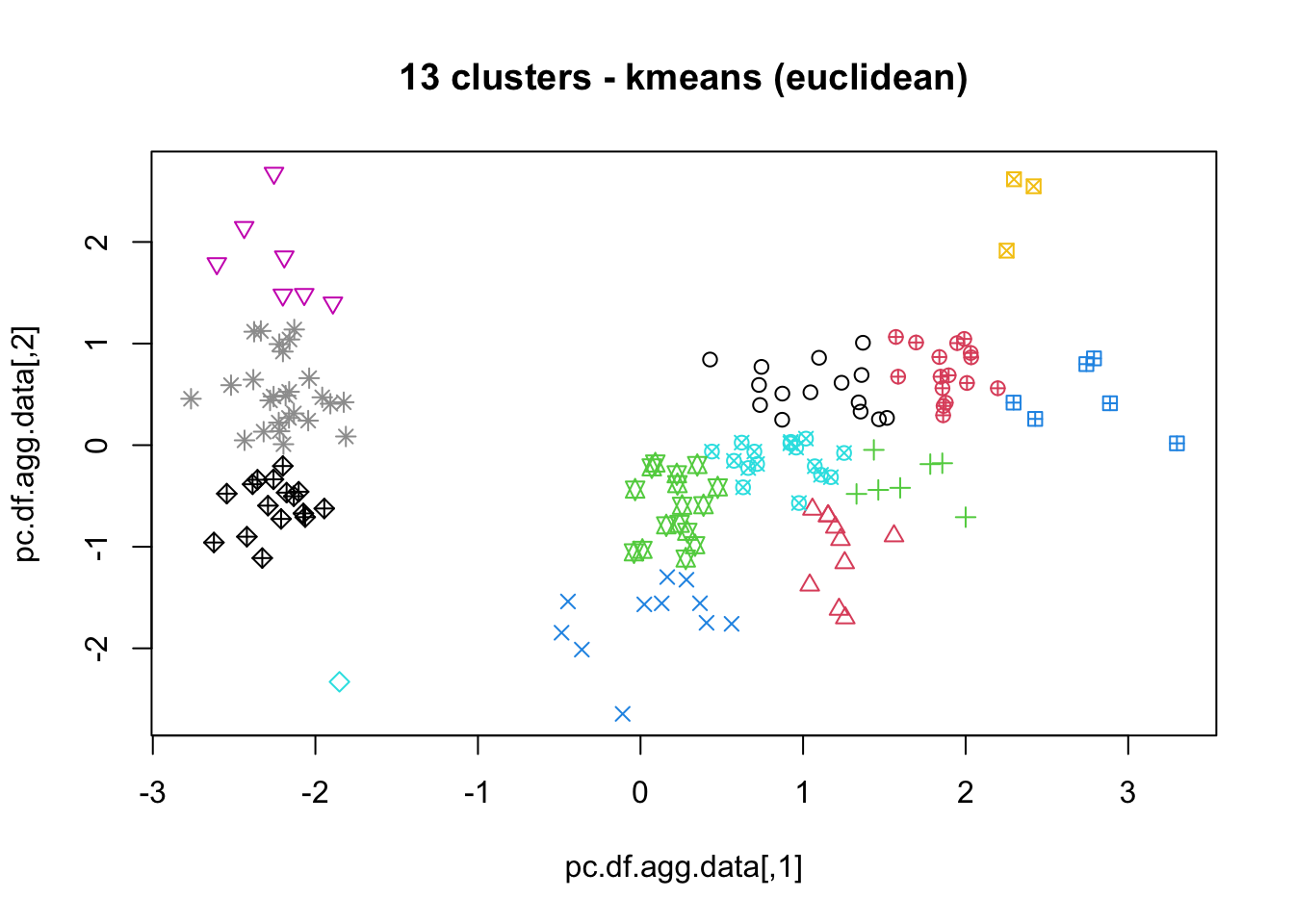

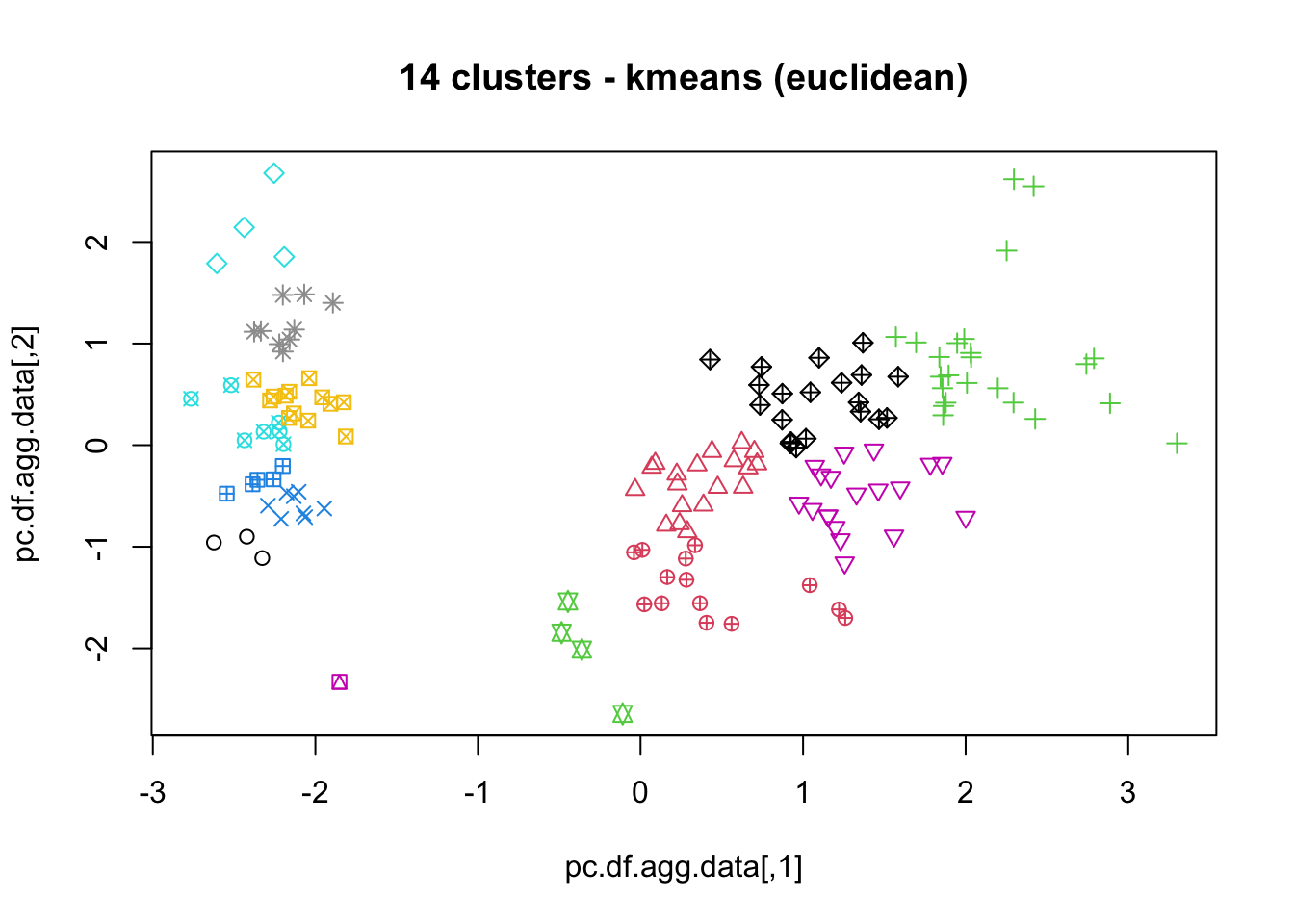

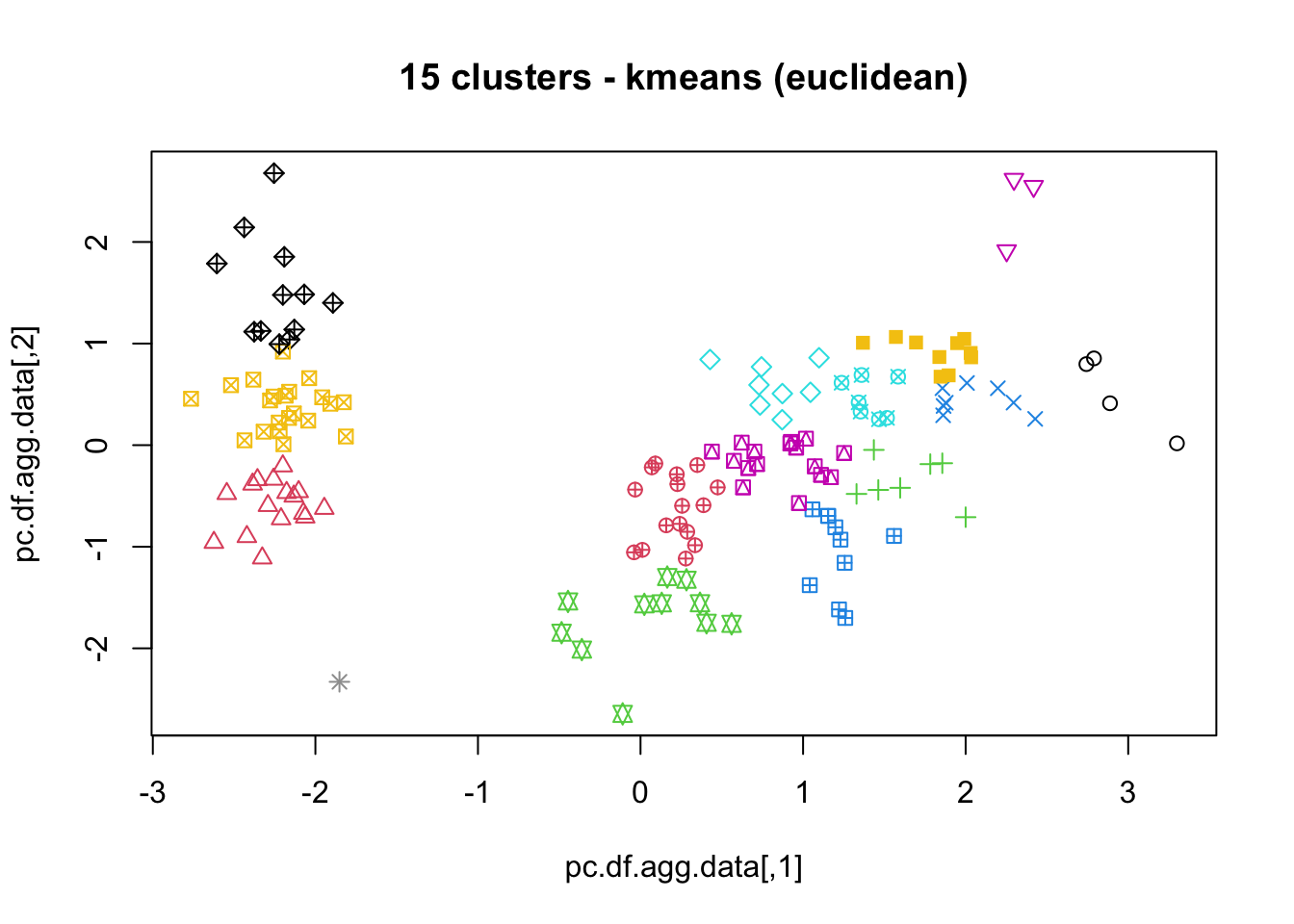

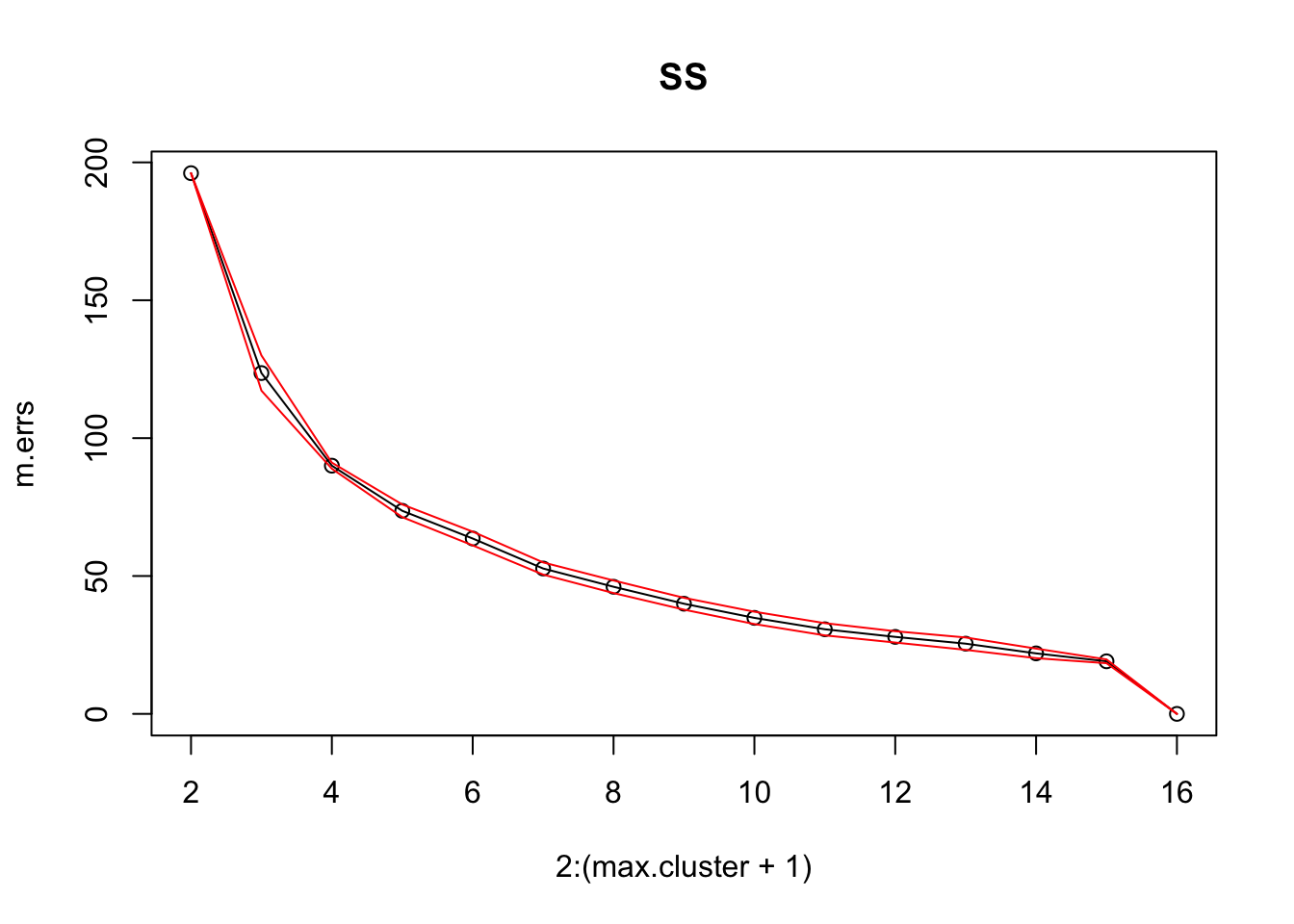

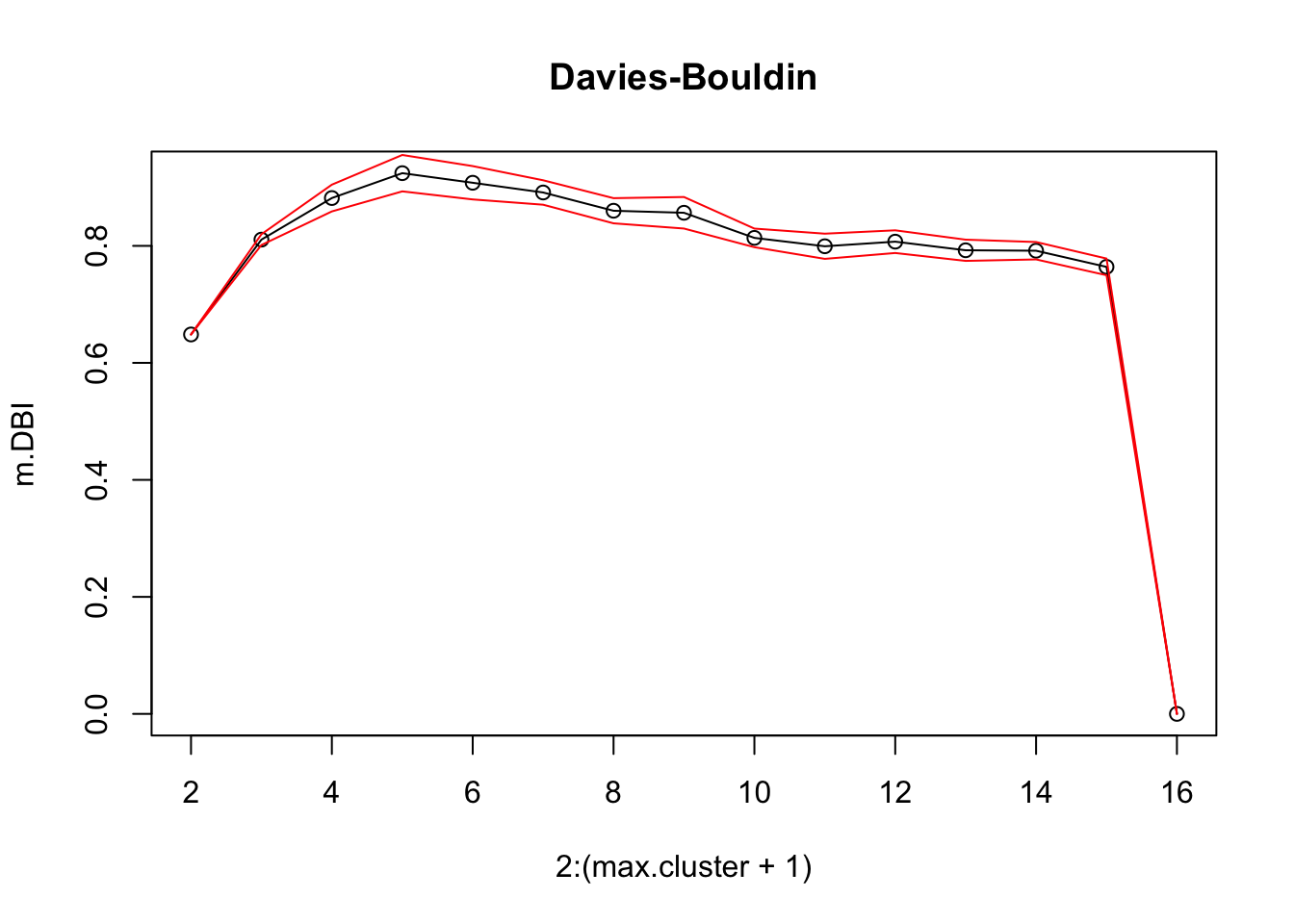

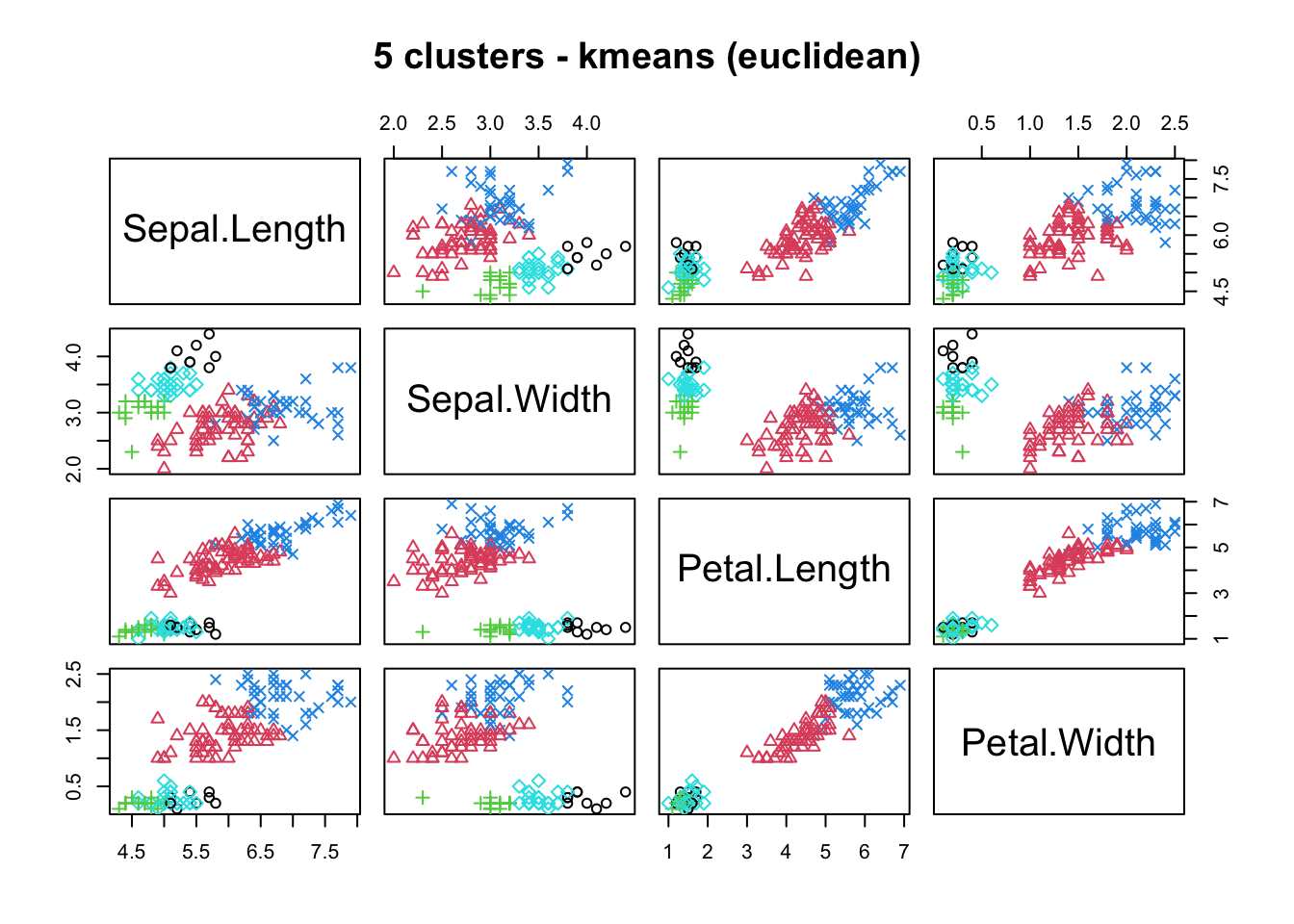

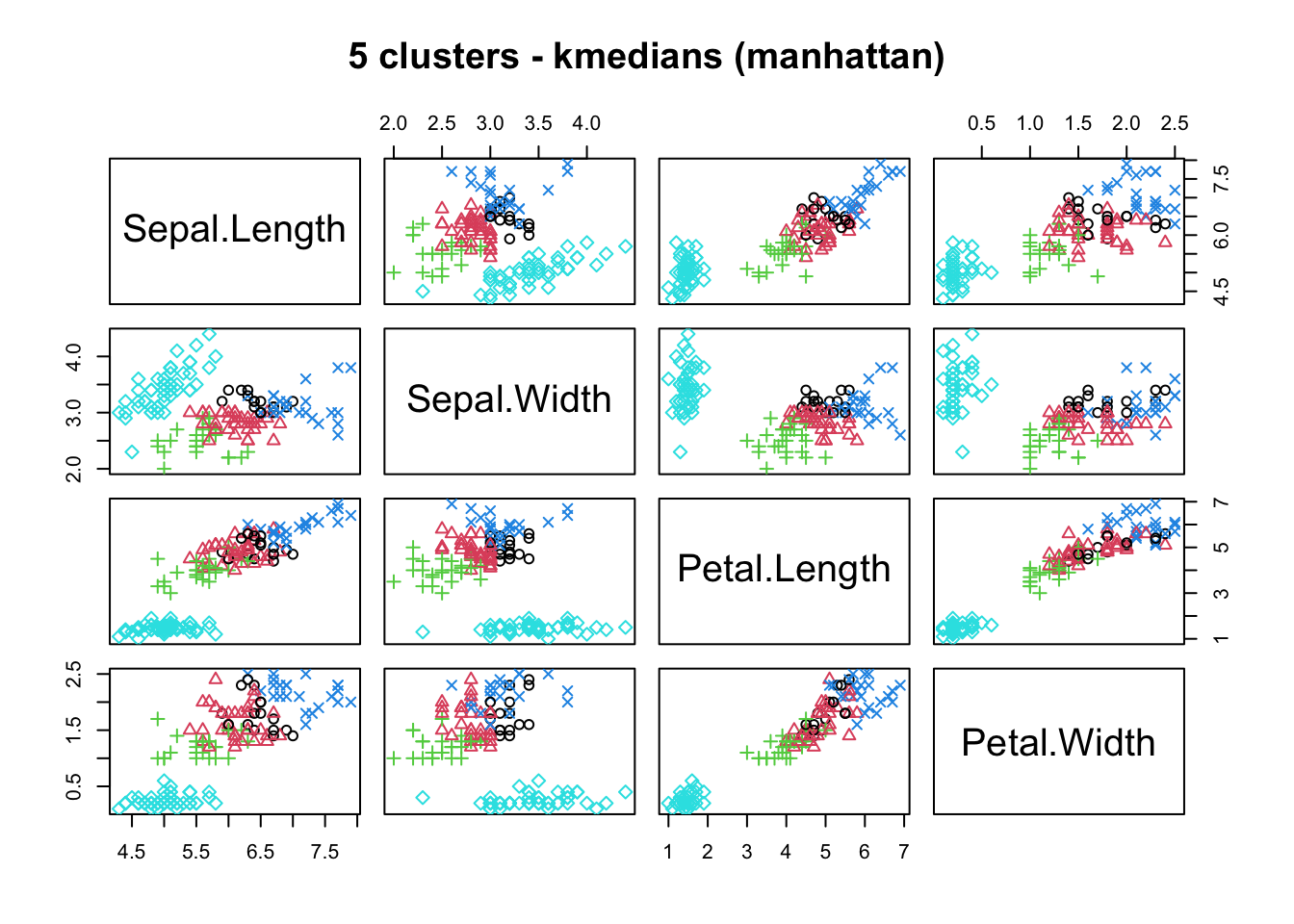

16.4.3 k-Means