Module 15 Data Visualization

Why do we display evidence in a report, in a newspaper article, or online? What is the fundamental goal of our charts and graphs? Representation data properly, in a manner that will allow an audience to gain insight about the underlying situation, is, without a doubt, the most important skill a quantitative consultant must possess. In this chapter, we introduce some commonly-used charts, discuss the fundamental principles of analytical designs, give a brief overview of dashboards, and present the important notions of the grammar of graphics and its implementation in ggplot2.

15.1 Data and Charts

As data scientist Damian Mingle once put it, modern data analysis is a different beast:

“Discovery is no longer limited by the collection and processing of data, but rather management, analysis, and visualization. [103]”

What can be done with the data, once it has been collected/processed?

Two suggestions come to mind:

analysis is the process by which we extract actionable insights from the data (this process is discussed in later subsections), while

visualization is the process of presenting data and analysis outputs in a visual format; visualization of data prior to analysis can help simplify the analytical process; post-analysis, it allows for the results to be communicated to various stakeholders.

In this section, we focus on important visualization concepts and methods; we shall provide examples of data displays to illustrate the various possibilities that might be produced by the data presentation component of a data analysis system.

15.1.1 Pre-Analysis Uses

Even before the analytical stage is reached, data visualization can be used to set the stage for analysis by:

detecting invalid entries and outliers;

shaping the data transformations (binning, standardization, dimension reduction, etc.);

getting a sense for the data (data analysis as an art form, exploratory analysis), and

identifying hidden data structures (clustering, associations, patterns which may inform the next stage of analysis, etc.).

15.1.2 Presenting Results

The crucial element of data presentations is that they need to help convey the insight (or the message); they should be clear, engaging, and (more importantly) readable. Our ability to think of questions (and to answer them) is in some sense limited by what we can visualize.

There is always a risk that if certain types of visualization techniques dominate in evidence presentations, the kinds of questions that are particularly well-suited to providing data for these techniques will come to dominate the landscape, which will then affect data collection techniques, data availability, future interest, and so forth.

15.1.2.1 Generating Ideas and Insights

In Beautiful Evidence [104], E.explains that evidence is presented to assist our thinking processes. He further suggests that there is a symmetry to visual displays of evidence – that visualization consumers should be seeking exactly (and explicitly) what the visualization producers should be providing, namely:

meaningful comparisons;

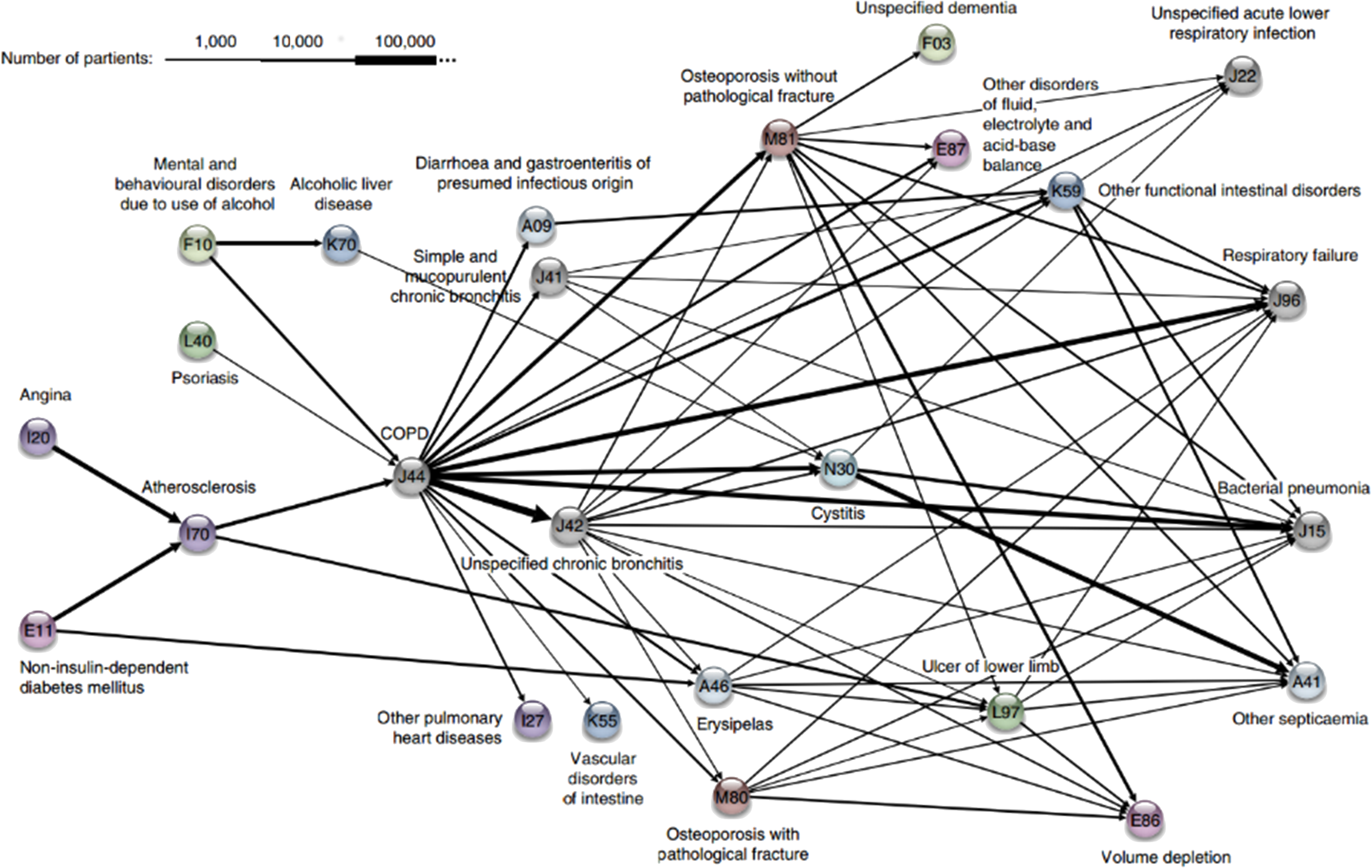

causal networks and underlying structure;

multivariate links;

integrated and relevant data, and

a primary focus on content.

More details can be found in Fundamental Principles of Analytical Design.

15.1.2.2 Selecting a Chart Type

The choice of visualization methods is strongly dependent on the analysis objective, that is, on the questions that need to be answered. Presentation methods should not be selected randomly (or simply from a list of easily-produced templates) [83].

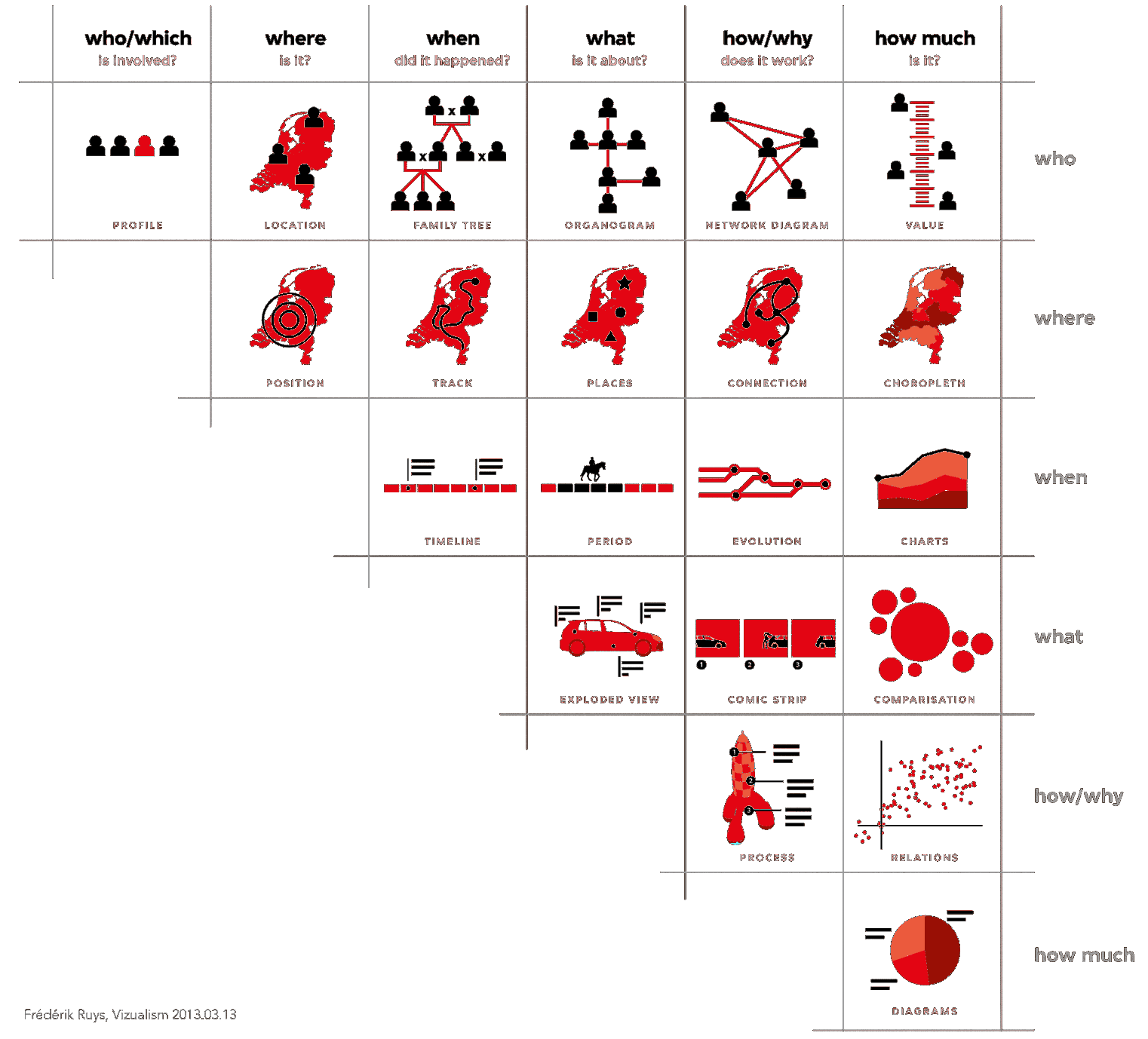

In Figure 15.1 below, F. Ruys suggests various types of visual displays that can be used, depending on the objective:

who is involved?

where is the situation taking place?

when is it happening?

what is it about?

how/why does it work?

how much?

Figure 15.1: Data visualization suggestions, by type of question (F. Ruys, Vizualism.nl).

A general dashboard should at least be able to produce the following types of display:

charts – comparison and relation (scatterplots, bubble charts, parallel coordinate charts, decision trees, cluster plots, trend plots)

choropleth maps (heat maps, classification maps)

network diagrams and connection maps (association rule networks, phrase nets)

univariate diagrams (word clouds, box plots, histograms)

15.1.3 Multivariate Elements in Charts

At most two fields can be represented by position in the plane. How can we then represent other crucial elements on a flat computer screen?

Potential solutions include:

third dimension

marker size

marker colour

colour intensity and value

marker texture

line orientation

marker shape

motion/movie

These elements do not always mix well – efficient design is as much art as it is science.

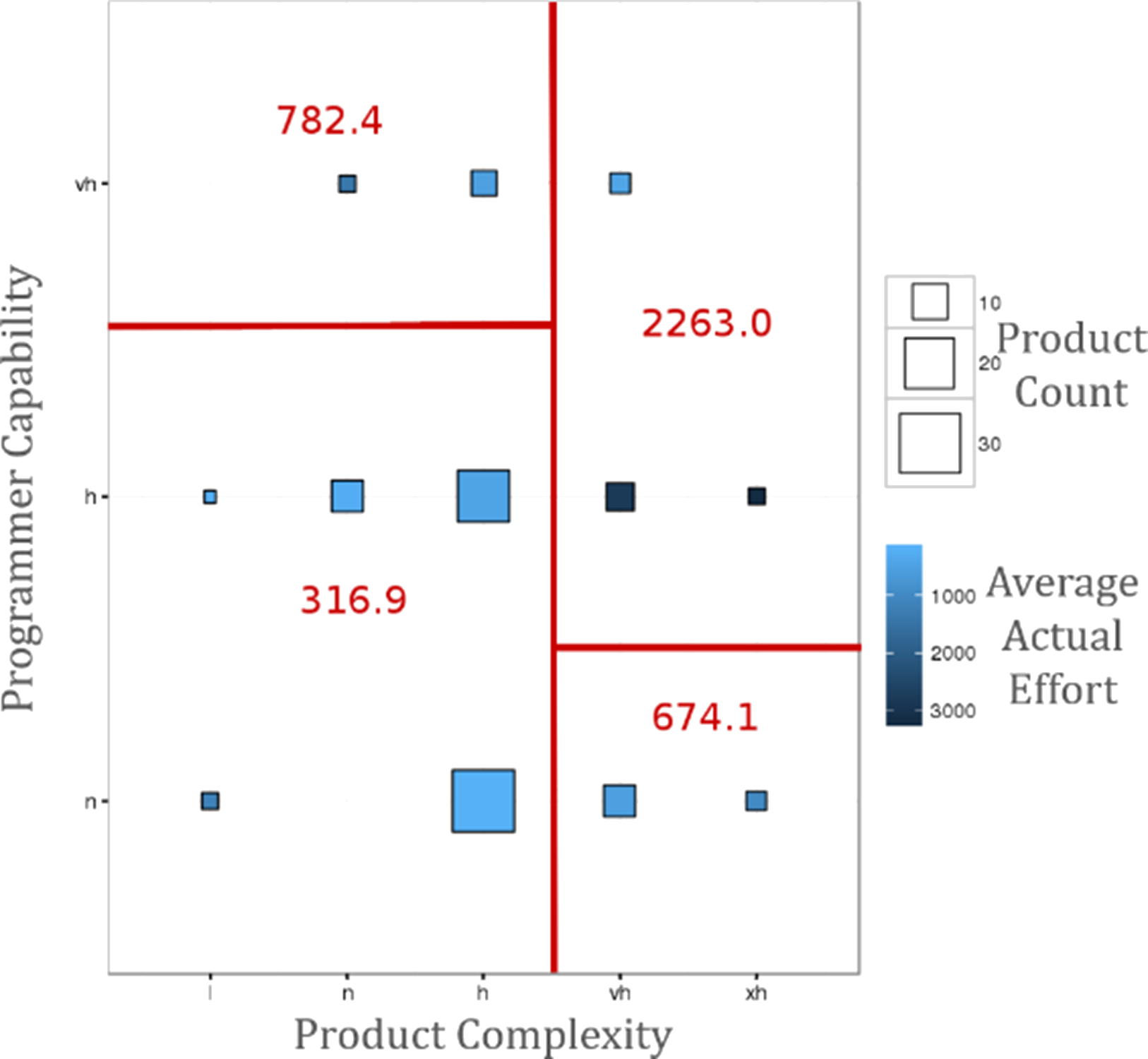

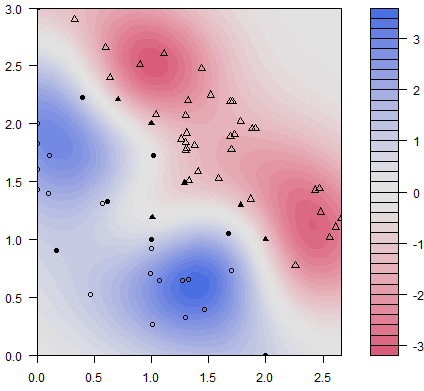

The following examples, along with concise descriptions of key components and lists of questions that they could help answer, highlight charts’ strengths (and limitations). Some additional diagrams showcasing the four presentation types discussed above are also provided.

15.1.3.1 Bubble Chart

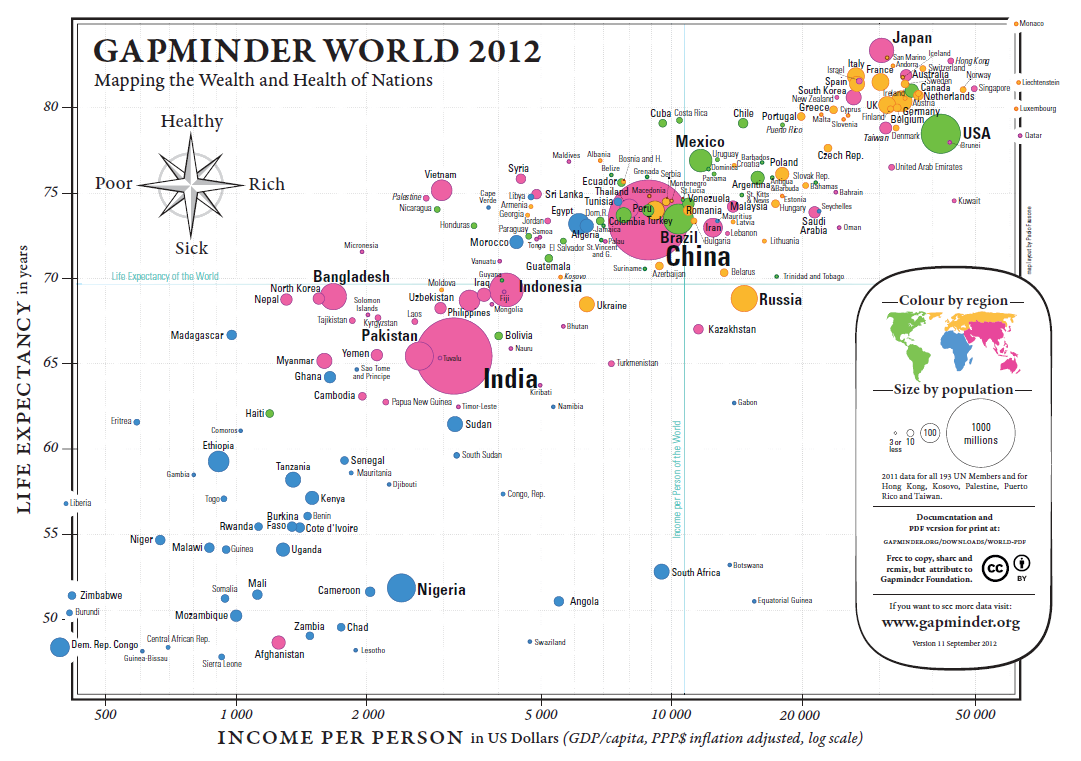

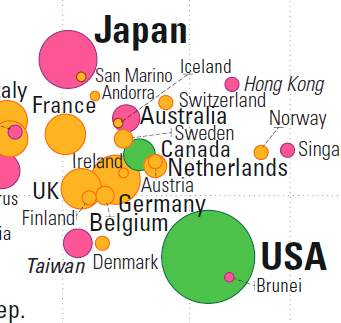

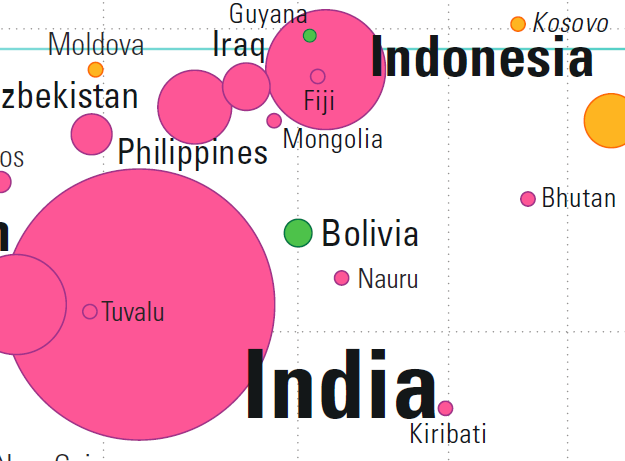

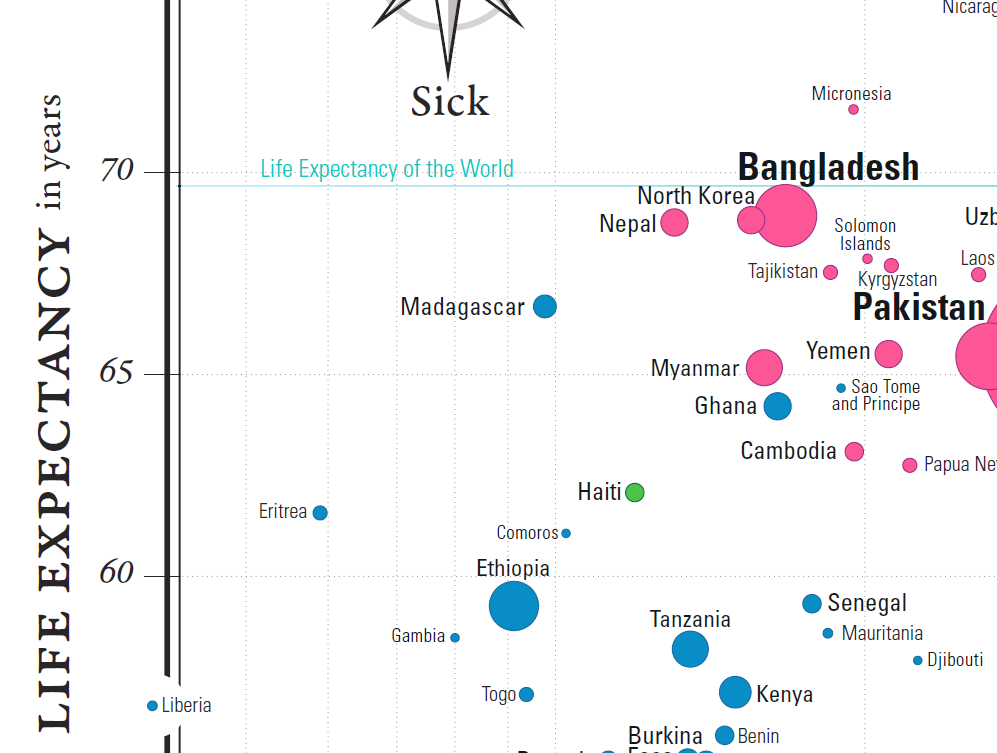

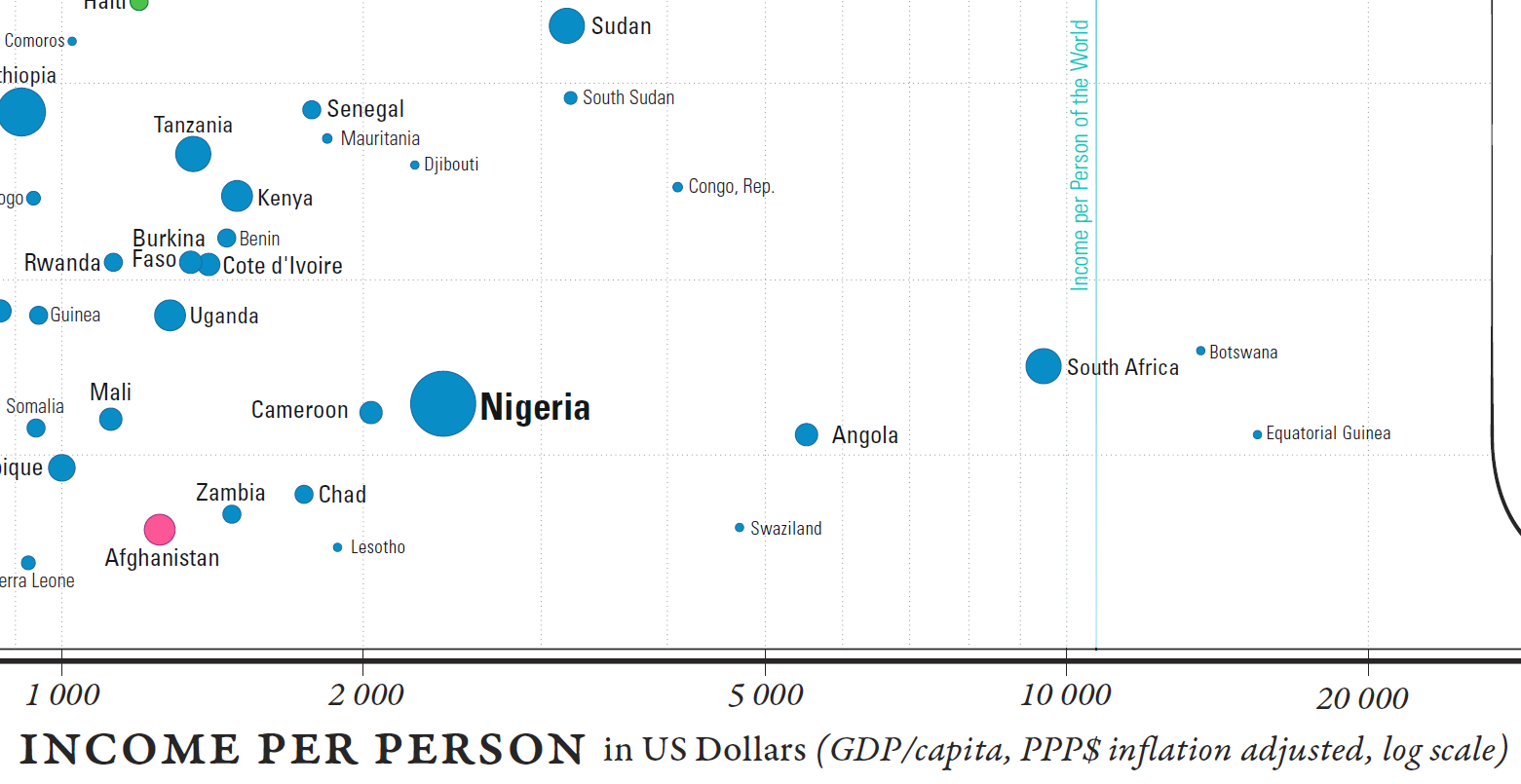

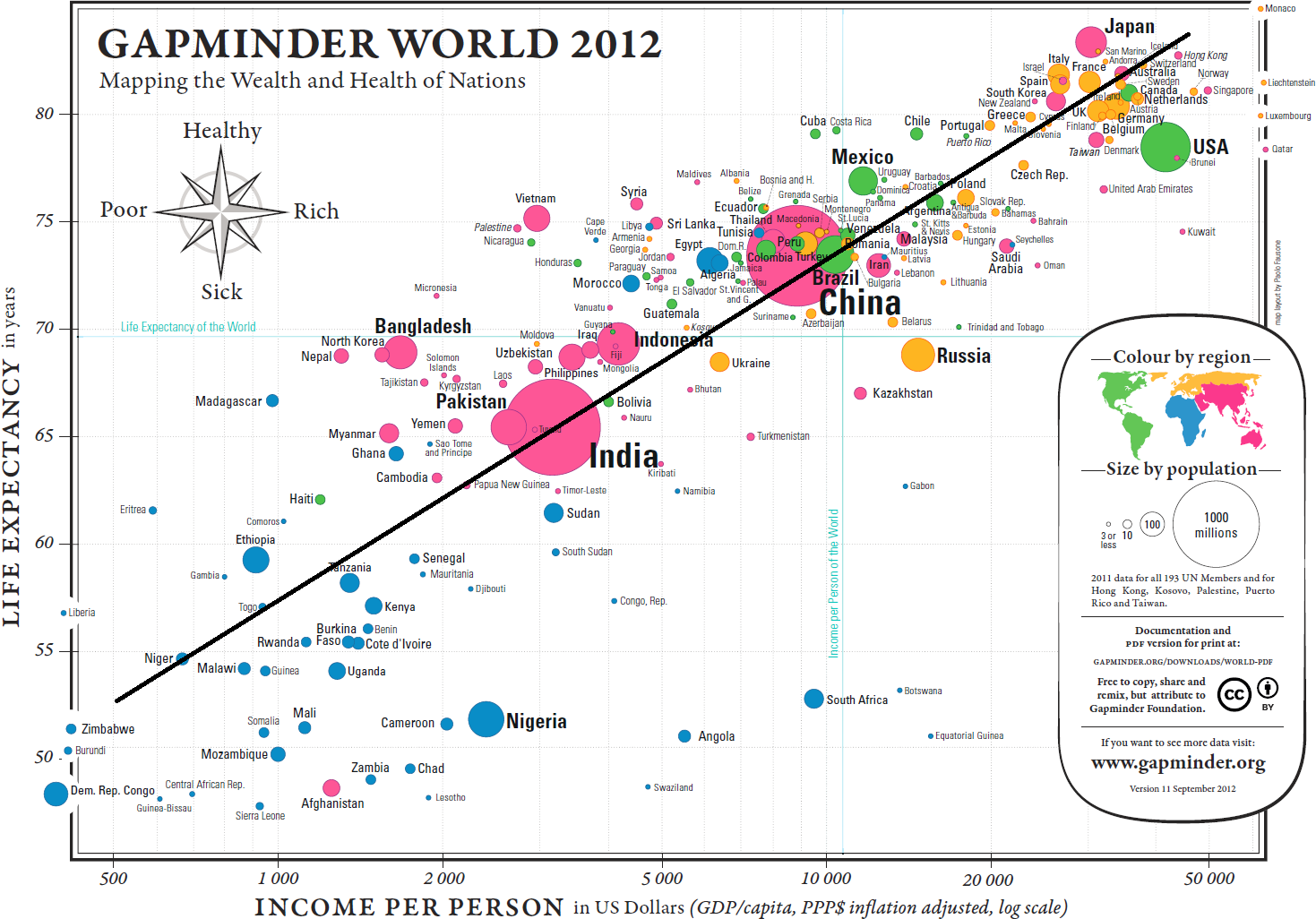

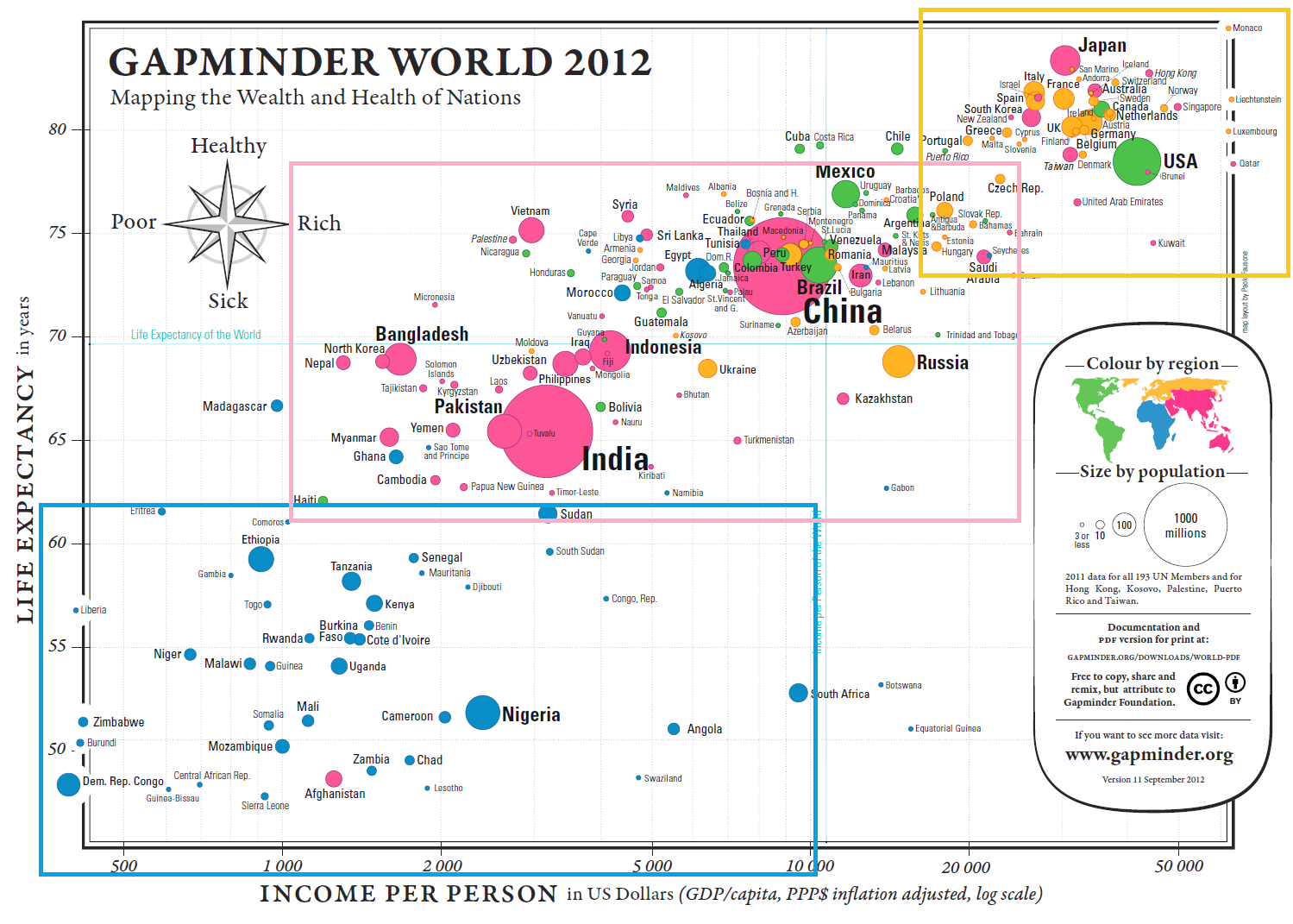

Example: Health and Wealth of Nations (see Figure 15.2)

Figure 15.2: Health and Wealth of Nations, in 2012 (Gapminder Foundation).

Data:

2012 life expectancy in years

2012 inflation adjusted GDP/capita in USD

2012 population for 193 UN members and 5 other countries

Some Questions and Comparisons:

Can we predict the life expectancy of a nation given its GDP/capita?

(The trend is roughly linear: \(\mbox{Expectancy}\approx 6.8 \times \ln \mbox{GDP/capita} + 10.6\))Are there outlier countries? Botswana, South Africa, and Vietnam, at a glance.

Are countries with a smaller population healthier? Bubble size seems uncorrelated with the axes’ variates.

Is continental membership an indicator of health and wealth levels? There is a clear divide between Western Nations (and Japan), most of Asia, and Africa.

How do countries compare against world values for life expectancy and GDP per capita? The vast majority of countries fall in three of the quadrants. There are very few wealthy countries with low life expectancy. China sits near the world values, which is expected for life expectancy, but more surprising when it comes to GDP/capita – compare with India.

Multivariate Elements:

positions for health and wealth

bubble size for population

colour for continental membership

labels to identify the nations

Comments:

Are life expectancy and GDP/capita appropriate proxies for health and wealth?

A fifth element could also be added to a screen display: the passage of time. In this case, how do we deal with countries coming into existence (and ceasing to exist as political entities)?

15.1.3.2 Choropleth Map

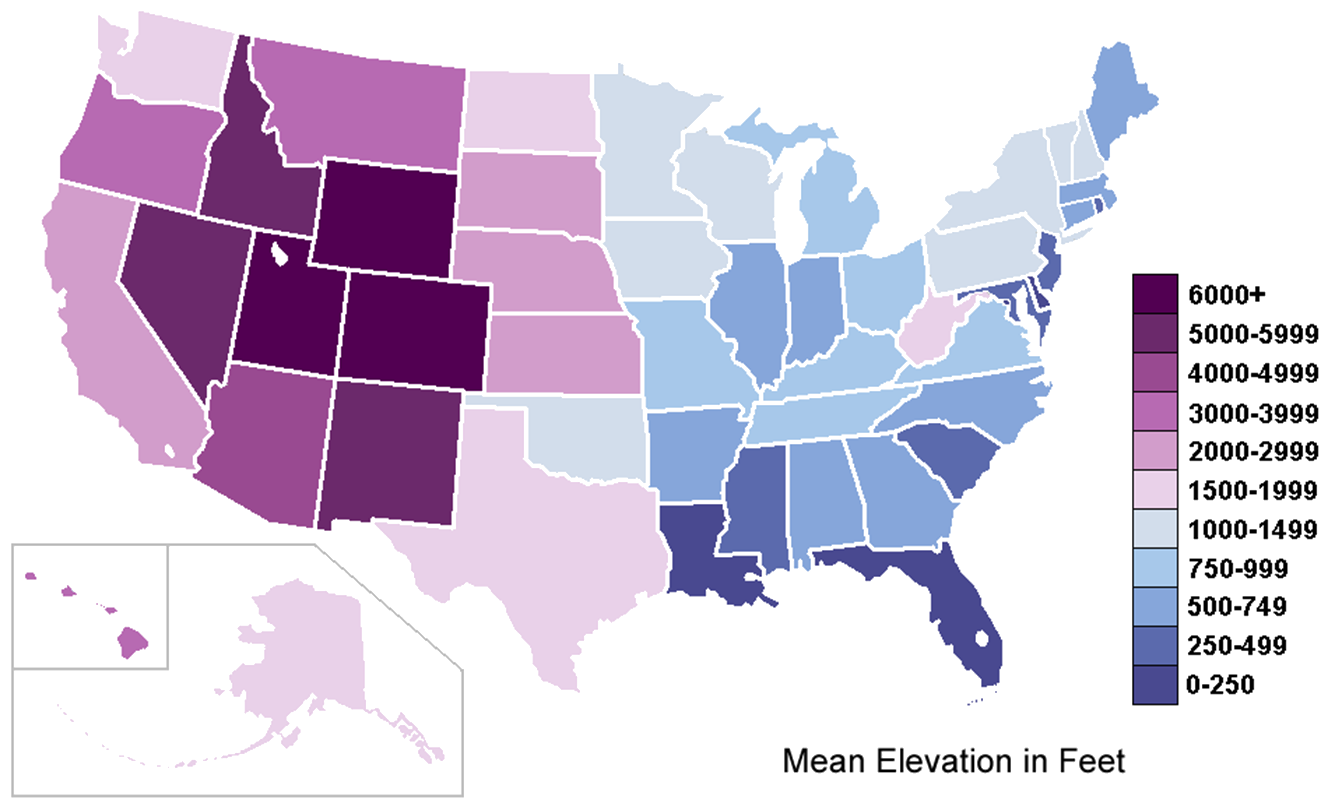

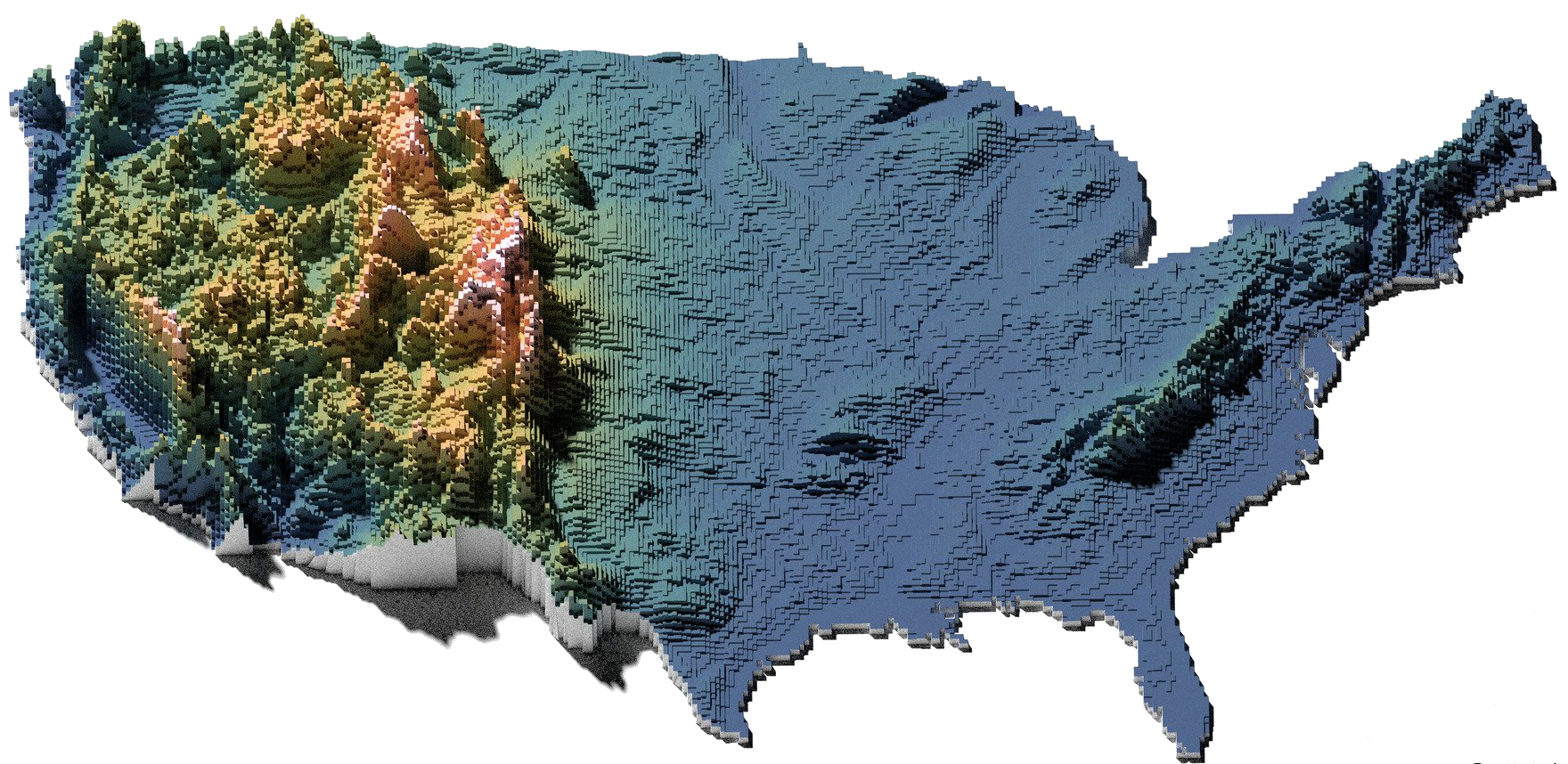

Example: Mean Elevation by U.S. State (see Figure 15.3)

Figure 15.3: Mean elevation by U.S. state, in feet (source unknown); contrast with high resolution elevation map (by twitter user @cstats.)

Data: 50 observations, ranging from sea level (0-250) to (6000+)

Some Questions and Comparisons:

Can the mean elevation of the U.S. states tell us something about the global topography of the U.S.? West has higher mean elevation related to the presence of the Rockies; Eastern coastal states are more likely to suffer from rising water levels, for instance.

Are there any states that do not “belong” in their local neighbourhood, elevation-wise? West Virginia and Oklahoma seem to have the “wrong” shade – is that an artifact of the colour gradient and scale in use?

Multivariate Elements: geographical distribution and purple-blue colour gradient (as the marker for mean elevation)

Comments:

Is the ‘mean’ the right measurement to use for this map? It depends on the author’s purpose.

Would there be ways to include other variables in this chart? Population density with texture, for instance.

15.1.3.3 Network Diagram

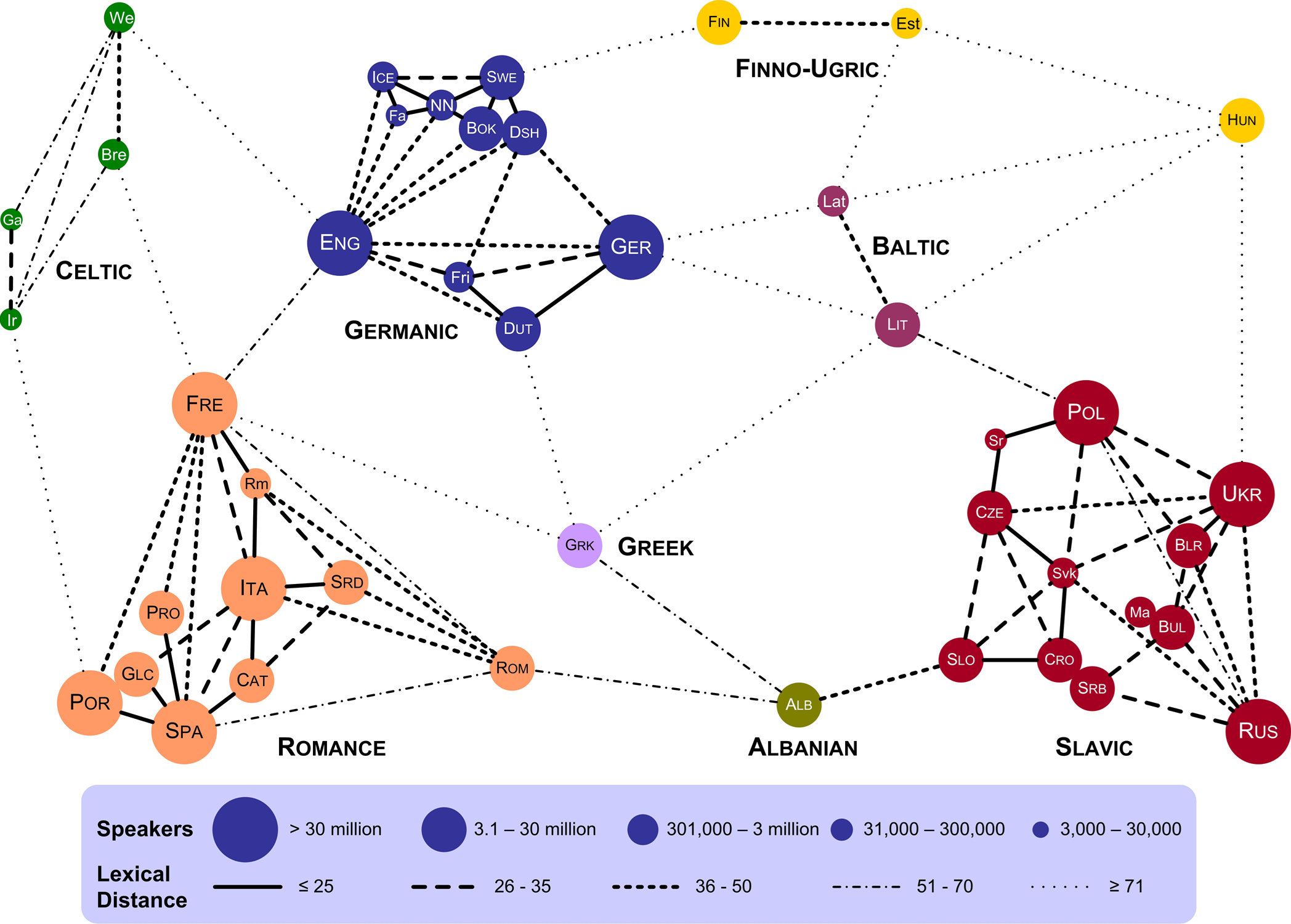

Example: Lexical Distances (see Figure 15.4)

Figure 15.4: Lexical distance of European languages (T. Elms, [105).

Data:

speakers and language groups for 43 European languages

lexical distances between languages

Some Questions and Comparisons:

Are there languages that are lexically closer to languages in other lexical groups than to languages in their own groups? French is lexically closer to English than it is to Romanian, say.

Which language has the most links to other languages? English has 10 links.

Are there languages that are lexically close to multiple languages in other groups? Greek is lexically close to 5 groups.

Is there a correlation between the number of speakers and the number of languages in a language group? Language groups with more speakers tend to have more languages.

Does the bubble size refer only to European speakers? Portuguese is as large as French?

Multivariate Elements:

colour and cluster for language group

line style for lexical distance

bubble size for number of speakers

Comments:

How is lexical distance computed?

Some language pairs are not joined by links – does this mean that their lexical distance is large enough not to be rendered?

Are the actual geometrical distances meaningful? For instance, Estonian is closer to French in the chart than it is to Portuguese – is it also lexically closer?

15.1.4 Visualization Catalogue

Here are some examples of other types of visualizations; more comprehensive catalogues can be found in [83], [83], [106]–[109], among others.

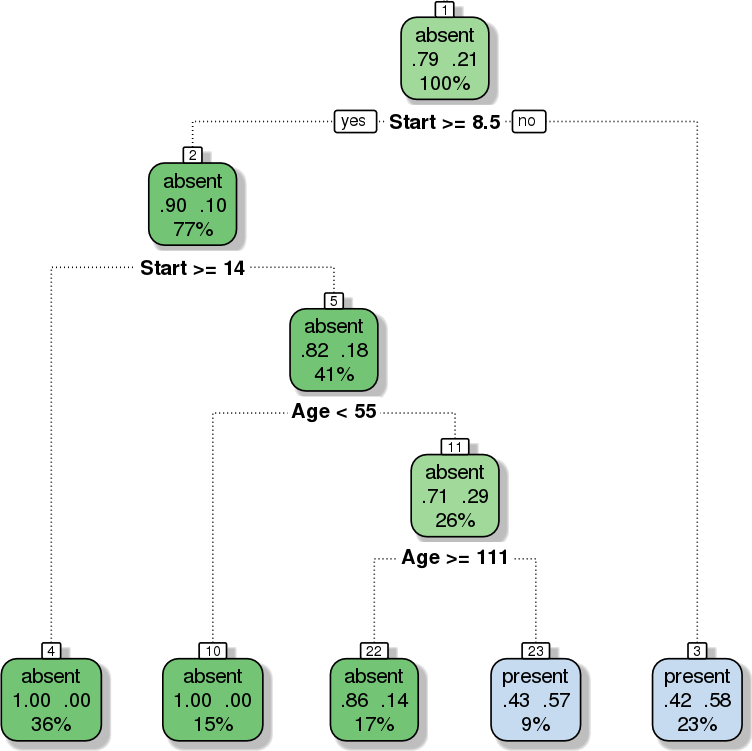

Figure 15.5: Decision Tree: classification scheme for the kyphosis dataset (personal file).

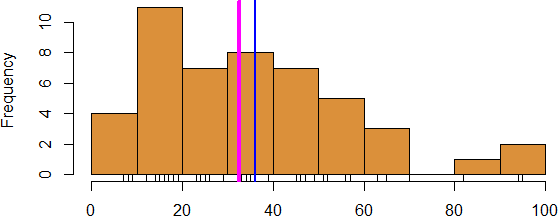

Figure 15.6: Histogram of reported weekly work hours (personal file).

Figure 15.7: Decision tree bubble chart: estimated average project effort (in red) over-layed over product complexity, programmer capability, and product count in NASA’s COCOMO dataset (personal file).

Figure 15.8: Association rules network: diagnosis network around COPD in the Danish Medical Dataset [62].

Figure 15.9: Classification scatterplot: artificial dataset (personal file).

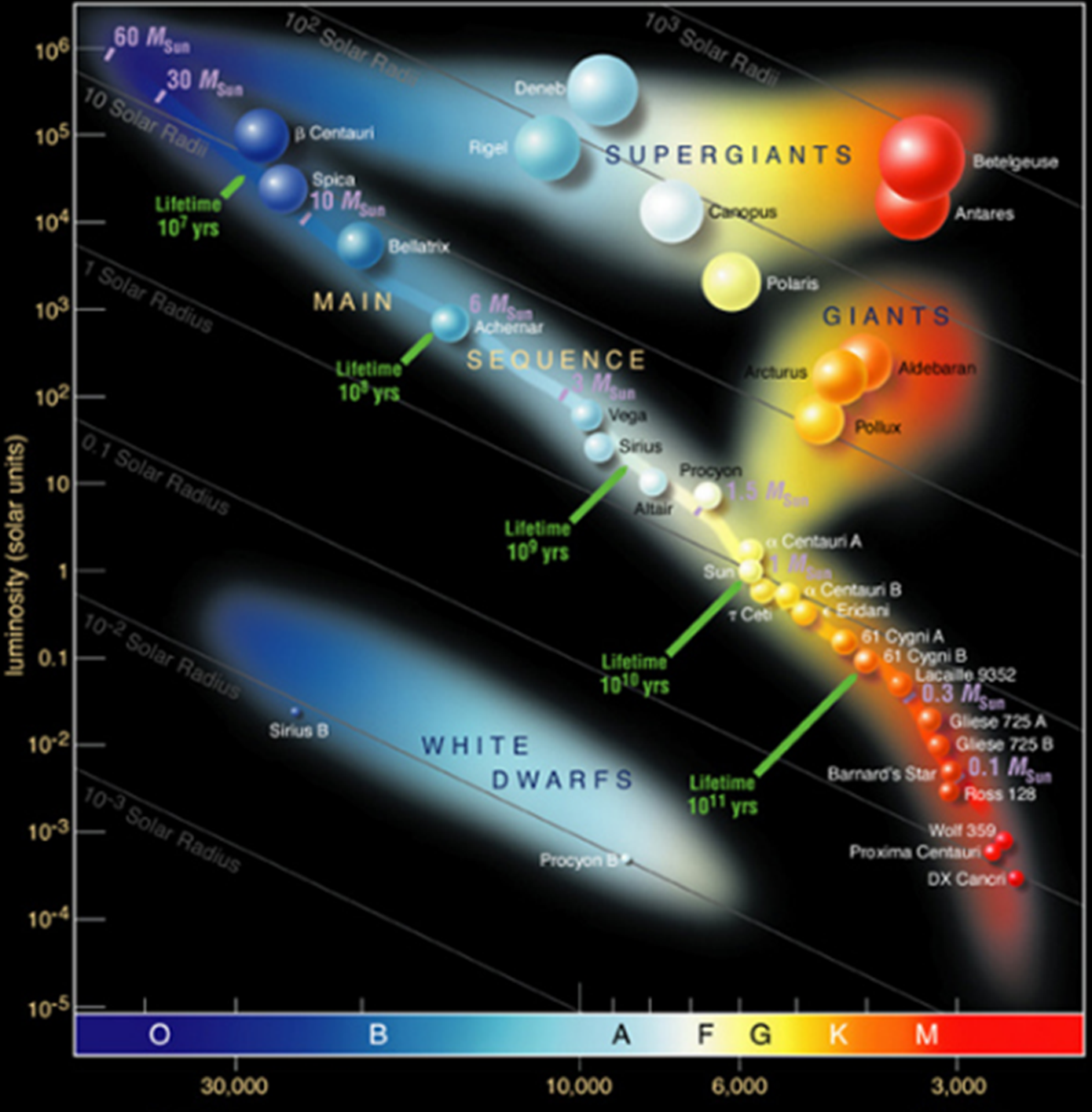

Figure 15.10: Classification bubble chart: Hertzsprung- Russell diagram of stellar evolution (European Southern Observatory).

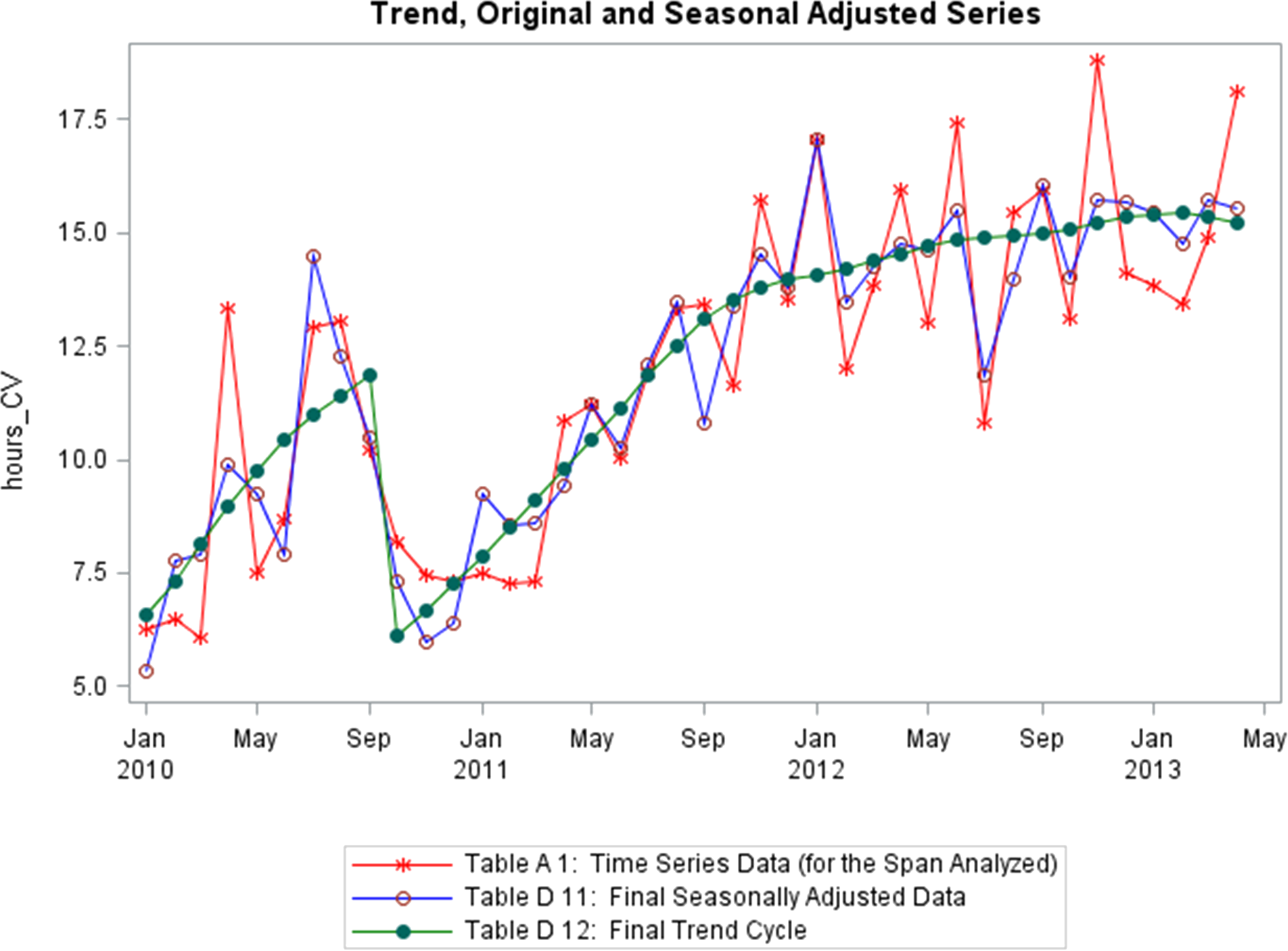

Figure 15.11: Time series: trend, seasonality, shifts of a supply chain metric (personal file).

15.2 A Word About Accessibility

While visual displays can help provide analysts with insight, some work remains to be done in regard to visual impairment – short of describing the features/emerging structures in a visualization, graphs can at best succeed in conveying relevant information to a subset of the population.

The onus remains on the analyst to not only produce clear and meaningful visualizations (through a clever use of contrast, say), but also to describe them and their features in a fashion that allows all to “see” the insights. One drawback is that in order for this description to be done properly, the analyst needs to have seen all the insights, which is not always possible. Examples of “data physicalizations” can be found in [110].

15.3 Fundamental Principles of Analytical Design

In his 2006 offering Beautiful Evidence, E. Tufte highlights what he calls the Fundamental Principles of Analytical Design [104]. Tufte suggests that we present evidence to assist our thinking processes [104, p. 137].

In this regard, his principles are universal – a strong argument can be made that they are dependent neither on technology nor on culture.

Reasoning (and communicating our thoughts) is intertwined with our lives in a causal and dynamic multivariate Universe (the 4 dimensions of space-time making up only a small subset of available variates); whatever cognitive skills allow us to live and evolve can also be brought to bear on the presentation of evidence. Tufte also highlights a particular symmetry to visual displays of evidence, being that consumers of charts should be seeking exactly what producers of charts should be providing (more on exactly what that is in a little bit).

Physical science displays tend to be less descriptive and verbal, and more visual and quantitative; up to now, these trends have tended to be reversed when dealing with evidence displays about human behaviour.

In spite of this, Tufte argues that his principles of analytical design can also be applied to social science and medicine. To demonstrate the universality of his principles, he describes in detail how they are applied to Minard’s celebrated March to Moscow.

His lengthy analysis of the image is well worth the read [104, pp. 122–139] – it will not be repeated here (we discuss the chart in [111], [112]). Rather, we will illustrate the principles with the help of the Gapminder’s Foundation 2012 Health and Wealth of Nations data visualization (see Figure 15.2), a bubble chart plotting 2012 life expectancy, adjusted income per person in USD (log-scaled), population, and continental membership for 193 UN members and 5 other countries, using the latest available data (a high-resolution version of the image is available on the Gapminder website.)

Tufte identifies 6 basic properties of superior analytical charts:

meaningful comparisons

causal and underlying structures

multivariate links

integrated and relevant data

honest documentation

primary focus on content

15.3.1 Comparisons

Show comparisons, contrasts, differences. [104, p. 127]

Comparisons come in varied flavours: for instance, one could compare a:

unit at a given time against the same unit at a later time;

unit’s component against another of its components;

unit against another unit,

or any number of combinations of these flavours.

Tufte further explains that

[…] the fundamental analytical act in statistical reasoning is to answer the question "“Compared with what?” Whether we are evaluating changes over space or time, searching big data bases, adjusting and controlling for variables, designing experiments, specifying multiple regressions, or doing just about any kind of evidence-based reasoning, **the essential point is to make intelligent and appropriate comparisons*}** [emphasis added]. Thus, visual displays […] should show comparisons. [104, p. 127]

Not every comparison will turn out to be insightful, but avoiding comparisons altogether is equivalent to producing a useless display, built from a single datum.

15.3.1.1 Comparisons - Health and Wealth of Nations

First, note that each bubble represents a different country, and that the location of each bubble’s centre is a precise point corresponding to the country’s life expectancy and its GDP per capita. The size of the bubble correlates with the country’s population and its colour is linked to continental membership.

The chart’s compass provides a handy comparison tool:

a bubble further to the right (resp. the left) represents a “wealthier” (resp. “poorer”) country;

a bubble further above (resp. below) represents a “healthier” (resp. “sicker”) country.

For instance, a comparison between Japan, Germany and the USA shows that Japan is healthier than Germany, which is itself healthier than the USA (as determined by life expectancy) while the USA is wealthier than Germany, which is itself wealthier than Japan (as determined by GDP per capita, see Figure 15.12).

Figure 15.12: Comparisons in the Gapminder chart: country-to-country.

It is possible for two countries to have roughly the same health and the same wealth: consider Indonesia and Fiji, or India and Tuvalu, for instance (see Figure 15.13).

Figure 15.13: Comparisons in the Gapminder chart: country-to-country overlap.

In each pair, the centres of both bubbles (nearly) overlap: any difference in the data must be found in the bubbles’ area or in their colour.

Countries can also be compared against world values for life expectancy and GDP per capita (a shade under 70 years and in the neighbourhood of \(\$11K\), respectively). The world’s mean life expectancy and income per person are traced in light blue (see last two images in Figure 15.14).

Figure 15.14: Comparisons in the Gapminder chart country-to-world-life-expectancy and country-to-world-income-per-person.

Wealthier, healthier, poorer, and sicker are relative terms, but we can also use them to classify the world’s nations with respect to these mean values, “wealthier” meaning “wealthier than the average country”, and so on.

15.3.2 Causality, Mechanism, Structure, Explanation

Show causality, mechanism, explanation, systematicstructure. [104, p. 128]

In essence, this is the core principle behind data visualization: the display needs to explain something, it needs to provide (potential) links between cause and effect. As Tufte points out,

[…] often the reason that we examine evidence is to understand causality, mechanism, dynamics, process, or systematic structure [emphasis added]. Scientific research involves causal thinking, for Nature’s laws are causal laws. […] Reasoning about reforms and making decisions also demands causal logic. To produce the desired effects, we need to know about and govern the causes; thus “policy-thinking is and must be causality-thinking” [104, p. 128], [113].

Note also that

simply collecting data may provoke thoughts about cause and effect: measurements are inherently comparative, and comparisons promptly lead to reasoning about various sources of differences and variability [104, p. 128].

Finally, if the visualization can be removed without diminishing the narrative, then that chart should in all probability be excluded from the final product, no matter how pretty and modern it looks, or how costly it was to produce.

15.3.2.1 Causality, Mechanism, Structure, Explanation - Health and Wealth of Nations

At a glance, the relation between life expectancy and the logarithm of the income per person seems to be increasing more or less linearly. Without access to the data, the exact parameter values cannot be estimated analytically, but an approximate line-of-best-fit has been added to the chart (see Figure 15.15).

Figure 15.15: Approximate line of best fit for the Gapminder chart.

Using the points \((10K,73.5)\) and \((50K,84.5)\) yields a line with equation \[\mbox{Life Expectancy} \approx 6.83 \times \ln (\mbox{Income Per Capita})+10.55\] The exact form of the relationship and the numerical values of the parameters are of little significance at this stage – the key insight is that wealthier countries appear to be healthier, generally, and vice-versa (although whether wealth drives health, health drives wealth, or some other factor(s) [education?] drive both wealth and health cannot be answered without further analysis and access to knowledge external to the chart).

The chart also highlights an interesting feature in the data, namely that the four quadrants created by separating the data along the Earth’s average life expectancy and the GDP per capita for the entire planet do not host the same patterns.

Naïvely, it might have been expected that each of the quadrants would contain about 25% of the world’s countries (although the large population of China and India muddle the picture somewhat). However, one quadrant is substantially under-represented in the visualization. Should it come as a surprise that there are so few “wealthier” yet “sicker” countries? (see Figure 15.16).

Figure 15.16: Close-up, bottom right quadrant.

It could even be argued that Russia and Kazakhstan are in fact too near the separators to really be considered clear-cut members of the quadrant, so that the overwhelming majority of the planet’s countries are found in one of only three quadrants.

In the same vein, when we consider the data visualization as a whole, there seems to be one group of outliers below the main trend, to the right, and to a lesser extent, one group above the main trend, to the left (see Figure @ref{fig:Gapminderoutliers}).

Figure 15.17: Potential outliers in the Gapminder chart.

These cry out for an explanation: South Africa, for instance, has a relatively high GDP per capita but a low life expectancy (potentially, income disparity between a poorer majority and a substantially wealthier minority might help push the bubble to the right, while the lower life expectancy of the majority drives the overall life expectancy to the bottom).

This brings up a crucial point about data visualization: it seems virtually certain that the racial politics of apartheid played a major role in the position of the South African outlier, but the chart emphatically DOES NOT provide a proof of that assertion. Charts suggest, but proof comes from deeper domain-specific analyses.

15.3.3 Multivariate Analysis

Show multivariate data; that is, show more than 1 or 2 variables. [104, p. 130]

In an age where data collection is becoming easier by the minute, this seems like a no-brainer: why waste time on uninformative univariate plots? Indeed,

nearly all the interesting worlds (physical, biological, imaginary, human) we seek to understand are inevitably multivariate in nature. [104, p. 129]

Furthermore, as Tufte suggest,

the analysis of cause and effect, initially bivariate, quickly becomes multivariate through such necessary elaborations as the conditions under which the causal relation holds, interaction effects, multiple causes, multiple effects, causal sequences, sources of bias, spurious correlation, sources of measurement error, competing variables, and whether the alleged cause is merely a proxy or a marker variable (see for instance, [114]). [104, p. 129]

While we should not dismiss low-dimensional evidence simply because it is low-dimensional, Tufte cautions that

reasoning about evidence should not be stuck in 2 dimensions, for the world we seek to understand is profoundly multivariate [emphasis added]. [104, p. 130]

Consultants and analysts may question the ultimate validity of this principle: after all, doesn’t Occam’s Razor warn us that “it is futile to do with more things that which can be done with fewer”? This would seem to be a fairly strong admonition to not reject low-dimensional visualizations out of hand. This interpretation depends, of course, on what it means to “do with fewer”: are we attempting to “do with fewer”, or to “do with fewer”?

If it is the former, then we can produce simple charts to represent the data (which quickly balloons into a multivariate meta-display), but any significant link between 3 and more variables is unlikely to be shown, which drastically reduces the explanatory power of the charts.

If it is the latter, the difficulty evaporates: we simply retain as many features as are necessary to maintain the desired explanatory power.

15.3.3.1 Multivariate Analysis - Health and Wealth of Nations

Only 4 variables are represented in the display, which we could argue just barely qualifies the data as multivariate. The population size seems uncorrelated with both of the axes’ variates, unlike continental membership: there is a clear divide between the West, most of Asia, and Africa (see Figure @re(fig:Gapclusters). This “clustering” of the world’s nations certainly fits with common wisdom about the state of the planet, which provides some level of validation for the display (is it the only way to cluster the observations?).

Figure 15.18: Potential clusters in the Gapminder chart.

Other variables could also be considered or added, notably the year, allowing for bubble movement: one would expect that life expectancy and GDP per capita have both been increasing over time. The Gapminder Foundation’s online tool can build charts with other variates, leading to interesting inferences and suggestions. Give it a try!

15.3.4 Integration of Evidence

Completely integrate words, numbers, images, diagrams. [104, p. 131]

Data does not live in a vacuum. Tufte’s approach is clear:

the evidence doesn’t care what it is – whether word, number, image. In reasoning about substantive problems, what matters entirely is the evidence, not particular modes of evidence [emphasis added]. [104, p. 130]

The main argument is that evidence from data is better understood when it is presented with context and accompanying meta-data.

Indeed,

words, numbers, pictures, diagrams, graphics, charts, tables belong together [emphasis added]. Excellent maps, which are the heart and soul of good practices in analytical graphics, routinely integrate words, numbers, line-art, grids, measurement scales. [104, p. 131]

Finally, Tufte makes the point that we should think of data visualizations and data tables as elements that provide vital evidence, and as such they should be integrated in the body of the text:

tables of data might be thought of as paragraphs of numbers, tightly integrated with the text for convenience of reading rather than segregated at the back of a report. […] Perhaps the number of data points may stand alone for a while, so we can get a clean look at the data, although techniques of layering and separation may simultaneously allow a clean look as well as bringing other information into the scene. [104, p. 131]

There is a flip side to this, of course, and it is that charts and displays should be annotated with as much text as is required to make the context clear.

When authors and researchers select a single specific method or mode of information during the inquiries, the focus switches from “can we explain what is happening?” to “can the method we selected explain what is happening?”

There is an art to method selection, and experience can often suggest relevant methods, but remember that “when all one has is a hammer, everything looks like a nail”: the goal should be to use whatever (and all) necessary evidence to shed light on “what is happening”.

If that goal is met, it makes no difference which modes of evidence were used.

15.3.4.1 Integration of Evidence - Health and Wealth of Nations

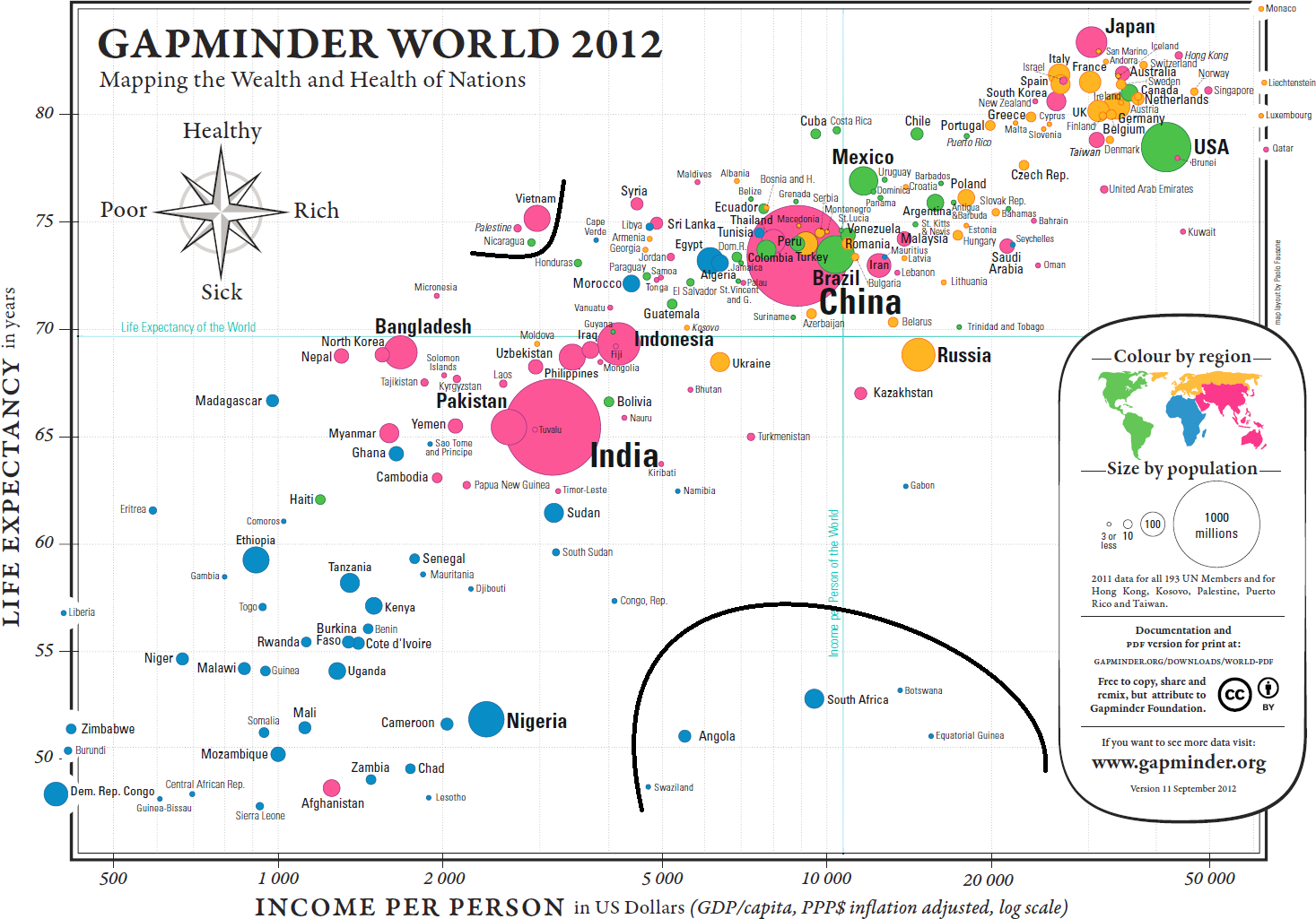

The various details attached to the chart (such as country names, font sizes, axes scale, grid, and world landmarks) provide substantial benefits when it comes to consuming the display. They may become lost in the background, with the consequence of being taken for granted.

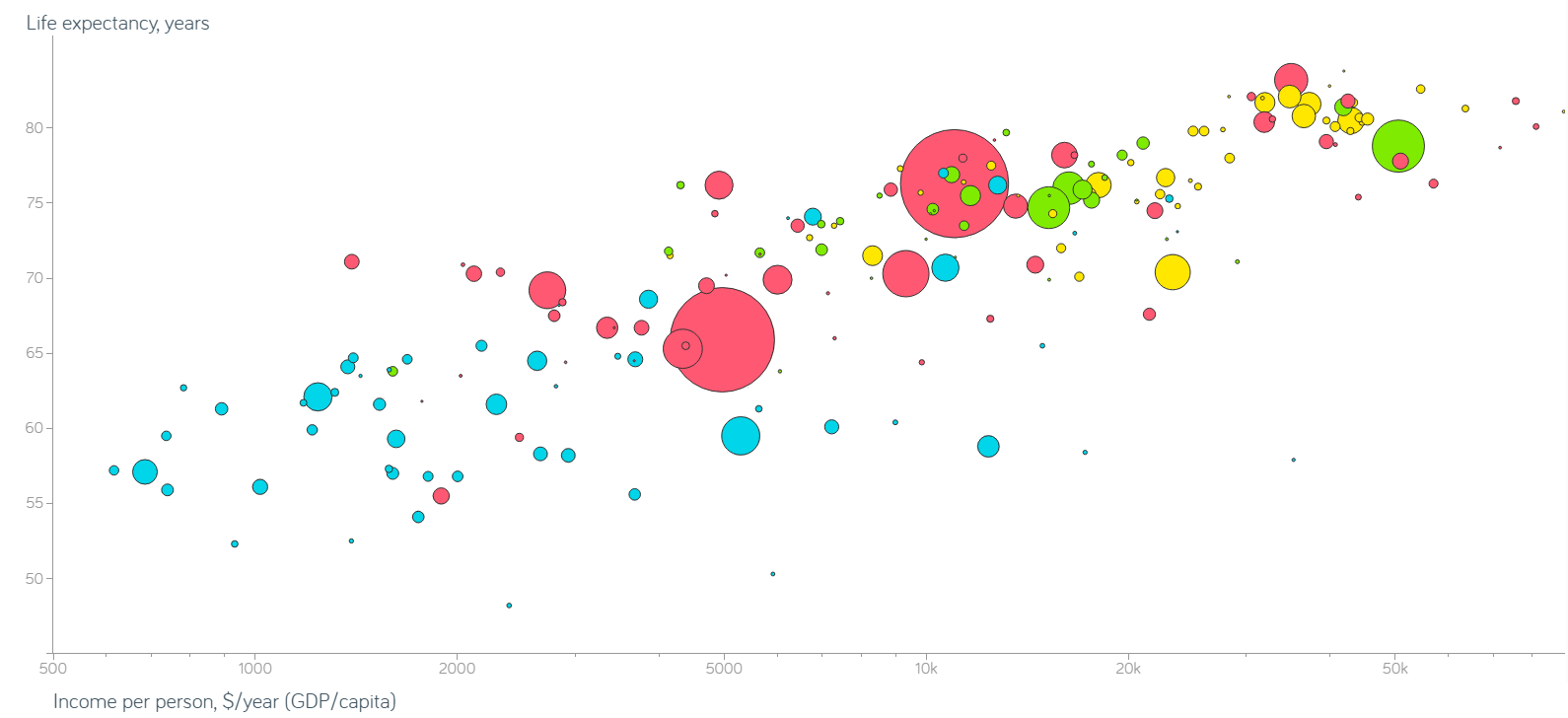

Compare the display obtained from (nearly) the same data, but without integration of evidence (see Figure 15.19).

Figure 15.19: Non-integrated Gapminder chart.

15.3.5 Documentation

Thoroughly describe the evidence. Provide a detailed title, indicate the authors and sponsors, document the data sources, show complete measurement scales, point out relevant issues. [104, p. 133]

We cannot always tell at a glance whether a pretty graphic speaks the truth or presents a relevant piece of information. Documented charts may provide a hint, as

the credibility of an evidence presentation depends significantly on the quality and integrity of the authors and their data sources. Documentation is an essential mechanism of quality control for displays of evidence. Thus authors must be named, sponsors revealed, their interests and agenda unveiled, sources described, scales labeled, details enumerated [emphasis added]. [104, p. 132]

Depending on the context, questions and items to address could include:

What is the title/subject of the visualization?

Who did the analysis? Who created the visualization? (if distinct from the analyst(s))

When was the visualization published? Which version of the visualization is rendered here?

Where did the underlying data come from? Who sponsored the display?

What assumptions were made during data processing and clean-up?

What colour schemes, legends, scales are in use in the chart?

It is not obvious whether all this information can fit inside a single chart in some cases. But, keeping in mind the principle of Integration of Evidence, charts should not be presented in isolation in the first place, and some of the relevant information can be provided in the text, on a webpage, or in an accompanying document.

This is especially important when it comes to discussing the methodological assumptions used for data collection, processing, and analysis. An honest assessment may require sizable amounts of text, and it may not be reasonable to include that information with the display (in that case, a link to the accompanying documentation should be provided):

publicly attributed authorship indicates to readers that someone is taking responsibility for the analysis; conversely, the absence of names signals an evasion of responsibility. […] People do things, not agencies, bureaus, departments, divisions [emphasis added]. [104, pp. 132–133]

15.3.5.1 Documentation - Health and Wealth of Nations

The Gapminder map might just be one of the best-documented charts in the data visualization ecosystem. Let us see if we can answer the questions suggested above.

What is the title/subject of the visualization? The health and wealth of nations in 2012, using the latest available data (2011).

Who did the analysis? Who sponsored the display? Who created the visualization? The analysis was conducted by the Gapminder Foundation; the map layout was created by Paulo Fausone. No data regarding the sponsor is found on the chart or in the documentation. It seems plausible that there is no external sponsor, but that is no certainty.

When was the visualization published? Which version is rendered here? The 11th version of the chart was published in September 2012.

Where did the underlying data come from? What assumptions were made during data processing and clean-up? Typically, the work that goes into preparing the data is swept under the carpet in favour of the visualization itself; there are no explicit source of data on this chart, for instance. However, there is a URL in the legend box that leads to detailed information. For most countries, life expectancy data was collected from:

the Human Mortality database;

the UN Population Division World Population Prospects;

files from historian James C. Riley;

the Human Life Table database;

data from diverse national statistical agencies;

the CIA World Fact book;

the World Bank, and

the South Sudan National Bureau of Statistics.

Benchmark 2005 GDP data was derived via regression analysis from International Comparison Program data for 144 countries, and extended to other jurisdictions using another regression against data from:

the UN Statistical Division;

Maddison Online;

the CIA World Fact book, and

estimates from the World Bank.

The 2012 values were then derived from the 2005 benchmarks using long-term growth rates estimate from:

Maddison Online;

Barro & Ursua;

the United Nations Statistical Division;

the Penn World Table (mark 6.2);

the International Monetary Fund’s World Economic Outlook database;

the World Development Indicators;

Eurostat, and

national statistical offices or some other specific publications.

Population estimates were collated from:

the United Nations Population Division World Population Prospects;

Maddison Online;

Mitchell’s International Historical Statistics;

the United Nations Statistical Division;

the US Census Bureau;

national sources;

undocumented sources, and

“guesstimates”.

Exact figures for countries with a population below 3 million inhabitants were not needed as this marked the lower end of the chart resolution.

What colour schemes, legends, scales are in use in the chart? The Legend Inset is fairly comprehensive (see Figure 15.20):

Figure 15.20: Legend inset for the Gapminder chart.

Perhaps the last item of note is that the scale of the axes differs: life expectancy is measured linearly, whereas GDP per capita is measured on a logarithmic scale.

15.3.6 Content Counts Most of All

Analytical presentations ultimately stand of fall depending on the quality, relevance, and integrity of their content. [104, p. 136]

Any amount of time and money can be spent on graphic designers and focus groups, but

the most effective way to improve a presentation is to get better content [emphasis added] […] design devices and gimmicks cannot salvage failed content. […] The first questions in constructing analytical displays are not “How can this presentation use the color purple?” Not “How large must the logotype be?” Not “How can the presentation use the Interactive Virtual Cyberspace Protocol Display Technology?” Not decoration, not production technology. The first question is “What are the content-reasoning tasks that this display is supposed to help with?” [104, p. 136]

The main objective is to produce a compelling narrative, which may not necessarily be the one that was initially expected to emerge from a solid analysis of sound data. Simply speaking, the visual display should assist in explaining the situation at hand and in answering the original questions that were asked of the data.

15.3.6.1 Contents Counts Most of All - Health and Wealth of Nations

How would we answer the following questions:

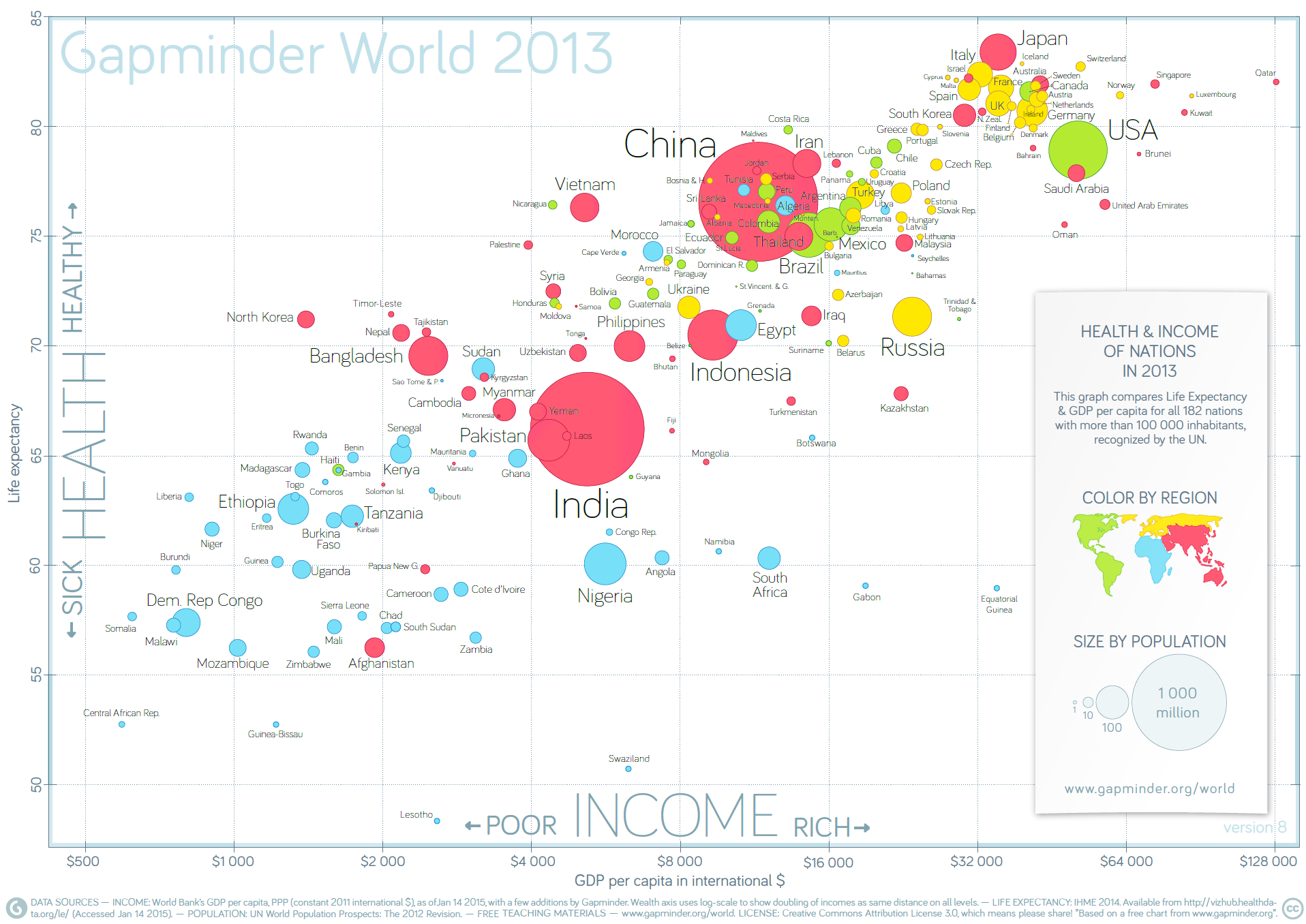

Do we observe similar patterns every year?

Does the shape of the relationship between life expectancy and log-GDP per capita vary continuously over time?

Do countries ever migrate large distances in the display over short periods?

Do exceptional events affect all countries similarly?

What are the effects of secession or annexation?

The 2012 Health and Wealth of Nations data represent a single datum in the general space of data visualizations; in this context, getting better content means getting data for other years, as well as for 2012.

Figure 15.21: Life expectancy and income per capita in 2013, by nation (Gapminder Foundation).

15.4 Basic Visualizations in R

Whenever we analyze data, the first thing we should do is look at it:

for each variable, what are the most common values?

How much variability is present?

Are there any unusual observations?

Producing graphics for data analysis is relatively simple. Producing graphics for publication is relatively more complex and typically

requires a great deal of tweaking to achieve the desired appearance.

Base R provides a number of functions for visualizing data. In this section, we will provide sufficient guidance so that most desired effects can be achieved, but further investigation of the documentation and experimentation will doubtless be necessary for specific needs (advanced visualization functionality is provided by ggplot2).

For now, let’s discuss some of the most common basic methods.

15.4.1 Scatterplots

The most common plotting function in R is the plot() function. It is a generic function, meaning, it calls various methods according to the type of the object passed which is passed to it. In the simplest case, we can pass in a vector and we get a scatter plot of magnitude vs index.

More generally, we can pass in two vectors and a scatter plot of the points formed by matching coordinates is displayed.

For example, the command plot(c(1,2),c(3,5)) would plot the points \((1,3)\) and \((2,5)\).

Here is a more concrete example showing how to plot the graph of the sine function in the range from \(-\pi\) to \(\pi\).

We can add a title to our plot with the parameter main. Similarly,

xlab and ylab can be used to label the x-axis and y-axis

respectively.

The curve is made up of circular black points. This is the default setting for shape and colour. This can be changed by using the argument type.

It accepts the following strings (with given effect)

p– pointsl– linesb– both points and linesc– empty points joined by lineso– overplotted points and liness– stair stepsh– histogram-like vertical linesn– does not produce any points or lines

Similarly, we can specify the colour using the argument col.

plot(x, sin(x),

main=expression("Graph of the sine function, over [-" * pi * "," * pi * "]"),

ylab="sin(x)",

type="l",

col="blue")

Calling plot() multiple times will have the effect of plotting the current graph on the same window, replacing the previous one.

However, we may sometimes wish to overlay the plots in order to compare the results. This is made possible by the functions lines() and points(), which add lines and points respectively, to the existing plot.

plot(x, sin(x),

main="Overlaying Graphs",

ylab="",

type="l",

col="blue")

lines(x,cos(x), col="red")

legend("topleft",

c("sin(x)","cos(x)"),

fill=c("blue","red")

)

The legend() function allows for the appropriate display in the plot.

15.4.2 Barplots

Barplots can be created in R using the barplot() function.

We can supply a vector or matrix to this function, and it will display a bar chart with bar heights equal to the magnitude of the elements in the vector.

Let us suppose that we have a vector of maximum temperatures for seven days, as follows.

We can make a bar chart out of this data using a simple command.

This function can take on a number of arguments (details are available in the help file, which can be queried by entering ?barplot in the console).

Frequently-used arguments include:

mainto specify the titlexlabandylabto provide labels for the axesnames.argto provide a name for each barcolto define colour, etc.

We can also transpose the plot to have horizontal bars by providing the argument horiz = TRUE, such as in the following:

barplot(max.temp,

main = "Maximum Temperatures in a Week",

xlab = "Degree Celsius",

ylab = "Day",

names.arg = c("Sun", "Mon", "Tue", "Wed", "Thu", "Fri", "Sat"),

col = "darkred",

horiz = TRUE)

At times, we may be interested in displaying the count or magnitude for categories in the data. For instance, consider the following vector of age measurements for 21 first-year college students.

Calling barplot(age) does not provide the required plot.

Indeed, this call plots 21 bars with heights corresponding to students’ age – the display does not provide the frequency count for each age.

The values can be quickly found using the table() function, as shown below.

age

16 17 18 19 20 22 31

1 4 10 3 1 1 1 Plotting this data will produce the required barplot (in the code, the argument densityis used to determine the texture of the fill).

barplot(table(age),

main="Age Count of 21 Students",

xlab="Age",

ylab="Count",

border="red",

col="blue",

density=10

)

The chart respects the relative proportions, but R cannot tell that there should be (empty) categories for age=21 and between 22 and 31.

This can be remedied by first setting new factor levels for the age measurements, and re-running the code.

age=factor(age,levels=c(15:31))

barplot(table(age),

main="Age Count of 21 Students",

xlab="Age",

ylab="Count",

border="red",

col="blue",

density=10

)

15.4.3 Histograms

Histograms display the distribution of a continuous variable by dividing the range of scores into a specified number of bins on the \(x\)-axis and displaying the frequency of scores in each bin on the \(y\)-axis.

We can create histograms in R with hist():

the option

freq=FALSEcreates a plot based on probability densities rather than frequencies;the

breaksoption controls the number of bins: the default produces equally spaced breaks when defining the cells of the histogram.

For illustrative purposes, we will use several of the

variables from the Motor Trend Car Road Tests (mtcars) dataset

provided in the base R installation.

Here are four variations on a histogram.

par(mfrow=c(2,2))

hist(mtcars$mpg)

hist(mtcars$mpg,

breaks=12,

col="red",

xlab="Miles Per Gallon",

main="Colored histogram with 12 bins")

hist(mtcars$mpg,

freq=FALSE,

breaks=12,

col="red",

xlab="Miles Per Gallon",

main="Histogram, rug plot, density curve")

rug(jitter(mtcars$mpg))

lines(density(mtcars$mpg), col="blue", lwd=2)

x <- mtcars$mpg

h<-hist(x,

breaks=12,

col="red",

xlab="Miles Per Gallon",

main="Histogram with normal curve and box")

xfit<-seq(min(x), max(x), length=40)

yfit<-dnorm(xfit, mean=mean(x), sd=sd(x))

yfit <- yfit*diff(h$mids[1:2])*length(x)

lines(xfit, yfit, col="blue", lwd=2)

box()

The first histogram demonstrates the default plot with no specified options: five bins are created, and the default axis labels and titles are printed.

In the second histogram, 12 bins have been specified, as well as a red fill for the bars, and more attractive (enticing?) and informative labels and title.

The third histogram uses the same colours, number of bins, labels, and titles as the previous plot but adds a density curve and rug-plot overlay. The density curve is a kernel density estimate and is described in the next section. It provides a smoother description of the distribution of scores. The call to function lines() overlays this curve in blue (and a width twice the default line thickness); a rug plot is a one-dimensional representation of the actual data values.

The fourth histogram is similar to the second but with a superposed normal curve and a box around the figure. The code for superposing the normal curve comes from a suggestion posted to the R-help mailing list by Peter Dalgaard. The surrounding box is produced by the box() function.

15.4.4 Curves

Given an expression for a function \(y(x)\), we can plot the values of \(y\) for various values of \(x\) in a given range.

This can be accomplished using the R function curve().

We can plot the simple polynomial function \(y=3x^{2}+x\) in the range \(x=[1,10]\) as follows:

curve(3*x^2 + x, from=1, to=10, n=300, xlab="xvalue",

ylab="yvalue", col="blue", lwd=2,

main="Plot of (3x^2 + x)")

The important parameters of the curve() function are:

the first parameter is the mathematical expression of the function to plot, written in the format for writing mathematical operations in or LaTeX;

two numeric parameters (

fromandto) that represent the endpoints of the range of \(x\);the integer

nthat represents the number of equally-spaced values of \(x\) between thefromandtopoints;the other parameters (

xlab,ylab,col,lwd,main) have their usual meaning.

15.4.5 Boxplots

A box-and-whiskers plot describes the distribution of a continuous variable by plotting its five-number summary:

the minimum \(Q_0\);

the lower quartile \(Q_1\) (25th percentile);

the median \(Q_2\) (50th percentile);

the upper quartile \(Q_3\) (75th percentile), and

the maximum \(Q_4\).

The belt of the boxplot is found at \(Q_2\), its box boundaries at \(Q_1\) and \(Q_3\), and its whiskers at \(5/2Q_1-3/2Q_3\) and \(5/2Q_3-3/2Q_1\).

For normally distributed variables, it also displays observations which may be identified as potential outliers (which it to say, values outside the box-and-whiskers range \([5/2Q_1-3/2Q_3,5/2Q_3-3/2Q_1]\)).

For normally distributed variables, it also displays observations which may be identified as potential outliers (which it to say, values outside the box-and-whiskers range \([5/2Q_1-3/2Q_3,5/2Q_3-3/2Q_1]\)).

15.4.6 Other Examples

Let’s take a look at some other basic R plots.

15.4.6.1 Scatterplots

On a scatter plot, the features to study depend on the scale of interest:

- for “big” patterns, look at:

- form and direction

- strength

- for “small” patterns, look at:

- deviations from the pattern

- outliers

We start with the swiss built-in R dataset.

str(swiss) # structure of the swiss dataset

pairs(swiss) # scatter plot matrix for the swiss dataset

'data.frame': 47 obs. of 6 variables:

$ Fertility : num 80.2 83.1 92.5 85.8 76.9 76.1 83.8 92.4 82.4 82.9 ...

$ Agriculture : num 17 45.1 39.7 36.5 43.5 35.3 70.2 67.8 53.3 45.2 ...

$ Examination : int 15 6 5 12 17 9 16 14 12 16 ...

$ Education : int 12 9 5 7 15 7 7 8 7 13 ...

$ Catholic : num 9.96 84.84 93.4 33.77 5.16 ...

$ Infant.Mortality: num 22.2 22.2 20.2 20.3 20.6 26.6 23.6 24.9 21 24.4 ...Let’s focus on one specific pair: Fertility vs. Education.

The same plot can be prettified and made more informative:

# add a title and axis labels

plot(swiss$Fertility, swiss$Education, xlab="Fertility", ylab="Education",

main="Education vs Fertility (by province), Switzerland, 1888",

las=1)

# add the line of best fit (in red)

abline(lm(swiss$Education~swiss$Fertility), col="red", lwd=2.5)

# add the smoothing lowess curve (in blue)

lines(lowess(swiss$Fertility,swiss$Education), col="blue", lwd=2.5)

# add a legend

legend(75,50, c("Best Fit","Lowess"), lty=c(1,1),

lwd=c(2.5,2.5),col=c("red","blue"))

Compare that graph with the one found below:

plot(swiss$Education, xlab="Province", ylab="Education",

main="Education by Province, Switzerland, 1888", las=1)

abline(lm(swiss$Education~row(swiss)[,1]), col="red", lwd=2.5)

lines(swiss$Education)

lines(lowess(row(swiss)[,1],swiss$Education), col="blue", lwd=2.5)

legend(5,52, c("Best Fit","Lowess"), lty=c(1,1),

lwd=c(2.5,2.5),col=c("red","blue"))

Even though R will not balk at producing this graph, it is a nonsense graph. Why, exactly?

The main take-away of this example is that being able to produce a graph doesn’t guarantee that it will be useful or meaningful in any way.

Let’s do some thing similar for the infamous built-in iris dataset.

str(iris) # structure of the dataset

summary(iris) # information on the distributions for each feature

# ?iris # information on the dataset itself'data.frame': 150 obs. of 5 variables:

$ Sepal.Length: num 5.1 4.9 4.7 4.6 5 5.4 4.6 5 4.4 4.9 ...

$ Sepal.Width : num 3.5 3 3.2 3.1 3.6 3.9 3.4 3.4 2.9 3.1 ...

$ Petal.Length: num 1.4 1.4 1.3 1.5 1.4 1.7 1.4 1.5 1.4 1.5 ...

$ Petal.Width : num 0.2 0.2 0.2 0.2 0.2 0.4 0.3 0.2 0.2 0.1 ...

$ Species : Factor w/ 3 levels "setosa","versicolor",..: 1 1 1 1 1 1 1 1 1 1 ...

Sepal.Length Sepal.Width Petal.Length Petal.Width

Min. :4.300 Min. :2.000 Min. :1.000 Min. :0.100

1st Qu.:5.100 1st Qu.:2.800 1st Qu.:1.600 1st Qu.:0.300

Median :5.800 Median :3.000 Median :4.350 Median :1.300

Mean :5.843 Mean :3.057 Mean :3.758 Mean :1.199

3rd Qu.:6.400 3rd Qu.:3.300 3rd Qu.:5.100 3rd Qu.:1.800

Max. :7.900 Max. :4.400 Max. :6.900 Max. :2.500

Species

setosa :50

versicolor:50

virginica :50

The function pairs provides a scatter plot matrix of the dataset; the option lower.panel=NULL removes the lower panels (due to redundancy).

pairs(iris[1:4], main = "Anderson's Iris Data", pch = 21,

lower.panel=NULL, labels=c("SL","SW","PL","PW"),

font.labels=2, cex.labels=4.5)

We can compare the sepal width and length variables in a manner similar to what we did with the swiss dataset.

## Iris 1

plot(iris$Sepal.Length, iris$Sepal.Width, xlab="Sepal Length",

ylab="Sepal Width", main="Sepal Width vs Sepal Length,

Anderson's Iris Dataset", las=1,

bg=c("yellow","black","green")[unclass(iris$Species)])

abline(lm(iris$Sepal.Width~iris$Sepal.Length), col="red", lwd=2.5)

lines(lowess(iris$Sepal.Length,iris$Sepal.Width), col="blue", lwd=2.5)

legend(7,4.35, c("Best Fit","Lowess"), lty=c(1,1),

lwd=c(2.5,2.5),col=c("red","blue"))

There does not seem to be a very strong relationship between these variables. What can we say about sepal length and petal length?

## Iris 2

plot(iris$Sepal.Length, iris$Petal.Length, xlab="Sepal Length",

ylab="Petal Length", main="Sepal Width vs Petal Length,

Anderson's Iris Dataset", las=1)

abline(lm(iris$Petal.Length~iris$Sepal.Length), col="red", lwd=2.5)

lines(lowess(iris$Sepal.Length,iris$Petal.Length), col="blue", lwd=2.5)

legend(7,4.35, c("Best Fit","Lowess"), lty=c(1,1),

lwd=c(2.5,2.5),col=c("red","blue"))

Visually, the relationship is striking: the line seems to have a slope of 1! But notice that the axes are unevenly scaled, and have been cutoff away from the origin. The following graph gives a better idea of the situation.

## Iris 3

plot(iris$Sepal.Length, iris$Petal.Length, xlab="Sepal Length",

ylab="Petal Length", main="Sepal Width vs Petal Length,

Anderson's Iris Dataset", xlim=c(0,8), ylim=c(0,8), las=1)

abline(lm(iris$Petal.Length~iris$Sepal.Length), col="red", lwd=2.5)

lines(lowess(iris$Sepal.Length,iris$Petal.Length), col="blue", lwd=2.5)

legend(2,7, c("Best Fit","Lowess"), lty=c(1,1),

lwd=c(2.5,2.5),col=c("red","blue"))

A relationship is still present, but it is affine, not linear as could have been guessed by naively looking at the original graph.

Colour can also be used to highlight various data elements.

# colour each observation differently according to its species

plot(iris$Sepal.Length, iris$Sepal.Width, pch=21,

bg=c("red","green3","blue")[unclass(iris$Species)],

main="Anderson's Iris Data -- Sepal Length vs. Sepal Width", xlim=)

This can be done on all scatterplots concurrently using pairs.

# scatterplot matrix with species membership

pairs(iris[1:4], main = "Anderson's Iris Data", pch = 21,

bg = c("red", "green3", "blue")[unclass(iris$Species)],

lower.panel=NULL, labels=c("SL","SW","PL","PW"),

font.labels=2, cex.labels=4.5)

15.4.6.2 Histograms and Bar Charts

In histograms or frequency charts, users should stay on the lookout for bin size effects. For instance, what does the distribution of the Education variable in the swiss dataset look like?

The distribution pattern is distintcly different with 10 and 20 bins. Don’t get too carried away, however: too many bins may end up masking trends if the dataset isn’t large enough.

We can also look for best fits for various parametric distributions:

#Swiss 1 - default number of bine

hist(swiss$Education, freq=FALSE, xlab="Education",

main="Education Distribution, by Province, Switzerland, 1888",

col="firebrick1", ylim=c(0,0.15))

curve(dgamma(x,shape=mean(swiss$Education)),add=TRUE, col="darkblue",

lwd=4) # fit a gamma distribution

curve(dexp(x,rate=1/mean(swiss$Education)),add=TRUE, col="black",

lwd=4) # fit an exponential distribution

curve(dnorm(x,mean=mean(swiss$Education),sd=sd(swiss$Education)),

add=TRUE, col="green3", lwd=4) # fit a normal distribution

legend(40,0.05, c("Gamma","Exponential","Normal"), lty=c(1,1),

lwd=c(4,4),col=c("darkblue","black", "green3"))

# Swiss 2 - 10 bins

hist(swiss$Education, breaks=10, freq=FALSE,xlab="Education",main="Education

Distribution, by Province, Switzerland, 1888", col="firebrick1",

ylim=c(0,0.15))

curve(dgamma(x,shape=mean(swiss$Education)),add=TRUE,

col="darkblue", lwd=4)

curve(dexp(x,rate=1/mean(swiss$Education)),add=TRUE,

col="black", lwd=4)

curve(dnorm(x,mean=mean(swiss$Education),sd=sd(swiss$Education)),

add=TRUE, col="green3", lwd=4)

legend(40,0.05, c("Gamma","Exponential","Normal"), lty=c(1,1),

lwd=c(4,4),col=c("darkblue","black", "green3"))

# Swiss 3 - 20 bins

hist(swiss$Education, breaks=20, freq=FALSE, xlab="Education",

main="Education Distribution, by Province, Switzerland, 1888",

col="firebrick1", ylim=c(0,0.15))

curve(dgamma(x,shape=mean(swiss$Education)),add=TRUE,

col="darkblue", lwd=4)

curve(dexp(x,rate=1/mean(swiss$Education)),add=TRUE,

col="black", lwd=4)

curve(dnorm(x,mean=mean(swiss$Education),sd=sd(swiss$Education)), add=TRUE,

col="green3", lwd=4)

legend(40,0.05, c("Gamma","Exponential","Normal"), lty=c(1,1),

lwd=c(4,4),col=c("darkblue","black", "green3"))

With a small number of bins, the exponential distribution seems like a good fit, visually. With a larger number of bins, neither of the three families seems particularly well-advised.

And now, can you figure out what is happening with these visualizations of the iris dataset?

hist(iris$Sepal.Length, freq=FALSE, xlab="Sepal.Length",

main="Sepal.Length Distribution", col="firebrick1", ylim=c(0,0.15))

# Another feature

hist(iris$Sepal.Width, freq=FALSE, xlab="Sepal.Width",

main="Sepal.Width Distribution", col="firebrick1", ylim=c(0,0.15))

Histograms (1D data representations) can be combined with scatterplots (2D data representations) to provide marginal information.

# create our own function (see R-blogger's scatterhist function)

scatterhist = function(x, y, xlab="", ylab=""){

zones=matrix(c(2,0,1,3), ncol=2, byrow=TRUE)

layout(zones, widths=c(4/5,1/5), heights=c(1/5,4/5))

xhist = hist(x, plot=FALSE)

yhist = hist(y, plot=FALSE)

top = max(c(xhist$counts, yhist$counts))

par(mar=c(3,3,1,1))

plot(x,y)

par(mar=c(0,3,1,1))

barplot(xhist$counts, axes=FALSE, ylim=c(0, top), space=0)

par(mar=c(3,0,1,1))

barplot(yhist$counts, axes=FALSE, xlim=c(0, top), space=0, horiz=TRUE)

par(oma=c(3,3,0,0))

mtext(xlab, side=1, line=1, outer=TRUE, adj=0,

at=.8 * (mean(x) - min(x))/(max(x)-min(x)))

mtext(ylab, side=2, line=1, outer=TRUE, adj=0,

at=(.8 * (mean(y) - min(y))/(max(y) - min(y))))

}

ds = iris

with(ds, scatterhist(iris$Sepal.Length, iris$Sepal.Width,

xlab="Sepal.Length", ylab="Sepal.Width"))

15.4.6.3 Bubble Charts

Bubble charts are a neat way to show at least 3 variables on the same 2D display. The location of the bubbles’ centre takes care of 2 variables: the size, colour, and shape of the bubbles can also be used to represent different data elements.

For this first example, we’ll take a look at some Statistics Canada 2011 demographic data regarding Canada’s census metropolitan areas (CMA) and census agglomerations (CA).

can.2011=read.csv("data/Canada2011.csv", head=TRUE) # import the data

head(can.2011) # take a look at the first 6 entries

str(can.2011) # take a look at the structure of the data

summary(can.2011[,3:12], maxsum=13) # provide a distribution information

# for features 3 to 12, allowing for up to 13 factors in the categorical

# distributions Geographic.code Geographic.name Province Region Type pop_2011

1 1 St. John's NL Atlantic CMA 196966

2 5 Bay Roberts NL Atlantic CA 10871

3 10 Grand Falls-Windsor NL Atlantic CA 13725

4 15 Corner Brook NL Atlantic CA 27202

5 105 Charlottetown PE Atlantic CA 64487

6 110 Summerside PE Atlantic CA 16488

log_pop_2011 pop_rank_2011 priv_dwell_2011 occ_private_dwell_2011

1 5.294391 20 84542 78960

2 4.036269 147 4601 4218

3 4.137512 128 6134 5723

4 4.434601 94 11697 11110

5 4.809472 52 28864 26192

6 4.217168 120 7323 6941

occ_rate_2011 med_total_income_2011

1 0.9339736 33420

2 0.9167572 24700

3 0.9329964 26920

4 0.9498162 27430

5 0.9074279 30110

6 0.9478356 27250

'data.frame': 147 obs. of 12 variables:

$ Geographic.code : int 1 5 10 15 105 110 205 210 215 220 ...

$ Geographic.name : chr "St. John's" "Bay Roberts" "Grand Falls-Windsor" "Corner Brook" ...

$ Province : chr "NL" "NL" "NL" "NL" ...

$ Region : chr "Atlantic" "Atlantic" "Atlantic" "Atlantic" ...

$ Type : chr "CMA" "CA" "CA" "CA" ...

$ pop_2011 : int 196966 10871 13725 27202 64487 16488 390328 26359 45888 35809 ...

$ log_pop_2011 : num 5.29 4.04 4.14 4.43 4.81 ...

$ pop_rank_2011 : int 20 147 128 94 52 120 13 97 67 78 ...

$ priv_dwell_2011 : int 84542 4601 6134 11697 28864 7323 177295 11941 21708 16788 ...

$ occ_private_dwell_2011: int 78960 4218 5723 11110 26192 6941 165153 11123 19492 15256 ...

$ occ_rate_2011 : num 0.934 0.917 0.933 0.95 0.907 ...

$ med_total_income_2011 : int 33420 24700 26920 27430 30110 27250 33400 25500 26710 26540 ...

Province Region Type pop_2011

Length:147 Length:147 Length:147 Min. : 10871

Class :character Class :character Class :character 1st Qu.: 18429

Mode :character Mode :character Mode :character Median : 40077

Mean : 186632

3rd Qu.: 98388

Max. :5583064

log_pop_2011 pop_rank_2011 priv_dwell_2011 occ_private_dwell_2011

Min. :4.036 Min. : 1.0 Min. : 4601 Min. : 4218

1st Qu.:4.265 1st Qu.: 37.5 1st Qu.: 8292 1st Qu.: 7701

Median :4.603 Median : 74.0 Median : 17428 Median : 16709

Mean :4.720 Mean : 74.0 Mean : 78691 Mean : 74130

3rd Qu.:4.993 3rd Qu.:110.5 3rd Qu.: 44674 3rd Qu.: 41142

Max. :6.747 Max. :147.0 Max. :2079459 Max. :1989705

occ_rate_2011 med_total_income_2011

Min. :0.6492 Min. :22980

1st Qu.:0.9173 1st Qu.:27630

Median :0.9348 Median :29910

Mean :0.9276 Mean :31311

3rd Qu.:0.9475 3rd Qu.:33255

Max. :0.9723 Max. :73030

NA's :5 Before jumping aboard the bubble chart train, let’s see what the dataset looks like in the scatterplot framework for 5 of the variables, grouped along regions.

It’s … underwhelming, if that’s a word.49. There are some interesting tidbits, but nothing jumps as being meaningful beyond a first pass.

Can anything else be derived using bubble charts? We use median income as the radius for the bubbles, and focus on occupancy rates and population.

radius.med.income.2011<-sqrt(can.2011$med_total_income_2011/pi)

symbols(can.2011$log_pop_2011, can.2011$occ_rate_2011,

circles=radius.med.income.2011, inches=0.45,

fg="white", bg="red", xlab="Population (log)",

ylab="Occupancy Rate")

title("Total Median Income, by CMA and CA (2011)")

Clearly, an increase in population seems to be associated with (and not necessarily a cause of) a rise in occupancy rates. But the median income seems to have very little correlation with either of the other two variables. Perhaps such a correlation is hidden by the default unit used to draw the bubbles? Let’s shrink it from 0.45 to 0.25 and see if anything pops out.

symbols(can.2011$log_pop_2011, can.2011$occ_rate_2011, circles=radius.med.income.2011,

inches=0.25, fg="white", bg="red",

xlab="Population (log)", ylab="Occupancy Rate")

title("Total Median Income, by CMA and CA (2011)")

Not much to it. But surely there would be a relationship in these quantities if we included the CMA/CA’s region?

symbols(can.2011$log_pop_2011, can.2011$occ_rate_2011, circles=radius.med.income.2011,

inches=0.15, fg="white",

bg=c("red","blue","black","green","yellow","violet")[factor(

can.2011$Region)],

xlab="Population (log)", ylab="Occupancy Rate")

title("Total Median Income, by CMA and CA (2011) - colours represent

oeographical regions.")

What do you think?

Perhaps the CA distort the full picture (given that they are small and more numerous).

Let’s analyze the subset of CMAs instead.

can.2011.CMA=read.csv("data/Canada2011_CMA.csv", head=TRUE) # import the data

str(can.2011.CMA) #dataset structure (notice the number of observations)

summary(can.2011.CMA[,3:12], maxsum=13) # feature by feature distribution'data.frame': 33 obs. of 15 variables:

$ Geographic.code : int 1 205 305 310 408 421 433 442 462 505 ...

$ Geographic.name : chr "St. John's" "Halifax" "Moncton" "Saint John" ...

$ Province : chr "NL" "NS" "NB" "NB" ...

$ Region : chr "Atlantic" "Atlantic" "Atlantic" "Atlantic" ...

$ Type : chr "CMA" "CMA" "CMA" "CMA" ...

$ pop_2011 : int 196966 390328 138644 127761 157790 765706 201890 151773 3824221 1236324 ...

$ log_pop_2011 : num 5.29 5.59 5.14 5.11 5.2 ...

$ fem_pop_2011 : int 103587 204579 70901 65834 79770 394935 104026 78105 1971520 647083 ...

$ prop_fem_2011 : num 52.6 52.4 51.1 51.5 50.6 ...

$ pop_rank_2011 : int 20 13 29 31 26 7 19 27 2 4 ...

$ priv_dwell_2011 : int 84542 177295 62403 56775 73766 361447 99913 74837 1696210 526627 ...

$ occ_private_dwell_2011: int 78960 165153 58294 52281 69507 345892 91099 70138 1613260 498636 ...

$ occ_rate_2011 : num 0.934 0.932 0.934 0.921 0.942 ...

$ med_unemployment_2011 : num 6.6 6.2 7.45 6.75 7.8 5.15 6.4 8.9 8.1 6.3 ...

$ med_total_income_2011 : int 33420 33400 30690 29910 29560 33760 27620 27050 28870 39170 ...

Province Region Type pop_2011

Length:33 Length:33 Length:33 Min. : 118975

Class :character Class :character Class :character 1st Qu.: 159561

Mode :character Mode :character Mode :character Median : 260600

Mean : 700710

3rd Qu.: 721053

Max. :5583064

log_pop_2011 fem_pop_2011 prop_fem_2011 pop_rank_2011

Min. :5.075 Min. : 63260 Min. :50.55 Min. : 1

1st Qu.:5.203 1st Qu.: 83243 1st Qu.:51.55 1st Qu.: 9

Median :5.416 Median : 135561 Median :52.17 Median :17

Mean :5.553 Mean : 365093 Mean :52.05 Mean :17

3rd Qu.:5.858 3rd Qu.: 378690 3rd Qu.:52.41 3rd Qu.:25

Max. :6.747 Max. :2947971 Max. :53.17 Max. :33

priv_dwell_2011 occ_private_dwell_2011

Min. : 53730 Min. : 48848

1st Qu.: 72817 1st Qu.: 67767

Median : 110314 Median : 104237

Mean : 291519 Mean : 275587

3rd Qu.: 294150 3rd Qu.: 282186

Max. :2079459 Max. :1989705 We can use the median income for the bubbles radius again, but this time we’ll look at population and unemployment.

radius.med.income.2011.CMA<-sqrt(can.2011.CMA$med_total_income_2011/pi)

symbols(can.2011.CMA$log_pop_2011, can.2011.CMA$med_unemployment_2011,

circles=radius.med.income.2011.CMA, inches=0.25, fg="white",

bg=c("red","blue","gray","green","yellow")[factor(can.2011.CMA$Region)],

ylab="Population (log)", xlab="Unemployment Rate")

title("Total Median Income, by CMA (2011)")

text(can.2011.CMA$log_pop_2011, can.2011.CMA$med_unemployment_2011,

can.2011.CMA$Geographic.code, cex=0.5)

Part of the problem is that median income seems to be roughly uniform among CMAs. What if we used rank statistics instead? Switch the radius to population rank, say?

radius.pop.rank.2011.CMA<-sqrt(can.2011.CMA$pop_rank_2011/pi)

symbols(can.2011.CMA$med_total_income_2011, can.2011.CMA$med_unemployment_2011,

circles=radius.pop.rank.2011.CMA, inches=0.25, fg="white",

bg=c("red","blue","gray","green","yellow")[factor(can.2011.CMA$Region)],

ylab="Median Income", xlab="Unemployment Rate")

title("Population Rank, by CMA and CA (2011)")

text(can.2011.CMA$med_total_income_2011, can.2011.CMA$med_unemployment_2011,

can.2011.CMA$Geographic.code, cex=0.5)

There’s a bit more structure in there, isn’t there? Can you figure out to what regions the colours correspond? (Hint: look at the first digit of the bubble labels, and the frequency of the colours).

15.4.7 Exercises

Consider the following Australian population figures, by state (in 1000s):

| Year | NSW | Vic. | Qld | SA | WA | Tas. | NT | ACT | Aust. |

| 1917 | 1904 | 1409 | 683 | 440 | 306 | 193 | 5 | 3 | 4941 |

| 1927 | 2402 | 1727 | 873 | 565 | 392 | 211 | 4 | 8 | 6182 |

| 1937 | 2693 | 1853 | 993 | 589 | 457 | 233 | 6 | 11 | 6836 |

| 1947 | 2985 | 2055 | 1106 | 646 | 502 | 257 | 11 | 17 | 7579 |

| 1957 | 3625 | 2656 | 1413 | 873 | 688 | 326 | 21 | 38 | 9640 |

| 1967 | 4295 | 3274 | 1700 | 1110 | 879 | 375 | 62 | 103 | 11799 |

| 1977 | 5002 | 3837 | 2130 | 1286 | 1204 | 415 | 104 | 214 | 14192 |

| 1987 | 5617 | 4210 | 2675 | 1393 | 1496 | 449 | 158 | 265 | 16264 |

| 1997 | 6274 | 4605 | 3401 | 1480 | 1798 | 474 | 187 | 310 | 18532 |

| 2007 | 6889 | 5205 | 4182 | 1585 | 2106 | 493 | 215 | 340 | 21017 |

| 2017 | 7861 | 6324 | 4928 | 1723 | 2580 | 521 | 246 | 410 | 24599 |

Graph the New South Wales (NSW) population with all defaults using

plot(). Redo the graph by adding a title, a line to connect the points, and some colour.Compare the population of New South Wales (NSW) and the Australian Capital Territory (ACT) by using the functions

plot()andlines(), then add a legend to appropriately display your graph.Use a bar chart to graph the population of Queensland (QLD), add an appropriate title to your graph, and display the years from 1917 to 2017 on the appropriate bars.

Create a light blue histogram for the population of South Australia (SA).

15.5 Introduction to Dashboards

Dashboards are a helpful way to communicate and report data. They are versatile in that they support multiple types of reporting. Dashboards are predominantly used in business intelligence contexts, but they are being used more frequently to communicate data and visualize analysis for non-business services also. Popular dashboarding platforms include Tableau, and Power BI, although there are other options, such as Excel, R + Shiny, Geckoboard, Matillion, JavaScript, etc.

These technologies aim to make creating data reports as simple and user-friendly as possible. They are intuitive and powerful; creating a dashboard with these programs is quite easy, and there are tons of how-to guides available online [115]–[117].

In spite of their ease of use, however, dashboards suffer from the same limitations as other forms of data communication, to wit: how can results be conveyed effectively and how can an insightful data story be relayed to the desired audience? Putting together a “good” dashboard is more complicated then simply learning to use a dashboarding application [118].

15.5.1 Dashboard Fundamentals

Effective dashboarding requires that the designers answer questions about the planned-for display:

who is the target audience?

what value does the dashboard bring?

what type of dashboard is being created?

Answering these questions can guide and inform the visualization choices that go into creating dashboards.

Selecting the target audience helps inform data decisions that meet the needs and abilities of the audience. When thinking of an audience, consider their role (what decisions do they make?), their workflow (will they use the dashboard on a daily basis or only once?), and data expertise level (what is their level of data understanding?).

When creating a dashboard, its important to understand (and keep in mind) why one is needed in the first place – does it find value in:

helping managers make decisions?

educating people?

setting goals/expectations?

evaluating and communicating progress?

Dashboards can be used to communicate numerous concepts, but not all of them can necessarily be displayed in the same space and at the same time so it becomes important to know where to direct the focus to meet individual dashboards goals. Dashboard decisions should also be informed by the scope, the time horizon, the required level of detail, and the dashboard’s point-of-view.In general,

the scope of the dashboard could be either broad or specific – an example of a broad score would be displaying information about an entire organization, whereas a specific scope could focus on a specific product or process;

the time horizon is important for data decisions – it could be either historical, real-time, snapshot, or predictive:

historical dashboards look at past data to evaluate previous trends;

real-time dashboards refresh and monitor activity as it happens;

snapshot dashboards show data from a single time point, and

predictive dashboards use analytical results and trend-tracking to predict future performances;

the level of detail in a dashboard can either be high level or drill-able – high level dashboards provide only the most critical numbers and data; drill-able dashboards provide the ability to “drill down” into the data in order to gain more context.

the dashboard point of view can be prescriptive or exploratory – a prescriptive dashboard prescribes a solution to an identified problem by using the data as proof; an exploratory dashboard uses data to explore the data and find possible issues to be tackled.

The foundation of good dashboards comes down to deciding what information is most important to the audience in the context of interest; such dashboards should have a core theme based on either a problem to solve or a data story to tell, while removing extraneous information from the process.

15.5.2 Dashboard Structure

The dashboard structure is informed by four main considerations:

form – format in which the dashboard is delivered;

layout – physical look of the dashboard

design principles – fundamental objectives to guide design

functionality – capabilities of the dashboard

Dashboards can be presented on paper, in a slide deck, in an online application, over email (messaging), on a large screen, on a mobile phone screen, etc.

Selecting a format that suits the dashboard needs is a necessity; various formats might need to be tried before arriving at a final format decision.

The structure of the dashboard itself is important because visuals that tell similar stories (or different aspects of the same story) should be kept close together, as physical proximity of interacting components is expected from the viewers and consumers. Poor structural choices can lead to important dashboard elements being undervalued.

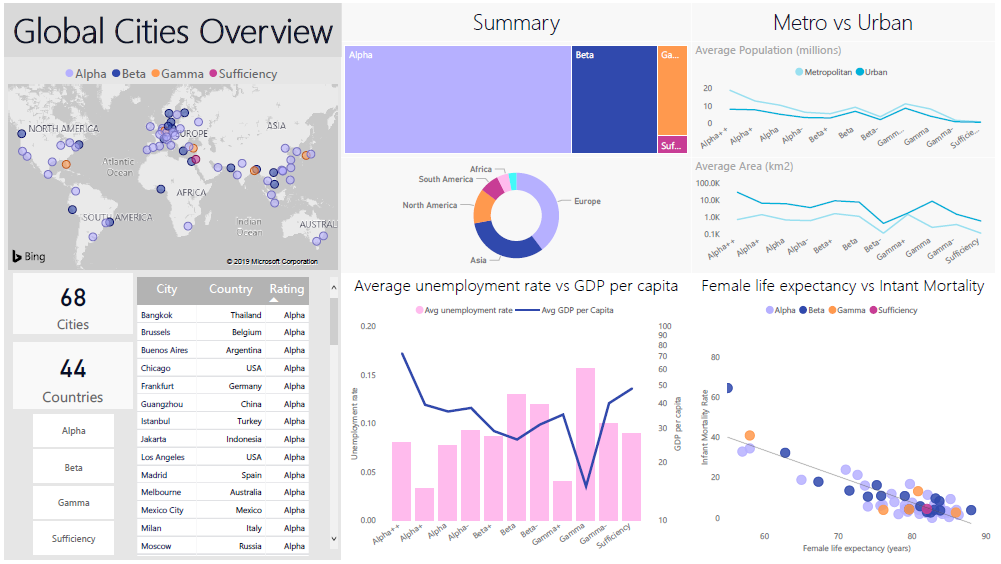

The dashboard shown in Figure 15.22 provides an example of group visuals that tell similar stories (the corresponding Power BI file can be found at ???).

Figure 15.22: An exploratory dashboard showing metrics about various cities ranked on the Global Cities Index. The dashboard goal is to allow a general audience to compare and contrast the various globally ranked cities – statistics that contribute to a ‘higher’ ranking immediately pop out. Viewers can also very easily make comparisons between high- and low-ranking cities. The background is kept neutral with a fair amount of blank space in order to keep the dashboard open and easy to read. The colours complement each other (via the use of a colour theme picker in Power BI) and are clearly indicative of ratings rather than comparative statistics (personal file).

Knowing which visual displays to use with the “right” data helps dashboards achieve structural integrity:

distributions can be displayed with bar charts and scatter plots;

compositions with pie charts, bar charts, and tree maps;

comparisons use bubble charts and bullet plots, and

trends are presented with line charts and area plots.

An interesting feature of dashboard structure is that it can be used to guide viewer attention; critical dashboard elements can be highlighted with the help of visual cues such as use of icons, colours, and fonts. Using filters is a good way to allow dashboard viewers of a dashboard to customize the dashboard scope (to some extent) and to investigate specific data categories more closely.

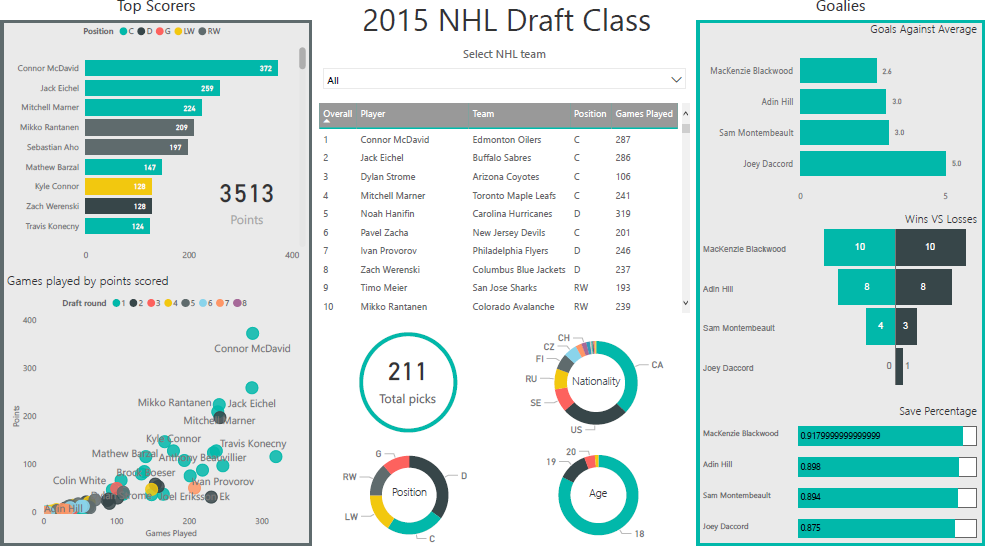

The dashboard shown in Figure 15.23 provides an example of a dashboard that makes use of an interactive filter to analyze data from specific categories.

Figure 15.23: An exploratory dashboard showing information about the National Hockey League draft class of 2015. The dashboard displays professional statistics (as of August 2019) of hockey players drafted into the NHL in 2015, as well as their overall draft position. This dashboard allows casual hockey fans to evaluate the performance of players drafted in 2015. It provides demographic information to give context to possible market deficiencies during this draft year (i.e. defence players were drafted more frequently than any other position). This dashboard is designed to be interactive; the filter tool at the top allows dashboard viewers to drill-down on specific teams (personal file).

15.5.3 Dashboard Design

An understanding of design improves dashboards; dissonant designs typically make for poor data communication. Design principles are discussed in [83], [104], [106], [109], [119], [120]. For dashboards, the crucial principles relate to the use of grids, white space, colour, and visuals.

When laying out a dashboard, gridding helps direct viewer attention and makes the space easier to parse; note, in Figure 15.22, how the various visuals are aligned in a grid format to lay the data out in a clean, readable manner.

In order to help viewers avoid becoming overwhelmed by clutter or information overload, consider leaving a enough blank space around and within the various charts; note, in Figure 15.23, that while the dashboard displays a lot of information, there is a lot of blank/white space between the various visuals, which provides viewers with space to breathe, so to speak. In general, clutter shuts down the communication process (see Figure 15.24 for two impressive examples of data communication breakdown).

Colour provides meaning to data visualizations – bright colours, for instance, should be used as alarm indicators as they immediately draw the viewer’s attention. Colour themes create cohesiveness, which improves the overall readability of a dashboard. There are no perfect dashboards – no collection of charts will ever suit everyone who encounters it.

That being said, dashboards that are elegant (as well as truthful and functional) will deliver a bigger bang for their buck [106], [107]. In the same vein, keep in mind that all dashboards are by necessity incomplete. A good dashboards may still lead to dead ends, but it should allow its users to ask: “Why? What is the root cause of the problem?”

Finally, designers and viewers alike must remember that a dashboard can only be as good as the data it uses; a dashboard with badly processed or unrepresentative data, or which is showing the results of poor analyses, cannot be an effective communication tool, independently of design.

15.5.4 Examples

Dashboards are used in varied contexts, such as:

interactive displays that allows people to explore motor insurance claims by city, province, driver age, etc.;

a PDF file showing key audit metrics that gets e-mailed to a Department’s DG on a weekly basis;

a wall-mounted screen that shows call centre statistics in real-time;

a mobile app that allows hospital administrators to review wait times on an hourly- and daily-basis for the current year and the previous year; etc.

15.5.4.1 The Ugly

While the previous dashboards all have some strong elements, it is a little bit harder to be generous for the two examples provided in Figure 15.24. Is it easy to figure out, at a glance, who their audience is meant to be? What are their strengths (do they have any)? What are their limitations? How could they be improved?

The first of these is simply “un-glanceable” and the overuse of colour makes it unpleasant to look at; the second one features 3D visualizations (rarely a good idea), distracting borders and background, lack of filtered data, insufficient labels and context, among others.

15.5.4.2 Golden Rules

In a (since-deleted) blog article, N. Smith posted his 6 Golden Rules:

consider the audience (who are you trying to inform?does the DG really need to know that the servers are operating at 88% capacity?);

select the right type of dashboard (operational, strategic/executive, analytical);

group data logically, use space wisely (split functional areas: product, sales/marketing, finance, people, etc.);

make the data relevant to the audience (scope and reach of data, different dashboards for different departments, etc.);

avoid cluttering the dashboard (present the most important metrics only), and

refresh your data at the right frequency (real-time, daily, weekly, monthly, etc.).

With dashboards, as with data analysis and data visualization in general, there is no substitute for practice: the best way to become a proficient builder of dashboards is to … well, to go out and build dashboards, try things out, and, frequently, to stumble and learn from the mistakes.

A more complete (and slightly different) take on dashboarding and storytelling with data is provided in [83].

15.6 ggplot2 Visualizations in R

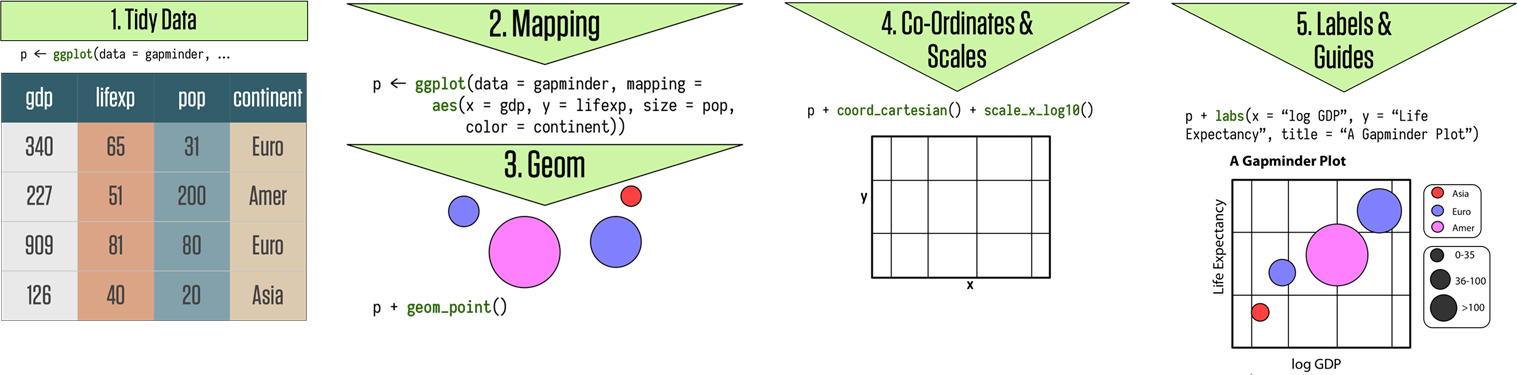

While R has become one of the world’s leading languages for statistical and data analysis, its plots are rarely of high-enough quality for publication. Enter Hadley Wickam’s ggplot2, an aesthetically pleasing and logical approach to data visualization based on the grammar of graphics. In this section, we introduce ggplot2’s basic elements, and present some examples illustrating how it is used in practice.

15.6.1 Basics of ggplot2’s Grammar

Four graphical systems are frequently used with R:

The

basegraphics system, written by Ross Ihaka, is included in everyRinstallation (the graphs produced in the section Basic Visualizations inRrely onbasegraphics functions).The

gridgraphics system, written by Paul Murrell in 2011, is implemented through thegridpackage, which offers a lower-level alternative to the standard graphics system. The user can create arbitrary rectangular regions on graphics devices, define coordinate systems for each region, and use a rich set of drawing primitives to control the arrangement and appearance of graphic elements.This flexibility makes grid a valuable tool for software developers. But the grid package doesn’t provide functions for producing statistical graphics or complete plots. As a result, it is rarely used directly by data analysts and won’t be discussed further (see Dr. Murrell’s Grid website).

The

latticepackage, written by Deepayan Sarkar in 2008, implements trellis graphs, as outlined by Cleveland (1985, 1993). Basically, trellis graphs display the distribution of a variable or the relationship between variables, separately for each level of one or more other variables. Built using thegridpackage, thelatticepackage has grown beyond Cleveland’s original approach to visualizing multivariate data and now provides a comprehensive alternative system for creating statistical graphics inR.Finally, the